Posted by Jay Ji, Senior Product Manager, Google PI; Christian Frueh, Software Engineer, Google Research and Pedro Vergani, Staff Designer, Insight UX


A customizable AI-powered character template that demonstrates the power of LLMs to create interactive experiences with depth

Google’s Partner Innovation team has developed a series of Generative AI templates to showcase how combining Large Language Models with existing Google APIs and technologies can solve specific industry use cases.

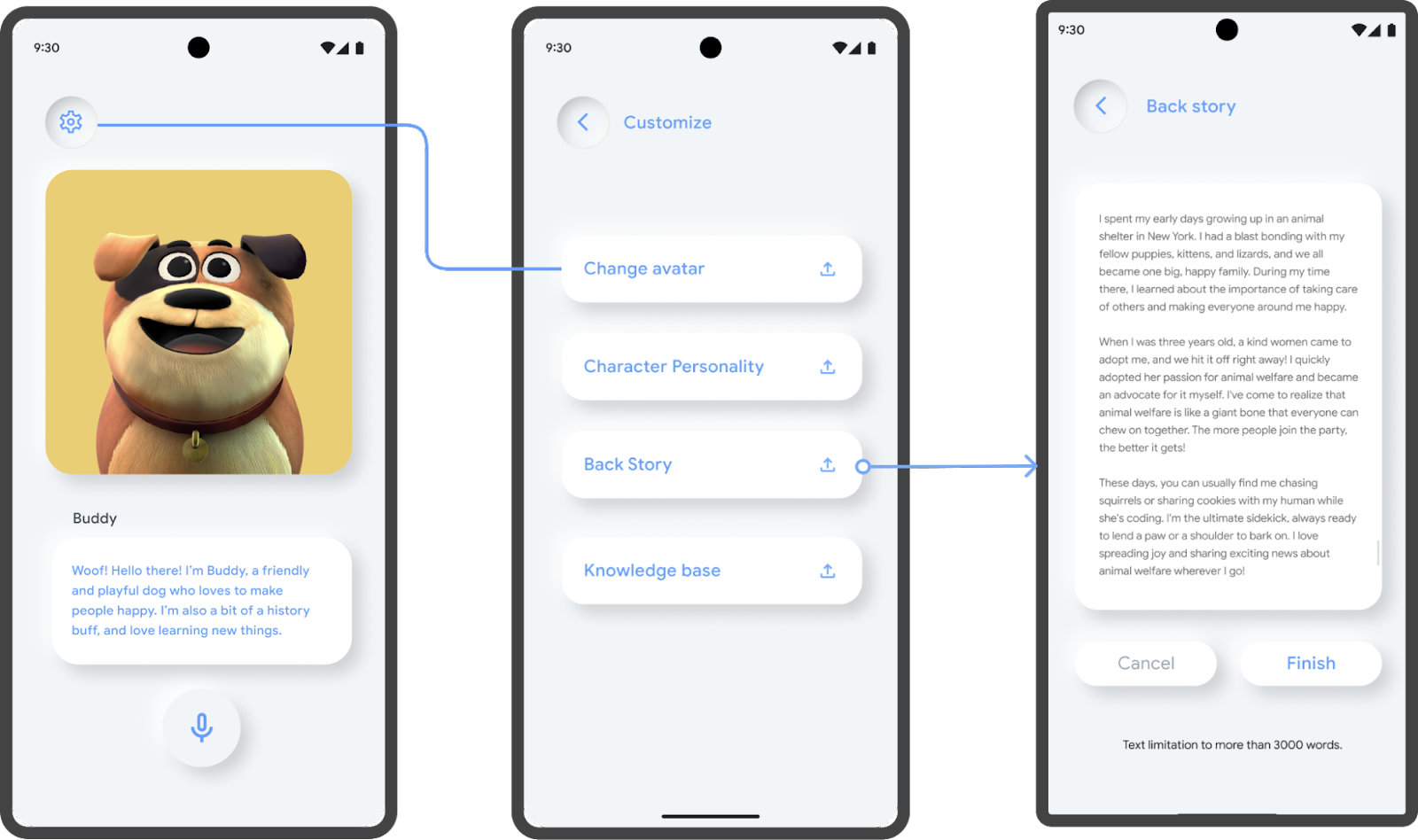

Talking Character is a customizable 3D avatar builder that allows developers to bring an animated character to life with Generative AI. Both developers and users can configure the avatar’s personality, backstory and knowledge base, and thus create a specialized expert with a unique perspective on any given topic. Users can then interact with it through either text or voice conversation.

As one example, we have defined a base character model, Buddy. He’s a friendly dog whom we have given a backstory, personality and knowledge base so that users can converse with him about typical dog life experiences. We also provide an example of how the personality and backstory can be changed so that the character assumes the persona of a reliable insurance agent, or anything else for that matter.

Our code template is intended to serve two main goals:

First, provide developers and users with a test interface for experimenting with prompt engineering for character development, and with layering specific datasets on top of the PaLM API to create unique experiences.

Second, showcase how Generative AI interactions can be enhanced beyond simple text or chat-led experiences. By leveraging cloud services such as speech-to-text and text-to-speech, and machine learning models to animate the character, developers can create a vastly more natural experience for users.

Potential use cases for this type of technology are diverse. They include interactive creative tools for developing characters and narratives in gaming or storytelling; tech support, even for complex systems or processes; customer service tailored to specific products or services; debate practice, language learning, or subject-specific education; or simply bringing brand assets to life with a voice and the ability to interact.

Technical Implementation

Interactions

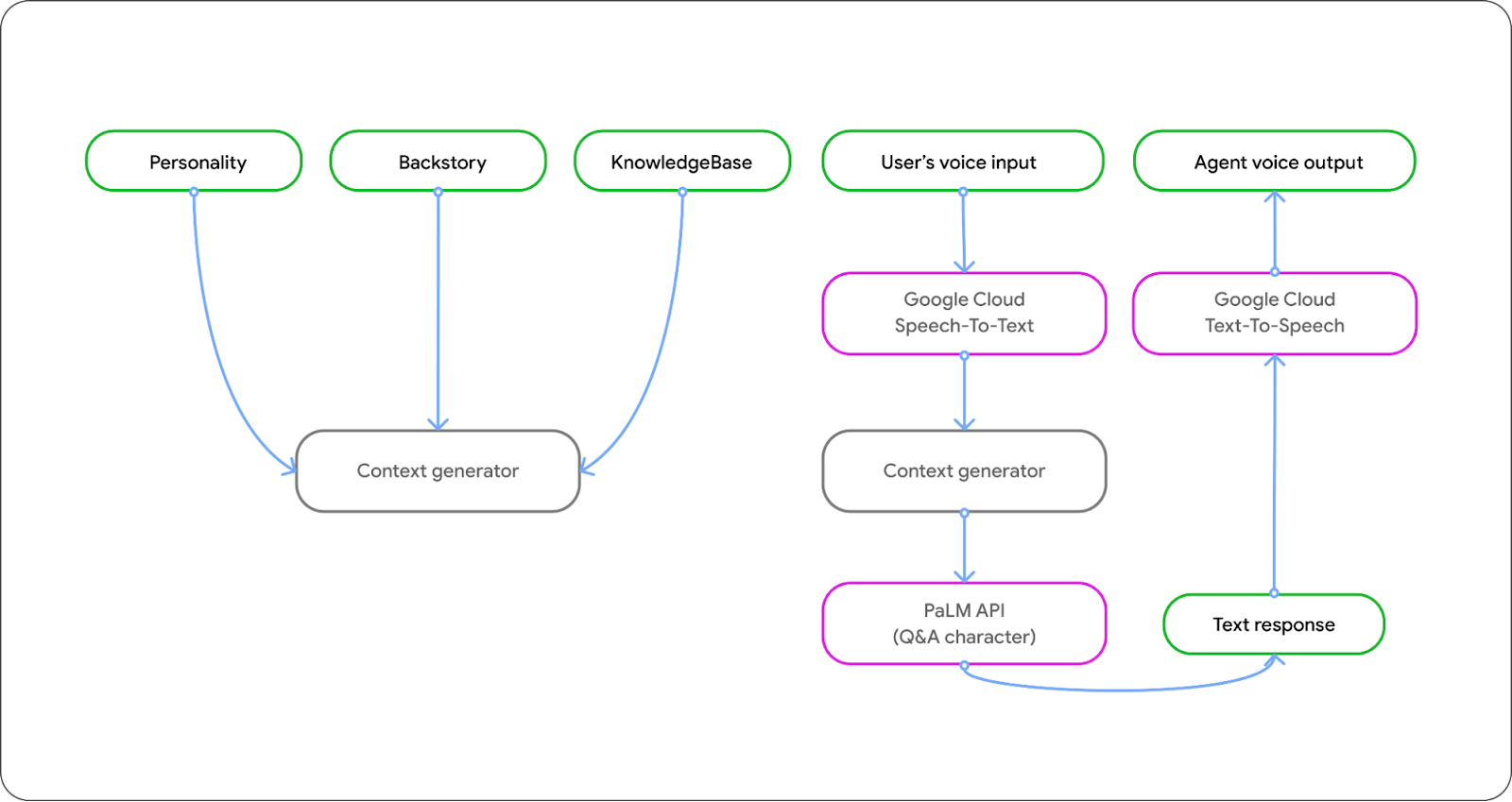

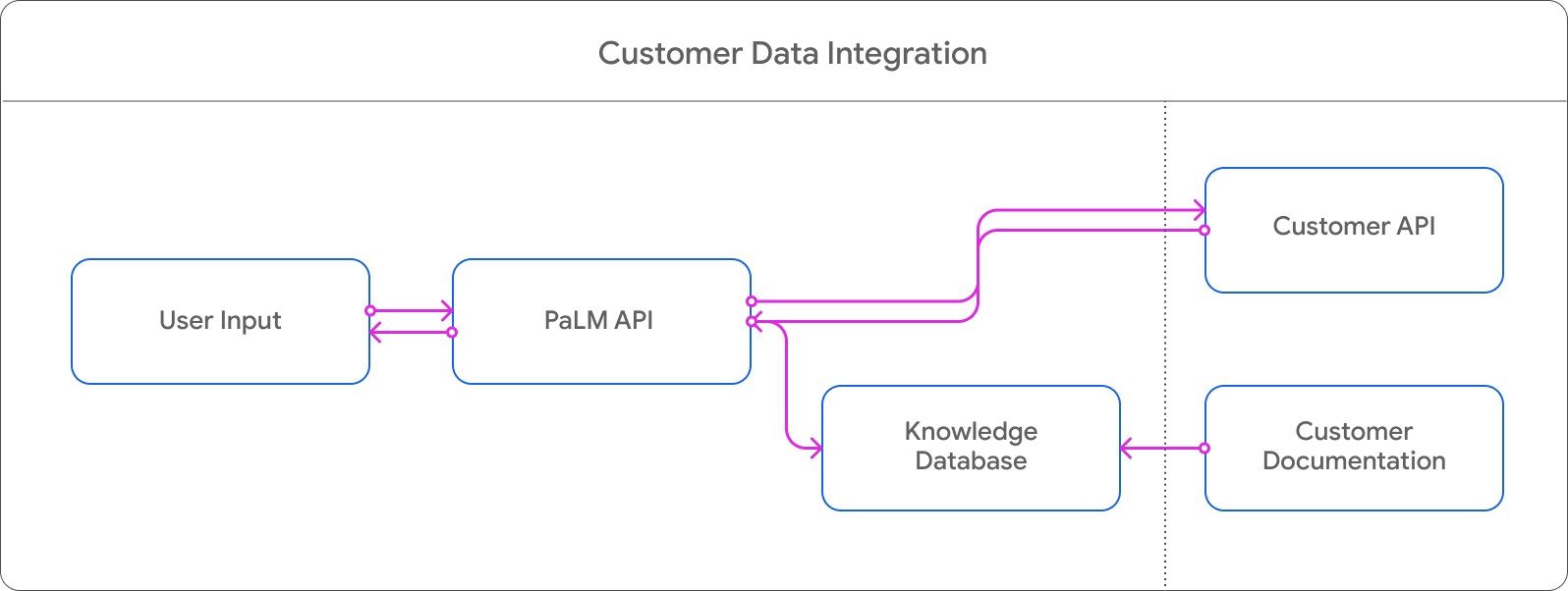

We use several separate technology components to enable a 3D avatar to have a natural conversation with users. First, we use Google's speech-to-text service to convert speech inputs to text, which is then fed into the PaLM API. We then use text-to-speech to generate a human-sounding voice for the language model's response.
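The turn-taking flow described above can be sketched as a small orchestration layer. This is a minimal sketch, not the template's code. The three callables stand in for the speech-to-text service, the PaLM API, and the text-to-speech service. Their signatures, and the `ConversationPipeline` name, are hypothetical. Injecting them as plain functions keeps the flow testable with stubs, and typed chat simply skips the speech-to-text step.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical signatures standing in for real service clients.
SpeechToText = Callable[[bytes], str]        # audio -> transcript
TextGenerator = Callable[[str, str], str]    # (context, user message) -> reply
TextToSpeech = Callable[[str], bytes]        # reply text -> synthesized audio


@dataclass
class ConversationPipeline:
    """Chains speech-to-text, the language model, and text-to-speech for one turn."""

    stt: SpeechToText
    llm: TextGenerator
    tts: TextToSpeech
    context: str  # the combined character prompt

    def respond_to_text(self, message: str) -> Tuple[str, bytes]:
        # Typed input goes straight to the language model.
        reply = self.llm(self.context, message)
        return reply, self.tts(reply)

    def respond_to_speech(self, audio: bytes) -> Tuple[str, str, bytes]:
        # Spoken input is transcribed first, then handled like typed input.
        transcript = self.stt(audio)
        reply, reply_audio = self.respond_to_text(transcript)
        return transcript, reply, reply_audio


# Usage with stub services (no network calls).
pipeline = ConversationPipeline(
    stt=lambda audio: audio.decode("utf-8"),
    llm=lambda context, message: f"Woof! You said: {message}",
    tts=lambda text: text.encode("utf-8"),
    context="You are Buddy, a friendly dog.",
)
transcript, reply, reply_audio = pipeline.respond_to_speech(b"hello")
```

In a real deployment each stub would be replaced by a client for the corresponding Google service. The reply audio would also feed the animation stage described next.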

Animation

To enable an interactive visual experience, we created a ‘talking’ 3D avatar that animates based on the pattern and intonation of the generated voice. Using the MediaPipe framework, we leveraged a new audio-to-blendshapes machine learning model for generating facial expressions and lip movements that synchronize to the voice pattern.
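One common way to keep lip motion in sync with the voice is to index the animation by the audio playback clock rather than by wall-clock time. That way, buffering or dropped render frames cannot make the two drift apart. The sketch below assumes the model emits blendshape weights as evenly spaced frames at a known rate. The `weights_at` helper, the 4 frames-per-second rate, and the `jawOpen` name are illustrative assumptions, not details from the template.

```python
import math
from typing import Dict, Sequence

Weights = Dict[str, float]


def weights_at(playback_seconds: float,
               frames: Sequence[Weights],
               frames_per_second: float) -> Weights:
    """Blendshape weights for the current audio playback position.

    Assumes frames are evenly spaced at `frames_per_second`. The result is
    linearly interpolated between the two nearest frames and held at the
    first/last frame outside the clip.
    """
    if not frames:
        return {}
    position = max(playback_seconds, 0.0) * frames_per_second
    i0 = min(int(math.floor(position)), len(frames) - 1)
    i1 = min(i0 + 1, len(frames) - 1)
    alpha = position - i0 if i1 != i0 else 0.0
    names = set(frames[i0]) | set(frames[i1])
    return {
        name: (1.0 - alpha) * frames[i0].get(name, 0.0)
        + alpha * frames[i1].get(name, 0.0)
        for name in names
    }


# Hypothetical model output at 4 frames per second.
frames = [{"jawOpen": 0.0}, {"jawOpen": 0.6}, {"jawOpen": 0.2}]
mid = weights_at(0.375, frames, frames_per_second=4.0)  # halfway between frames 1 and 2
end = weights_at(10.0, frames, frames_per_second=4.0)   # past the end: holds last frame
```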

Blendshapes are control parameters that animate a 3D avatar through a small set of weights. Our audio-to-blendshapes model predicts these weights from speech input in real time to drive the animated avatar. The model is trained with TensorFlow on ‘talking head’ videos, where we use 3D face tracking to learn a mapping from speech to facial blendshapes, as described in this paper.
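In the common linear formulation of blendshapes, each shape stores per-vertex offsets from a neutral face. The animated face is then the neutral mesh plus the weighted sum of those offsets. The sketch below is a toy illustration of that idea on a two-vertex "mesh". The shape names and numbers are made up, and this is not the template's rendering code.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def apply_blendshapes(neutral: Sequence[Vec3],
                      deltas: Sequence[Sequence[Vec3]],
                      weights: Sequence[float]) -> List[Vec3]:
    """Linear blendshape model: vertex = neutral + sum_k weight_k * delta_k."""
    if len(deltas) != len(weights):
        raise ValueError("expected one weight per blendshape")
    result = []
    for v, (x, y, z) in enumerate(neutral):
        for delta, w in zip(deltas, weights):
            dx, dy, dz = delta[v]  # offset of vertex v in this blendshape
            x, y, z = x + w * dx, y + w * dy, z + w * dz
        result.append((x, y, z))
    return result


# Toy two-vertex "face" with two hypothetical blendshapes.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
jaw_open = [(0.0, -1.0, 0.0), (0.0, -1.0, 0.0)]  # moves both vertices down
smile = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0)]       # moves the second vertex outward
posed = apply_blendshapes(neutral, [jaw_open, smile], weights=[0.5, 1.0])
```

Because the model only outputs one weight per blendshape rather than a position for every vertex, its output stays small. The same weights can also drive any avatar rigged with matching shapes.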

Once the model has produced the blendshape weights, we use them to morph the facial expressions and lip motion of the 3D avatar with three.js, the open-source JavaScript 3D library.
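In three.js, a mesh with morph targets exposes a `morphTargetDictionary`, which maps target names to indices, and a `morphTargetInfluences` array holding one weight per target. The Python sketch below mirrors that layout to show the per-frame bookkeeping. It writes named weights from the model into the influence array, clamps them to [0, 1], optionally smooths them, and reports names that the avatar's rig does not define. The `update_influences` helper, the clamping and the exponential smoothing are our own assumptions for illustration. They are not part of the template or of three.js.

```python
from typing import Dict, List


def update_influences(influences: List[float],
                      morph_target_dictionary: Dict[str, int],
                      weights: Dict[str, float],
                      smoothing: float = 0.0) -> List[str]:
    """Writes named blendshape weights into an influence array in place.

    `smoothing` in [0, 1) blends the previous value with the new one to
    reduce frame-to-frame jitter; 0 disables it. Returns the names that
    have no matching morph target on this avatar.
    """
    if not 0.0 <= smoothing < 1.0:
        raise ValueError("smoothing must be in [0, 1)")
    unmatched = []
    for name, weight in weights.items():
        index = morph_target_dictionary.get(name)
        if index is None:
            unmatched.append(name)
            continue
        target = min(max(weight, 0.0), 1.0)  # clamp to the [0, 1] range
        influences[index] = smoothing * influences[index] + (1.0 - smoothing) * target
    return unmatched


# Hypothetical avatar rig with two morph targets.
dictionary = {"jawOpen": 0, "mouthSmileLeft": 1}
influences = [0.0, 0.0]
missing = update_influences(
    influences, dictionary,
    {"jawOpen": 1.2, "mouthSmileLeft": 0.4, "eyeBlinkLeft": 0.9},
)
first_frame = list(influences)
update_influences(influences, dictionary, {"jawOpen": 0.0}, smoothing=0.5)
```

Reporting unmatched names helps when one model output drives avatars whose rigs name or number their blendshapes differently.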

Character Design

In crafting Buddy, our intent was to explore how a rich backstory and distinct personality can help users form an emotional bond with a character. Our aim was not just to raise the level of engagement, but to demonstrate how a character's traits, such as a sense of humor, can shape the way users interact with it.

A content writer developed a captivating backstory to ground this character. This backstory, along with its knowledge base, is what gives depth to its personality and brings it to life.

We further sought to incorporate recognizable non-verbal cues, like facial expressions, as indicators of the interaction's progression. For instance, when the character appears deep in thought, it's a sign that the model is formulating its response.
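One way to drive cues like the "thinking" expression is a small state machine that the UI advances as the conversation progresses. The states, event names, and expression presets below are hypothetical. The post does not describe how the template sequences its expressions.

```python
from enum import Enum, auto
from typing import Dict, List, Tuple


class AvatarState(Enum):
    IDLE = auto()
    LISTENING = auto()
    THINKING = auto()   # the model is formulating its response
    SPEAKING = auto()


# Allowed transitions: (current state, event) -> next state.
TRANSITIONS: Dict[Tuple[AvatarState, str], AvatarState] = {
    (AvatarState.IDLE, "user_started"): AvatarState.LISTENING,
    (AvatarState.IDLE, "message_sent"): AvatarState.THINKING,  # typed text skips listening
    (AvatarState.LISTENING, "message_sent"): AvatarState.THINKING,
    (AvatarState.THINKING, "audio_started"): AvatarState.SPEAKING,
    (AvatarState.THINKING, "request_failed"): AvatarState.IDLE,
    (AvatarState.SPEAKING, "audio_finished"): AvatarState.IDLE,
}

# Hypothetical expression preset shown in each state.
EXPRESSIONS: Dict[AvatarState, str] = {
    AvatarState.IDLE: "neutral",
    AvatarState.LISTENING: "attentive",
    AvatarState.THINKING: "pondering",
    AvatarState.SPEAKING: "lip_sync",
}


def next_state(state: AvatarState, event: str) -> AvatarState:
    """Returns the next state, rejecting events that make no sense right now."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"event {event!r} not allowed in state {state.name}") from None


# Walk through one spoken exchange and record the expressions shown.
state = AvatarState.IDLE
shown: List[str] = []
for event in ["user_started", "message_sent", "audio_started", "audio_finished"]:
    state = next_state(state, event)
    shown.append(EXPRESSIONS[state])
```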

Prompt Structure

Finally, to make the avatar easily customizable with simple text inputs, we designed the prompt structure to have three parts: personality, backstory, and knowledge base. We combine all three pieces into one large prompt and send it to the PaLM API as the context.
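A minimal sketch of that assembly step follows. The section labels, the blank-line separators, and the sample text are assumptions for illustration. The template's actual prompt wording and layout may differ. Keeping the three parts as separate inputs lets a persona be swapped, for example from Buddy to an insurance agent, by changing a string or two.

```python
def build_context(personality: str, backstory: str, knowledge_base: str) -> str:
    """Combines the three character inputs into one context string.

    Empty parts are skipped so a character can be defined with, say,
    only a personality and a knowledge base.
    """
    sections = [
        ("Personality", personality),
        ("Backstory", backstory),
        ("Knowledge base", knowledge_base),
    ]
    parts = [f"{title}:\n{text.strip()}" for title, text in sections if text.strip()]
    return "\n\n".join(parts)


# Illustrative inputs, not the template's actual Buddy prompt.
buddy = build_context(
    personality="You are Buddy, a friendly and playful dog with a good sense of humor.",
    backstory="Buddy grew up on a farm and loves chasing tennis balls.",
    knowledge_base="Dogs need daily walks, fresh water, and regular vet checkups.",
)
agent = build_context(
    personality="You are a calm, reliable insurance agent.",
    backstory="",
    knowledge_base="Explain policy terms in plain language.",
)
```

The resulting string is what would be passed as the context of the PaLM API request. The request itself is omitted here.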

Partnerships and Use Cases

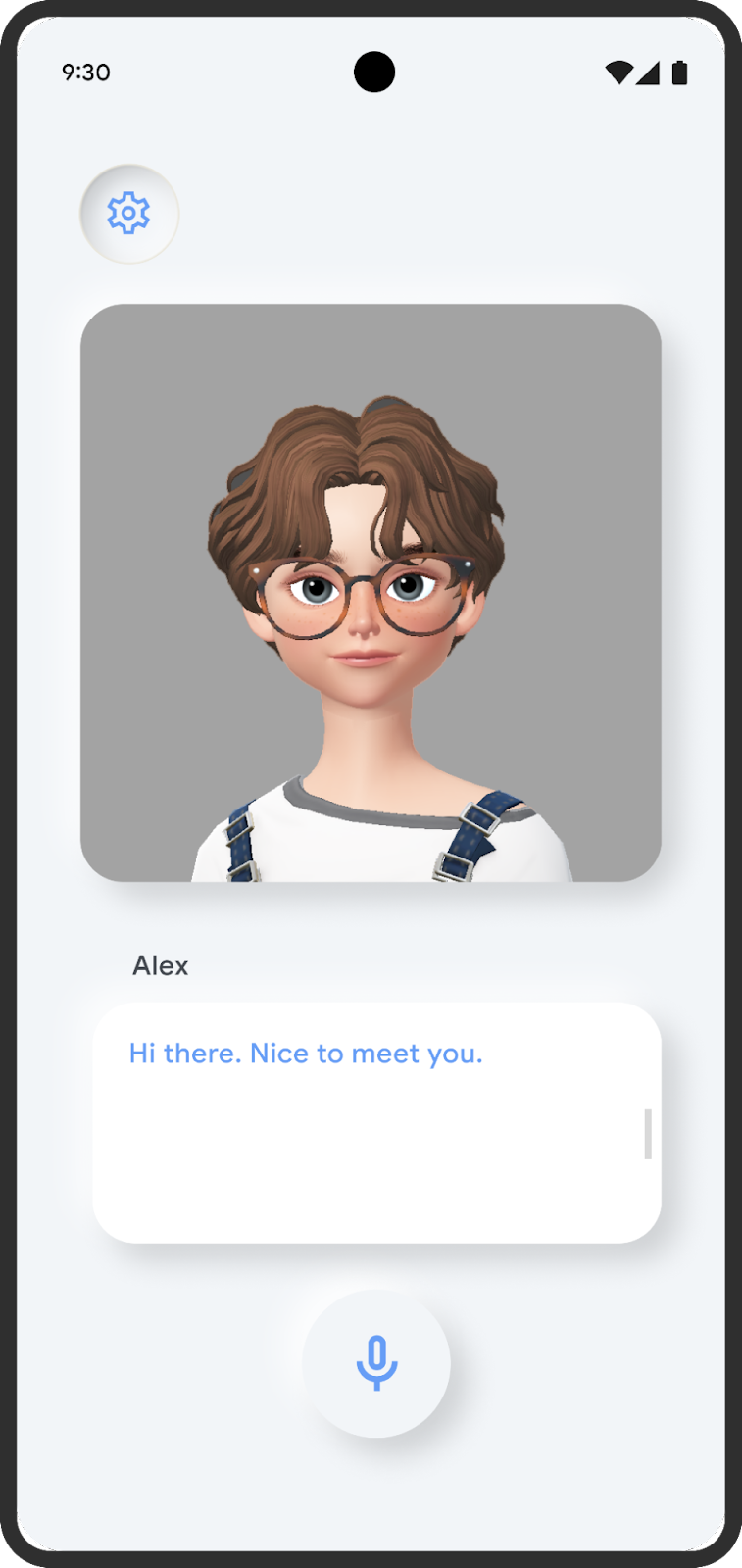

ZEPETO, beloved by Gen Z, is an avatar-centric social universe where users can fully customize their digital personas, explore fashion trends, and engage in vibrant self-expression and virtual interaction. Our Talking Character template allows users to create their own avatars, dress them up in different clothes and accessories, and interact with other users in virtual worlds. We are working with ZEPETO and have tested their metaverse avatar, which has over 50 blendshapes, with great results.

"Seeing an AI character come to life as a ZEPETO avatar and speak with such fluidity and depth is truly inspiring. We believe a combination of advanced language models and avatars will infinitely expand what is possible in the metaverse, and we are excited to be a part of it." - Daewook Kim, CEO, ZEPETO

The demo is not restricted to metaverse use cases, though. It shows how characters can bring a text corpus or knowledge base to life in any domain.

For example, in gaming, LLM-powered NPCs could enrich a game's universe and deepen the user experience through natural-language conversations about the game’s world, history and characters.

In education, characters can be created to represent the different subjects a student needs to study, to represent different levels of difficulty in an interactive educational quiz, or to portray specific figures and events from history, helping people learn about different cultures, places, people and times.

In commerce, the Talking Character kit could be used to bring brands and stores to life, or to power merchants in an eCommerce marketplace, democratizing tools that make their stores more engaging and personalized and give users a better experience. It could also be used to create avatars for customers as they explore a retail environment, and to gamify the experience of shopping in the real world.

More broadly, any brand, product or service can use this demo to bring to life a talking agent that interacts with users based on any knowledge set or tone of voice, acting as a brand ambassador, customer service representative, or sales assistant.

Open Source and Developer Support

Google’s Partner Innovation team has developed a series of Generative AI Templates showcasing the possibilities when combining LLMs with existing Google APIs and technologies to solve specific industry use cases. Each template was launched at I/O in May this year, and open-sourced for developers and partners to build upon.

We will work closely with several partners on an early access program (EAP) that allows us to co-develop and launch specific features and experiences based on these templates, as and when the API is released in each respective market (APAC timings TBC). Talking Character will also be open sourced so developers and startups can build on top of the experiences we have created. Google’s Partner Innovation team will continue to build features and tools in partnership with local markets to expand on the R&D already underway. View the project on GitHub here.

Acknowledgements

We would like to acknowledge the invaluable contributions of the following people to this project: Mattias Breitholtz, Yinuo Wang, Vivek Kwatra, Tyler Mullen, Chuo-Ling Chang, Boon Panichprecha, Lek Pongsakorntorn, Zeno Chullamonthon, Yiyao Zhang, Qiming Zheng, Joyce Li, Xiao Di, Heejun Kim, Jonghyun Lee, Hyeonjun Jo, Jihwan Im, Ajin Ko, Amy Kim, Dream Choi, Yoomi Choi, KC Chung, Edwina Priest, Joe Fry, Bryan Tanaka, Sisi Jin, Agata Dondzik, Miguel de Andres-Clavera.
