Google Ads

A typical use case is a company that wants to offer Google Ads natively on its platform to its users. For example, customers who run an online store on Shopify can promote their business with Google Ads in just a few clicks, without needing to go to the Google Ads platform. They can do it directly on Shopify’s platform, and the Google Ads API makes this possible.

Anyone can use the app, called Fran Ads, to save significant time on product development. Just follow the simple installation steps in the frontend and backend README files on the GitHub repo! The app uses React for the frontend and Django for the backend, two of the most popular choices for web development.

Blasco acts as an external Product Manager for Google’s strategic partners, driving the entire product development lifecycle. He created this project to help Google’s partners and businesses seeking to offer Google Ads to their users.

The goal is to accelerate the Google Ads integration process and decrease the associated development costs. Some companies are using Fran Ads to see what an integration looks like, while others are using the technical guide to learn how to start using the Google Ads API. In general, companies can use Fran Ads as an SDK to begin working with elements of the Google Ads API, and as a guide for integrating with Google. This project will minimize the number of times the wheel needs to be reinvented, accelerating innovation and facilitating adoption. Developers can clone the code repositories, follow the steps, and have a web app integrated with Google Ads in just a few minutes. They can adapt and build on top of this project, or they can use only the functions they need for the features they want to develop.

You will also learn how refresh tokens work for Google APIs and how to manage them in your web application.
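As a rough illustration of the refresh-token flow, here is a minimal sketch that trades a stored refresh token for a short-lived access token at Google's OAuth 2.0 token endpoint. The function names `build_refresh_request` and `fetch_access_token` are hypothetical and are not taken from the Fran Ads code. The sketch assumes you already have an OAuth client ID, a client secret, and a refresh token obtained when the user granted consent.

```python
import json
import urllib.parse
import urllib.request

# Google's OAuth 2.0 token endpoint.
TOKEN_URL = "https://oauth2.googleapis.com/token"


def build_refresh_request(client_id, client_secret, refresh_token):
    """Build the POST request that trades a refresh token for an access token.

    Hypothetical helper for illustration; not part of Fran Ads.
    """
    form = urllib.parse.urlencode({
        "grant_type": "refresh_token",
        "client_id": client_id,
        "client_secret": client_secret,
        "refresh_token": refresh_token,
    }).encode("ascii")
    return urllib.request.Request(
        TOKEN_URL,
        data=form,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def fetch_access_token(client_id, client_secret, refresh_token):
    """Send the request and return the access token from the JSON response."""
    request = build_refresh_request(client_id, client_secret, refresh_token)
    with urllib.request.urlopen(request, timeout=10) as response:
        payload = json.load(response)
    return payload["access_token"]
```

In a web application, the refresh token would typically be stored server-side per user, while the access token is requested again whenever the previous one expires.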

Francisco wrote a detailed technical guide explaining how to build every feature of the app. Some of the most important features are:

1. Create a new Google Ads account

2. Link an existing Google Ads account

As you can see from the list above, the app will create Smart Campaigns, a simplified, automated campaign type designed for new advertisers and SMBs.

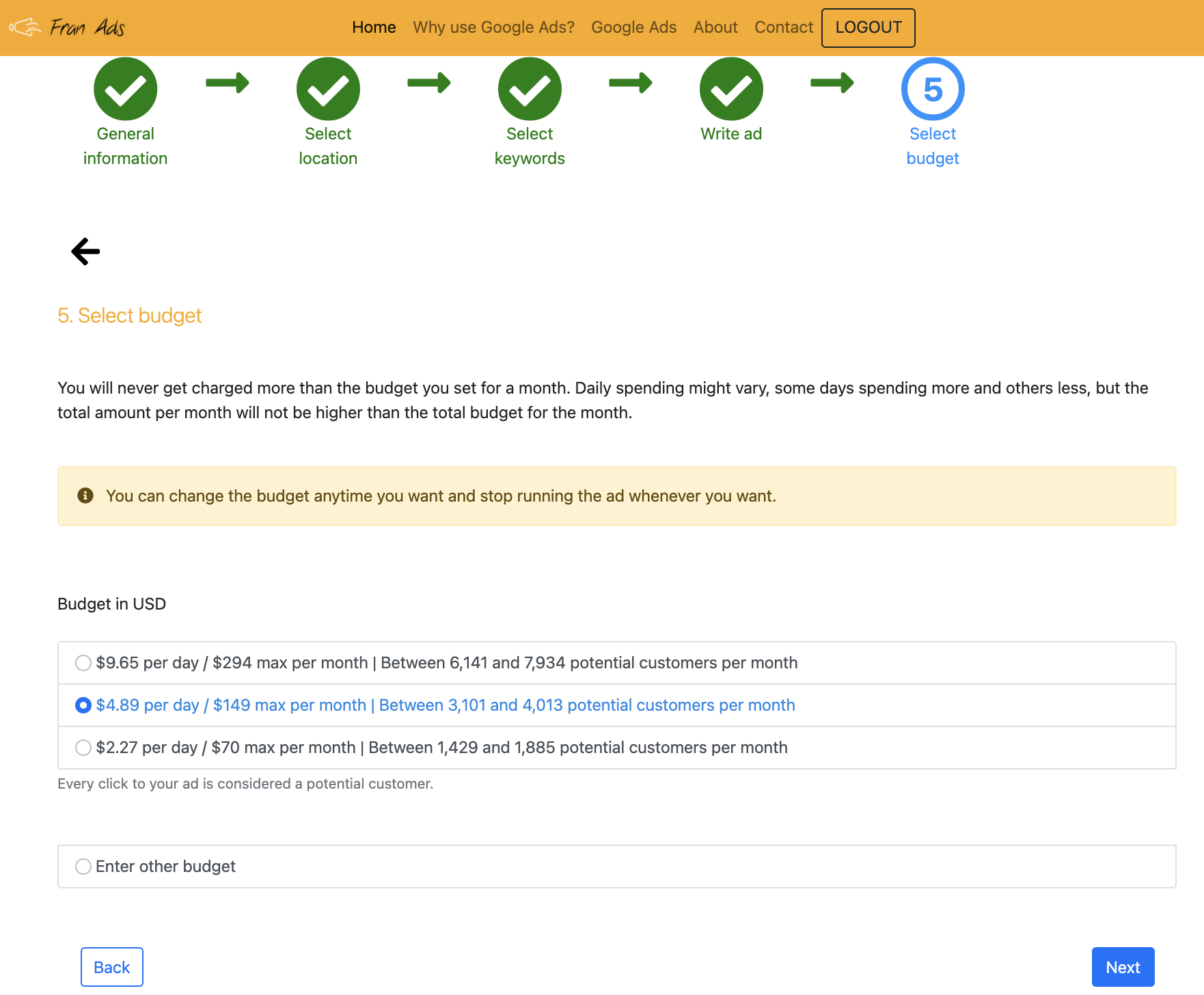

Google has made its suggestion services publicly available through the Google Ads API. Fran Ads uses those services to recommend keyword themes, headlines and descriptions for the ad, and a budget. These recommendations are specific to each advertiser and depend on several factors, such as type of business, location, and keyword themes.

An example of three Google recommendations for an advertiser.

You can also see an alert message explaining that the budget can be changed at any time, so users can pause spending on the campaign. This matters because many new users, especially SMBs, are hesitant to spend on something new. It is therefore important to communicate that the decision they are making at that moment is not set in stone.

When you start using Fran Ads, you will see guidance that helps users complete the tasks they want.

Guidance on how to complete tasks based on Google’s best practices.

Google’s best practices suggest that advertisers use between seven and ten keyword themes per campaign. Fran Ads is therefore designed for users to select up to seven keyword themes, as shown in the image of step three of creating a Smart Campaign on Fran Ads. However, you can raise the limit to ten if you like.
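One way to picture the configurable cap is the small sketch below. It is an assumption about how such a limit could be enforced on the backend, not the way Fran Ads implements it. The names `MAX_KEYWORD_THEMES` and `add_keyword_theme` are hypothetical.

```python
# Hypothetical setting: 7 by default; raise it to 10 to allow
# the upper end of the recommended range.
MAX_KEYWORD_THEMES = 7


def add_keyword_theme(selected, theme, limit=MAX_KEYWORD_THEMES):
    """Return a new list with `theme` added, or raise if the cap is reached."""
    if theme in selected:
        # Selecting the same theme twice does not count against the cap.
        return list(selected)
    if len(selected) >= limit:
        raise ValueError(f"You can select up to {limit} keyword themes.")
    return [*selected, theme]
```

Passing `limit=10` to the call, or changing the constant, lifts the cap without touching the rest of the logic.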

The technical guide also provides: