Posted by Gina Biernacki, Product Manager

At Google, we're committed to making authentication safer and easier for our developers, so users can easily access the apps and websites they love. Google Identity Services (GIS) continues to advance our authentication solutions, which offer immense value to our partner ecosystem, with highly requested features such as a new verified phone number embedded directly in our authentication flows.

For developers, our focus has always been to offer a frictionless experience that makes it easier for users to onboard and return to partner platforms, while also helping developers build a trusted relationship with their users.

What’s New

Today we are announcing a few new features that are now available across our libraries:

Android

- Verified Phone Number on Android

- Phone Number Hint on Android

Web

- One Tap on Intelligent Tracking Prevention (ITP) Browsers

iOS and macOS

- Added macOS Support

Android

Verified Phone Number

As an identity provider, we are making it seamless for developers to supplement their user data with information from the user's Google Account, while ensuring that clear user consent is woven into the experience. We understand that phone numbers are a critical piece of account management, both for our users in mobile-first markets and for developers that require increased identity verification or two-step verification.

On Android devices, developers can now ask users to share a verified phone number associated with their Google Account as part of the sign-up flow. Developers have told us that safeguarding their users through SMS verification represents a significant cost to their business. Now developers can simply request a phone number from the user that Google has already verified, reducing their overall SMS costs during onboarding.

Verified Phone Number is now available on GIS for Android and we plan to expand to other surfaces in the future.

Phone Number Hint

Another way a developer can request a user's phone number is through the new Phone Number Hint API on Android devices. Phone Number Hint lets users share a phone number from the SIM cards in their device, independent of any sign-up or sign-in flow. This API is preferred over the native Android API call because it does not require any additional permissions, reducing time and effort for developers. The Phone Number Hint API does not require the user to be signed in to their Google Account and does not save any information to the Google Account.

Web

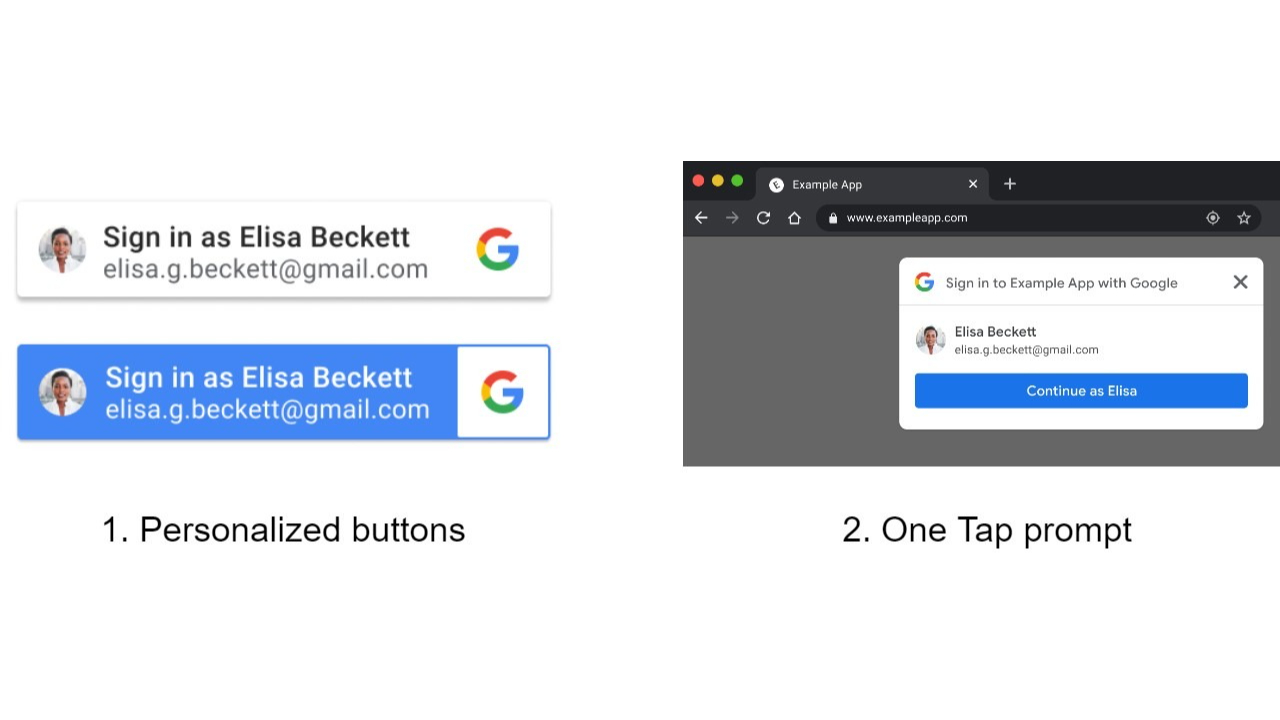

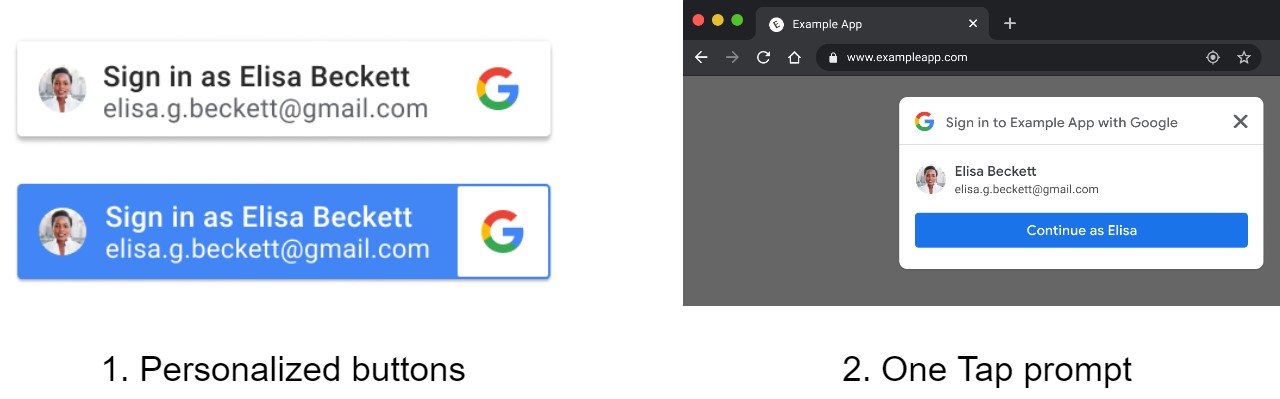

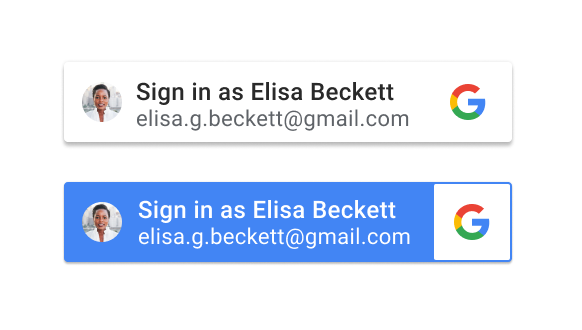

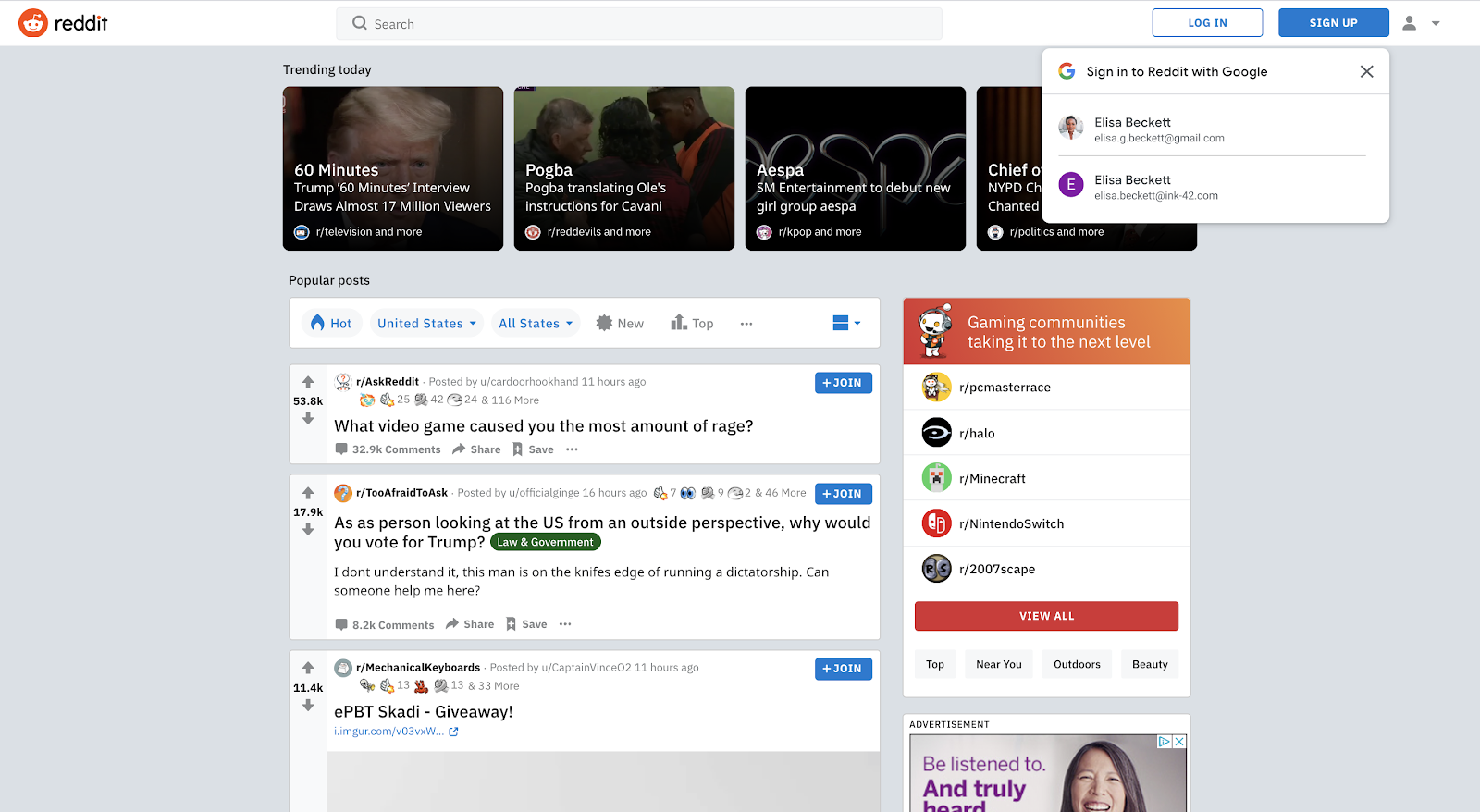

One Tap on ITP Browsers

ITP (Intelligent Tracking Prevention) browsers restrict the use of third-party cookies, preventing the tracking of users across the web. GIS One Tap is now available on ITP browsers, including Safari, Firefox and Chrome on iOS. This creates a One Tap experience that's consistent across platforms, for both users and developers. Our developers prefer One Tap for many reasons:

- Increased user conversion

- Industry-leading security

- Reduced risks associated with password management

- Sign-in and sign-up without redirecting users to a dedicated sign-in or sign-up page

- Fewer duplicate accounts, thanks to personalized prompts and automatic sign-in on return visits

Once the user chooses to continue, a popup window will be opened. The UX in the popup window is similar to the standard One Tap user experience.
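When the user finishes in the popup, the page should receive the same result as with standard One Tap, assuming it uses the callback mode: GIS calls the configured `callback` with a response whose `credential` field is a JWT-encoded ID token. The TypeScript sketch below reads that token's payload, for example to greet the user by name. The `CredentialResponse` and `IdTokenClaims` types are hand-written subsets, and the helper names are hypothetical. The signature is deliberately not checked, so these claims must not be trusted for authentication; verify the token on your server instead.

```typescript
// Hand-written subset of the object GIS passes to the One Tap callback.
interface CredentialResponse {
  credential: string; // JWT-encoded Google ID token
}

// Only a few of the claims a Google ID token carries.
interface IdTokenClaims {
  sub: string;
  email?: string;
  name?: string;
}

const BASE64URL_ALPHABET =
  "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789-_";

// Decode a base64url segment (RFC 4648, section 5) into raw bytes.
function base64UrlToBytes(segment: string): number[] {
  const input = segment.replace(/=+$/, ""); // padding is optional in JWTs
  const bytes: number[] = [];
  let buffer = 0;
  let bits = 0;
  for (let i = 0; i < input.length; i++) {
    const value = BASE64URL_ALPHABET.indexOf(input.charAt(i));
    if (value < 0) {
      throw new Error("invalid base64url character: " + input.charAt(i));
    }
    buffer = ((buffer << 6) | value) & 0xffffff; // keep only the low 24 bits
    bits += 6;
    if (bits >= 8) {
      bits -= 8;
      bytes.push((buffer >> bits) & 0xff); // emit one complete byte
    }
  }
  return bytes;
}

// Interpret bytes as UTF-8 using only standard ECMAScript functions.
function utf8BytesToString(bytes: number[]): string {
  return decodeURIComponent(
    bytes.map((b) => "%" + ("0" + b.toString(16)).slice(-2)).join(""),
  );
}

// Reads the payload WITHOUT checking the signature: acceptable for showing a
// name in the UI, never for deciding who the user is.
function readUnverifiedClaims(response: CredentialResponse): IdTokenClaims {
  const parts = response.credential.split(".");
  if (parts.length !== 3) {
    throw new Error("credential is not a three-part JWT");
  }
  return JSON.parse(utf8BytesToString(base64UrlToBytes(parts[1]))) as IdTokenClaims;
}
```

In a page, `readUnverifiedClaims` would be called from the callback passed to `google.accounts.id.initialize`, while the raw `credential` string is what gets sent to the backend for verification.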

We will roll out this new feature by default on October 12, 2022 to all developers who have enabled One Tap. Refer to our documentation to begin supporting One Tap on ITP browsers immediately or to opt out of default support.
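As a sketch of what opting in might look like in code, the snippet below sets the `itp_support` field of the configuration passed to `google.accounts.id.initialize`, which the GIS JavaScript reference describes as enabling the upgraded One Tap UX on ITP browsers. It also sets `auto_select`, the field behind automatic sign-in on return visits. The `buildOneTapConfig` and `startOneTap` helpers and the hand-written types are hypothetical, and treating `itp_support: false` as the opt-out is our assumption; the documentation is authoritative.

```typescript
// Hand-written subset of the GIS IdConfiguration fields used in this sketch.
interface IdConfiguration {
  client_id: string;
  callback: (response: { credential: string }) => void;
  auto_select?: boolean;
  itp_support?: boolean;
}

// Hypothetical helper: builds the configuration with an explicit ITP choice.
function buildOneTapConfig(
  clientId: string,
  onCredential: (response: { credential: string }) => void,
  enableItp: boolean,
): IdConfiguration {
  return {
    client_id: clientId,
    callback: onCredential,
    auto_select: true, // sign returning users in automatically when possible
    itp_support: enableItp, // true opts in; false is ASSUMED to opt out
  };
}

// Once https://accounts.google.com/gsi/client has loaded, the page has a
// global `google` object. Declared loosely so the sketch compiles without
// the official GIS type definitions.
declare const google: {
  accounts: { id: { initialize(config: IdConfiguration): void; prompt(): void } };
};

// Hypothetical entry point: configure One Tap with ITP support and show it.
function startOneTap(
  clientId: string,
  onCredential: (response: { credential: string }) => void,
): void {
  google.accounts.id.initialize(buildOneTapConfig(clientId, onCredential, true));
  google.accounts.id.prompt();
}
```

Keeping the configuration in a pure function makes the ITP choice easy to test and to flip without touching the code that loads GIS.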

iOS and macOS

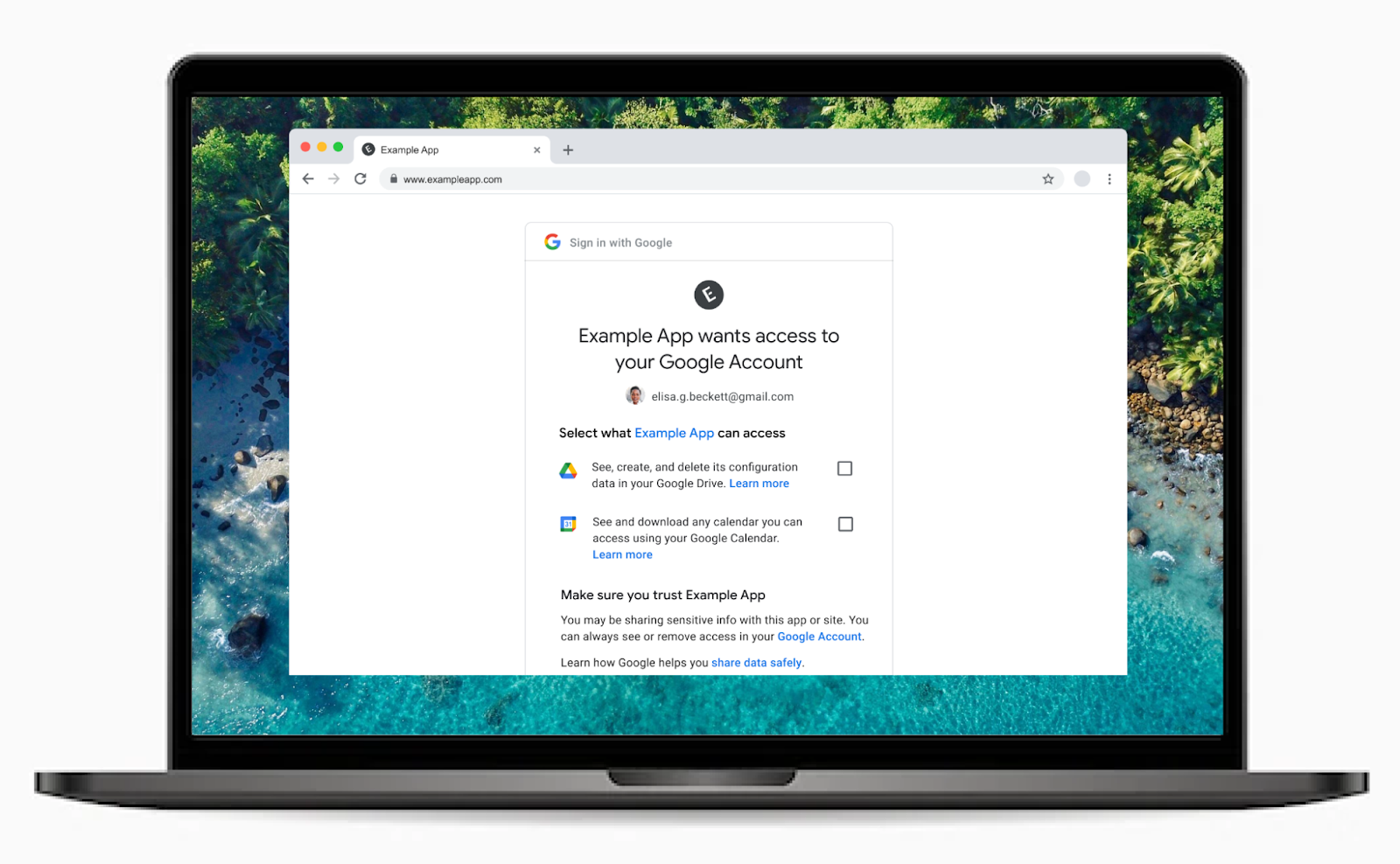

macOS Platform Expansion

Over the last few years, the Apple ecosystem has expanded to include a number of distinct platforms (iOS, iPadOS, macOS, tvOS, watchOS). As a step toward supporting the broader Apple ecosystem, we have launched macOS support as part of Google Sign-In for iOS and macOS. This provides support for the core GIS experiences (authentication, authorization, and token storage) for native macOS apps.

Deprecations

Upcoming Deprecations

In addition to new features available in the GIS library, we also want to remind our developers about upcoming deprecations.

- Smart Lock for Passwords on Android Deprecation

- Google Sign-In for Websites Deprecation

Smart Lock for Passwords Deprecation

We are announcing the deprecation of the Smart Lock for Passwords Android SDK, which handles password saving and retrieval, ID token requests, and sign-in hint retrieval. All Smart Lock for Passwords functionality is now available in the GIS library. We recommend that all developers use the GIS library for new functionality. For existing integrations, Smart Lock for Passwords traffic will be redirected to the GIS library by default, without any changes needed from developers.

Google Sign-In for Websites Deprecation

We would like to remind our developers that on March 31, 2023 we will fully retire the Google Sign-In JavaScript Platform Library for web applications. Please check whether this affects your web application and, if necessary, plan a migration.

We are excited to see these new features continue to bring measurable value to both our developers and users, and we look forward to continuing to support our developers with their identity-related needs. Please visit our developer site to get started.

Posted by Alicja Heisig

Posted by Alicja Heisig