Deep neural networks have radically transformed natural language processing (NLP) in the last decade, primarily through their application in data centers using specialized hardware. However, concerns such as preserving user privacy, eliminating network latency, enabling offline functionality, and reducing operating costs have rapidly spurred the development of NLP models that can run on-device rather than in data centers. Yet mobile devices have limited memory and processing power, so models running on them must be small and efficient without compromising quality.

Last year, we published a neural architecture called PRADO, which at the time achieved state-of-the-art performance on many text classification problems, using a model with fewer than 200K parameters. While most models use a fixed number of parameters per token, PRADO used a network structure that required extremely few parameters to learn the most relevant or useful tokens for the task.

Today we describe a new extension to the model, called pQRNN, which advances the state of the art for NLP performance with a minimal model size. The novelty of pQRNN is in how it combines a simple projection operation with a quasi-RNN encoder for fast, parallel processing. We show that the pQRNN model is able to achieve BERT-level performance on a text classification task with orders of magnitude fewer parameters.

What Makes PRADO Work?

When developed a year ago, PRADO exploited NLP domain-specific knowledge on text segmentation to reduce the model size and improve performance. Normally, the text input to NLP models is first processed into a form that is suitable for the neural network, by segmenting text into pieces (tokens) that correspond to values in a predefined universal dictionary (a list of all possible tokens). The neural network then uniquely identifies each segment using a trainable parameter vector; together, these vectors make up the embedding table. However, the way in which text is segmented has a significant impact on the model performance, size, and latency. The figure below shows the spectrum of approaches used by the NLP community and their pros and cons.

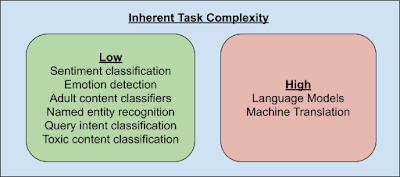
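To make the size side of this trade-off concrete, the sketch below counts embedding-table parameters for three segmentation granularities. The vocabulary sizes and the embedding width are illustrative assumptions, not figures from PRADO or any particular model, and `embedding_table_params` is a hypothetical helper.

```python
def embedding_table_params(vocab_size, embedding_dim):
    """One trainable vector of length `embedding_dim` per dictionary entry."""
    return vocab_size * embedding_dim


# Hypothetical dictionary sizes, chosen only to show the order of magnitude.
VOCABULARIES = {
    "characters": 256,
    "word pieces": 30_000,
    "words": 1_000_000,
}
EMBEDDING_DIM = 64  # assumed embedding width

for name, vocab_size in VOCABULARIES.items():
    params = embedding_table_params(vocab_size, EMBEDDING_DIM)
    print(f"{name:>12}: {params:,} embedding parameters")
```

Under these assumptions the embedding table alone grows linearly with the dictionary, which is why coarser segmentation quickly dominates the parameter budget of a small model.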

Since the number of text segments is such an important parameter for model performance and compression, it raises the question of whether or not an NLP model needs to be able to distinctly identify every possible text segment. To answer this question we look at the inherent complexity of NLP tasks.

Only a few NLP tasks (e.g., language models and machine translation) need to know subtle differences between text segments and thus need to be capable of uniquely identifying all possible text segments. In contrast, the majority of other tasks can be solved by knowing a small subset of these segments. Furthermore, this subset of task-relevant segments will likely not be the most frequent, as a significant fraction of the most frequent segments will be articles such as "a", "an", and "the", which for many tasks are not critical. Hence, allowing the network to determine the most relevant segments for a given task results in better performance. In addition, the network does not need to be able to uniquely identify these segments, but only needs to recognize clusters of text segments. For example, a sentiment classifier just needs to know the segment clusters that are strongly correlated with the sentiment in the text.

Leveraging these insights, PRADO was designed to learn clusters of text segments from words rather than word pieces or characters, which enabled it to achieve good performance on low-complexity NLP tasks. Since word units are more meaningful, and yet the set of most relevant words for most tasks is reasonably small, far fewer model parameters are needed to learn such a reduced subset of relevant word clusters.

Improving PRADO

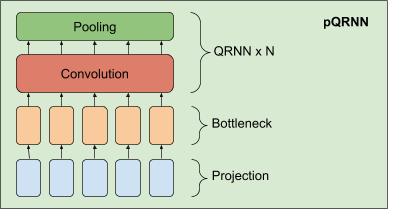

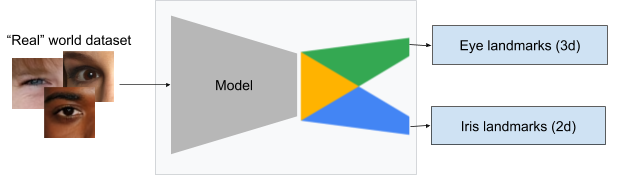

Building on the success of PRADO, we developed an improved NLP model, called pQRNN. This model is composed of three building blocks: a projection operator that converts tokens in text to a sequence of ternary vectors, a dense bottleneck layer, and a stack of QRNN encoders.
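As a rough illustration of the first block, the sketch below hashes each whitespace-separated token and reads the hash two bits at a time, mapping each bit pair to -1, 0, or 1. The hash function (`shake_128`), the bit-pair mapping, the whitespace tokenizer, and the names `project_token` and `project_text` are all assumptions made for this example; the released PRADO code may differ on each of these points.

```python
import hashlib

# Assumed mapping from two fingerprint bits to a ternary value.
# It is symmetric: +1 and -1 are equally likely for uniformly random bits.
_TERNARY = {0b00: 0, 0b01: 1, 0b10: -1, 0b11: 0}


def project_token(token, dim=8):
    """Return a deterministic ternary vector of length `dim` for `token`."""
    n_bytes = (2 * dim + 7) // 8  # two fingerprint bits per output feature
    digest = hashlib.shake_128(token.encode("utf-8")).digest(n_bytes)
    bits = int.from_bytes(digest, "big")
    return [_TERNARY[(bits >> (2 * i)) & 0b11] for i in range(dim)]


def project_text(text, dim=8):
    """Project every whitespace-separated token; no vocabulary is stored."""
    return [project_token(token, dim) for token in text.split()]


for token, vector in zip("the movie was great".split(),
                         project_text("the movie was great")):
    print(token, vector)
```

Because the vector is computed from the token itself, no embedding table has to be stored, and any trainable parameters sit in the layers that consume these vectors.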

The implementation of the projection layer in pQRNN is identical to that used in PRADO and helps the model learn the most relevant tokens without a fixed set of parameters to define them. It first fingerprints the tokens in the text and then converts each fingerprint to a ternary feature vector using a simple mapping function. This results in a ternary vector sequence with a balanced symmetric distribution that uniquely represents the text. This representation is not directly useful on its own, since it carries no information needed to solve the task of interest and the network has no control over it. We therefore combine it with a dense bottleneck layer to allow the network to learn a per-word representation that is relevant for the task at hand. The representation resulting from the bottleneck layer still does not take the context of the word into account, so we learn a contextual representation by using a stack of bidirectional QRNN encoders. The result is a network that is capable of learning a contextual representation from just text input without employing any kind of preprocessing.
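The sequential part of a QRNN encoder can be sketched with the f-pooling recurrence from the published QRNN formulation, h_t = f_t * h_{t-1} + (1 - f_t) * z_t. This is a minimal sketch that assumes pQRNN uses this pooling variant; in a real encoder the candidate values `z` and forget gates `f` would come from convolutions over the bottleneck output, computed for all time steps in parallel, whereas here they are passed in directly. The names `f_pool` and `bidirectional_f_pool` are hypothetical.

```python
def f_pool(z, f, reverse=False):
    """Apply h_t = f_t * h_prev + (1 - f_t) * z_t elementwise over time.

    `z` and `f` are lists of equal-length float vectors, one per time step.
    """
    if not z:
        return []
    steps = range(len(z))
    if reverse:
        steps = reversed(steps)
    h = [0.0] * len(z[0])  # initial hidden state
    outputs = [None] * len(z)
    for t in steps:
        h = [ft * hp + (1.0 - ft) * zt for zt, ft, hp in zip(z[t], f[t], h)]
        outputs[t] = h
    return outputs


def bidirectional_f_pool(z, f):
    """Concatenate forward and backward states at every time step.

    Simplification: both directions reuse the same gates here; a real
    bidirectional encoder would compute separate gates per direction.
    """
    forward = f_pool(z, f)
    backward = f_pool(z, f, reverse=True)
    return [fw + bw for fw, bw in zip(forward, backward)]


z = [[1.0], [0.0], [1.0]]
f = [[0.5], [0.5], [0.5]]
print(bidirectional_f_pool(z, f))
```

Only this lightweight elementwise loop runs step by step; the gate computations have no dependence on the previous hidden state, which is what allows most of the work to be parallelized across the sequence.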

Performance

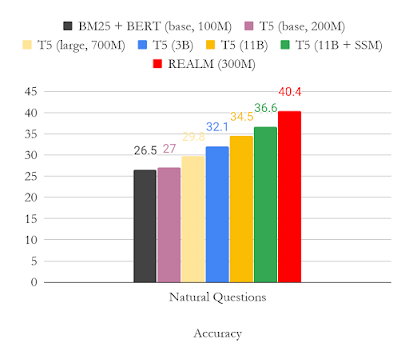

We evaluated pQRNN on the civil_comments dataset and compared it with the BERT model on the same task. Because model size is proportional to the number of parameters, pQRNN is much smaller than BERT. In addition, pQRNN is quantized, which reduces the model size by a further factor of 4. The public pretrained version of BERT performed poorly on the task; hence, the comparison is done against a BERT version that is pretrained on several different relevant multilingual data sources to achieve the best possible performance.

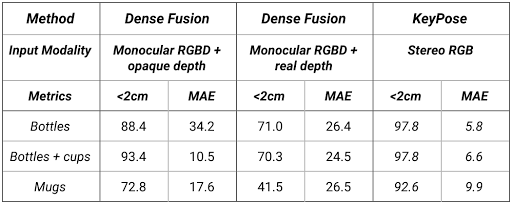

We capture the area under the curve (AUC) for the two models. Without any kind of pre-training and just trained on the supervised data, the AUC for pQRNN is 0.963 using 1.3 million quantized (8-bit) parameters. With pre-training on several different data sources and fine-tuning on the supervised data, the BERT model gets 0.976 AUC using 110 million floating-point parameters.
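The storage implications of these parameter counts can be worked out with a short calculation. The sketch below uses the parameter counts and the 8-bit width quoted above, and assumes that BERT's floating-point parameters are stored as 32-bit values; `model_size_mb` is a hypothetical helper that ignores any overhead beyond the raw weights.

```python
def model_size_mb(num_params, bits_per_param):
    """Raw weight storage in megabytes (10**6 bytes)."""
    return num_params * bits_per_param / 8 / 1e6


PQRNN_PARAMS = 1_300_000    # 8-bit quantized parameters
BERT_PARAMS = 110_000_000   # floating-point parameters, assumed 32-bit

pqrnn_mb = model_size_mb(PQRNN_PARAMS, 8)
bert_mb = model_size_mb(BERT_PARAMS, 32)
param_ratio = BERT_PARAMS / PQRNN_PARAMS
size_ratio = bert_mb / pqrnn_mb

print(f"pQRNN weights: {pqrnn_mb:.1f} MB")
print(f"BERT weights:  {bert_mb:.1f} MB")
print(f"parameter ratio: {param_ratio:.0f}x, size ratio: {size_ratio:.0f}x")
```

Under the 32-bit assumption, quantization accounts for a factor of 4 in the size ratio on top of the difference in parameter count.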

Conclusion

We have demonstrated how our previous-generation model, PRADO, can serve as the foundation for the next generation of state-of-the-art lightweight text classification models. We present one such model, pQRNN, and show that this new architecture can nearly achieve BERT-level performance, despite using 300x fewer parameters and being trained on only the supervised data. To stimulate further research in this area, we have open-sourced the PRADO model and encourage the community to use it as a jumping-off point for new model architectures.

Acknowledgements

We thank Yicheng Fan, Márius Šajgalík, Peter Young and Arun Kandoor for contributing to the open sourcing effort and helping improve the models. We would also like to thank Amarnag Subramanya, Ashwini Venkatesh, Benoit Jacob, Catherine Wah, Dana Movshovitz-Attias, Dang Hien, Dmitry Kalenichenko, Edgar Gonzàlez i Pellicer, Edward Li, Erik Vee, Evgeny Livshits, Gaurav Nemade, Jeffrey Soren, Jeongwoo Ko, Julia Proskurnia, Rushin Shah, Shirin Badiezadegan, Sidharth KV, Victor Cărbune and the Learn2Compress team for their support. We would like to thank Andrew Tomkins and Patrick Mcgregor for sponsoring this research project.