A mobile phone’s camera is a powerful tool for capturing everyday moments. However, capturing a dynamic scene using a single camera is fundamentally limited. For instance, if we wanted to adjust the camera motion or timing of a recorded video (e.g., to freeze time while sweeping the camera around to highlight a dramatic moment), we would typically need an expensive Hollywood setup with a synchronized camera rig. Would it be possible to achieve similar effects solely from a video captured using a mobile phone’s camera, without a Hollywood budget?

In “DynIBaR: Neural Dynamic Image-Based Rendering”, a best paper honorable mention at CVPR 2023, we describe a new method that generates photorealistic free-viewpoint renderings from a single video of a complex, dynamic scene. Neural Dynamic Image-Based Rendering (DynIBaR) can be used to generate a range of video effects, such as “bullet time” effects (where time is paused and the camera is moved at a normal speed around a scene), video stabilization, depth of field, and slow motion, from a single video taken with a phone’s camera. We demonstrate that DynIBaR significantly advances video rendering of complex moving scenes, opening the door to new kinds of video editing applications. We have also released the code on the DynIBaR project page, so you can try it out yourself.

| Given an in-the-wild video of a complex, dynamic scene, DynIBaR can freeze time while allowing the camera to continue to move freely through the scene. |

Background

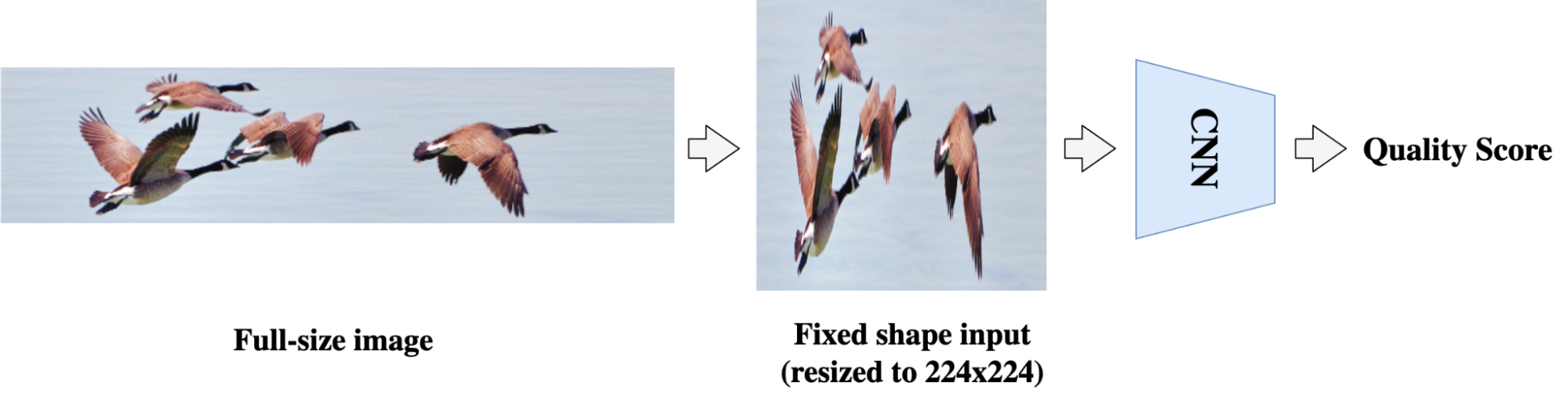

The last few years have seen tremendous progress in computer vision techniques that use neural radiance fields (NeRFs) to reconstruct and render static (non-moving) 3D scenes. However, most of the videos people capture with their mobile devices depict moving objects, such as people, pets, and cars. These moving scenes lead to a much more challenging 4D (3D + time) scene reconstruction problem that cannot be solved using standard view synthesis methods.

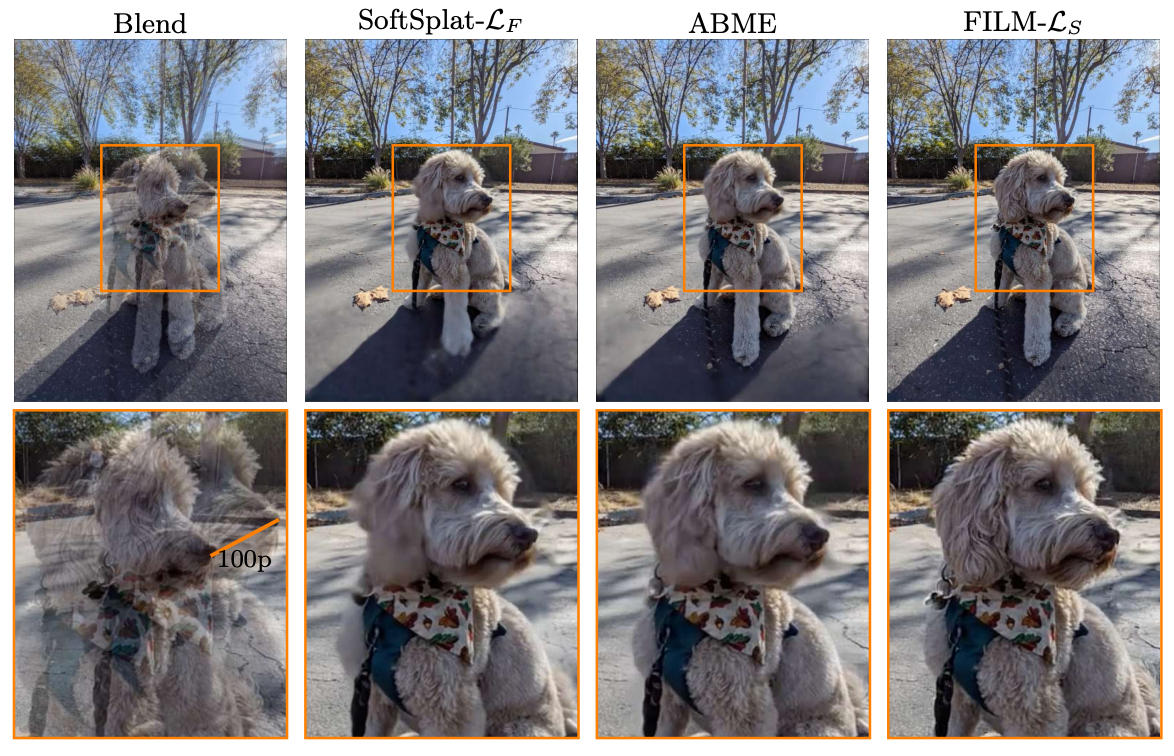

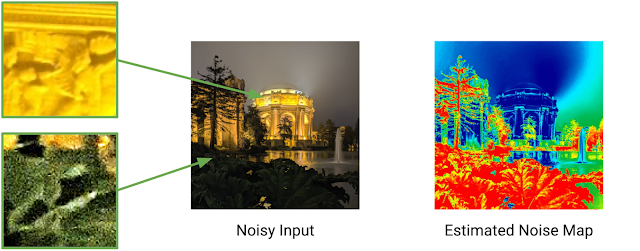

| Standard view synthesis methods output blurry, inaccurate renderings when applied to videos of dynamic scenes. |

Other recent methods tackle view synthesis for dynamic scenes using space-time neural radiance fields (i.e., Dynamic NeRFs), but such approaches still exhibit inherent limitations that prevent their application to casually captured, in-the-wild videos. In particular, they struggle to render high-quality novel views from videos featuring long time duration, uncontrolled camera paths and complex object motion.

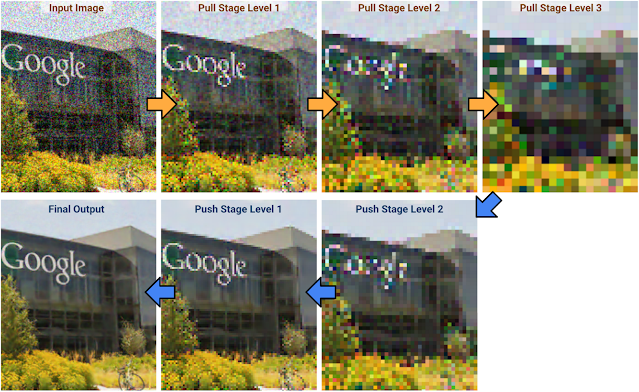

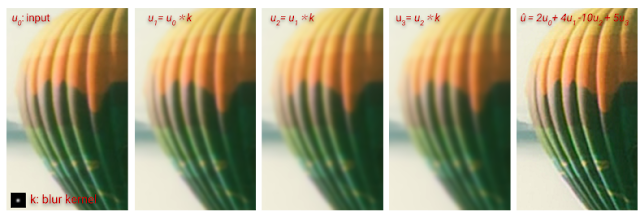

The key pitfall is that they store a complicated, moving scene in a single data structure. In particular, they encode scenes in the weights of a multilayer perceptron (MLP) neural network. MLPs can approximate any function — in this case, a function that maps a 4D space-time point (x, y, z, t) to an RGB color and density that we can use in rendering images of a scene. However, the capacity of this MLP (defined by the number of parameters in its neural network) must increase according to the video length and scene complexity, and thus, training such models on in-the-wild videos can be computationally intractable. As a result, we get blurry, inaccurate renderings like those produced by DVS and NSFF (shown below). DynIBaR avoids creating such large scene models by adopting a different rendering paradigm.

| DynIBaR (bottom row) significantly improves rendering quality compared to prior dynamic view synthesis methods (top row) for videos of complex dynamic scenes. Prior methods produce blurry renderings because they need to store the entire moving scene in an MLP data structure. |

Image-based rendering (IBR)

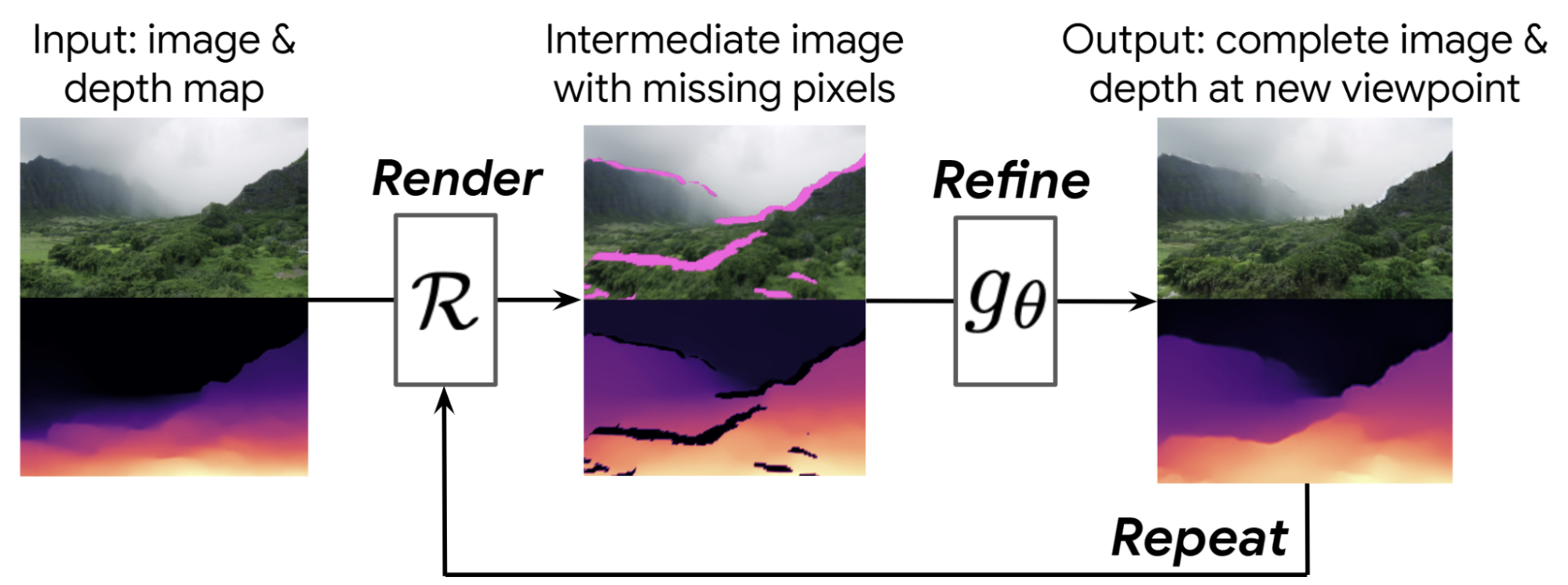

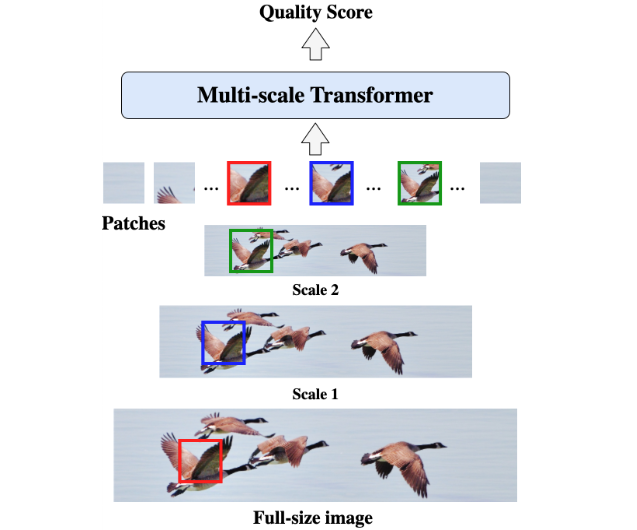

A key insight behind DynIBaR is that we don’t actually need to store all of the scene contents in a video in a giant MLP. Instead, we directly use pixel data from nearby input video frames to render new views. DynIBaR builds on an image-based rendering (IBR) method called IBRNet that was designed for view synthesis for static scenes. IBR methods recognize that a new target view of a scene should be very similar to nearby source images, and therefore synthesize the target by dynamically selecting and warping pixels from the nearby source frames, rather than reconstructing the whole scene in advance. IBRNet, in particular, learns to blend nearby images together to recreate new views of a scene within a volumetric rendering framework.
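To make the image-based rendering idea concrete, here is a minimal sketch of its two basic steps: choosing nearby source frames and blending the colors sampled from them. It is an illustration only. The function names `nearest_frames` and `blend` are hypothetical, "nearby" is assumed to mean nearby in time, and the fixed blend weights stand in for the weights that IBRNet learns with a neural network.

```python
def nearest_frames(target_time, frame_times, k):
    """Return indices of the k source frames closest in time to the target."""
    order = sorted(range(len(frame_times)),
                   key=lambda i: abs(frame_times[i] - target_time))
    return order[:k]


def blend(colors, weights):
    """Weighted average of RGB colors sampled from the source frames.

    In IBRNet the weights are predicted by a network; here they are
    passed in by hand (an assumption made for illustration).
    """
    total = sum(weights)
    return [sum(w * c[ch] for w, c in zip(weights, colors)) / total
            for ch in range(3)]


# Pick the two frames nearest to t = 2.2, then blend two sampled colors.
sources = nearest_frames(2.2, [0, 1, 2, 3, 4], k=2)
pixel = blend([(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)], weights=[3.0, 1.0])
```

Because the colors come from the input frames themselves, the representation does not need to memorize scene appearance, which is the point of the paragraph above.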

DynIBaR: Extending IBR to complex, dynamic videos

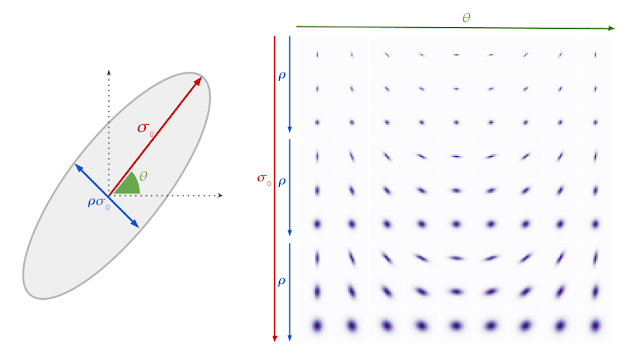

To extend IBR to dynamic scenes, we need to take scene motion into account during rendering. Therefore, as part of reconstructing an input video, we solve for the motion of every 3D point, representing scene motion with a motion trajectory field encoded by an MLP. Unlike prior dynamic NeRF methods that store the entire scene appearance and geometry in an MLP, we store only motion, a signal that is smoother and sparser, and use the input video frames to determine everything else needed to render new views.

We optimize DynIBaR for a given video by taking each input video frame, rendering rays to form a 2D image using volume rendering (as in NeRF), and comparing that rendered image to the input frame. That is, our optimized representation should be able to perfectly reconstruct the input video.
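The volume rendering step mentioned above can be sketched with the standard NeRF quadrature: each sample along a ray contributes its color, weighted by its opacity and by how much light survives the samples in front of it. The code below is a minimal, assumption-laden sketch rather than the released implementation. The names `composite_ray` and `mse` are hypothetical, and a plain mean squared error stands in for whatever reconstruction loss the actual system uses.

```python
import math


def composite_ray(densities, colors, deltas):
    """Composite samples front to back along one ray (NeRF-style quadrature).

    densities: volume density at each sample
    colors:    RGB color at each sample
    deltas:    distance between consecutive samples
    Returns the pixel color and the remaining transmittance.
    """
    transmittance = 1.0
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha          # contribution to the pixel
        pixel = [p + weight * c for p, c in zip(pixel, color)]
        transmittance *= 1.0 - alpha            # light left for later samples
    return pixel, transmittance


def mse(rendered, target):
    """Mean squared error between a rendered pixel and the input pixel."""
    return sum((r - t) ** 2 for r, t in zip(rendered, target)) / len(rendered)


# An empty sample followed by an opaque green one renders as green.
pixel, remaining = composite_ray(
    densities=[0.0, 1e9],
    colors=[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    deltas=[1.0, 1.0],
)
loss = mse(pixel, (0.0, 1.0, 0.0))
```

Optimization then amounts to adjusting the model so that this loss is small for the pixels of every input frame.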


| We illustrate how DynIBaR renders images of dynamic scenes. For simplicity, we show a 2D world, as seen from above. (a) A set of input source views (triangular camera frusta) observe a cube moving through the scene (animated square). Each camera is labeled with its timestamp (t-2, t-1, etc.). (b) To render a view from a camera at time t, DynIBaR shoots a virtual ray through each pixel (blue line), and computes colors and opacities for sample points along that ray. To compute those properties, DynIBaR projects those samples into other views via multi-view geometry, but first, we must compensate for the estimated motion of each point (dashed red line). (c) Using this estimated motion, DynIBaR moves each point in 3D to the relevant time before projecting it into the corresponding source camera, to sample colors for use in rendering. DynIBaR optimizes the motion of each scene point as part of learning how to synthesize new views of the scene. |
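Steps (b) and (c) of the figure can be sketched as follows: displace a 3D sample point by its estimated motion, then project the moved point into a source camera with a pinhole model. This is a simplified illustration under stated assumptions. The function names and the camera parameterization (`R`, `t`, `focal`, `cx`, `cy`) are hypothetical, and the displacement is passed in directly, whereas DynIBaR obtains it from its learned motion trajectory field.

```python
def project(point, R, t, focal, cx, cy):
    """Project a world-space 3D point into a pinhole camera.

    R (3x3 nested lists) and t (length 3) map world to camera coordinates.
    Returns pixel coordinates, or None if the point is behind the camera.
    """
    cam = [sum(R[i][k] * point[k] for k in range(3)) + t[i] for i in range(3)]
    if cam[2] <= 0:
        return None
    return (focal * cam[0] / cam[2] + cx, focal * cam[1] / cam[2] + cy)


def motion_adjusted_projection(point, displacement, camera):
    """Move a point to the source frame's time, then project it.

    displacement is the estimated 3D motion of the point from the target
    time to the source time (assumed given for this sketch).
    """
    moved = [p + d for p, d in zip(point, displacement)]
    return project(moved, **camera)


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
camera = {"R": identity, "t": [0.0, 0.0, 0.0],
          "focal": 100.0, "cx": 50.0, "cy": 50.0}

# A point 2 units ahead that moved 1 unit to the right between the two times.
uv = motion_adjusted_projection([0.0, 0.0, 2.0], [1.0, 0.0, 0.0], camera)
```

Without the displacement, the same point would project to the image center, so the color sampled from the source frame would come from the wrong place whenever the object has moved.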

However, reconstructing and deriving new views for a complex, moving scene is a highly ill-posed problem, since many different solutions can explain the input video. For instance, the optimization might create a disconnected 3D representation for each time step. Therefore, optimizing DynIBaR to reconstruct the input video alone is insufficient. To obtain high-quality results, we also introduce several other techniques, including a method called cross-time rendering. Cross-time rendering refers to the use of the state of our 4D representation at one time instant to render images from a different time instant, which encourages the 4D representation to be coherent over time. To further improve rendering fidelity, we automatically factorize the scene into two components, a static one and a dynamic one, modeled by time-invariant and time-varying scene representations respectively.

Creating video effects

DynIBaR enables various video effects. We show several examples below.

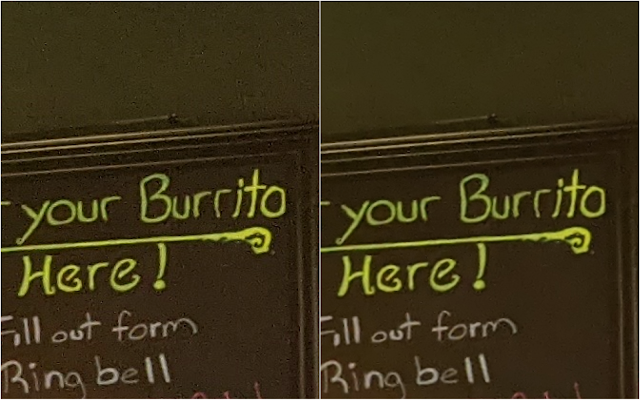

Video stabilization

We use a shaky, handheld input video to compare DynIBaR’s video stabilization performance to existing 2D video stabilization and dynamic NeRF methods, including FuSta, DIFRINT, HyperNeRF, and NSFF. We demonstrate that DynIBaR produces smoother outputs with higher rendering fidelity and fewer artifacts (e.g., flickering or blurry results). In particular, FuSta yields residual camera shake, DIFRINT produces flicker around object boundaries, and HyperNeRF and NSFF produce blurry results.

Simultaneous view synthesis and slow motion

DynIBaR can perform view synthesis in both space and time simultaneously, producing smooth 3D cinematic effects. Below, we demonstrate that DynIBaR can take video inputs and produce smooth 5X slow-motion videos rendered using novel camera paths.
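As a small illustration of what 5X slow motion implies for rendering, the sketch below lists the fractional time values at which new frames would have to be synthesized between the original ones. The function name `slow_motion_times` is hypothetical, and evenly spaced sampling is an assumption made for this example.

```python
def slow_motion_times(num_frames, factor):
    """Return evenly spaced render times covering the original frame indices.

    With factor = 5, four new time steps are inserted between each pair of
    consecutive input frames.
    """
    return [i / factor for i in range((num_frames - 1) * factor + 1)]


# Three input frames (t = 0, 1, 2) slowed down by 5X.
times = slow_motion_times(3, 5)
```

Each of these time values would be paired with a pose along the novel camera path, so space and time are interpolated together.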

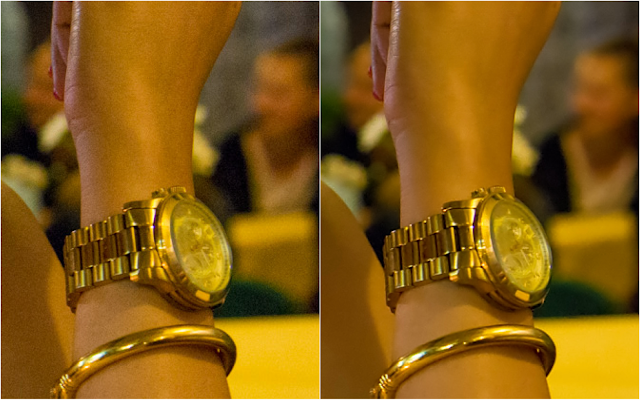

Video bokeh

DynIBaR can also generate high-quality video bokeh by synthesizing videos with dynamically changing depth of field. Given an all-in-focus input video, DynIBaR can generate high-quality output videos with varying out-of-focus regions that call attention to moving content (e.g., the running person and dog) or static content (e.g., trees and buildings) in the scene.

Conclusion

DynIBaR is a leap forward in our ability to render complex moving scenes from new camera paths. While it currently involves per-video optimization, we envision faster versions that can be deployed on in-the-wild videos to enable new kinds of effects for consumer video editing using mobile devices.

Acknowledgements

DynIBaR is the result of a collaboration between researchers at Google Research and Cornell University. The key contributors to the work presented in this post include Zhengqi Li, Qianqian Wang, Forrester Cole, Richard Tucker, and Noah Snavely.