Posted by Oscar Rodriguez, Developer Relations Engineer

With the recent launch of the Play Integrity API, more developers are now taking action to protect their games and apps from potentially risky and fraudulent interactions.

In addition to useful signals on the integrity of the app, the integrity of the device, and licensing information, the Play Integrity API offers a simple yet very useful feature called the “nonce”. When used correctly, the nonce can further strengthen the existing protections the Play Integrity API offers and mitigate certain types of attacks, such as person-in-the-middle (PITM) tampering attacks and replay attacks.

In this blog post, we will take a deeper look at what the nonce is, how it works, and how it can be used to further protect your app.

What is a nonce?

In cryptography and security engineering, a nonce (number once) is a number that is used only once in a secure communication. There are many applications for nonces, such as in authentication, encryption and hashing.

In the Play Integrity API, the nonce is an opaque base-64 encoded binary blob that you set before invoking the API integrity check, and it will be returned as-is inside the signed response of the API. Depending on how you create and validate the nonce, it is possible to leverage it to further strengthen the existing protections the Play Integrity API offers, as well as mitigate certain types of attacks, such as person-in-the-middle (PITM) tampering attacks, and replay attacks.

Apart from returning the nonce as-is in the signed response, the Play Integrity API doesn’t perform any processing of the actual nonce data, so you can set it to any arbitrary value as long as it is valid base-64. That said, the nonce is sent to Google’s servers so that the response can be digitally signed, so it is very important not to set the nonce to any type of personally identifiable information (PII), such as the user’s name, phone number, or email address.

Setting the nonce

After having set up your app to use the Play Integrity API, you set the nonce with the setNonce() method, or its appropriate variant, available in the Kotlin, Java, Unity, and Native versions of the API.

Kotlin:

val nonce: String = ...

// Create an instance of a manager.
val integrityManager =
    IntegrityManagerFactory.create(applicationContext)

// Request the integrity token by providing a nonce.
val integrityTokenResponse: Task<IntegrityTokenResponse> =
    integrityManager.requestIntegrityToken(
        IntegrityTokenRequest.builder()
            .setNonce(nonce) // Set the nonce
            .build())

Java:

String nonce = ...

// Create an instance of a manager.
IntegrityManager integrityManager =
    IntegrityManagerFactory.create(getApplicationContext());

// Request the integrity token by providing a nonce.
Task<IntegrityTokenResponse> integrityTokenResponse =
    integrityManager
        .requestIntegrityToken(
            IntegrityTokenRequest.builder()
                .setNonce(nonce) // Set the nonce
                .build());

Unity:

string nonce = ...

// Create an instance of a manager.
var integrityManager = new IntegrityManager();

// Request the integrity token by providing a nonce.
var tokenRequest = new IntegrityTokenRequest(nonce);
var requestIntegrityTokenOperation =
    integrityManager.RequestIntegrityToken(tokenRequest);

Native:

// Create an IntegrityTokenRequest object.
const char* nonce = ...
IntegrityTokenRequest* request;
IntegrityTokenRequest_create(&request);
IntegrityTokenRequest_setNonce(request, nonce); // Set the nonce
IntegrityTokenResponse* response;
IntegrityErrorCode error_code =
    IntegrityManager_requestIntegrityToken(request, &response);

Verifying the nonce

The response of the Play Integrity API is returned in the form of a JSON Web Token (JWT), whose payload is plain-text JSON in the following format:

{
  requestDetails: { ... }
  appIntegrity: { ... }
  deviceIntegrity: { ... }
  accountDetails: { ... }
}

The nonce can be found inside the requestDetails structure, which is formatted in the following manner:

requestDetails: {
  requestPackageName: "...",
  nonce: "...",
  timestampMillis: ...
}

The value of the nonce field should exactly match the one you previously passed to the API. Furthermore, since the nonce is inside the cryptographically signed response of the Play Integrity API, it is not feasible to alter its value after the response is received. It is by leveraging these properties that it is possible to use the nonce to further protect your app.

Protecting high-value operations

Let us consider the scenario in which a malicious user is interacting with an online game that reports the player score to the game server. In this case, the device is not compromised, but the user can view and modify the network data flow between the game and the server with the help of a proxy server or a VPN, so the malicious user can report a higher score, while the real score is much lower.

Simply calling the Play Integrity API is not sufficient to protect the app in this case: the device is not compromised, and the app is legitimate, so all the checks done by the Play Integrity API will pass.

However, it is possible to leverage the nonce of the Play Integrity API to protect this particular high-value operation of reporting the game score, by encoding the value of the operation inside the nonce. The implementation is as follows:

- The user initiates the high-value action.

- Your app prepares a message it wants to protect, for example, in JSON format.

- Your app calculates a cryptographic hash of the message it wants to protect, for example, with the SHA-256 or SHA3-256 hashing algorithm.

- Your app calls the Play Integrity API, and calls setNonce() to set the nonce field to the cryptographic hash calculated in the previous step.

- Your app sends both the message it wants to protect, and the signed result of the Play Integrity API to your server.

- Your app server verifies that the cryptographic hash of the message that it received matches the value of the nonce field in the signed result, and rejects any results that don't match.
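The hashing and comparison steps above can be sketched in plain Java as follows. This is a minimal illustration, not a definitive implementation: the class and method names (MessageNonce, nonceFor, matches) are hypothetical, and we assume UTF-8 message bytes, SHA-256, and URL-safe base-64 without line wrapping as the shared encoding on both client and server.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Base64;

// Hypothetical helper shared by the app and the app server.
final class MessageNonce {
    /** Hashes the message with SHA-256 and encodes the digest as URL-safe base-64. */
    public static String nonceFor(String message) {
        try {
            MessageDigest sha256 = MessageDigest.getInstance("SHA-256");
            byte[] digest = sha256.digest(message.getBytes(StandardCharsets.UTF_8));
            // The URL-safe encoder never inserts line breaks.
            return Base64.getUrlEncoder().encodeToString(digest);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 is not available", e);
        }
    }

    /** Server side: recomputes the nonce from the received message and compares it. */
    public static boolean matches(String receivedMessage, String nonceFromSignedResult) {
        byte[] expected = nonceFor(receivedMessage).getBytes(StandardCharsets.US_ASCII);
        byte[] actual = nonceFromSignedResult.getBytes(StandardCharsets.US_ASCII);
        // MessageDigest.isEqual compares without exiting early on the first mismatch.
        return MessageDigest.isEqual(expected, actual);
    }
}
```

On the device, the value returned by nonceFor(message) would be passed to setNonce(). On the server, matches() would run only after the signature of the response has been verified and the nonce field extracted from requestDetails.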

The following sequence diagram illustrates these steps:

As long as the original message to protect is sent along with the signed result, and both the server and client use the exact same mechanism for calculating the nonce, this offers a strong guarantee that the message has not been tampered with.

Notice that in this scenario, the security model works under the assumption that the attack is happening in the network, not the device or the app, so it is particularly important to also verify the device and app integrity signals that the Play Integrity API offers as well.

Preventing replay attacks

Let us consider another scenario in which a malicious user is trying to interact with a client-server app protected by the Play Integrity API, but wants to do so with a compromised device, without the server detecting it.

To do so, the attacker first uses the app with a legitimate device, and gathers the signed response of the Play Integrity API. The attacker then uses the app with the compromised device, intercepts the Play Integrity API call, and instead of performing the integrity checks, it simply returns the previously recorded signed response.

Since the signed response has not been altered in any way, the digital signature will look okay, and the app server may be fooled into thinking it is communicating with a legitimate device. This is called a replay attack.

The first line of defense against such an attack is to verify the timestampMillis field in the signed response. This field contains the timestamp when the response was created, and can be useful in detecting suspiciously old responses, even when the digital signature is verified as authentic.
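A freshness check on timestampMillis might look like the sketch below. The class name, the maximum age, and the clock-skew allowance are all hypothetical choices for illustration; pick thresholds that suit your own app.

```java
import java.time.Duration;

// Hypothetical server-side helper for rejecting stale responses.
final class VerdictFreshness {
    /**
     * Returns true when the response was created no longer than maxAge ago,
     * tolerating a small amount of clock skew between the signer and the server.
     */
    public static boolean isFresh(long timestampMillis, long nowMillis,
                                  Duration maxAge, Duration allowedSkew) {
        long ageMillis = nowMillis - timestampMillis;
        return ageMillis >= -allowedSkew.toMillis() && ageMillis <= maxAge.toMillis();
    }
}
```

For example, a server could call isFresh(timestampMillis, System.currentTimeMillis(), Duration.ofMinutes(5), Duration.ofSeconds(30)) and reject the request when it returns false.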

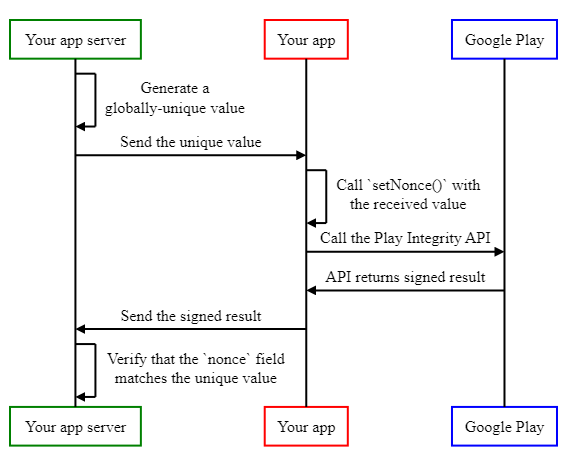

That said, it is also possible to leverage the nonce in the Play Integrity API to assign a unique value to each response, and then verify that the response matches the previously set unique value. The implementation is as follows:

- The server creates a globally unique value in a way that malicious users cannot predict, for example, a cryptographically secure random number of 128 bits or more.

- Your app calls the Play Integrity API, and sets the nonce field to the unique value received from your app server.

- Your app sends the signed result of the Play Integrity API to your server.

- Your server verifies that the nonce field in the signed result matches the unique value it previously generated, and rejects any results that don't match.
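The server side of these steps can be sketched as follows. This is a simplified, single-process illustration under stated assumptions: the class name ServerNonces and its methods are hypothetical, pending values are kept in memory, and a production server would more likely store them per user or session with an expiry.

```java
import java.security.SecureRandom;
import java.util.Base64;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical in-memory registry of unique values issued by the app server.
final class ServerNonces {
    private static final SecureRandom RANDOM = new SecureRandom();
    private final Set<String> pending = ConcurrentHashMap.newKeySet();

    /** Creates an unpredictable 128-bit value, remembers it, and returns it as URL-safe base-64. */
    public String issue() {
        byte[] bytes = new byte[16]; // 128 bits
        RANDOM.nextBytes(bytes);
        String nonce = Base64.getUrlEncoder().encodeToString(bytes);
        pending.add(nonce);
        return nonce;
    }

    /** Accepts a nonce at most once; a replayed or unknown nonce returns false. */
    public boolean consume(String nonceFromSignedResult) {
        return nonceFromSignedResult != null && pending.remove(nonceFromSignedResult);
    }
}
```

Removing the value when it is consumed is what makes a recorded response useless the second time it is presented.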

The following sequence diagram illustrates these steps:

With this implementation, each time the server asks the app to call the Play Integrity API, it does so with a different globally unique value, so as long as this value cannot be predicted by the attacker, it is not possible to reuse a previous response, as the nonce won’t match the expected value.

Combining both protections

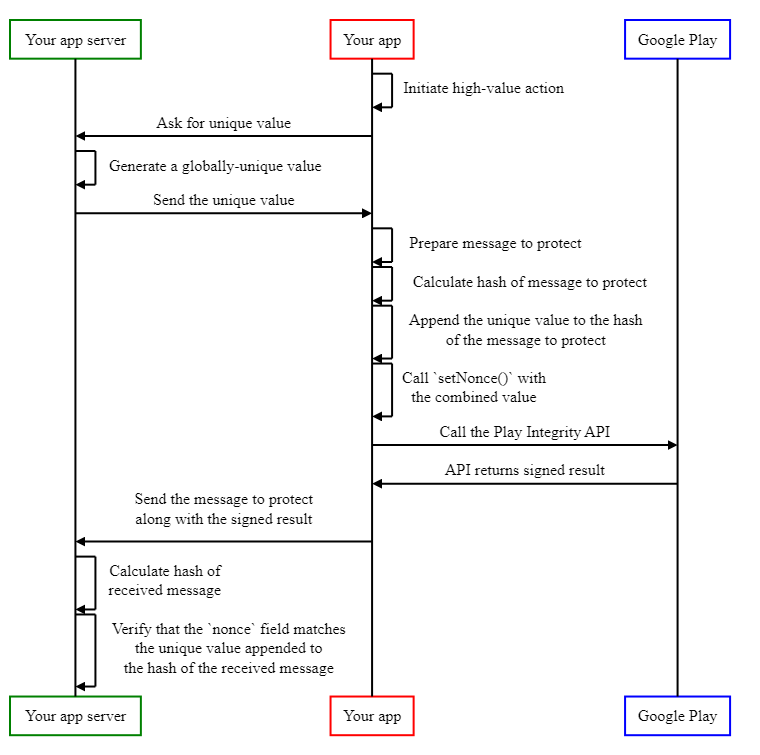

While the two mechanisms described above work in very different ways, if an app requires both protections at the same time, it is possible to combine them in a single Play Integrity API call, for example, by appending the results of both protections into a larger base-64 nonce. An implementation that combines both approaches is as follows:

- The user initiates the high-value action.

- Your app asks the server for a unique value to identify the request.

- Your app server generates a globally unique value in a way that malicious users cannot predict. For example, you may use a cryptographically-secure random number generator to create such a value. We recommend creating values 128 bits or larger.

- Your app server sends the globally unique value to the app.

- Your app prepares a message it wants to protect, for example, in JSON format.

- Your app calculates a cryptographic hash of the message it wants to protect, for example, with the SHA-256 or SHA3-256 hashing algorithm.

- Your app creates a string by appending the unique value received from your app server, and the hash of the message it wants to protect.

- Your app calls the Play Integrity API, and calls setNonce() to set the nonce field to the string created in the previous step.

- Your app sends both the message it wants to protect, and the signed result of the Play Integrity API to your server.

- Your app server splits the value of the nonce field, verifies that both the cryptographic hash of the message and the unique value it previously generated match the expected values, and rejects any results that don't match.
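One possible way to build and split such a combined nonce is sketched below. The layout is an assumption made for illustration, not something the API prescribes: a 16-byte unique value followed by a 32-byte SHA-256 hash, concatenated as raw bytes and then encoded as URL-safe base-64. The class and method names are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Arrays;
import java.util.Base64;

// Hypothetical helper: nonce = base64url(uniqueValue || SHA-256(message)).
final class CombinedNonce {
    static final int UNIQUE_LEN = 16; // 128-bit unique value from the server
    static final int HASH_LEN = 32;   // SHA-256 digest length

    static byte[] sha256(String message) {
        try {
            return MessageDigest.getInstance("SHA-256")
                    .digest(message.getBytes(StandardCharsets.UTF_8));
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 is not available", e);
        }
    }

    /** App side: appends the message hash to the server's unique value. */
    public static String build(byte[] uniqueValue, String message) {
        if (uniqueValue.length != UNIQUE_LEN) {
            throw new IllegalArgumentException("unique value must be " + UNIQUE_LEN + " bytes");
        }
        byte[] combined = Arrays.copyOf(uniqueValue, UNIQUE_LEN + HASH_LEN);
        System.arraycopy(sha256(message), 0, combined, UNIQUE_LEN, HASH_LEN);
        return Base64.getUrlEncoder().encodeToString(combined);
    }

    /** Server side: splits the nonce and checks both halves against the expected values. */
    public static boolean verify(String nonce, byte[] expectedUnique, String receivedMessage) {
        byte[] combined;
        try {
            combined = Base64.getUrlDecoder().decode(nonce);
        } catch (IllegalArgumentException e) {
            return false; // not valid base-64
        }
        if (combined.length != UNIQUE_LEN + HASH_LEN) {
            return false;
        }
        byte[] unique = Arrays.copyOfRange(combined, 0, UNIQUE_LEN);
        byte[] hash = Arrays.copyOfRange(combined, UNIQUE_LEN, combined.length);
        // Non-short-circuit '&' so both comparisons always run.
        return MessageDigest.isEqual(unique, expectedUnique)
                & MessageDigest.isEqual(hash, sha256(receivedMessage));
    }
}
```

Fixed-length fields make the split unambiguous. As in the replay scenario, the server would also need to make sure each unique value is accepted only once.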

The following sequence diagram illustrates these steps:

These are some examples of ways you can use the nonce to further protect your app against malicious users. If your app handles sensitive data, or is vulnerable to abuse, we hope you consider taking action to mitigate these threats with the help of the Play Integrity API.

To learn more about using the Play Integrity API and to get started, visit the documentation at g.co/play/integrityapi.

Posted by Aditya Pathak – Product Manager, Google Play

Posted by Kylie McRoberts, Program Manager and Alec Guertin, Security Engineer
