Following our recent Google I/O announcement recommending Kotlin Multiplatform (KMP) for sharing business logic across mobile, web, server, and desktop platforms, and our move to KMP in Google Workspace, KotlinConf 2024 was our next opportunity to share highlights and connect with the Kotlin community.

Kotlin Multiplatform, developed by JetBrains, allows developers to build cross-platform apps by compiling Kotlin code into platform-native binaries while leveraging the full capabilities of a modern, memory-managed language. This approach has been a long-term investment for the Google Workspace team, enabling them to share business logic across platforms.
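In practice, "sharing business logic" usually means keeping code that depends only on the Kotlin standard library in a common source set (such as `commonMain`), so it can be compiled for every target. The following is a minimal, illustrative sketch rather than code from Google Workspace: `Clock`, `Task`, and `TaskRules` are hypothetical names, and the platform-specific piece is injected through an interface instead of KMP's `expect`/`actual` mechanism, so the same file also runs as ordinary Kotlin/JVM code.

```kotlin
// Hypothetical example of platform-independent business logic.
// It uses only the Kotlin standard library, so it could live in a KMP
// common source set and be compiled for Android, iOS, server, web, and desktop.

/** Platform-specific capability, assumed here to be supplied by each platform via an interface. */
interface Clock {
    fun nowEpochMillis(): Long
}

/** A simple data model shared by all platforms. */
data class Task(val title: String, val dueEpochMillis: Long)

/** Shared rules: the same logic runs unchanged on every target. */
class TaskRules(private val clock: Clock) {
    // A task is overdue if its due time is strictly before "now".
    fun isOverdue(task: Task): Boolean = task.dueEpochMillis < clock.nowEpochMillis()

    // Titles of all overdue tasks, sorted alphabetically.
    fun overdueTitles(tasks: List<Task>): List<String> =
        tasks.filter { isOverdue(it) }.map { it.title }.sorted()
}

fun main() {
    // A fixed clock stands in for a real platform implementation.
    val fixedClock = object : Clock {
        override fun nowEpochMillis(): Long = 1_000L
    }
    val rules = TaskRules(fixedClock)
    val tasks = listOf(Task("b", 500L), Task("a", 200L), Task("c", 2_000L))
    println(rules.overdueTitles(tasks)) // prints [a, b]
}
```

Each platform would then provide its own `Clock` implementation, while the rules themselves are written and tested once.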

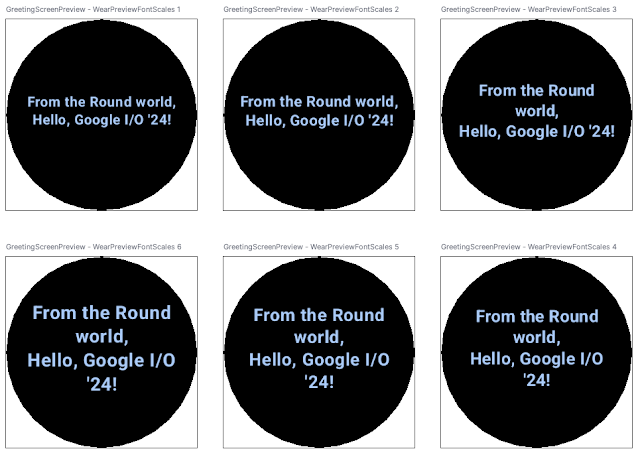

The Android team has been working to support KMP and recently released an alpha version of Room with KMP support. As of today, Annotations, Collections, and DataStore are already stable with KMP support. We've also commonified the Lifecycle, ViewModel, and Paging libraries to allow integrations with non-Android platforms.

Keynotes and Technical Sessions

The conference kicked off with a keynote in which Google's Jeffrey van Gogh gave an overview of Google's contributions to the Kotlin ecosystem. Jeffrey also delved into how Google leverages Kotlin Multiplatform (KMP) to streamline development across its own product portfolio, highlighting the code sharing and efficiency benefits that KMP brings to Google's projects, in line with our recent recommendations for Android app development.

Our technical sessions at KotlinConf 2024 spanned a range of topics:

- A Tale of Two Languages by John Pampuch offered an engaging comparison of the evolution of Java and Kotlin, highlighting their symbiotic relationship and mutual influence.

- The Android Jetpack team, represented by Elif Bilgin, Yigit Boyar, and Daniel Santiago Rivera, presented Enabling Kotlin Multiplatform Success: The Android Jetpack Journey. They provided insights into the current state of KMP in Jetpack, shared updates on KMP-enabled Jetpack libraries, and walked through the migration of a well-established Jetpack library to KMP.

- Going Fast with Kotlin by Andrei Shikov shared valuable insights gained from optimizing Compose for Android. Andrei highlighted interesting performance nuances in Kotlin and the guardrails the Compose team established to ensure optimal performance.

- Kotlin Multiplatform in Google Workspace by Jason Parachoniak discussed Google Workspace's ongoing migration from a Java-oriented multiplatform foundation to Kotlin Multiplatform, aligning with Google's broader adoption of KMP. Jason shared lessons learned and the current state of this ambitious transition.

- Write Your Own Kotlin Lint Checks! by Tor Norbye, Android Studio Engineering Director, empowered developers to extend Android Lint, a static analysis tool used by millions, by creating their own checks. Despite the name, Android Lint is not actually Android-specific; it's also used to analyze server-side Kotlin and Java code inside Google!

Community Engagement at KotlinConf

We are always looking for ways to engage actively with the Kotlin community. If you attended KotlinConf, we hope you got a chance to visit our booth, where you could chat with our engineers, get your questions answered, and learn more about how to leverage Kotlin and KMP.

Learn more about KMP

In addition, you can view updated docs and a new mobile sample for KMP. These resources should have what you need to start learning KMP. If you have any feedback or come across any issues, please share them through this link.

Looking Ahead

We are excited about the future of Kotlin and are planning to add KMP support to more AndroidX libraries. We look forward to seeing how you adopt KMP and use it to build the next generation of apps.

Thanks to the KotlinConf organizers, speakers, attendees, and the entire Kotlin community for making this event happen and bringing Kotlin enthusiasts together.

Posted by Murat Yener – Developer Relations Engineer
