Reducing the Computational Cost of Deep Reinforcement Learning Research

It is widely accepted that the enormous growth of deep reinforcement learning research, which combines traditional reinforcement learning with deep neural networks, began with the publication of the seminal DQN algorithm. This paper demonstrated the potential of this combination, showing that it could produce agents that could play a number of Atari 2600 games very effectively. Since then, there have been several approaches that have built on and improved the original DQN. The popular Rainbow algorithm combined a number of these recent advances to achieve state-of-the-art performance on the ALE benchmark. This advance, however, came at a very high computational cost, which has the unfortunate side effect of widening the gap between those with ample access to computational resources and those without.

In “Revisiting Rainbow: Promoting more Insightful and Inclusive Deep Reinforcement Learning Research”, to be presented at ICML 2021, we revisit this algorithm on a set of small- and medium-sized tasks. We first discuss the computational cost associated with the Rainbow algorithm. We explore how the same conclusions regarding the benefits of combining the various algorithmic components can be reached with smaller-scale experiments, and further generalize that idea to how research done on a smaller computational budget can provide valuable scientific insights.

The Cost of Rainbow

A major reason for the computational cost of Rainbow is that the standards in academic publishing often require evaluating new algorithms on large benchmarks like ALE, which consists of 57 Atari 2600 games that reinforcement learning agents may learn to play. For a typical game, it takes roughly five days to train a model using a Tesla P100 GPU. Furthermore, if one wants to establish meaningful confidence bounds, it is common to perform at least five independent runs. Thus, training Rainbow on the full suite of 57 games required around 34,200 GPU hours (or 1,425 days) in order to provide convincing empirical performance statistics. In other words, such experiments are only feasible if one is able to train on multiple GPUs in parallel, which can be prohibitive for smaller research groups.
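The estimate above is straightforward arithmetic. As a quick check, the sketch below recomputes it from the figures quoted in this post; the constant and function names are ours, chosen only for illustration.

```python
# Figures quoted in the paragraph above.
NUM_GAMES = 57       # games in the ALE benchmark
DAYS_PER_RUN = 5     # approximate training time for one game on one Tesla P100
RUNS_PER_GAME = 5    # independent runs used to establish confidence bounds
HOURS_PER_DAY = 24


def rainbow_gpu_cost(num_games=NUM_GAMES,
                     days_per_run=DAYS_PER_RUN,
                     runs_per_game=RUNS_PER_GAME):
    """Return (gpu_days, gpu_hours) for training every run on a single GPU."""
    gpu_days = num_games * days_per_run * runs_per_game
    return gpu_days, gpu_days * HOURS_PER_DAY


gpu_days, gpu_hours = rainbow_gpu_cost()
print(f"{gpu_days} GPU days, {gpu_hours} GPU hours")
```

Changing `days_per_run` to a fraction of a day gives a rough sense of how much cheaper the same protocol becomes on faster environments.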

Revisiting Rainbow

As in the original Rainbow paper, we evaluate the effect of adding the following components to the original DQN algorithm: double Q-learning, prioritized experience replay, dueling networks, multi-step learning, distributional RL, and noisy nets.

We evaluate on a set of four classic control environments, which can be fully trained in 10-20 minutes (compared to five days for ALE games):

Upper left: In CartPole, the task is to balance a pole on a cart that the agent can move left and right. Upper right: In Acrobot, there are two arms and two joints, where the agent applies force to the joint between the two arms in order to raise the lower arm above a threshold. Lower left: In LunarLander, the agent is meant to land the spaceship between the two flags. Lower right: In MountainCar, the agent must build up momentum between two hills to drive to the top of the rightmost hill.

We investigated the effect of both independently adding each of the components to DQN, as well as removing each from the full Rainbow algorithm. As in the original Rainbow paper, we find that, in aggregate, the addition of each of these components does improve learning over the base DQN. However, we also found some important differences: distributional RL, commonly thought to be a positive addition on its own, does not always yield improvements by itself. Indeed, in contrast to the ALE results in the Rainbow paper, in the classic control environments, distributional RL only yields an improvement when combined with another component.
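The experimental design described above (add each component to DQN, and remove each from Rainbow) can be sketched as a small enumeration of agent variants. This is an illustrative sketch only: the component identifiers and variant names below are hypothetical labels, not names taken from the paper's code.

```python
# Hypothetical identifiers for the six components listed in this post.
COMPONENTS = (
    "double_q",
    "prioritized_replay",
    "dueling",
    "multi_step",
    "distributional",
    "noisy_nets",
)


def additive_variants(components=COMPONENTS):
    """DQN plus exactly one component, for each component."""
    return {f"dqn+{c}": frozenset({c}) for c in components}


def ablation_variants(components=COMPONENTS):
    """Full Rainbow minus exactly one component, for each component."""
    full = frozenset(components)
    return {f"rainbow-{c}": full - {c} for c in components}


def all_variants(components=COMPONENTS):
    """Baselines (DQN, Rainbow) plus all additive and ablation variants."""
    variants = {"dqn": frozenset(), "rainbow": frozenset(components)}
    variants.update(additive_variants(components))
    variants.update(ablation_variants(components))
    return variants


for name, enabled in all_variants().items():
    print(f"{name}: {sorted(enabled)}")
```

With six components this yields 14 agent variants per environment, which is only practical to run with several seeds when each run takes minutes rather than days.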

Each plot shows the training progress when adding the various components to DQN. The x-axis is training steps, the y-axis is performance (higher is better).

Each plot shows the training progress when removing the various components from Rainbow. The x-axis is training steps, the y-axis is performance (higher is better).

We also re-ran the Rainbow experiments on the MinAtar environment, which consists of a set of five miniaturized Atari games, and found qualitatively similar results. The MinAtar games are roughly 10 times faster to train than the regular Atari 2600 games on which the original Rainbow algorithm was evaluated, but still share some interesting aspects, such as game dynamics and having pixel-based inputs to the agent. As such, they provide a challenging mid-level environment, in between the classic control and the full Atari 2600 games.

When viewed in aggregate, we find our results to be consistent with those of the original Rainbow paper — the impact resulting from each algorithmic component can vary from environment to environment. If we were to suggest a single agent that balances the tradeoffs of the different algorithmic components, our version of Rainbow would likely be consistent with the original, in that combining all components produces a better overall agent. However, there are important details in the variations of the different algorithmic components that merit a more thorough investigation.

Beyond the Rainbow

When DQN was introduced, it made use of the Huber loss and the RMSProp optimizer. It has been common practice for researchers to use these same choices when building on DQN, as most of their effort is spent on other algorithmic design decisions. In the spirit of reassessing these assumptions, we revisited the loss function and optimizer used by DQN on the lower-cost, small-scale classic control and MinAtar environments. We ran some initial experiments using the Adam optimizer, which has lately been the most popular optimizer choice, combined with a simpler loss function, the mean-squared error loss (MSE). Since the selection of optimizer and loss function is often overlooked when developing a new algorithm, we were surprised to observe a dramatic improvement on all the classic control and MinAtar environments.
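For readers unfamiliar with the two losses, the following minimal sketch contrasts them on a batch of temporal-difference errors. It uses the standard textbook definitions; the function names and the threshold `delta = 1.0` are assumptions made for illustration and are not settings taken from the paper.

```python
def huber_loss(td_errors, delta=1.0):
    """Mean Huber loss: quadratic for small errors, linear beyond `delta`."""
    total = 0.0
    for err in td_errors:
        abs_err = abs(err)
        if abs_err <= delta:
            total += 0.5 * abs_err ** 2
        else:
            total += delta * (abs_err - 0.5 * delta)
    return total / len(td_errors)


def mse_loss(td_errors):
    """Mean-squared error: quadratic everywhere."""
    return sum(err ** 2 for err in td_errors) / len(td_errors)


# A small error and a large error, to show where the two losses diverge.
for err in (0.5, 3.0):
    print(f"error={err}: huber={huber_loss([err])}, mse={mse_loss([err])}")
```

The Huber loss grows only linearly for large errors, which limits the size of the resulting gradients, while MSE penalizes large errors quadratically.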

We thus decided to evaluate the different ways of combining the two optimizers (RMSProp and Adam) with the two losses (Huber and MSE) on the full ALE suite (60 Atari 2600 games). We found that Adam+MSE is superior to RMSProp+Huber.

Measuring the improvement Adam+MSE gives over the default DQN settings (RMSProp + Huber); higher is better.

Additionally, when comparing the various optimizer-loss combinations, we find that when using RMSProp, the Huber loss tends to perform better than MSE (illustrated by the gap between the solid and dotted orange lines).

Normalized scores aggregated over all 60 Atari 2600 games, comparing the different optimizer-loss combinations.

Conclusion

On a limited computational budget we were able to reproduce, at a high level, the findings of the Rainbow paper and uncover new and interesting phenomena. Evidently it is much easier to revisit something than to discover it in the first place. Our intent with this work, however, was to argue for the relevance and significance of empirical research on small- and medium-scale environments. We believe that these less computationally intensive environments lend themselves well to a more critical and thorough analysis of the performance, behaviors, and intricacies of new algorithms.

We are by no means calling for less emphasis to be placed on large-scale benchmarks. We are simply urging researchers to consider smaller-scale environments as a valuable tool in their investigations, and reviewers to avoid dismissing empirical work that focuses on smaller-scale environments. By doing so, in addition to reducing the environmental impact of our experiments, we will both get a clearer picture of the research landscape and reduce the barriers for researchers from diverse and often underresourced communities, which can only help make our community and scientific advances stronger.

Acknowledgments

Thank you to Johan, the first author of this paper, for his hard work and persistence in seeing this through! We would also like to thank Marlos C. Machado, Sara Hooker, Matthieu Geist, Nino Vieillard, Hado van Hasselt, Eleni Triantafillou, and Brian Tanner for their insightful comments on this work.

Source: Google AI Blog

Dev Channel Update for Desktop

The Dev channel has been updated to 93.0.4573.0 for Windows, Linux and Mac.

A partial list of changes is available in the log. Interested in switching release channels? Find out how. If you find a new issue, please let us know by filing a bug. The community help forum is also a great place to reach out for help or learn about common issues.

Prudhvikumar Bommana

Source: Google Chrome Releases

How working at Google allows me to keep giving back

I was born in a small town in the South of France to an Algerian dad and a Vietnamese mom. Like many kids from immigrant families, I took school seriously because I saw success in the classroom as a way to fit in.

I was incredibly lucky to have teachers in high school who spent extra hours after school pushing me and surfaced opportunities I wouldn’t have heard of otherwise. Without them, I probably would have settled for less. Instead I’m the first in my family to go to university. The helping hand I got from them growing up is what motivates me today to find opportunities to give back, and thanks to Google there are plenty of ways for me to do that at work.

Vanessa, third from right, with other Google.org Fellows and the team at Generation, a nonprofit that helps job seekers get placed into life-changing careers.

Giving back at Google

I’ve been at Google for five years, and currently work in strategy and operations in London. Last year, I learned about the Google.org Fellowship, where Googlers could spend up to 6 months working full-time, pro bono for a nonprofit. When I saw that Generation — an organization with the mission to prepare and place people into life-changing careers — was one of the nonprofits looking for Fellows, I knew I wanted to participate.

Generation focuses on providing training and support to underserved jobseekers from diverse and low-income backgrounds. They’ve found that with the right skills, non-traditional candidates can be a boon for employers — in fact 84% of employers say that graduates from Generation programs outperform their peers.

However, innate biases still exist in recruitment that overlook talented and qualified people from nontraditional backgrounds. In France, for example, the first filters recruiters apply when looking for job candidates are often where someone went to school and their degree. Working with Generation, we wanted to figure out how to surface alternative applicants in order to give them a chance to be seen and considered.

Three other Fellows and I worked with the Generation team to design a “reverse job board” that advertised the candidate rather than the job. This would help ensure each jobseeker was seen as a top-notch candidate, rather than an alternative choice. We then conducted employer research for feedback. The Generation team springboarded off that work to build the portal, which launched as a pilot in Spain in March 2021. As the tool becomes more sophisticated and more jobseeker profiles are added, Generation plans to launch it globally.

The Generation Employer Portal that Vanessa and other Google.org Fellows helped build.

Keeping the culture of giving back going

My fellowship with Generation ended when COVID-19 grew into a global pandemic. I was shocked by the scale of the crisis and knew I wanted to do anything I could to help. In April 2020, I volunteered to lead Google.org’s UK COVID taskforce to help businesses and charities impacted by the pandemic. We brought together Googlers across the UK who wanted to help, and spent more than 2,700 hours volunteering across 100 projects for 50 charities.

To keep that spirit of giving back going beyond the pandemic, I created an employee group called Giving Back UK to encourage Googlers to spend time volunteering. This year for GoogleServe, our company-wide volunteering campaign that takes place every summer, I’ve helped create more than 500 volunteering opportunities. As for me, I’ll be spending my time working with Hatch, a nonprofit organization that supports entrepreneurs from underrepresented groups to develop the skills and knowledge they need to grow their businesses.

Being able to bring a positive impact to others is incredibly rewarding — and I love being able to encourage others to do the same.

Source: The Official Google Blog

Android Game Development Extension is now available to all Android game developers

After more than a year in closed beta, we are happy to announce that Android Game Development Extension (AGDE) is now available for all game developers to download. This milestone release of Game Tools from the Android Studio team meets game developers where they are; AGDE adds Android as a platform target to Microsoft Visual Studio, making it easier to target Android with existing multi-platform Visual Studio game projects.

AGDE is part of the Android Game Development Kit, which includes both libraries and tools that support making great games on Android. AGDE is best suited for game developers that develop primarily on Microsoft Windows using Visual Studio to write C/C++ code. Game developers that do not fall under these criteria, but are using C/C++, should use Android Studio to develop for Android.

Alongside the release of AGDE 2021.1, we recently published case studies on how our partners, Epic Games and Electronic Arts, found success using AGDE.

We built AGDE as part of our effort to address the issues game developers face in targeting Android with their cross-platform workflows. At the top of the list of issues was developers’ preference to remain in a single IDE instead of maintaining multiple projects for different platforms. AGDE enables this for game developers using Visual Studio by removing the need to switch between IDEs when switching between platforms. In addition, we wanted to solve pain points around existing Visual Studio tools for Android that are often dated or suffer from integration issues. Our team is committed to having AGDE support the latest versions of the Android SDK and NDK, as well as providing updated tools easily accessible from Visual Studio. Finally, we wanted to bring you quick access to some of the most useful Android Studio capabilities, built into AGDE. Therefore, we invested in creating seamless integrations with our most popular tools, such as Studio profilers, logcat, and the Android SDK and device manager. Overall, these features are designed to make you more productive in your day-to-day game development workflow.

Build with AGDE

After downloading and installing AGDE in a Visual Studio project, you can treat Android development as you would any other platform.

- AGDE integrates with MSBuild to compile and link C++ code for Android.

- Project build settings are configured using the standard Visual Studio property system. After the MSBuild process, AGDE uses Gradle to complete the build and package the project. This Gradle stage can be used to integrate Android libraries containing Java or Kotlin code into the final application bundle.

- The Android SDK manager provides access to additional tools and frameworks to assist with building Android games.

- The Android Virtual Device (AVD) manager allows you to launch directly into emulator snapshots so that you can have a repeatable test environment.

Debug with AGDE

AGDE supports deploying to, running on, and debugging with both an Android emulator and a physical device. Debug sessions run inside Visual Studio, using its standard interface for breakpoints, tracing and variable inspection.

- AGDE interfaces with LLDB for debugging support.

- Register views and disassembly of native code allow you to set a breakpoint and step right into the disassembly of your OpenGL. The assembly view shows the assembly in-line with the current C++, allowing you to step into or over each instruction as it is executed. This is useful for building context and understanding what is running on your device.

- The memory view shows the current values within a block of memory. As we step through the running game, AGDE in Visual Studio automatically highlights the areas of memory that have changed. In the screenshot below we show where in memory the view matrix has changed, as indicated by the red text.

- Sometimes when debugging isn’t enough to figure out what is going on, we know that having access to the logs can be helpful to dig deeper. The logcat tool allows for searching and filtering logs to pinpoint exactly the data you want.

Profile with AGDE

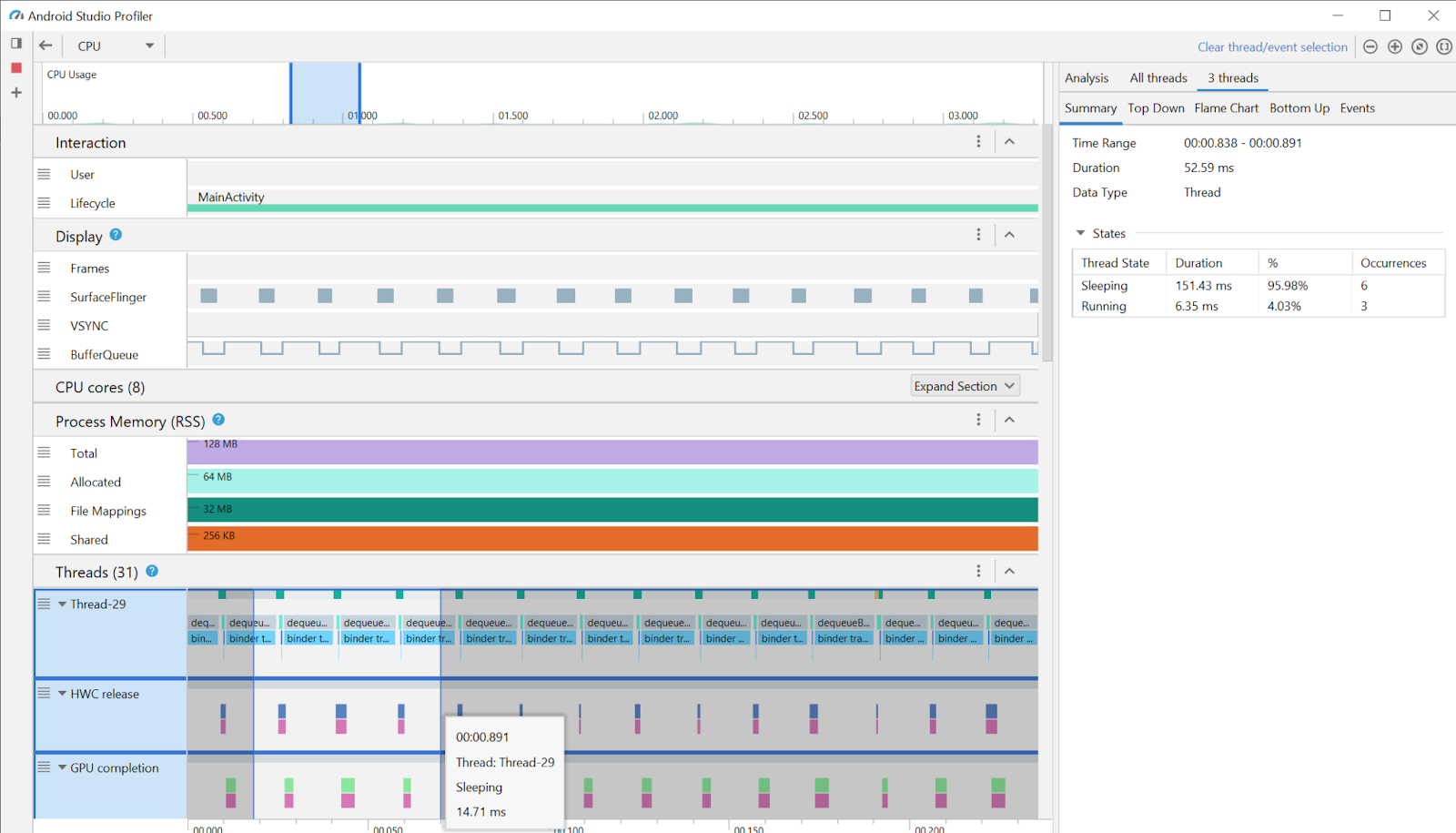

AGDE integrates with a standalone version of Android Studio Profilers. This profiler can be launched from Visual Studio and attached to a running game session.

- The Android Studio Profilers display real time usage statistics for CPU, memory, network, and energy.

- We added support for native memory sampling. Now you can better understand where your memory is going and how to optimize your game for a broader reach of devices.

Integrations

We know everyone has a unique build setup and there is no “one-size-fits-all” solution. That is why we are investing in making AGDE compatible with various tools commonly used by game developers.

- We partnered with Epic Games to integrate with Unreal Engine (UE 4.26.1+) to provide a seamless Android experience for Unreal Engine game developers.

- We are working with Sony Distributed Build System (SN-DBS) to enable SN-DBS users to leverage the power of distributed builds for Android with AGDE (coming soon).

- AGDE is compatible with Incredibuild, a distributed build tool.

Getting started

Download AGDE 2021.1 and see our documentation for additional details. To help you get to know AGDE quickly, we put together a few samples that demonstrate different ways you can use AGDE to configure your project.

Visual Studio IntelliSense features are compatible with AGDE. All current Android CPU architectures are supported: both ARM and Intel in 32-bit and 64-bit.

We appreciate any feedback on things you like, and issues or features you would like to see. If you find a bug or issue, feel free to file an issue. Learn more about Android game development, and follow us, the Android Studio development team, on Twitter and on Medium.

Microsoft and Visual Studio are trademarks of the Microsoft group of companies.

Source: Android Developers Blog

Android Game Development Extension is now available to all Android game developers

After more than a year in closed beta, we are happy to announce that Android Game Development Extension (AGDE) is now available for all game developers to download. This milestone release of Game Tools from the Android Studio team meets game developers where they are; AGDE adds Android as a platform target to Microsoft Visual Studio, making it easier to target Android with existing multi-platform Visual Studio game projects.

AGDE is part of the Android Game Development Kit, which includes both libraries and tools that support making great games on Android. AGDE is best suited for game developers that develop primarily on Microsoft Windows using Visual Studio to write C/C++ code. Game developers that do not fall under these criteria, but are using C/C++, should use Android Studio to develop for Android.

Alongside the release of AGDE 2021.1, we recently published case studies on how our partners, Epic Games and Electronic Arts found success using AGDE.

We built AGDE as part of our effort to address game developers facing issues in targeting Android with their cross-platform workflows. At the top of the list of issues was developers’ preference to remain in a single IDE instead of maintaining multiple projects for different platforms. AGDE enables this for game developers using Visual Studio by removing the need to switch between IDEs when switching between platforms. In addition, we wanted to solve pain points around existing Visual Studio tools for Android that are often dated or suffer from integration issues. Our team is committed to having AGDE support the latest versions of the Android SDK, and NDK as well as providing updated tools easily accessible from Visual Studio. Finally, we wanted to bring you quick access to some of the most useful Android Studio capabilities, built into AGDE. Therefore, we invested in creating seamless integrations to our most popular tools, such as Studio profilers, logcat, and the Android SDK and device manager. Overall, these features are designed to make you more productive in your day-to-day game development workflow.

Build with AGDE

After downloading and installing AGDE in a Visual Studio project, you can treat Android development as you would any other platform.

- AGDE integrates with MSBuild to compile and link C++ code for Android.

- Project build settings are configured using the standard Visual Studio property system. After the MSBuild process, AGDE uses Gradle to complete the build and package the project. This Gradle stage can be used to integrate Android libraries containing Java or Kotlin code into the final application bundle.

- The Android SDK manager provides access to additional tools and frameworks to assist with building Android games.

- The Android Virtual Device (AVD) manager allows you to launch directly into emulator snapshots so that you can have a repeatable test environment.

Debug with AGDE

AGDE supports deploying to, running on, and debugging with both an Android emulator and a physical device. Debug sessions run inside Visual Studio, using its standard interface for breakpoints, tracing and variable inspection.

- AGDE interfaces with LLDB for debugging support.

- Register views, and disassembly of native code allow you to set a breakpoint, and step right into the disassembly of your OpenGL. The assembly view shows the assembly in-line with the current C++, allowing you to step into or over each instruction as they are executed. This is useful for building context and understanding what is running on your device.

- The memory view shows the current values within a block of memory. As we step through the running game, AGDE in Visual Studio automatically highlights the areas of memory that have changed. In the screenshot below we show where in memory the view matrix has changed, as indicated by the red text.

- Sometimes when debugging isn’t enough to figure out what is going on, we know that having access to the logs can be helpful to dig deeper. The logcat tool allows for searching and filtering logs to pinpoint exactly the data you want.

Profile with AGDE

AGDE integrates with a standalone version of Android Studio Profilers. This profiler can be launched from Visual Studio and attached to a running game session.

- The Android Studio Profilers display real time usage statistics for CPU, memory, network, and energy.

- We added support for native memory sampling. Now you can better understand where your memory is going and how to optimize your game for a broader reach of devices.

Integrations

We know everyone has a unique build setup and there is no “one-size-fits-all” solution. That is why we are investing in making AGDE compatible with various tools commonly used by game developers.

- We partnered with Epic Games to integrate with Unreal Engine (UE 4.26.1+) to provide a seamless Android experience for Unreal Engine game developers.

- We are working with Sony Distributed Build System (SN-DBS) to enable SN-DBS users to leverage the power of distributed builds for Android with AGDE (coming soon).

- AGDE is compatible with Incredibuild, a distributed build tool.

Getting started

Download AGDE 2021.1 and see our documentation for additional details. To help you get to know AGDE quickly, we put together a few samples that demonstrate different ways you can use AGDE to configure your project.

Visual Studio IntelliSense features are compatible with AGDE. All current Android CPU architectures are supported: both ARM and Intel in 32-bit and 64-bit.

We appreciate any feedback on things you like, and issues or features you would like to see. If you find a bug or issue, feel free to file an issue. Learn more about Android game development, and follow us, the Android Studio development team, on Twitter and on Medium.

Microsoft and Visual Studio are trademarks of the Microsoft group of companies.

Source: Android Developers Blog

Enhanced desktop security for Windows is now available for Google Workspace Business Plus customers

Quick launch summary

Google Workspace Business Plus customers can now manage and secure Windows devices through the Admin console, just as they do for Android, iOS, Chrome, and Jamboard devices. Business Plus admins can now:

- Set Windows policies in the Admin console to ensure that all Windows 10 devices used to access Workspace are updated, secure, and in compliance with organizational policies.

- Perform admin actions, such as wiping a device and pushing device configuration updates, on Windows 10 devices from the cloud without connecting to the corporate network.

See our previous announcement for more details on the Windows 10 management features and benefits and the Help Center to learn more about enhanced desktop security for Windows.

Getting started

- Admins: Use our Help Center to learn how to enroll a device in Windows device management, how to enable enhanced desktop security for Windows, and how to manage Windows devices.

- Within Workspace and Cloud Identity, multiple devices per user can be managed at no additional cost.

Rollout pace

- This feature is available now.

Resources

- Google Workspace Admin Help: Overview: Google Credential Provider for Windows (GCPW)

- Google Workspace Admin Help: Manage Windows 10 devices

- Google Workspace Admin Help: Enhanced desktop security for Windows

- Cloud Blog: 6 new device, data, and user controls to help G Suite customers stay secure

Source: Google Workspace Updates

Easily navigate through the Admin console using the updated left-hand navigation bar

Quick summary

Getting started

- Admins: This feature will be available by default.

- End users: There is no end user impact.

Rollout pace

- Rapid Release and Scheduled Release domains: Full rollout (1–3 days for feature visibility) starting on July 13, 2021

Availability

- Available to all Google Workspace customers, as well as G Suite Basic and Business customers

Posted by Lily Rapaport, Product Manager
