Posted by Tarun Jain, Group PM, Actions on Google

The Google Assistant helps you get things done across the devices you have at your side throughout your day--a bedside smart speaker, your mobile device while on the go, or even your kitchen Smart Display when winding down in the evening.

One of the most common questions we get from developers is: how do I create a seamless path for users to complete purchases across all these types of devices? Another is: how can I better personalize my experience for users on the Assistant with privacy in mind?

Today, we're making both easier for developers with support for digital goods and subscriptions, and Google Sign-In for the Assistant. We're also giving the Google Assistant a complete makeover on mobile phones, enabling developers to create even more visually rich integrations.

Start earning money with premium experiences for your users

While we've offered transactions for physical goods for some time, starting today you will also be able to offer digital goods directly within your Action, including one-time purchases like upgrades--expansion packs or new levels, for example--and even recurring subscriptions.

Users can complete these transactions while in conversation with your Action through speakers, phones, and Smart Displays. This will be supported in the U.S. to start, with more locales coming soon.

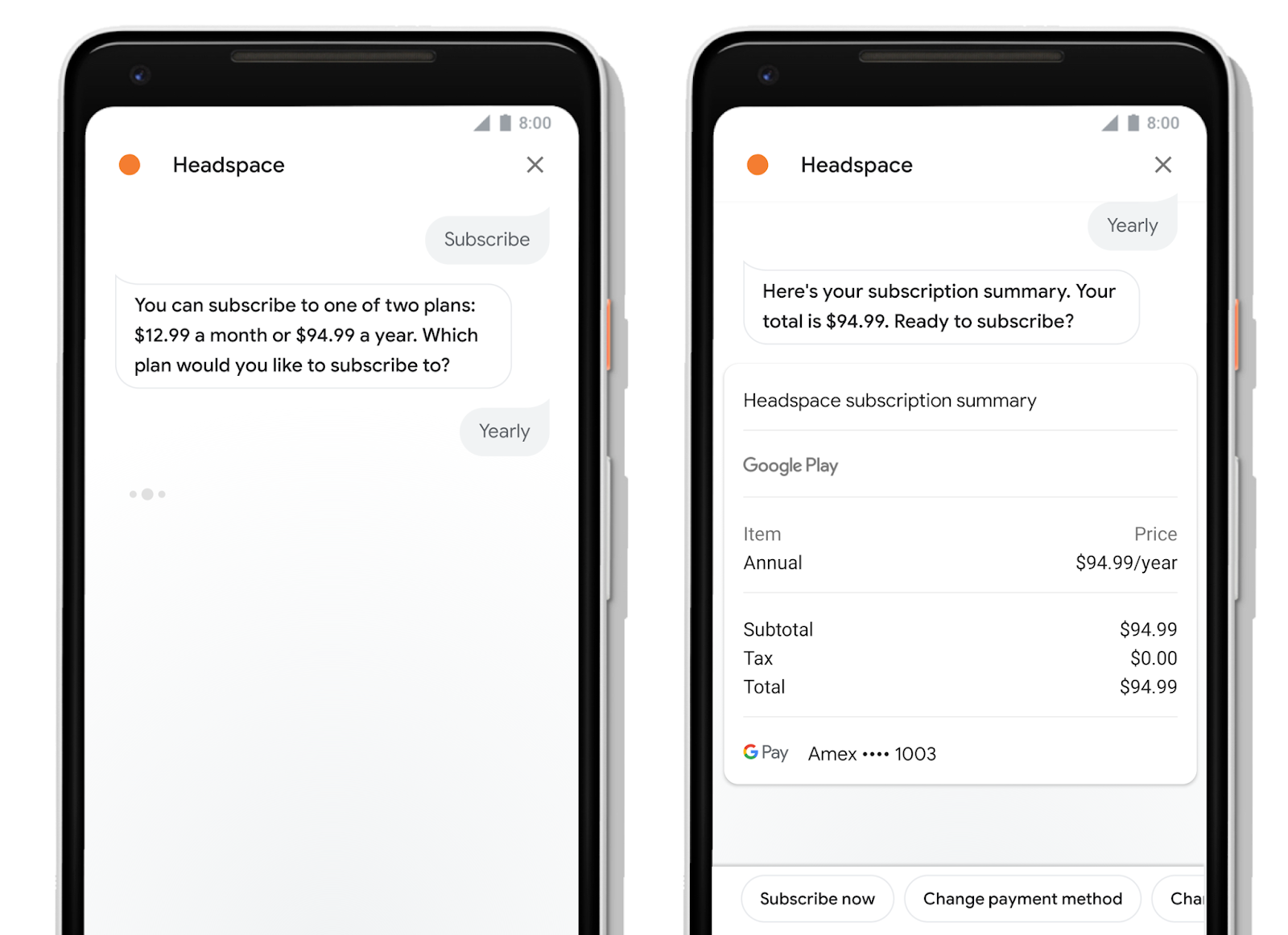

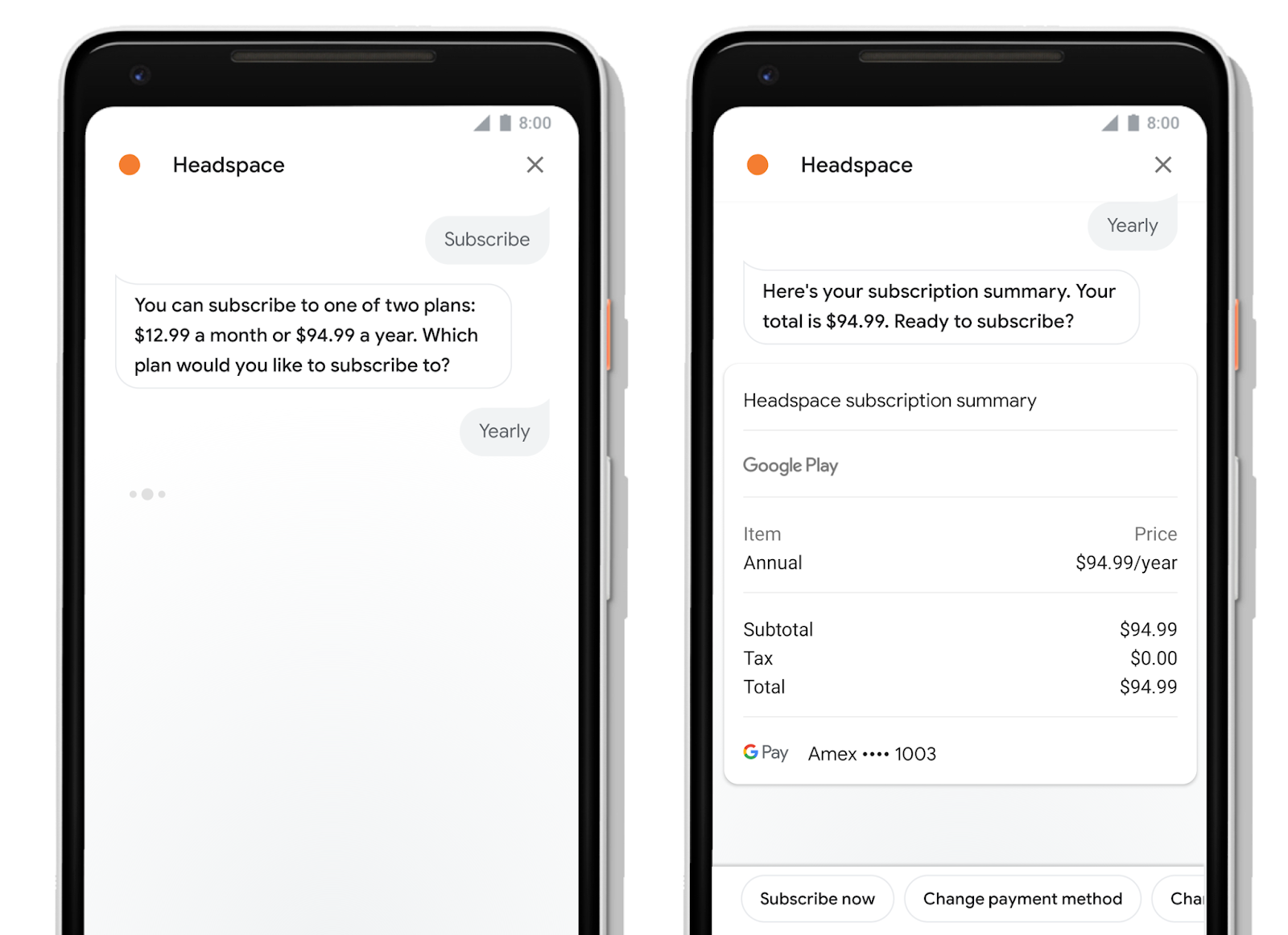

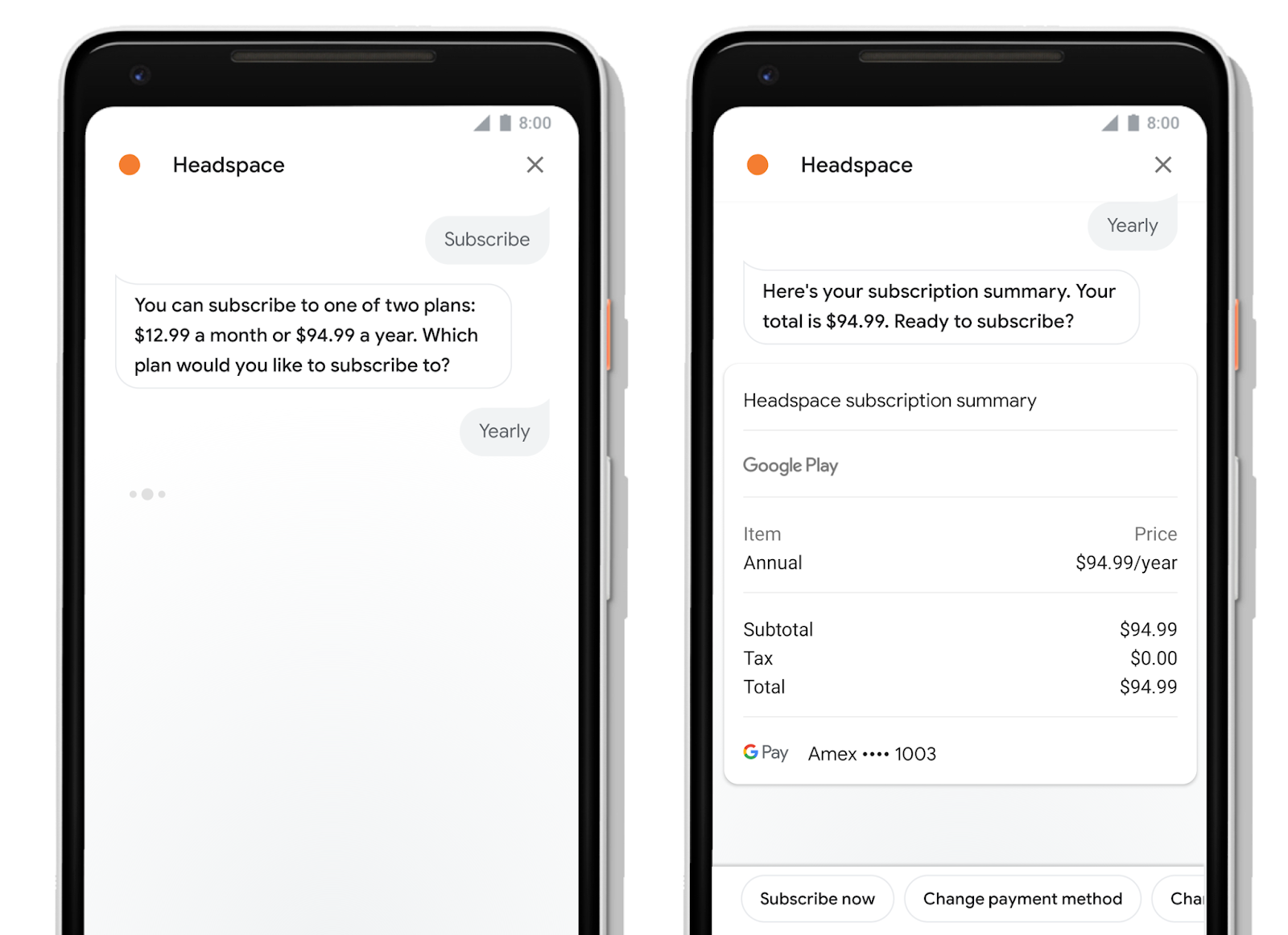

Headspace, for example, now offers Android users an option to subscribe to their plans, meaning users can purchase a subscription and immediately see an upgraded experience while talking to their Action. Try it for yourself by telling your Google Assistant, "meditate with Headspace."

Volley added digital goods to their role-playing game Castle Master so users could enhance their experience by purchasing upgrades. Try it yourself by asking your Google Assistant to "play Castle Master."

You can also ensure a seamless premium experience as users move between your Android app and Action for Assistant by letting users access their digital goods across their relationship with you, regardless of where the purchase originated. You can manage your digital goods for both your app and your Action in one place, in the Play Console.

Simplified account linking and user personalization

Once your users have access to a premium experience with digital goods, you will want to make sure your Action remembers them. To help with that, we're also introducing Google Sign-In for the Assistant, a secure authentication method that simplifies account linking for your users and reduces user drop-off at login. Google Sign-In provides the most convenient way to log in, with just a few taps. On smart speakers with the Assistant, users can even log in and link accounts using just their voice.

In the past, account linking could be a frustrating experience for your users; having to manually type a username and password--or worse, create a new account--breaks the natural conversational flow. With Google Sign-In, users can now create a new account with just a tap or confirmation through their voice. Most users can even link to their existing accounts with your service using their verified email address.

For developers, Google Sign-In also makes it easier to support login and personalize your Action for users. Previously, developers needed to build an account system and support OAuth-based account linking in order to personalize their Action. Now, you have the option to use Google Sign-In to support login for any user with a Google account.
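To make the linking flow described above a little more concrete, here is a minimal Python sketch of the server-side decision: link to an existing account by verified email, or create a new one. It assumes your webhook has already verified the Google ID token and holds its decoded claims as a dict; `sub`, `email`, and `email_verified` are standard ID token claim names, while `Account`, `AccountStore`, and `link_or_create` are hypothetical names invented for this illustration and are not part of any Google library.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Account:
    email: str
    google_sub: Optional[str] = None  # Google account ID, set once linked


@dataclass
class AccountStore:
    """Hypothetical in-memory stand-in for your own user database."""

    by_email: Dict[str, Account] = field(default_factory=dict)

    def link_or_create(self, claims: dict) -> Account:
        """Link an existing account by verified email, or create a new one.

        `claims` is assumed to be the payload of an ID token that has
        ALREADY been verified elsewhere; this sketch does no verification.
        """
        # Only trust the email address if Google marks it as verified.
        if not claims.get("email_verified"):
            raise ValueError("email is not verified; use another linking flow")

        email = claims["email"].lower()
        account = self.by_email.get(email)
        if account is None:
            # No existing account: create one from the Google profile.
            account = Account(email=email)
            self.by_email[email] = account

        # Remember the Google account ID so later sign-ins match directly.
        account.google_sub = claims["sub"]
        return account


store = AccountStore()
store.by_email["ada@example.com"] = Account(email="ada@example.com")

# An existing user signs in with Google: the accounts are linked, not duplicated.
linked = store.link_or_create(
    {"sub": "12345", "email": "Ada@example.com", "email_verified": True}
)
```

In a real service the lookup would go to your user database, and matching on the stored Google account ID first (falling back to email only for the initial link) is usually the safer choice.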

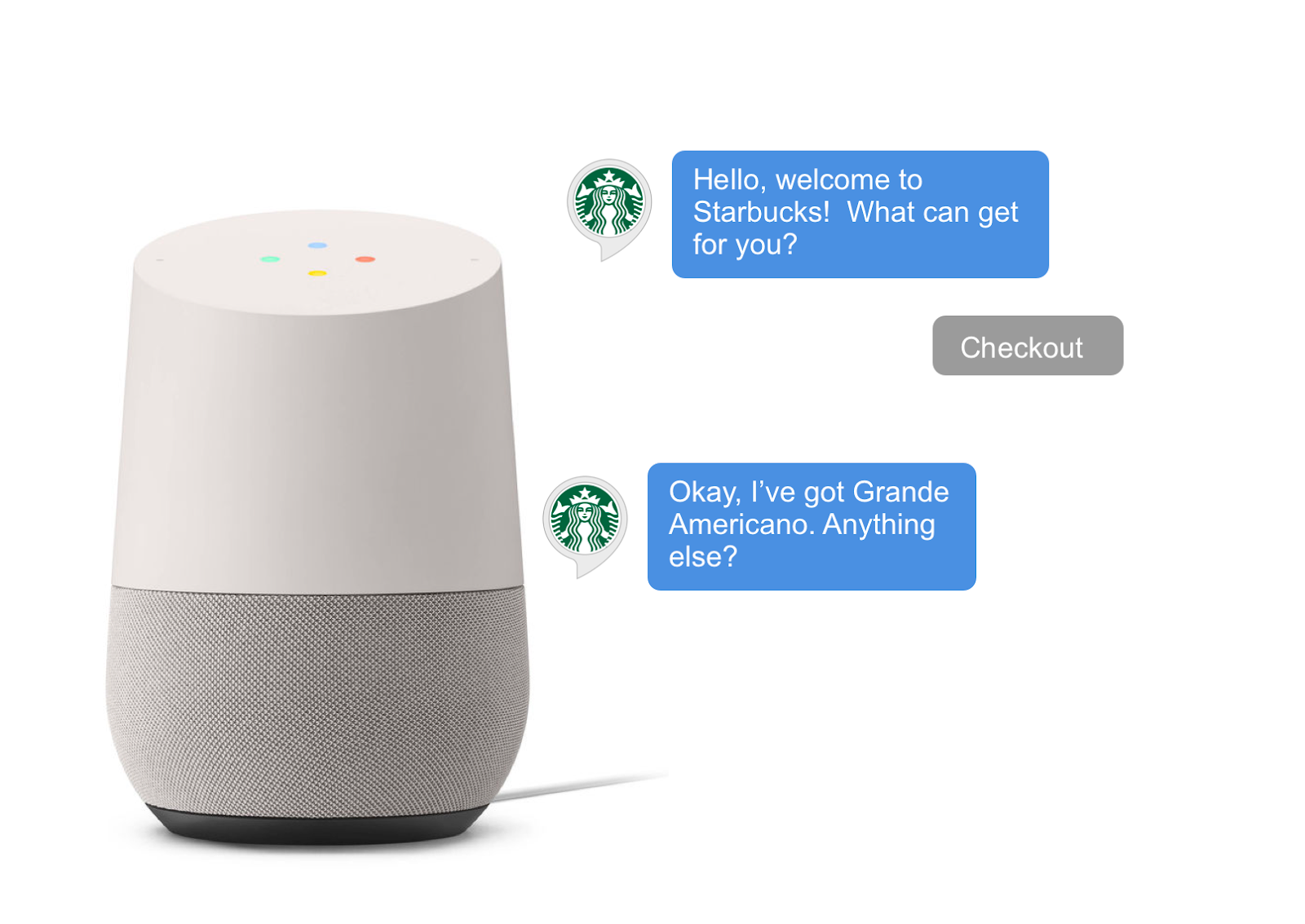

Starbucks added Google Sign-In for the Assistant to enable users of their Action to access their Starbucks Rewards™ accounts and earn stars for their purchases. Since adding Google Sign-In for the Assistant, they've seen login conversion nearly double for their users versus their previous implementation that required manual account entry.

Check out our guide on the different authentication options available to you, to understand which best meets your needs.

A new visual experience for the phone

Today, we're launching the first major makeover for the Google Assistant on phones, bringing a richer, more interactive interface to the devices we carry with us throughout the day.

Since the Google Assistant made its debut, we've noticed that nearly half of all interactions with the Assistant today include both voice and touch. With this redesign, we're making the Assistant more visually assistive for users, combining voice with touch in a way that gives users the right controls in the right situations.

For developers, we've also made it easy to bring great multimodal experiences to life on the phone and other Assistant-enabled devices with screens, including Smart Displays. This presents a new opportunity to express your brand through richer visuals and with greater real estate in your Action.

To get started, you can now add rich responses to customize your Action for visual interfaces. With rich responses you can build visually engaging Actions for your users with a set of plug-and-play visual components for different types of content. If you've already added rich responses to your Action, these will work automatically on the new mobile redesign. Be sure to also check out our guidance on how and when to use visuals in your Action.
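As a rough sketch of what this can look like in a webhook, the Python function below adds a visual card only when the user's device reports a screen, and otherwise returns a voice-only response. The field names (`richResponse`, `simpleResponse`, `basicCard`) and the capability string are modeled on the Actions on Google conversation webhook JSON as we understand it and should be checked against the documentation; `build_response` and its parameters are hypothetical names used only for this example.

```python
import json
from typing import List

# Capability string assumed to signal that the device has a screen.
SCREEN_OUTPUT = "actions.capability.SCREEN_OUTPUT"


def build_response(
    capabilities: List[str], title: str, speech: str, image_url: str
) -> dict:
    """Build a response payload, adding a card only on devices with a screen."""
    # Every response starts with something the Assistant can say aloud.
    items = [{"simpleResponse": {"textToSpeech": speech}}]

    if SCREEN_OUTPUT in capabilities:
        # On phones and Smart Displays, attach a visual component as well.
        items.append(
            {
                "basicCard": {
                    "title": title,
                    "image": {"url": image_url, "accessibilityText": title},
                }
            }
        )

    return {"richResponse": {"items": items}}


phone = build_response(
    [SCREEN_OUTPUT, "actions.capability.AUDIO_OUTPUT"],
    title="Chicken recipes",
    speech="Here are some chicken recipes.",
    image_url="https://example.com/chicken.png",
)
print(json.dumps(phone, indent=2))
```

Keeping the spoken response first and layering the card on top means the same Action still works on a voice-only smart speaker.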

Below you can find some examples of the ways some partners and developers have already started to make use of rich responses to provide more visually interactive experiences for Assistant users on phones.

You can try these yourself by asking your Google Assistant to, "order my usual from Starbucks," "ask H&M Home to give inspiration for my kitchen," "ask Fitstar to workout," or "ask Food Network for chicken recipes."

Ready to get building? Check out our documentation on how to add digital goods and Google Sign-In for Assistant to create premium and personalized experiences for your users across devices.

To improve your visual experience for phone users, check out our conversation design site, our documentation on different surfaces, and our documentation and sample on how you can use rich responses to build with visual components. You can also test and debug your different types of voice, visual, and multimodal experiences in the Actions simulator.

Good luck building, and please continue to share your ideas and feedback with us. Don't forget that once you publish your first Action you can join our community program* and receive your exclusive Google Assistant t-shirt and up to $200 of monthly Google Cloud credit.

*Some countries are not eligible to participate in the developer community program; please review the terms and conditions.
