Posted by Dave Burke, VP of Engineering

Every day, billions of people around the world pull out their Android device to help them get things done. That Android works well for each and every one of them is ensured in part through a collaborative process with you, our developer community, sharing feedback to help us make Android stronger.

Today, we’re sharing a first look at the next release of Android, with the Android 13 Developer Preview 1. With Android 13 we’re continuing some important themes: privacy and security, as well as developer productivity. We’ll also build on some of the newer updates we made in 12L to help you take advantage of the 250+ million large screen Android devices currently running.

This is just the start for Android 13, and we’ll have lots more to share as we move through the release. Read on for a taste of what’s new, and visit the Android 13 developer site for details on downloads for Pixel and the release timeline. As always, it’s crucial to get your feedback early, to help us include it in the final release. We’re looking forward to hearing what you think, and thanks in advance for your continued help in making Android a platform that works for everyone!

Privacy & security at the core

People want an OS and apps that they can trust with their most personal and sensitive information. Privacy is core to Android’s product principles, and Android 13 focuses on building a responsible and high quality platform for all by providing a safer environment on the device and more controls to the user. In today’s release, we’re introducing a photo picker that allows users to share photos and videos securely with apps, and a new Wi-Fi permission to further minimize the need for apps to have the location permission. We recommend trying out the new APIs and testing how the changes may affect your app.

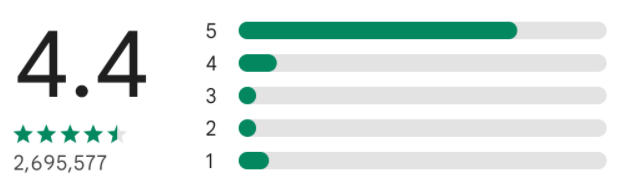

Photo picker and APIs - To help protect photo and video privacy of users, Android 13 adds a system photo picker — a standard and optimized way for users to share both local and cloud-based photos securely. Android’s long standing document picker allows a user to share specific documents of any type with an app, without that app needing permission to view all media files on the device. The photo picker extends this capability with a dedicated experience for picking photos and videos. Apps can use the photo picker APIs to access the shared photos and videos without needing permission to view all media files on the device. We plan to bring the photo picker experience to more Android users through Google Play system updates, as part of a MediaProvider module update for devices (excepting Go devices) running Android 11 and higher. Give photo picker APIs a try and let us know your feedback!

Photo picker provides a consistent, secure way for users

to give apps access to specific photos and videos.

Nearby device permission for Wi-Fi - Android 13 introduces the NEARBY_WIFI_DEVICES runtime permission (part of the NEARBY_DEVICES permission group) for apps that manage a device's connections to nearby access points over Wi-Fi. The new permission will be required for apps that call many commonly-used Wi-Fi APIs, and enables apps to discover and connect to nearby devices over Wi-Fi without needing location permission. Previously, the location permission requirements were a challenge for apps that needed to connect to nearby Wi-Fi devices but didn’t actually need the device location. Apps targeting Android 13 will be now able to request the NEARBY_WIFI_DEVICES permission with the “neverForLocation” flag instead, which should help promote a privacy-friendly app design while reducing friction for developers. Learn more.

Developer productivity and tools

Android 13 also brings new features and tools for developer productivity. Helping you create beautiful apps that run on billions of devices is one of our core missions – whether it’s in Android 13 or through our tools for modern Android development, like a language you love in Kotlin or opinionated APIs with Jetpack. By helping you work more productively, we aim to lower your cost of development so you can focus on continuing to build amazing experiences. Here’s some of what’s new in today’s release.

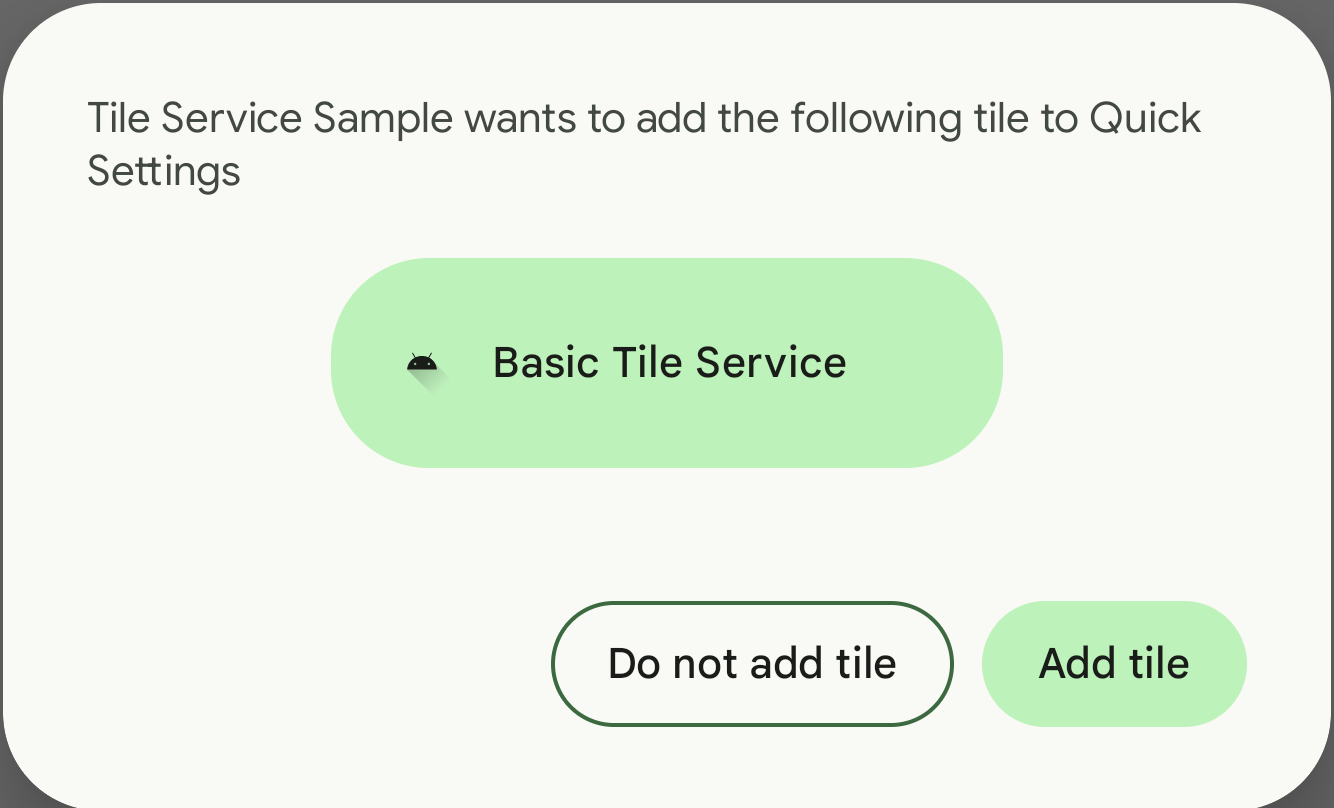

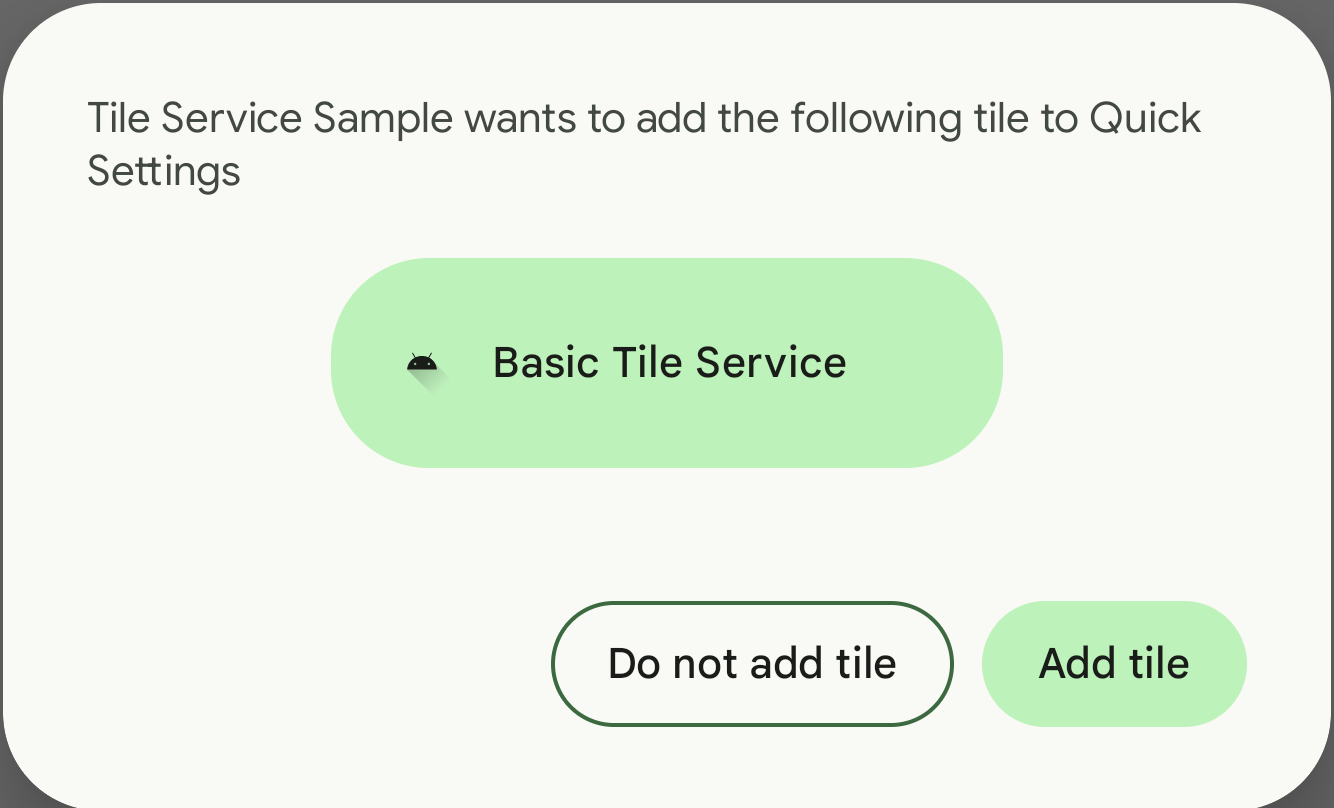

Quick Settings Placement API - Quick Settings in the notification shade is a convenient way for users to change settings or take quick actions without leaving the context of an app. For apps that provide custom tiles, we’re making it easier for users to discover and add your tiles to Quick Settings. Using a new tile placement API, your app can now prompt the user to directly add your custom tile to the set of active Quick Settings tiles. A new system dialog lets the user add the tile in one step, without leaving your app, rather than having to go to Quick Settings to add the tile.

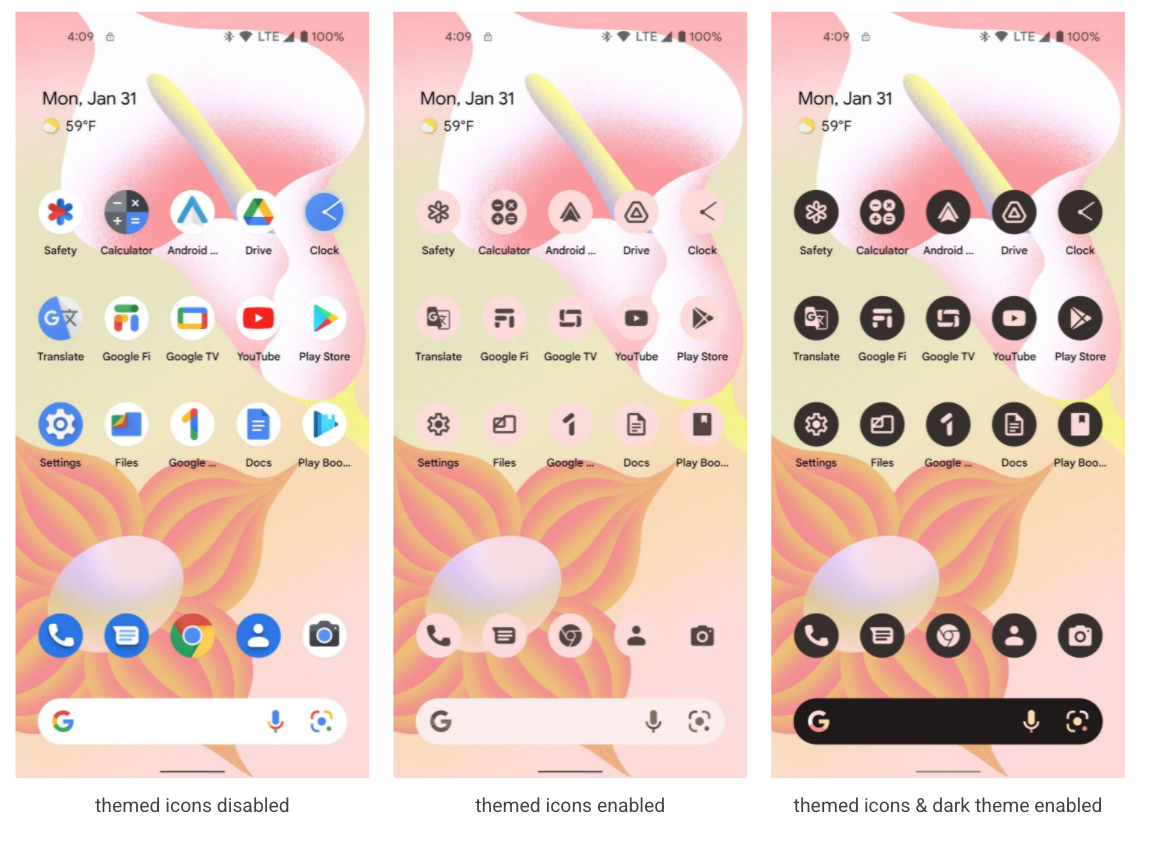

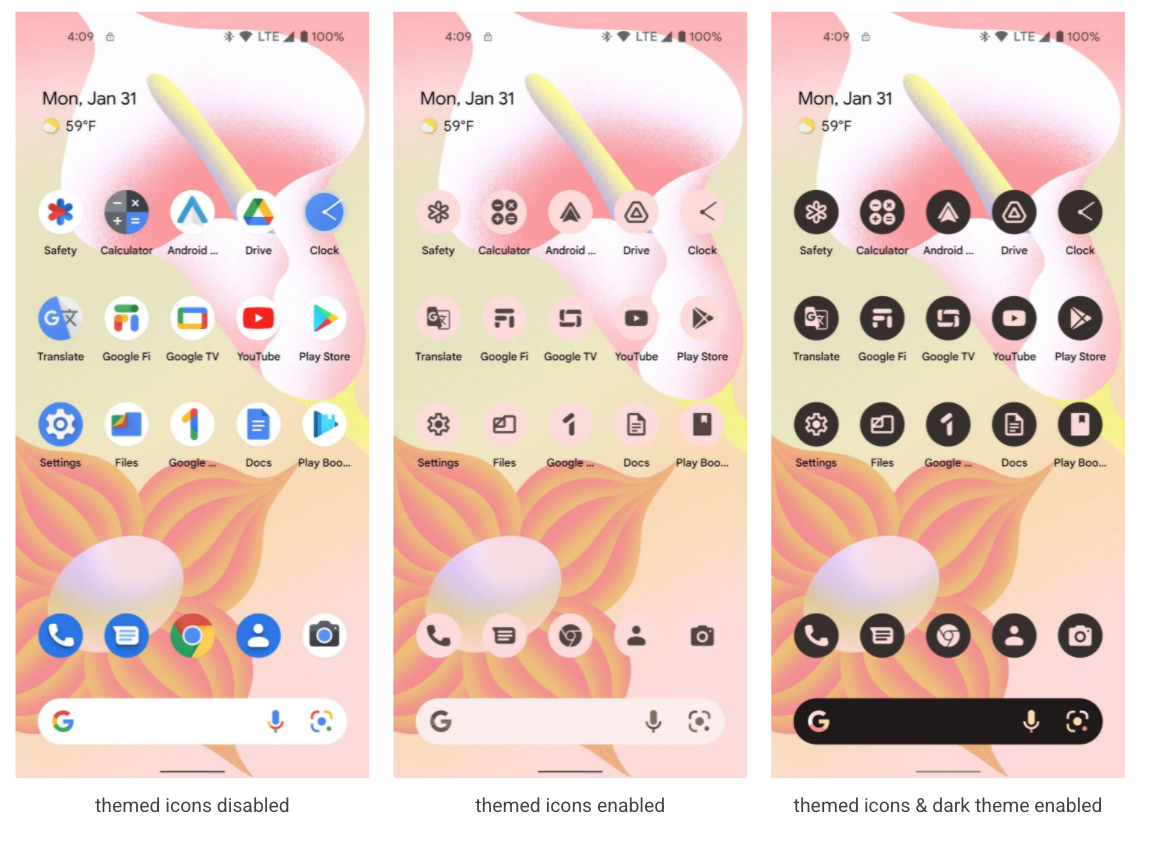

Themed app icons - In Android 13 we’re extending Material You dynamic color beyond Google apps to all app icons, letting users opt into icons that inherit the tint of their wallpaper and other theme preferences. All your app needs to supply is a monochromatic app icon (for example, your notification drawable) and a tweak to the adaptive icon XML. We’re encouraging all developers to provide compatible icons to help provide a consistent experience for users who have opted in. Themed app icons are initially supported on Pixel devices and we’re working with our device manufacturer partners to bring them to more devices. Learn more.

Per-app language preferences - Some apps let users choose a language that differs from the system language, to meet the needs of multilingual users. Such apps can now call a new platform API to set or get the user’s preferred language, helping to reduce boilerplate code and improve compatibility when setting the app’s runtime language. For broader compatibility, we'll be adding a similar API in an upcoming Jetpack library. Learn more.

Faster hyphenation - Hyphenation makes wrapped text easier to read and helps make your UI more adaptive. In Android 13 we’ve optimized hyphenation performance by as much as 200% so you can now enable it in your TextViews with almost no impact on rendering performance. To enable faster hyphenation, use the new fullFast or normalFast frequencies in setHyphenationFrequency(). Give faster hyphenation a try and let us know what you think!

Programmable shaders - Android 13 adds support for programmable

RuntimeShader objects, with behavior defined using the Android Graphics Shading Language (AGSL). AGSL shares much of its syntax with GLSL, but works within the Android rendering engine to customize painting within Android's canvas as well as filtering of View content. Android internally uses these shaders to implement

ripple effects,

blur, and

stretch overscroll, and Android 13 enables you to create similar advanced effects for your app.

AGSL animated shader, adapted

from

this GLSL Shader

OpenJDK 11 updates - In Android 13 we’ve started the work of refreshing Android's Core Libraries to align with the OpenJDK 11 LTS release, with both library updates and Java 11 programming language support for app and platform developers. We also plan to bring these Core Library changes to more devices through Google Play system updates, as part of an ART module update for devices running Android 12 and higher. Learn more.

App compatibility

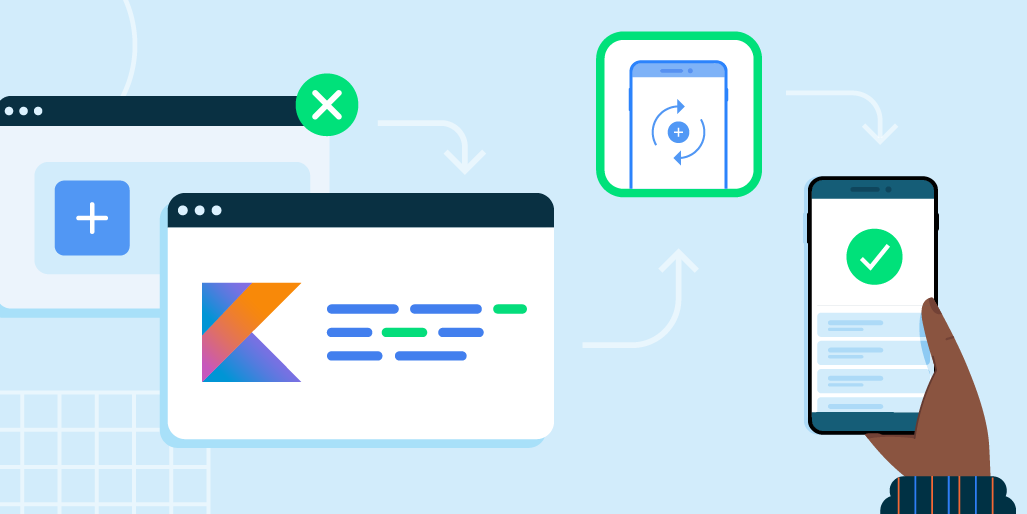

With each platform release we’re working to make updates faster and smoother by prioritizing app compatibility as we roll out new platform versions. In Android 13 we’ve made most app-facing changes opt-in to give you more time, and we’ve updated our tools and processes to help you get ready sooner.

More of Android updated through Google Play - In Android 13 we’re continuing to expand our investment in Google Play system updates (Project Mainline) to give apps a more consistent, secure environment across devices, and to deliver new features and capabilities to users. We can now push new features like photo picker and OpenJDK 11 directly to users on older versions of Android through updates to existing modules. We’ve also added new modules, such as the Bluetooth and Ultra wideband modules, to further expand the scope of Android’s updatable core functionality.

Optimizing for tablets, foldables, and Chromebooks - With all the momentum in large screen devices like tablets, foldables, and Chromebooks, now is the time to get your apps ready for these devices and design fully adaptive apps that fit any screen. You can get started using our guidance on optimizing for tablets, then learn how to build for large screens and develop for foldables.

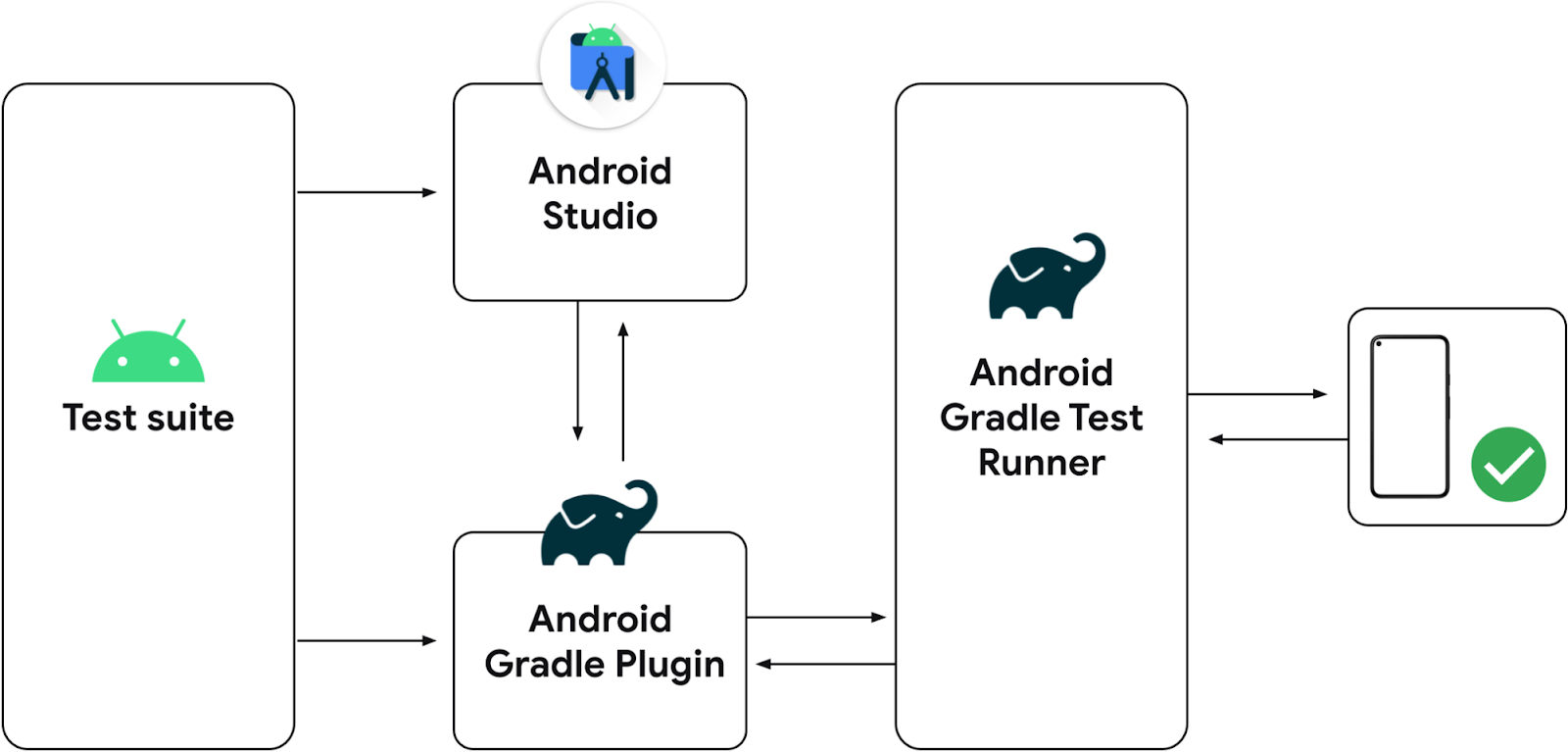

Easier testing and debugging of changes - To make it easier for you to test the opt-in changes that can affect your app, we’ll make many of them toggleable again this year. WIth the toggles you can force-enable or disable the changes individually from Developer options or adb. Check out the details here.

App compatibility toggles in Developer Options.

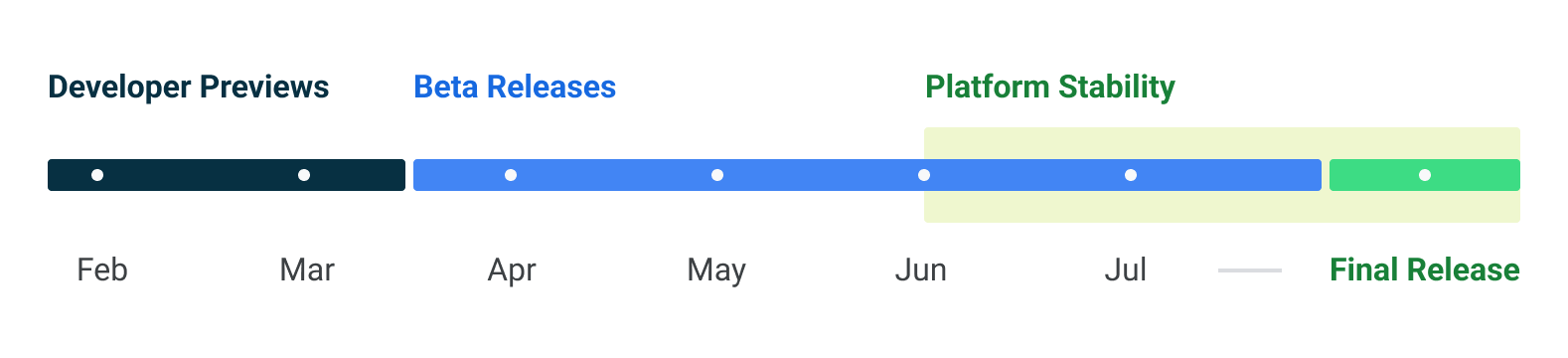

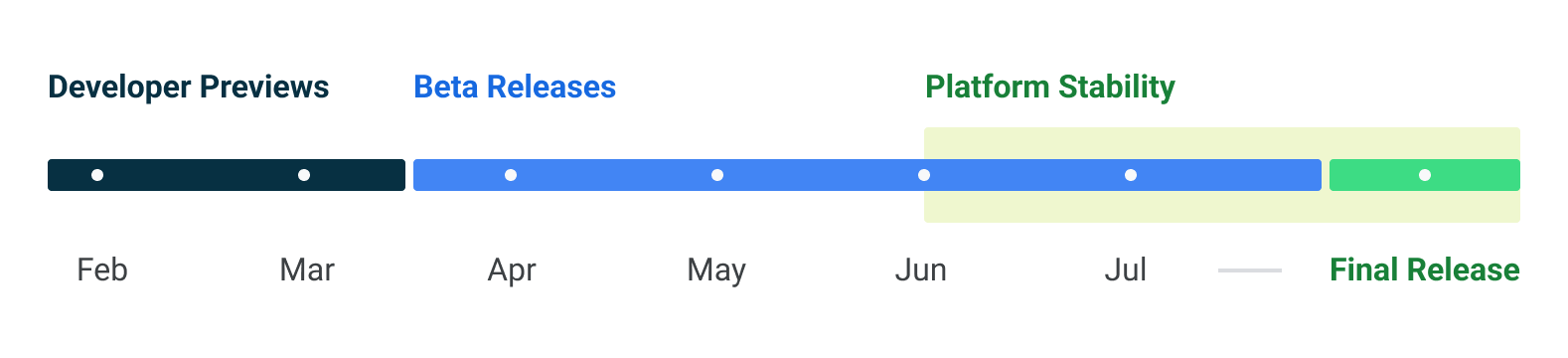

Platform stability milestone - Like last year, we’re letting you know our Platform Stability milestone well in advance, to give you more time to plan for app compatibility work. At this milestone we’ll deliver not only final SDK/NDK APIs, but also final internal APIs and app-facing system behaviors. This year we’re expecting to reach Platform Stability in June 2022, and from that time you’ll have several weeks before the official release to do your final testing. The release timeline details are here.

Get started with Android 13

The Developer Preview has everything you need to try the Android 13 features, test your apps, and give us feedback. For testing your app with tablets and foldables, the easiest way to get started is using the Android Emulator in a tablet or foldable configuration - complete setup instructions are here. For phones, you can get started on a device today by flashing a system image to a Pixel 6 Pro, Pixel 6, Pixel 5a 5G, Pixel 5, Pixel 4a (5G), Pixel 4a, Pixel 4 XL, or Pixel 4 device. If you don’t have a Pixel device, you can use the 64-bit system images with the Android Emulator in Android Studio. For even broader testing, GSI images are available.

When you’re set up, here are some of the things you should do:

- Try the new features and APIs - your feedback is critical during the early part of the developer preview. Report issues in our tracker or give us direct feedback by survey for selected features from the feedback and requests page.

- Test your current app for compatibility - learn whether your app is affected by default behavior changes in Android 13. Just install your current published app onto a device or emulator running Android 13 and test.

- Test your app with opt-in changes - Android 13 has opt-in behavior changes that only affect your app when it’s targeting the new platform. It’s extremely important to understand and assess these changes early. To make it easier to test, you can toggle the changes on and off individually.

We’ll update the preview system images and SDK regularly throughout the Android 13 release cycle. This initial preview release is for developers only and not intended for daily or consumer use, so we're making it available by manual download only. Once you’ve manually installed a preview build, you’ll automatically get future updates over-the-air for all later previews and Betas. More here.

As we reach our Beta releases, we'll be inviting consumers to try Android 13 as well, and we'll open up enrollments for the Android Beta program at that time. For now, please note that Android Beta is not yet available for Android 13.

For complete information, visit the Android 13 developer site.

Java and OpenJDK are trademarks or registered trademarks of Oracle and/or its affiliates.