Originally posted on Android Developers blog

Posted by Sam Dutton, Ankur Kotwal, Developer Advocates; Liz Yepsen, Program Manager

‘TOP-UP WARNING.’ ‘NO CONNECTION.’ ‘INSUFFICIENT BANDWIDTH TO PLAY THIS RESOURCE.’

These are common warnings for many smartphone users around the world.

To build products that work for billions of users, developers must address key challenges: limited or intermittent connectivity, device compatibility, varying screen sizes, high data costs, short-lived batteries. We first presented developers.google.com/billionsand related Android and Web resources at Google I/O last month, and today you can watch the video presentations about Android or the Web.

These best practices can help developers reach billions by delivering exceptional performance across a range of connections, data plans, and devices. g.co/dev/billions will help you:

Seamlessly transition between slow, intermediate, and offline environments

Your users move from place to place, from speedy wireless to patchy or expensive data. Manage these transitions by storing data, queueing requests, optimizing image handling, and performing core functions entirely offline.

Provide the right content for the right context

Keep context in mind - how and where do your users consume your content? Selecting text and media that works well across different viewport sizes, keeping text short (for scrolling on the go), providing a simple UI that doesn’t distract from content, and removing redundant content can all increase perception of your app’s quality while giving real performance gains like reduced data transfer. Once these practices are in place, localization options can grow audience reach and increase engagement.

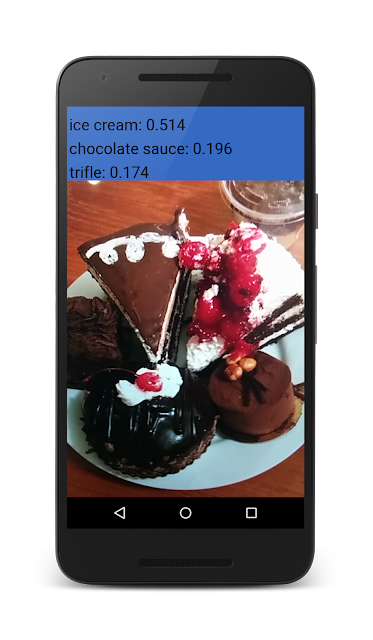

Optimize for mobile hardware

Ensure your app or Web content is served and runs well for your widest possible addressable market, covering all actively used OS versions, while still following best practices, by testing on virtual or actual devices in target markets. Native Android apps should set minimum and target SDKs. Also, remember low cost phones have smaller amounts of RAM; apps should therefore adjust usage accordingly and minimize background running. For in-depth information on minimizing APK size, check out this series of Medium posts. On the Web, optimize JavaScript CPU usage, avoid raster image rendering, and minimize resource requests. Find out more here.

Reduce battery consumption

Low cost phones usually have shorter battery life. Users are sensitive to battery consumption levels and excessive consumption can lead to a high uninstall rate or avoidance of your site. Benchmark your battery usage against sessions on other pages or apps, or using tools such as Battery Historian, and avoid long-running processes which drain batteries.

Conserve data usage

Whatever you’re building, conserve data usage in three simple steps: understand loading requirements, reduce the amount of data required for interaction, and streamline navigation so users get what they want quickly. Conserving data on behalf of your users (and with native apps, offering configurable network usage) helps retain data-sensitive users -- especially those on prepaid plans or contracts with limited data -- as even “unlimited” plans can become expensive when roaming or if unexpected fees are applied.

Have another insight, or a success launching in low-connectivity conditions or on low-cost devices? Let us know on our G+ post.