Posted by The Coral Team

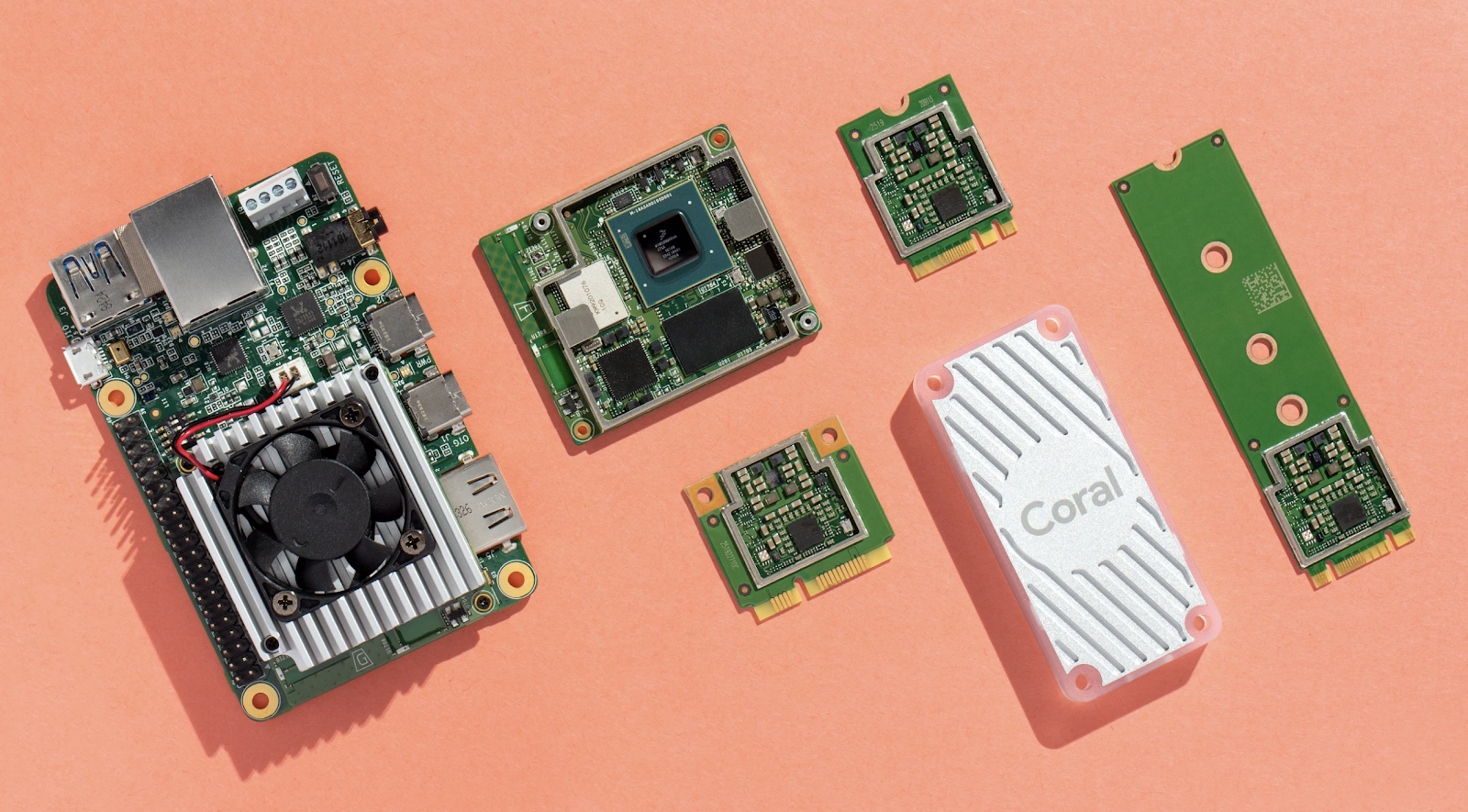

We're always excited to share updates to our Coral platform for building edge ML applications. In this post, we have some interesting demos, interfaces, and tutorials to share, and we'll start by pointing you to an important software update for the Coral Dev Board.

Important update for the Dev Board / SoM

If you have a Coral Dev Board or Coral SoM, please install our latest Mendel update as soon as possible to receive a critical fix to part of the SoC power configuration. To get it, just log onto your board and install the update as follows:

This will install a patch from NXP for the Dev Board / SoM's SoC, without which it's possible the SoC will overstress and the lifetime of the device could be reduced. If you recently flashed your board with the latest system image, you might already have this fix (we also updated the flashable image today), but it never hurts to fetch all updates, as shown above.

Note: This update does not apply to the Dev Board Mini.Manufacturing demo

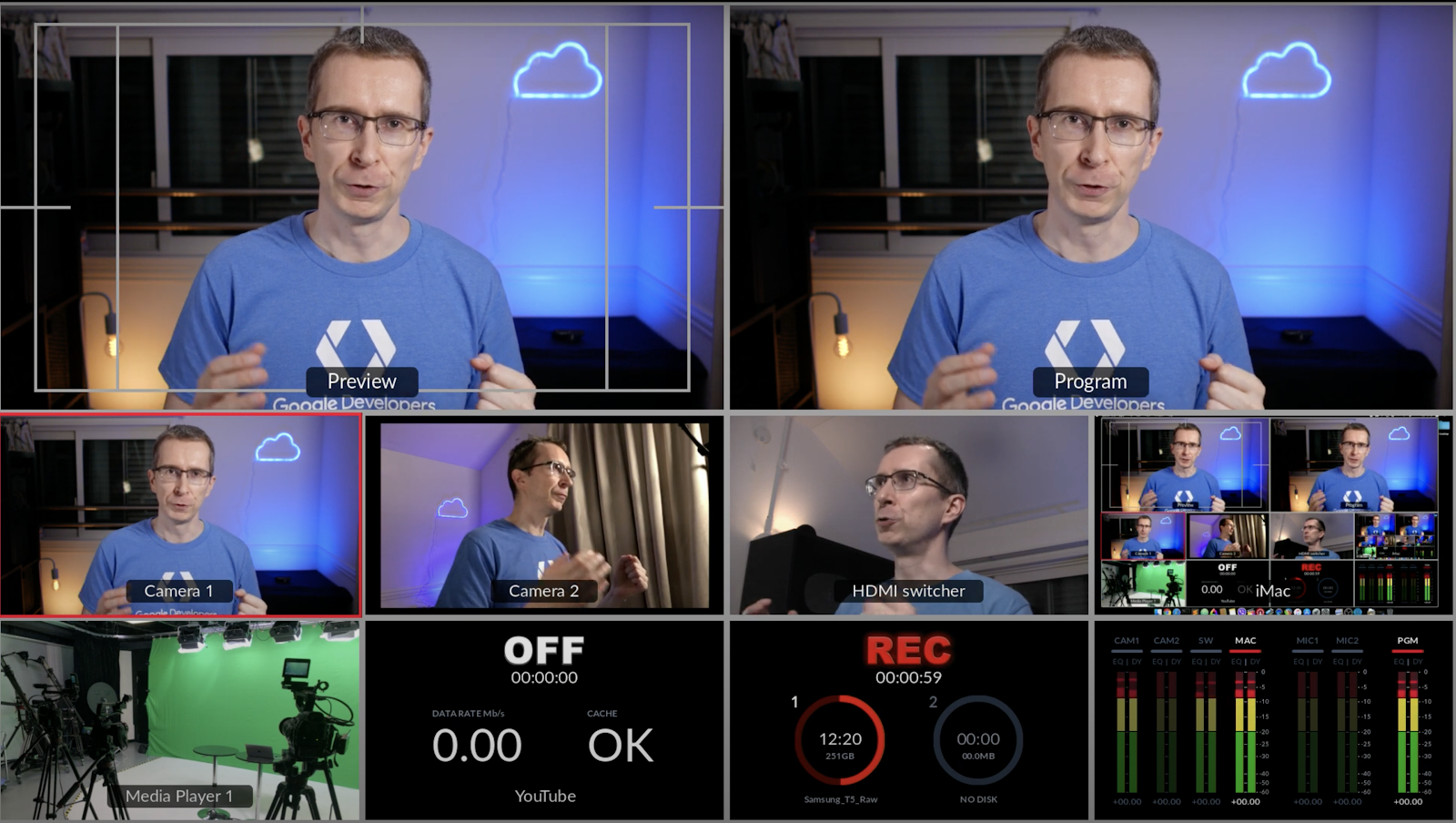

We recently published the Coral Manufacturing Demo, which demonstrates how to use a single Coral Edge TPU to simultaneously accomplish two common manufacturing use-cases: worker safety and visual inspection.

The demo is designed for two specific videos and tasks (worker keepout detection and apple quality grading) but it is designed to be easily customized with different inputs and tasks. The demo, written in C++, requires OpenGL and is primarily targeted at x86 systems which are prevalent in manufacturing gateways – although ARM Cortex-A systems, like the Coral Dev Board, are also supported.

Web Coral

We've been working hard to make ML acceleration with the Coral Edge TPU available for most popular systems. So we're proud to announce support for WebUSB, allowing you to use the Coral USB Accelerator directly from Chrome. To get started, check out our WebCoral demo, which builds a webpage where you can select a model and run an inference accelerated by the Edge TPU.

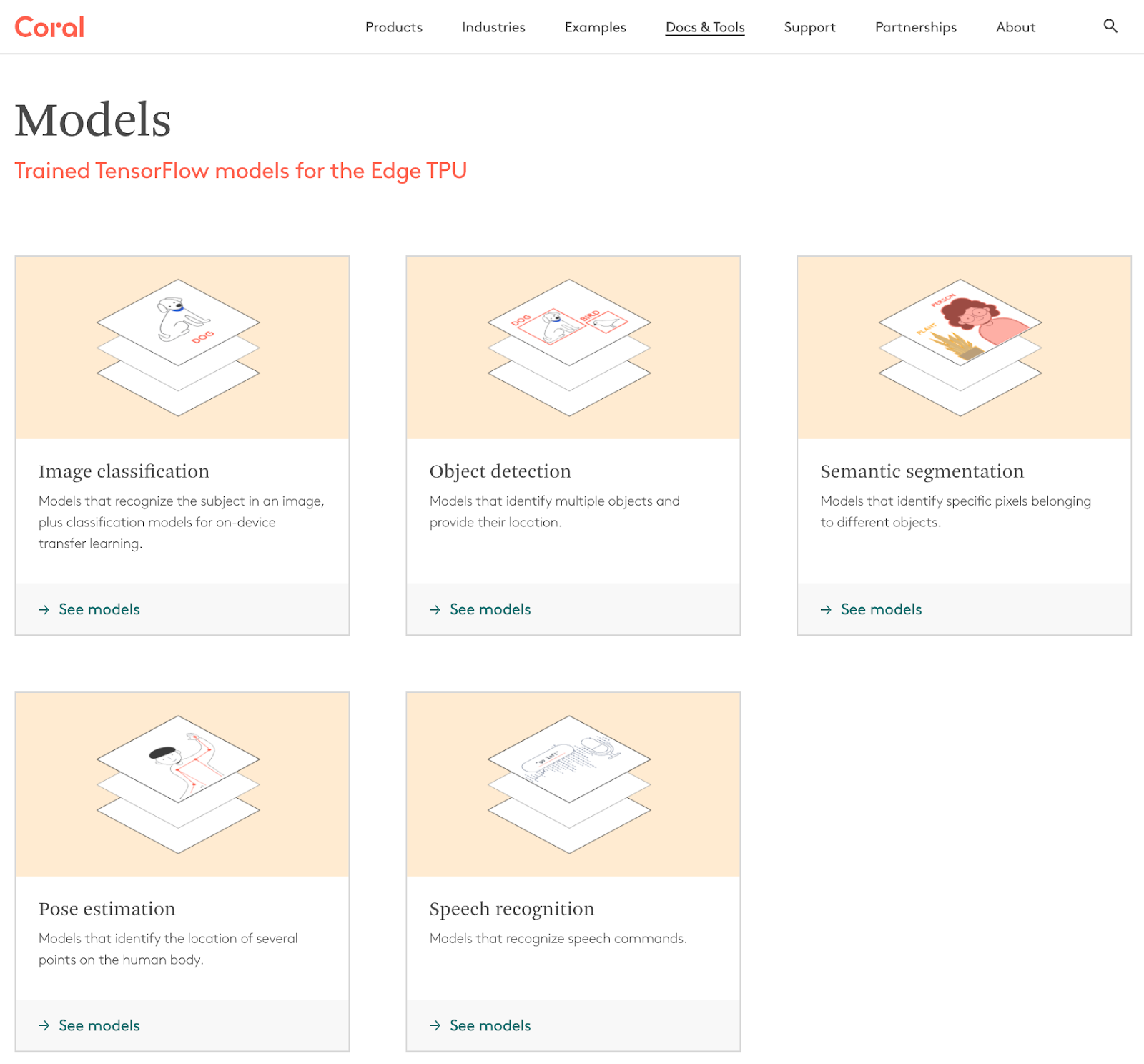

New models repository

We recently released a new models repository that makes it easier to explore the various trained models available for the Coral platform, including image classification, object detection, semantic segmentation, pose estimation, and speech recognition. Each family page lists the various models, including details about training dataset, input size, latency, accuracy, model size, and other parameters, making it easier to select the best fit for the application at hand. Lastly, each family page includes links to training scripts and example code to help you get started. Or for an overview of all our models, you can see them all on one page.

Transfer learning tutorials

Even with our collection of pre-trained models, it can sometimes be tricky to create a task-specific model that's compatible with our Edge TPU accelerator. To make this easier, we've released some new Google Colab tutorials that allow you to perform transfer learning for object detection, using MobileDet and EfficientDet-Lite models. You can find these and other Colabs in our GitHub Tutorials repo.

We are excited to share all that Coral has to offer as we continue to evolve our platform. Keep an eye out for more software and platform related news coming this summer. To discover more about our edge ML platform, please visit Coral.ai and share your feedback at [email protected].

Posted by Billy Rutledge, Director Google Research, Coral Team

Posted by Billy Rutledge, Director Google Research, Coral Team Posted by

Posted by

Posted by Vikram Tank (Product Manager), Coral Team

Posted by Vikram Tank (Product Manager), Coral Team