Posted by Niharika Arora, Developer Relations Engineer

The Android operating system brings the power of computing to everyone. This vision applies to all users, including those on entry-level phones that face real constraints across data, storage, memory, and more.

This was especially important for us to get right because, when we first announced Android (Go edition) back in 2017, people using low-end phones accounted for 57% of all device shipments globally (IDC Mobile Phone Tracker).

What is Android (Go edition)?

Android (Go edition) is a mobile operating system built for entry-level smartphones with less RAM. Android (Go edition) runs lighter and saves data, enabling Original Equipment Manufacturers (OEMs) to build affordable, entry-level devices that empower people with possibility. RAM requirements are listed below, and for full Android (Go edition) device capability specifications, see this page on our site.

Recent Updates

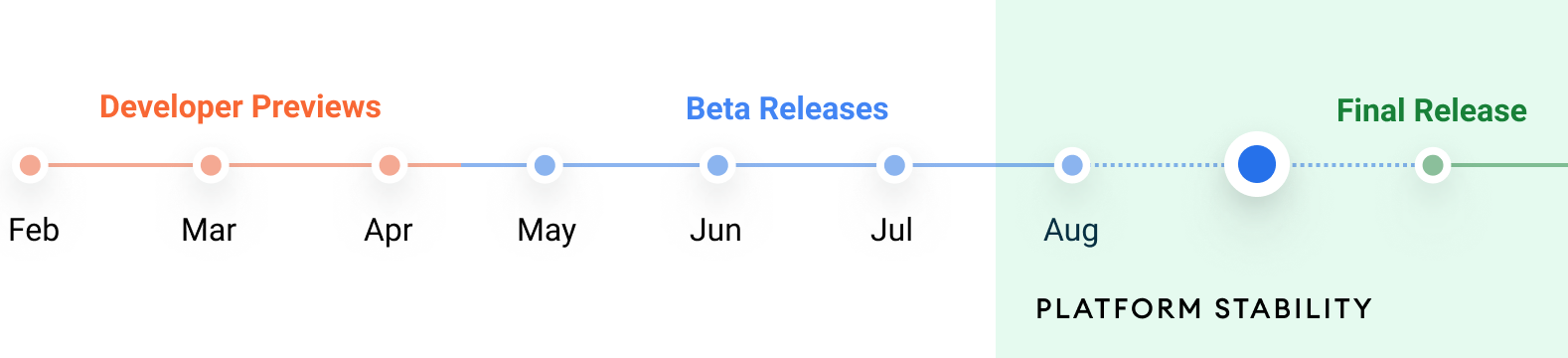

We are constantly making phones powered by Android (Go edition) more accessible with additional performance optimizations and features designed specifically for new and novice internet users, like translation, app switching, and data saving. Below are the recent improvements we made for Android 12:

Why build for Android (Go edition)?

With fast-growing, easily accessible internet and many features available at low cost, OEMs and developers are building their apps specifically for Android (Go edition) devices. Fast forward to today: over 250 million people worldwide actively use an Android (Go edition) phone. With big OEMs like Jio, Samsung, Oppo, and Realme building Android (Go edition) devices, developers need to build apps that perform well on Go devices in particular.

But markets with fast-growing internet and smartphone penetration can present some challenging issues, such as:

- Your app does not start within the required time limit.

- A large number of features or required capabilities increases your app size.

- Your app has to handle memory pressure while running on Go devices.

Optimize your apps for Android (Go edition)

To help your app succeed and deliver the best possible experience in developing markets, we have put together some best practices based on our experience building our own Google apps, Gboard and Camera from Google.

Approach

Optimize App Memory

- Release cache-like memory in onTrimMemory(): onTrimMemory() has always proven useful for an app to trim unneeded memory from its process. To find out an app's current trim level, you can use ActivityManager.getMyMemoryState(RunningAppProcessInfo) and then optimize or trim the resources that are not needed.

Gboard used the onTrimMemory() signal to trim unneeded memory when it goes into the background and there is not enough memory to keep as many background processes running as desired. For example, it trims unneeded memory used by expressions, search, the view cache, or openable extensions in the background. This helped reduce both the number of low-memory kills and the average background RSS. Resident Set Size (RSS) is the portion of memory occupied by your app process that is held in main memory (RAM). To know more about RSS, please refer here.

Typically this is useful for loading large assets or ML models.
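The onTrimMemory() handling described above can be sketched in plain Java as a cache that shrinks according to the trim level it receives. This is a minimal sketch under stated assumptions, not Gboard's implementation: the class name TrimAwareCache and the eviction policy (drop half, then drop everything) are hypothetical. The two constants use the same numeric values as ComponentCallbacks2.TRIM_MEMORY_UI_HIDDEN and ComponentCallbacks2.TRIM_MEMORY_BACKGROUND, so the sketch runs off-device.

```java
import java.util.Iterator;
import java.util.LinkedHashMap;

/** Hypothetical cache that releases entries in response to trim levels. */
final class TrimAwareCache {
    // Same numeric values as the ComponentCallbacks2 constants on Android.
    static final int TRIM_MEMORY_UI_HIDDEN = 20;
    static final int TRIM_MEMORY_BACKGROUND = 40;

    // Access-ordered map: iteration starts at the least recently used entry.
    private final LinkedHashMap<String, byte[]> entries =
            new LinkedHashMap<>(16, 0.75f, true);

    void put(String key, byte[] value) {
        entries.put(key, value);
    }

    byte[] get(String key) {
        return entries.get(key);
    }

    int size() {
        return entries.size();
    }

    /** On Android, this would be called from onTrimMemory(int level). */
    void trim(int level) {
        if (level >= TRIM_MEMORY_BACKGROUND) {
            // The process is a candidate for being killed: drop everything.
            entries.clear();
        } else if (level >= TRIM_MEMORY_UI_HIDDEN) {
            // UI is no longer visible: drop the least recently used half.
            int target = entries.size() / 2;
            Iterator<String> it = entries.keySet().iterator();
            while (entries.size() > target && it.hasNext()) {
                it.next();
                it.remove();
            }
        }
    }
}
```

In an Android app, an Application, Activity, or Service would forward the level argument of its onTrimMemory(int) callback to trim(level). The thresholds worth reacting to depend on how expensive the cached data is to rebuild.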

- Schedule tasks that require similar resources (CPU, IO, memory) appropriately: Concurrent scheduling can cause multiple memory-intensive operations to run in parallel, so that they compete for resources and drive up the app's peak memory usage. The Camera from Google app found multiple such problems, put a cap on peak memory, and further optimized the app by allocating resources appropriately, separating tasks into CPU-intensive tasks, low-latency tasks (tasks that need to finish fast for good UX), and IO tasks. Schedule tasks in the right thread pools / executors so they can run on resource-constrained devices in a balanced fashion.
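One way to separate tasks by the resource they need is to give each category its own bounded executor. The sketch below uses only java.util.concurrent; the class name TaskPools, the three categories, and the pool sizes are assumptions chosen for illustration and are not taken from the Camera from Google app.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

/** Hypothetical router that runs each kind of task on its own bounded pool. */
final class TaskPools {
    enum Kind { CPU, LOW_LATENCY, IO }

    private final ExecutorService cpu;
    private final ExecutorService lowLatency;
    private final ExecutorService io;

    TaskPools() {
        int cores = Runtime.getRuntime().availableProcessors();
        // Pool sizes are illustrative; tune them for the target device.
        cpu = namedPool("cpu", Math.max(1, cores - 1));
        lowLatency = namedPool("low-latency", 1);
        io = namedPool("io", 2);
    }

    private static ExecutorService namedPool(String name, int threads) {
        AtomicInteger counter = new AtomicInteger();
        return Executors.newFixedThreadPool(threads, runnable -> {
            Thread t = new Thread(runnable, name + "-" + counter.incrementAndGet());
            t.setDaemon(true); // do not keep the JVM alive for idle workers
            return t;
        });
    }

    /** Submits the task to the pool that matches its resource profile. */
    <T> CompletableFuture<T> submit(Kind kind, Supplier<T> task) {
        ExecutorService pool;
        switch (kind) {
            case CPU: pool = cpu; break;
            case LOW_LATENCY: pool = lowLatency; break;
            default: pool = io; break;
        }
        return CompletableFuture.supplyAsync(task, pool);
    }

    void shutdown() {
        cpu.shutdown();
        lowLatency.shutdown();
        io.shutdown();
    }
}
```

Because every pool has a fixed size, the number of memory-intensive operations that can run at the same time is capped, which in turn bounds peak memory. A burst of IO work also cannot starve the low-latency tasks, since they run on a separate thread.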

- Find & fix memory leaks: Fighting leaks is difficult but there are tools like Android Studio Memory Profiler/Perfetto specifically available to reduce the effort to find and fix memory leaks.

Google apps used these tools to identify and fix memory issues, which helped reduce the memory usage/footprint of the app. This reduction allowed other components of the app to run without adding memory pressure on the system.

One example from the Gboard app involves view leaks.

A specific case is caching subviews, such as keeping a reference to |keyButtonA|, a subview of |keyboardView|, in a field.

The |keyboardView| might be released at some point, and |keyButtonA| should be set to null at that point to avoid the view leak.
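The original snippet for this case is not reproduced here, so the following is a hypothetical reconstruction of the pattern rather than Gboard's code. FakeView is a stand-in for an Android View so that the sketch runs off-device, and KeyboardController, attach(), and detach() are illustrative names. Only keyboardView and keyButtonA come from the text.

```java
import java.util.HashMap;
import java.util.Map;

/** Stand-in for an Android View; not a framework class. */
final class FakeView {
    private final Map<String, FakeView> children = new HashMap<>();

    void addChild(String id, FakeView child) {
        children.put(id, child);
    }

    FakeView findChild(String id) {
        return children.get(id);
    }
}

/** Hypothetical holder that caches a subview of the keyboard view. */
final class KeyboardController {
    private FakeView keyboardView;
    private FakeView keyButtonA; // cached subview

    void attach(FakeView view) {
        keyboardView = view;
        keyButtonA = view.findChild("key_a");
    }

    void detach() {
        keyboardView = null;
        // Without this line the cached subview stays reachable. On Android a
        // child view also references its parent and Context, so the whole
        // hierarchy would be kept alive.
        keyButtonA = null;
    }

    boolean holdsViewReferences() {
        return keyboardView != null || keyButtonA != null;
    }
}
```

The point of the sketch is that every cached subview field needs a matching clear at the moment the parent view is released.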

Lessons learned:

- Always add framework/library updates only after analyzing the changes and verifying their impact early on.

- Make sure to release memory before assigning a new value to a reference that points to another object allocated on the heap in Java, when that object is a Java object with a native backend.

For example:

In Java it is normally fine to assign a new object to a reference that already points to an existing object.

GC should clean up the old object eventually.

If ClassA allocates native resources underneath, doesn't clean up automatically on finalize(..), and requires the caller to call some release(..) method, the caller needs to call release(..) on the old object before reassigning the reference.

Otherwise it will leak native heap memory.
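The difference between the two cases can be sketched as follows. This is an illustration under assumptions, not the original snippet: ClassA here is a hypothetical class whose "native" allocations are simulated with a counter, and NativeHolder with its method names is invented for the example.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical ClassA: native allocations are simulated with a counter. */
final class ClassA {
    static final AtomicInteger liveNativeBlocks = new AtomicInteger();
    private boolean released;

    ClassA() {
        liveNativeBlocks.incrementAndGet(); // stands in for a native allocation
    }

    /** Frees the simulated native block; safe to call more than once. */
    void release() {
        if (!released) {
            released = true;
            liveNativeBlocks.decrementAndGet();
        }
    }
}

final class NativeHolder {
    private ClassA obj = new ClassA();

    /** Leaks: the old object's native block is never freed. */
    void replaceLeaky() {
        obj = new ClassA();
    }

    /** Releases the old native block before reassigning the reference. */
    void replaceSafely() {
        obj.release();
        obj = new ClassA();
    }

    void close() {
        obj.release();
    }
}
```

After replaceLeaky(), the counter shows one more live block than the holder can reach, which is the native heap leak described above. GC may collect the old Java object, but nothing ever frees the native memory behind it.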

- Optimize your bitmaps: Large images/drawables usually consume more memory in the app. Google apps identified and optimized large bitmaps that are used in their apps.

Lessons learned:

- Prefer Lazy/on-demand initializations of big drawables.

- Release view when necessary.

- Avoid using full colored bitmaps when possible.

For example: Gboard’s glide typing feature needs to show an overlay view with a bitmap of trails. This bitmap can have only an alpha channel, with a color filter applied for rendering.

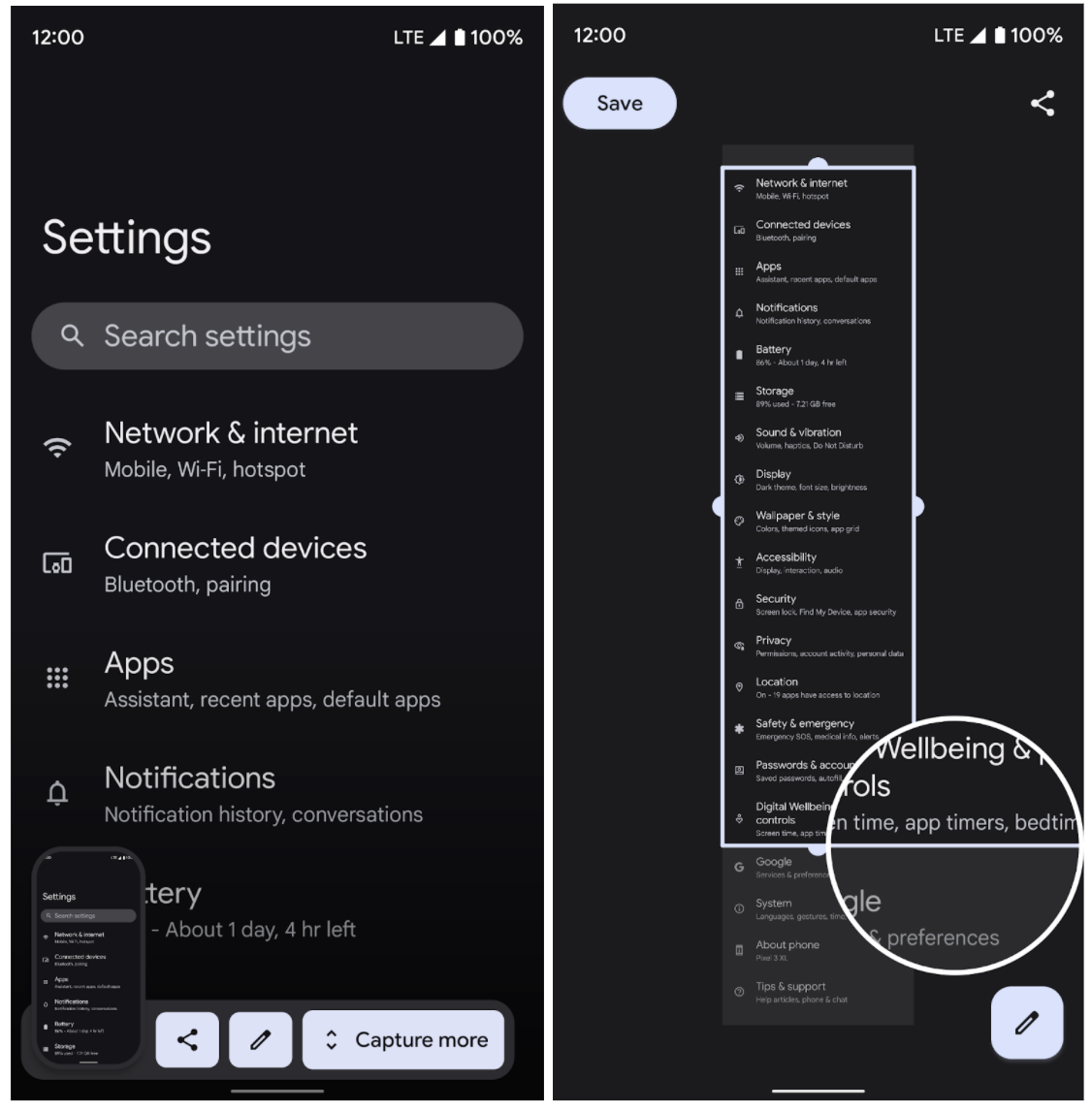

A screenshot of glide typing on Gboard

- Check and only set the alpha channel for the bitmap for complex custom views used in the app. This saved them a couple of MBs (per screen size/density).

- When using Glide:

- The ARGB_8888 format consumes 4 bytes per pixel, while RGB_565 consumes 2 bytes per pixel. Using RGB_565 halves the memory footprint, but the lower bitmap quality comes at a price, and RGB_565 has no alpha channel. Choose the format according to whether your use case needs alpha values.

- Configure and use cache wisely when using a 3P lib like Glide for image rendering.

- Try to choose other options for GIFs in your app when building for Android (Go edition) as GIFs take a lot of memory.

- The aapt tool can optimize the image resources placed in res/drawable/ with lossless compression during the build process. For example, the aapt tool can convert a true-color PNG that does not require more than 256 colors to an 8-bit PNG with a color palette. Doing so results in an image of equal quality but a smaller memory footprint. Read more here.

- You can reduce PNG file sizes without losing image quality using tools like pngcrush, pngquant, or zopflipng. All of these tools can reduce PNG file size while preserving the perceived image quality.

- You could use resizable bitmaps. The Draw 9-patch tool is a WYSIWYG editor included in Android Studio that allows you to create bitmap images that automatically resize to accommodate the contents of the view and the size of the screen. Learn more about the tool here.
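To make the bytes-per-pixel comparison above concrete, the following sketch estimates the pixel memory of an uncompressed bitmap for a given size and format. The class BitmapMemory is hypothetical and covers pixel data only. The ALPHA_8 entry (1 byte per pixel) is not named in the text; it is added here as the format that matches an alpha-only bitmap like the glide typing trail overlay.

```java
/** Hypothetical helper that estimates the pixel memory of a bitmap. */
final class BitmapMemory {
    enum Format {
        ARGB_8888(4), // 8 bits each for alpha, red, green, blue
        RGB_565(2),   // no alpha channel
        ALPHA_8(1);   // alpha channel only

        final int bytesPerPixel;

        Format(int bytesPerPixel) {
            this.bytesPerPixel = bytesPerPixel;
        }
    }

    /** Returns width * height * bytes per pixel, computed as a long. */
    static long bytes(int width, int height, Format format) {
        return (long) width * height * format.bytesPerPixel;
    }
}
```

For a full-screen 1080 x 1920 bitmap this gives 8,294,400 bytes in ARGB_8888, 4,147,200 bytes in RGB_565, and 2,073,600 bytes in ALPHA_8. That is why dropping unused channels saves memory on the order of megabytes per bitmap.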

Recap

This part of the blog outlines why developers should consider building for Android (Go edition), a standard approach to follow while optimizing their apps, and some recommendations and learnings from Google apps to improve app memory and allocate resources appropriately. In the next part of this blog, we will talk about best practices for startup latency and app size, and the tools used by Google apps to identify and fix performance issues.
