Posted by Cam Askew and André Araujo, Software Engineers, Google Research Instance-level recognition (ILR) is the computer vision task of recognizing a specific instance of an object, rather than simply the category to which it belongs. For example, instead of labeling an image as “post-impressionist painting”, we’re interested in instance-level labels like “Starry Night Over the Rhone by Vincent van Gogh”, or “Arc de Triomphe de l'Étoile, Paris, France”, instead of simply “arch”. Instance-level recognition problems exist in many domains, like landmarks, artwork, products, or logos, and have applications in visual search apps, personal photo organization, shopping and more. Over the past several years, Google has been contributing to research on ILR with the Google Landmarks Dataset and Google Landmarks Dataset v2 (GLDv2), and novel models such as DELF and Detect-to-Retrieve.

|

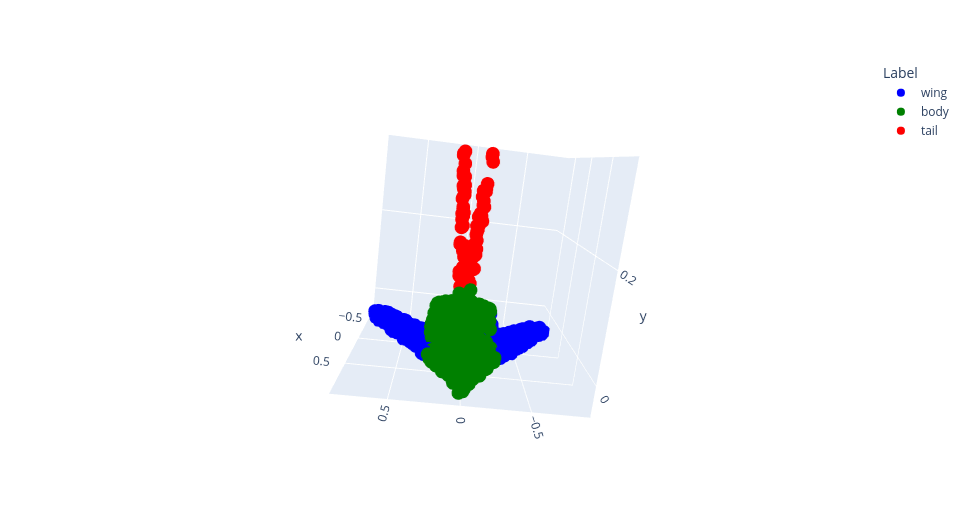

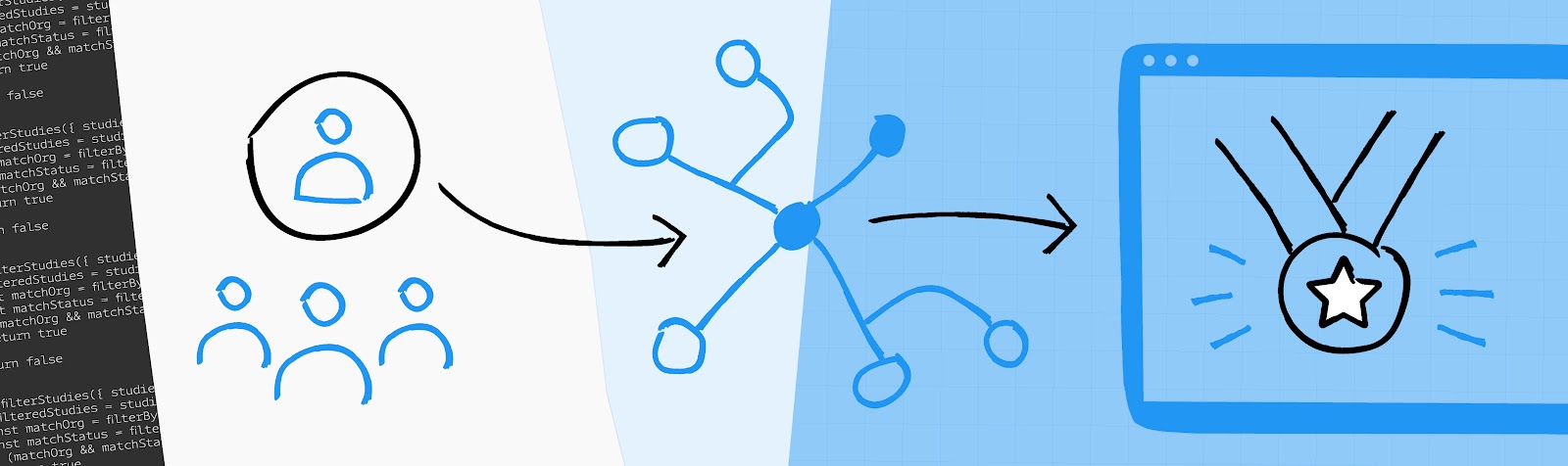

| Three types of image recognition problems, with different levels of label granularity (basic, fine-grained, instance-level), for objects from the artwork, landmark and product domains. In our work, we focus on instance-level recognition. |

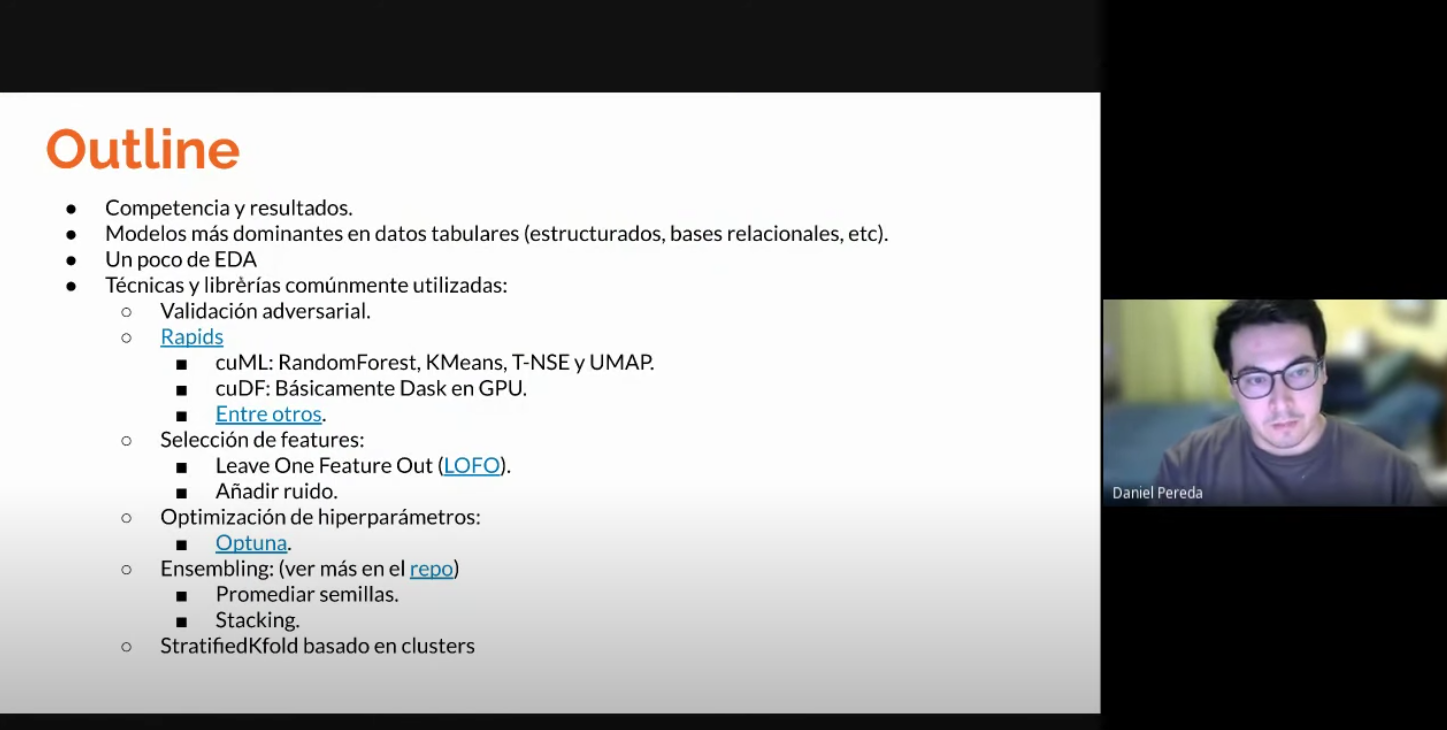

Today, we highlight some results from the Instance-Level Recognition Workshop at ECCV’20. The workshop brought together experts and enthusiasts in this area, with many fruitful discussions, some of which included our ECCV’20 paper “DEep Local and Global features” (DELG), a state-of-the-art image feature model for instance-level recognition, and a supporting open-source codebase for DELG and other related ILR techniques. Also presented were two new landmark challenges (on recognition and retrieval tasks) based on GLDv2, and future ILR challenges that extend to other domains: artwork recognition and product retrieval. The long-term goal of the workshop and challenges is to foster advancements in the field of ILR and push forward the state of the art by unifying research workstreams from different domains, which so far have mostly been tackled as separate problems.

DELG: DEep Local and Global Features

Effective image representations are the key components required to solve instance-level recognition problems. Often, two types of representations are necessary: global and local image features. A global feature summarizes the entire contents of an image, leading to a compact representation but discarding information about spatial arrangement of visual elements that may be characteristic of unique examples. Local features, on the other hand, comprise descriptors and geometry information about specific image regions; they are especially useful to match images depicting the same objects.

Currently, most systems that rely on both of these types of features need to separately adopt each of them using different models, which leads to redundant computations and lowers overall efficiency. To address this, we proposed DELG, a unified model for local and global image features.

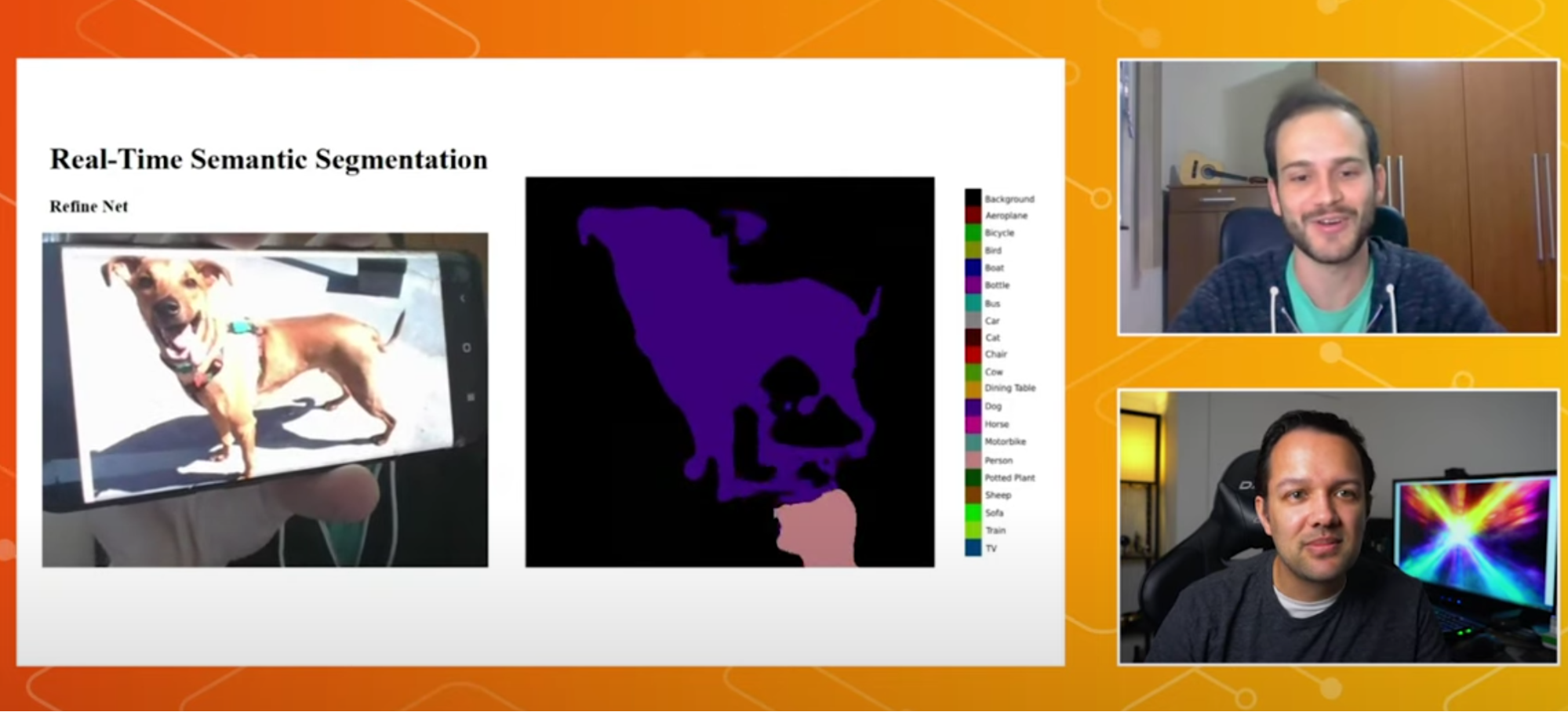

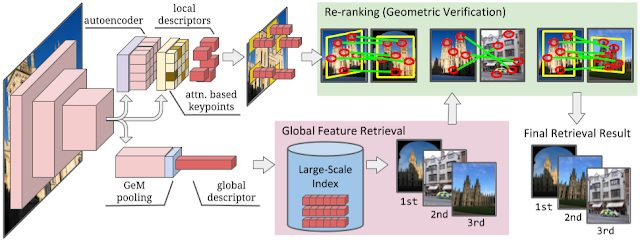

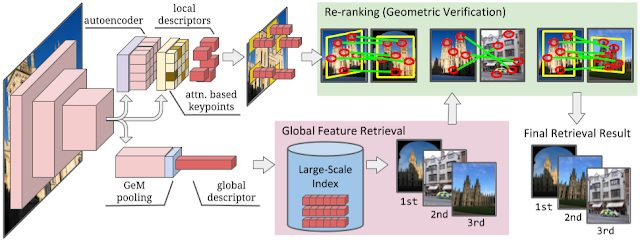

The DELG model leverages a fully-convolutional neural network with two different heads: one for global features and the other for local features. Global features are obtained using pooled feature maps of deep network layers, which in effect summarize the salient features of the input images making the model more robust to subtle changes in input. The local feature branch leverages intermediate feature maps to detect salient image regions, with the help of an attention module, and to produce descriptors that represent associated localized contents in a discriminative manner.

|

| Our proposed DELG model (left). Global features can be used in the first stage of a retrieval-based system, to efficiently select the most similar images (bottom). Local features can then be employed to re-rank top results (top, right), increasing the precision of the system. |

This novel design allows for efficient inference since it enables extraction of global and local features within a single model. For the first time, we demonstrated that such a unified model can be trained end-to-end and deliver state-of-the-art results for instance-level recognition tasks. When compared to previous global features, this method outperforms other approaches by up to 7.5% mean average precision; and for the local feature re-ranking stage, DELG-based results are up to 7% better than previous work. Overall, DELG achieves 61.2% average precision on the recognition task of GLDv2, which outperforms all except two methods of the 2019 challenge. Note that all top methods from that challenge used complex model ensembles, while our results use only a single model.

Tensorflow 2 Open-Source Codebase

To foster research reproducibility, we are also releasing a revamped open-source codebase that includes DELG and other techniques relevant to instance-level recognition, such as DELF and Detect-to-Retrieve. Our code adopts the latest Tensorflow 2 releases, and makes available reference implementations for model training & inference, besides image retrieval and matching functionalities. We invite the community to use and contribute to this codebase in order to develop strong foundations for research in the ILR field.

New Challenges for Instance Level Recognition

Focused on the landmarks domain, the Google Landmarks Dataset v2 (GLDv2) is the largest available dataset for instance-level recognition, with 5 million images spanning 200 thousand categories. By training landmark retrieval models on this dataset, we have demonstrated improvements of up to 6% mean average precision, compared to models trained on earlier datasets. We have also recently launched a new browser interface for visually exploring the GLDv2 dataset.

This year, we also launched two new challenges within the landmark domain, one focusing on recognition and the other on retrieval. These competitions feature newly-collected test sets, and a new evaluation methodology: instead of uploading a CSV file with pre-computed predictions, participants have to submit models and code that are run on Kaggle servers, to compute predictions that are then scored and ranked. The compute restrictions of this environment put an emphasis on efficient and practical solutions.

The challenges attracted over 1,200 teams, a 3x increase over last year, and participants achieved significant improvements over our strong DELG baselines. On the recognition task, the highest scoring submission achieved a relative increase of 43% average precision score and on the retrieval task, the winning team achieved a 59% relative improvement of the mean average precision score. This latter result was achieved via a combination of more effective neural networks, pooling methods and training protocols (see more details on the Kaggle competition site).

In addition to the landmark recognition and retrieval challenges, our academic and industrial collaborators discussed their progress on developing benchmarks and competitions in other domains. A large-scale research benchmark for artwork recognition is under construction, leveraging The Met’s Open Access image collection, and with a new test set consisting of guest photos exhibiting various photometric and geometric variations. Similarly, a new large-scale product retrieval competition will capture various challenging aspects, including a very large number of products, a long-tailed class distribution and variations in object appearance and context. More information on the ILR workshop, including slides and video recordings, is available on its website.

With this research, open source code, data and challenges, we hope to spur progress in instance-level recognition and enable researchers and machine learning enthusiasts from different communities to develop approaches that generalize across different domains.

Acknowledgements

The main Google contributors of this project are André Araujo, Cam Askew, Bingyi Cao, Jack Sim and Tobias Weyand. We’d like to thank the co-organizers of the ILR workshop Ondrej Chum, Torsten Sattler, Giorgos Tolias (Czech Technical University), Bohyung Han (Seoul National University), Guangxing Han (Columbia University), Xu Zhang (Amazon), collaborators on the artworks dataset Nanne van Noord, Sarah Ibrahimi (University of Amsterdam), Noa Garcia (Osaka University), as well as our collaborators from the Metropolitan Museum of Art: Jennie Choi, Maria Kessler and Spencer Kiser. For the open-source Tensorflow codebase, we’d like to thank the help of recent contributors: Dan Anghel, Barbara Fusinska, Arun Mukundan, Yuewei Na and Jaeyoun Kim. We are grateful to Will Cukierski, Phil Culliton, Maggie Demkin for their support with the landmarks Kaggle competitions. Also we’d like to thank Ralph Keller and Boris Bluntschli for their help with data collection.