Posted by Jennifer Kohl, Program Manager, Developer Community Programs

On October 16-18, thousands of developers from all over the world are coming together for DevFest 2020, the largest virtual weekend of community-led learning on Google technologies.

As people around the world continue to adapt to spending more time at home, developers yearn for community now more than ever. In years past, DevFest was a series of in-person events over a season. For 2020, the community is coming together in a whole new way – virtually – over one weekend to keep developers connected when they may want it the most.

The speakers

The magic of DevFest comes from the people who organize and speak at the events - developers with various backgrounds and skill levels, all with their own unique perspectives. In different parts of the world, you can find a DevFest session in many local languages. DevFest speakers are made up of various types of technologists, including kid developers , self-taught programmers from rural areas , and CEOs and CTOs of startups. DevFest also features a wide range of speakers from Google, Women Techmakers, Google Developer Experts, and more. Together, these friendly faces, with many different perspectives, create a unique and rich developer conference.

The sessions and their mission

Hosted by Google Developer Groups, this year’s sessions include technical talks and workshops from the community, and a keynote from Google Developers. Through these events, developers will learn how Google technologies help them develop, learn, and build together.

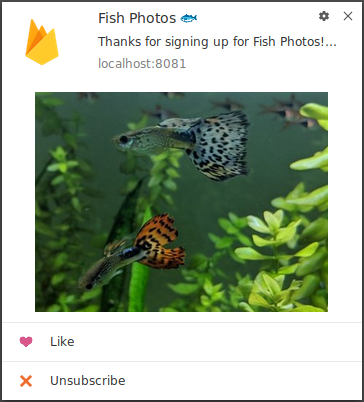

Sessions will cover multiple technologies, such as Android, Google Cloud Platform, Machine Learning with TensorFlow, Web.dev, Firebase, Google Assistant, and Flutter.

For this reason, Google Developers supports the community-led efforts of Google Developer Groups and their annual tentpole event, DevFest. Google provides esteemed speakers from the company and custom technical content produced by developers at Google. The impact of DevFest is really driven by the grassroots, passionate GDG community organizers who volunteer their time. Google Developers is proud to support them.

The attendees

During DevFest 2019, 138,000+ developers participated across 500+ DevFests in 100 countries. While 2020 is a very different year for events around the world, GDG chapters are galvanizing their communities to come together virtually for this global moment. The excitement for DevFest continues as more people seek new opportunities to meet and collaborate with like-minded, community-oriented developers in our local towns and regions.

Join the conversation on social media with #DevFest.

Sign up for DevFest at goo.gle/devfest.

Still curious? Check out these popular talks from DevFest 2019 events around the world...