When building machine learning models for real-life applications, we need to consider inputs from multiple modalities in order to capture various aspects of the world around us. For example, audio, video, and text all provide varied and complementary information about a visual input. However, building multimodal models is challenging due to the heterogeneity of the modalities. Some of the modalities might be well synchronized in time (e.g., audio, video) but not aligned with text. Furthermore, the volume of data in video and audio signals is much larger than that in text, so when combining them in multimodal models, video and audio often cannot be fully consumed and need to be disproportionately compressed. This problem is exacerbated for longer video inputs.

In “Mirasol3B: A Multimodal Autoregressive model for time-aligned and contextual modalities”, we introduce a multimodal autoregressive model (Mirasol3B) for learning across audio, video, and text modalities. The main idea is to decouple the multimodal modeling into separate focused autoregressive models, processing the inputs according to the characteristics of the modalities. Our model consists of an autoregressive component for the time-synchronized modalities (audio and video) and a separate autoregressive component for modalities that are not necessarily time-aligned but are still sequential, e.g., text inputs, such as a title or description. Additionally, the time-aligned modalities are partitioned in time so that local features can be jointly learned. In this way, audio-video inputs are modeled in time and are allocated comparatively more parameters than in prior work. With this approach, we can readily handle much longer videos (e.g., 128–512 frames) compared to other multimodal models. At 3B parameters, Mirasol3B is compact compared to prior Flamingo (80B) and PaLI-X (55B) models. Finally, Mirasol3B outperforms the state-of-the-art approaches on video question answering (video QA), long video QA, and audio-video-text benchmarks.

Coordinating time-aligned and contextual modalities

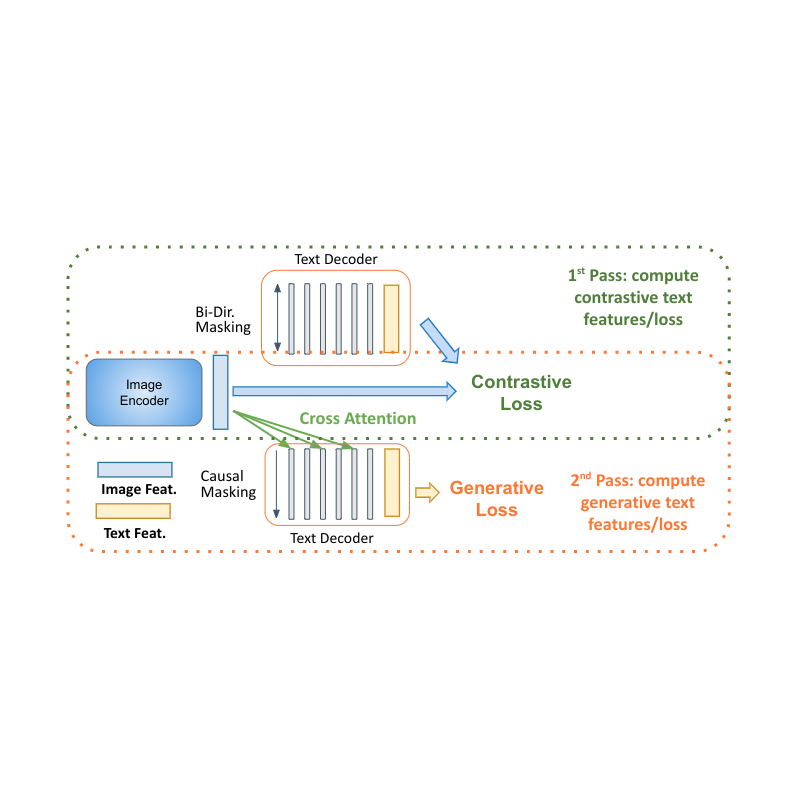

Video, audio, and text are diverse modalities with distinct characteristics. For example, video is a spatio-temporal visual signal with 30–100 frames per second, but due to the large volume of data, typically only 32–64 frames per video are consumed by current models. Audio is a one-dimensional temporal signal obtained at a much higher frequency than video (e.g., at 16 kHz), whereas text inputs, which apply to the whole video, are typically sequences of 200–300 words and serve as context for the audio-video inputs. To that end, we propose a model consisting of an autoregressive component that fuses and jointly learns the time-aligned signals, which occur at high frequencies and are roughly synchronized, and another autoregressive component for processing non-aligned signals. Learning between the components for the time-aligned and contextual modalities is coordinated via cross-attention mechanisms that allow the two to exchange information while learning in a sequence without having to synchronize them in time.

Time-aligned autoregressive modeling of video and audio

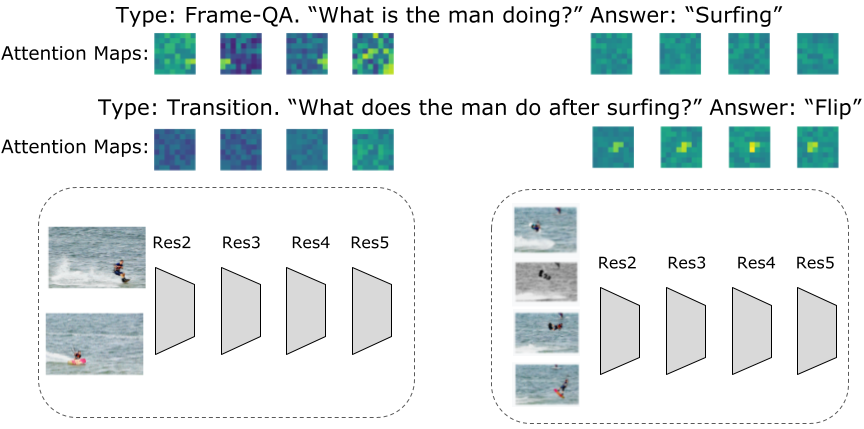

Long videos can convey rich information and activities happening in a sequence. However, present models approach video modeling by extracting all the information at once, without sufficient temporal information. To address this, we apply an autoregressive modeling strategy where we condition jointly learned video and audio representations for one time interval on feature representations from previous time intervals. This preserves temporal information.

The video is first partitioned into smaller video chunks. Each chunk can contain 4–64 frames. The features corresponding to each chunk are then processed by a learning module, called the Combiner (described below), which generates a joint audio and video feature representation at the current step. This step extracts and compacts the most important information per chunk. Next, we process this joint feature representation with an autoregressive Transformer, which applies attention to the previous feature representations and generates the joint feature representation for the next step. Consequently, the model learns not only how to represent each individual chunk, but also how the chunks relate temporally.

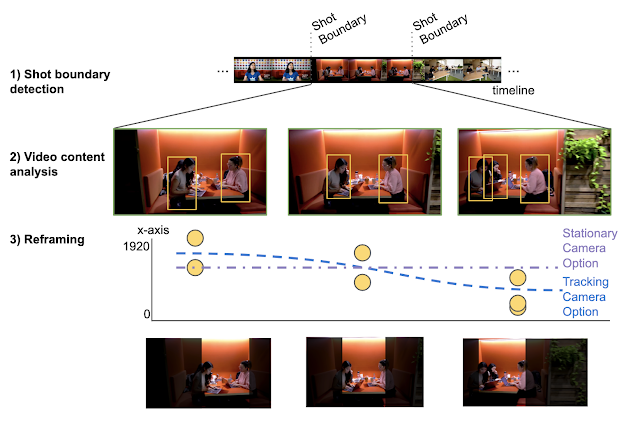

We use autoregressive modeling of the audio and video inputs, partitioning them in time and learning joint feature representations, which are then autoregressively learned in sequence.
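As a rough illustration of the partitioning step (not the actual Mirasol3B code), the sketch below splits a video's frame indices into equal-sized chunks and lists, for each step, which chunks an autoregressive model would be allowed to condition on. The function names, the equal-chunk assumption, and the 128-frame, 8-chunk setup are hypothetical choices made for the example.

```python
from typing import List, Tuple


def partition_into_chunks(num_frames: int, num_chunks: int) -> List[Tuple[int, int]]:
    """Split frame indices [0, num_frames) into contiguous, equal-sized chunks.

    Returns (start, end) pairs with `end` exclusive. Equal-sized chunks are an
    assumption made for this sketch.
    """
    if num_frames <= 0 or num_chunks <= 0 or num_frames % num_chunks != 0:
        raise ValueError("num_frames must be a positive multiple of num_chunks")
    size = num_frames // num_chunks
    return [(i * size, (i + 1) * size) for i in range(num_chunks)]


def causal_context(num_chunks: int) -> List[List[int]]:
    """For each step t, list the chunk indices that step may condition on.

    Step t sees chunks 0..t (earlier chunks plus the current one), never later ones.
    """
    return [list(range(t + 1)) for t in range(num_chunks)]


# Hypothetical setup: 128 frames split into 8 chunks of 16 frames each.
chunks = partition_into_chunks(num_frames=128, num_chunks=8)
context = causal_context(len(chunks))
print(chunks[:2])   # first two chunks as (start, end) frame ranges
print(context[2])   # chunks visible when processing the third chunk
```

In a real model, each chunk's features would be compressed before the autoregressive step, so the sequence the Transformer attends over grows with the number of chunks rather than with the number of frames.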

Modeling long videos with a modality combiner

To combine the signals from the video and audio information in each video chunk, we propose a learning module called the Combiner. Video and audio signals are aligned by taking the audio inputs that correspond to a specific video timeframe. We then process video and audio inputs spatio-temporally, extracting information particularly relevant to changes in the inputs (for videos we use sparse video tubes, and for audio we apply the spectrogram representation, both of which are processed by a Vision Transformer). We concatenate and input these features to the Combiner, which is designed to learn a new feature representation capturing both these inputs. To address the challenge of the large volume of data in video and audio signals, another goal of the Combiner is to reduce the dimensionality of the joint video/audio inputs, which is done by selecting a smaller number of output features to be produced. The Combiner can be implemented simply as a causal Transformer, which processes the inputs in the direction of time, i.e., using only inputs of the prior steps or the current one. Alternatively, the Combiner can have a learnable memory, described below.
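To make the alignment step concrete, here is a minimal sketch of how one might select the audio samples that fall inside a video chunk's time window. The helper name, the 4-second chunk length, and the 16,000 samples-per-second rate are assumptions for illustration, not details taken from the model.

```python
from typing import Tuple


def audio_span_for_chunk(start_sec: float, end_sec: float, sample_rate: int) -> Tuple[int, int]:
    """Return the [first, last) audio sample indices covering a chunk's time window."""
    if end_sec <= start_sec:
        raise ValueError("end_sec must be greater than start_sec")
    first = int(round(start_sec * sample_rate))
    last = int(round(end_sec * sample_rate))
    return first, last


# Hypothetical setup: a 32-second clip split into 8 chunks of 4 seconds each.
sample_rate = 16_000   # assumed audio sampling rate
chunk_seconds = 4.0    # assumed chunk duration
spans = [
    audio_span_for_chunk(i * chunk_seconds, (i + 1) * chunk_seconds, sample_rate)
    for i in range(8)
]
print(spans[0], spans[-1])
```

Each span can then be converted to a spectrogram and paired with the video features of the same chunk before both are passed on for joint processing.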

Combiner styles

A simple version of the Combiner adapts a Transformer architecture. More specifically, all audio and video features from the current chunk (and optionally prior chunks) are input to a Transformer and projected to a lower dimensionality, i.e., a smaller number of features are selected as the output “combined” features. While Transformers are not typically used in this context, we find them effective for reducing the dimensionality of the input features by selecting the last m outputs of the Transformer, where m is the desired output dimension (shown below). Alternatively, the Combiner can have a memory component. For example, we use the Token Turing Machine (TTM), which supports a differentiable memory unit, accumulating and compressing features from all previous timesteps. Using a fixed memory allows the model to work with a more compact set of features at every step, rather than processing all the features from previous steps, which reduces computation.

We use a simple Transformer-based Combiner (left) and a Memory Combiner (right), based on the Token Turing Machine (TTM), which uses memory to compress previous history of features.
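The "keep the last m outputs" idea can be sketched in a few lines. In the toy example below, `causal_mix` is a stand-in for a causal Transformer: it uses uniform, unlearned causal averaging purely to keep the code self-contained, and the token shapes and values are hypothetical. Only the selection pattern is meant to mirror the description above.

```python
from typing import List

Vector = List[float]


def causal_mix(tokens: List[Vector]) -> List[Vector]:
    """Stand-in for a causal Transformer: output i is the mean of inputs 0..i.

    This is uniform causal averaging with no learned weights, used only so the
    sketch runs without a deep learning library.
    """
    dim = len(tokens[0])
    running = [0.0] * dim
    outputs = []
    for i, tok in enumerate(tokens, start=1):
        running = [r + x for r, x in zip(running, tok)]
        outputs.append([r / i for r in running])
    return outputs


def combine(video_tokens: List[Vector], audio_tokens: List[Vector], m: int) -> List[Vector]:
    """Concatenate video and audio tokens, mix causally, keep the last m outputs."""
    tokens = video_tokens + audio_tokens
    if not 0 < m <= len(tokens):
        raise ValueError("m must be between 1 and the number of input tokens")
    return causal_mix(tokens)[-m:]


# Hypothetical chunk: 6 video tokens and 2 audio tokens, each of width 2.
video = [[float(i), 1.0] for i in range(6)]
audio = [[10.0, 0.0], [10.0, 0.0]]
combined = combine(video, audio, m=4)
print(len(video) + len(audio), "->", len(combined))
```

One plausible reason the last positions are a natural choice is that, under causal processing, they are the ones that have seen the most of the input sequence.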

Results

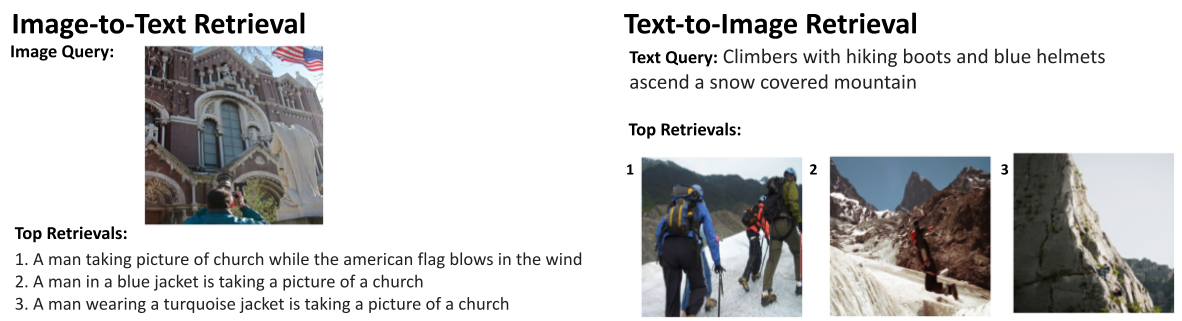

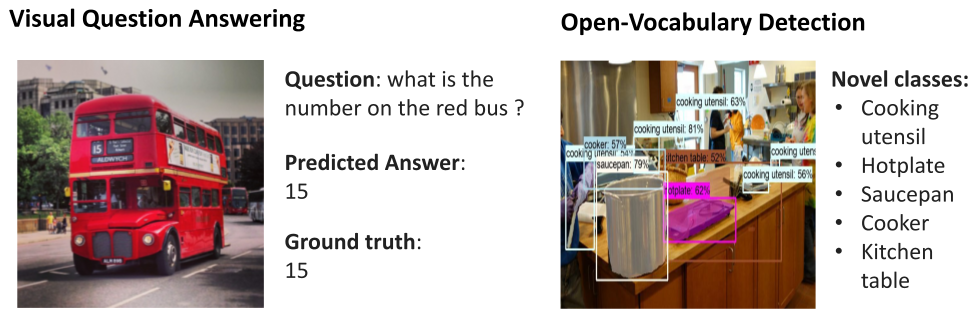

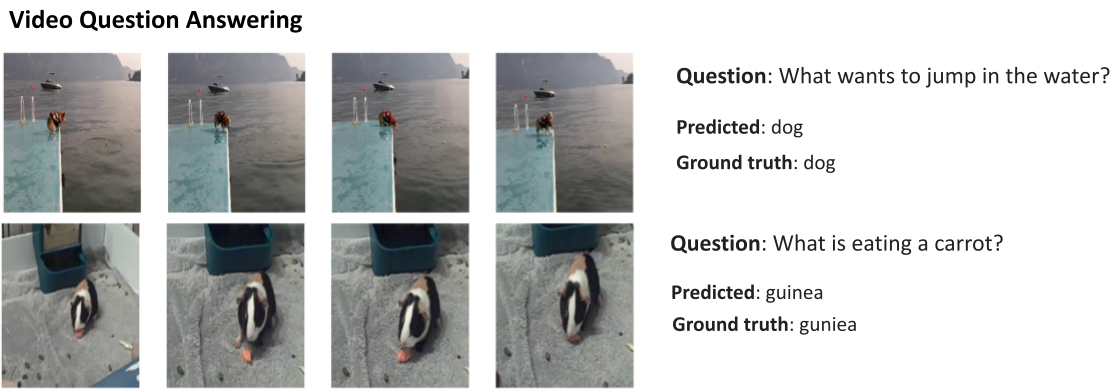

We evaluate our approach on several benchmarks, MSRVTT-QA, ActivityNet-QA and NeXT-QA, for the video QA task, where a text-based question about a video is issued and the model needs to answer. This evaluates the ability of the model to understand both the text-based question and video content, and to form an answer, focusing on only relevant information. Of these benchmarks, the latter two target long video inputs and feature more complex questions.

We also evaluate our approach in the more challenging open-ended text generation setting, wherein the model generates the answers in an unconstrained fashion as free form text, requiring an exact match to the ground truth answer. While this stricter evaluation counts synonyms as incorrect, it may better reflect a model’s ability to generalize.
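For readers unfamiliar with exact-match scoring, the following is a minimal sketch of such a metric. The normalization (lowercasing and collapsing whitespace) is an assumption for the example; each benchmark defines its own protocol, and the sample answers are made up.

```python
from typing import List


def exact_match_accuracy(predictions: List[str], references: List[str]) -> float:
    """Fraction of predictions that equal the reference after light normalization."""
    if not references or len(predictions) != len(references):
        raise ValueError("predictions and references must be non-empty and equal in length")

    def normalize(text: str) -> str:
        # Assumed normalization: lowercase and collapse runs of whitespace.
        return " ".join(text.lower().split())

    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(references)


# Made-up answers: the second prediction is a near-synonym and still counts as wrong.
preds = ["Guitar", "a dog", "kitchen"]
refs = ["guitar", "puppy", "kitchen"]
score = exact_match_accuracy(preds, refs)
print(f"{score:.3f}")
```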

Our results indicate improved performance over state-of-the-art approaches for most benchmarks, including all with open-ended generation evaluation. This is notable considering our model has only 3B parameters, considerably fewer than prior approaches, e.g., Flamingo 80B. We used only video and text inputs to be comparable to other work. Importantly, our model can process 512 frames without needing to increase the model parameters, which is crucial for handling longer videos. Finally, with the TTM Combiner, we see better or comparable performance while reducing compute by 18%.

Results on the MSRVTT-QA (video QA) dataset.

Results on the NeXT-QA benchmark, which features long videos for the video QA task.

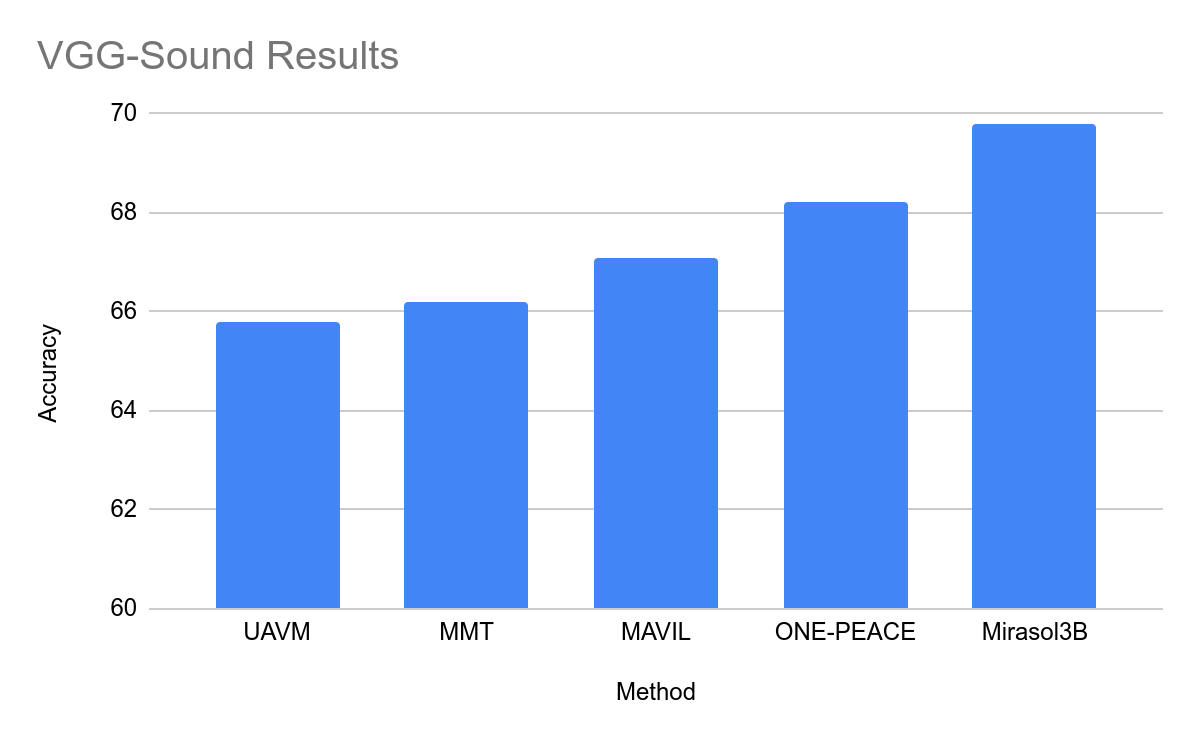

Results on audio-video benchmarks

Results on the popular audio-video datasets VGG-Sound and EPIC-SOUNDS are shown below. Since these benchmarks are classification-only, we treat them as an open-ended text generative setting where our model produces the text of the desired class; e.g., for the class ID corresponding to the “playing drums” activity, we expect the model to generate the text “playing drums”. In some cases our approach outperforms the prior state of the art by large margins, even though our model outputs the results in the generative open-ended setting.

Results on the VGG-Sound (audio-video QA) dataset.

Results on the EPIC-SOUNDS (audio-video QA) dataset.
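Treating classification as text generation can be scored by mapping the generated string back to a class ID, as in the sketch below. The label set is hypothetical apart from “playing drums”, and the rule that unrecognized text counts as an error is an assumption about how such scoring could be done.

```python
from typing import Dict, List, Optional

# Hypothetical label set; real benchmarks define their own class names and IDs.
CLASS_NAMES: Dict[int, str] = {0: "playing drums", 1: "playing guitar", 2: "dog barking"}
NAME_TO_ID: Dict[str, int] = {name: idx for idx, name in CLASS_NAMES.items()}


def text_to_class_id(generated: str) -> Optional[int]:
    """Map generated text to a class ID, or None if it matches no class name exactly."""
    return NAME_TO_ID.get(generated.strip().lower())


def classification_accuracy(generated: List[str], target_ids: List[int]) -> float:
    """Accuracy where text that maps to no class, or to the wrong class, is an error."""
    if not target_ids or len(generated) != len(target_ids):
        raise ValueError("generated and target_ids must be non-empty and equal in length")
    correct = sum(text_to_class_id(g) == t for g, t in zip(generated, target_ids))
    return correct / len(target_ids)


# Made-up model outputs: "drumming" is close in meaning but matches no class name.
outputs = ["playing drums", "drumming", "dog barking"]
targets = [0, 0, 2]
accuracy = classification_accuracy(outputs, targets)
print(f"{accuracy:.3f}")
```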

Benefits of autoregressive modeling

We conduct an ablation study comparing our approach to a set of baselines that use the same input information but with standard methods (i.e., without autoregression and the Combiner). We also compare the effects of pre-training. Because standard methods are ill-suited for processing longer video, this experiment is conducted with only 32 frames and four chunks across all settings, for a fair comparison. We see that Mirasol3B’s improvements still hold for relatively short videos.

Ablation experiments comparing the main components of our model. Using the Combiner, the autoregressive modeling, and pre-training all improve performance.

Conclusion

We present a multimodal autoregressive model that addresses the challenges associated with the heterogeneity of multimodal data by coordinating the learning between time-aligned and time-unaligned modalities. Time-aligned modalities are further processed autoregressively in time with a Combiner, controlling the sequence length and producing powerful representations. We demonstrate that a relatively small model can successfully represent long video and effectively combine with other modalities. We outperform the state-of-the-art approaches (including some much bigger models) on video- and audio-video question answering.

Acknowledgements

This research is co-authored by AJ Piergiovanni, Isaac Noble, Dahun Kim, Michael Ryoo, Victor Gomes, and Anelia Angelova. We thank Claire Cui, Tania Bedrax-Weiss, Abhijit Ogale, Yunhsuan Sung, Ching-Chung Chang, Marvin Ritter, Kristina Toutanova, Ming-Wei Chang, Ashish Thapliyal, Xiyang Luo, Weicheng Kuo, Aren Jansen, Bryan Seybold, Ibrahim Alabdulmohsin, Jialin Wu, Luke Friedman, Trevor Walker, Keerthana Gopalakrishnan, Jason Baldridge, Radu Soricut, Mojtaba Seyedhosseini, Alexander D'Amour, Oliver Wang, Paul Natsev, Tom Duerig, Younghui Wu, Slav Petrov, and Zoubin Ghahramani for their help and support. We also thank Tom Small for preparing the animation.