Verible

The

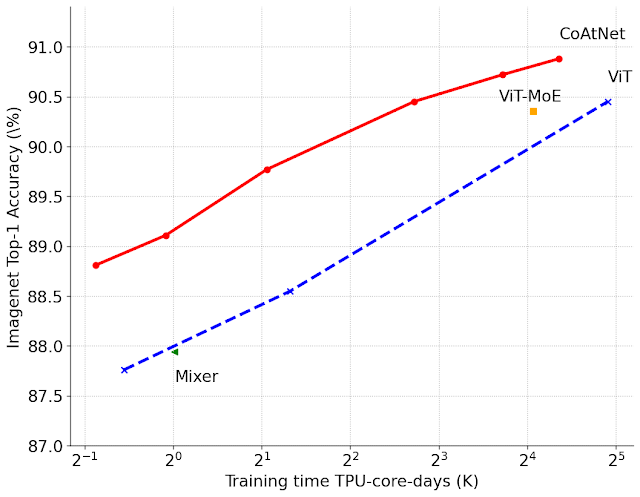

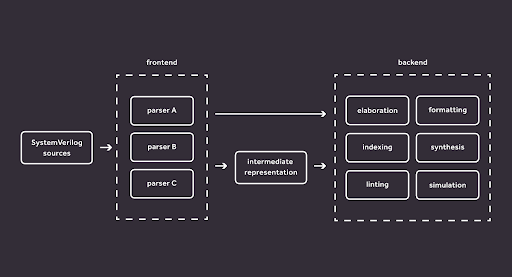

Verible project originated at Google; its main mission is to make SystemVerilog easily and quickly parsable for a wide variety of applications mostly focusing on developer tools.

Verible is a set of tools built on a common SystemVerilog parsing engine. Each tool exposes a command line interface, which makes it easy to integrate with other tools for daily use or with CI systems for automated testing and deployment.

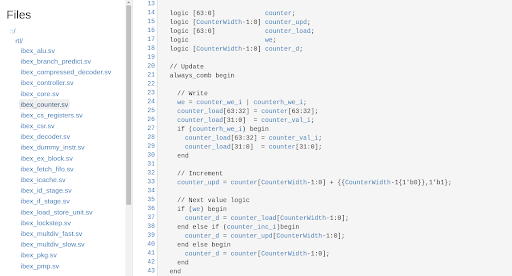

Antmicro has been involved in the development of Verible since its initial open source release and we now provide a significant portion of current development efforts, helping adapt it for use in various open source projects or commercial environments that use SystemVerilog. One notable user is the security-focused OpenTitan project, which has driven many interesting developments and provides a good showcase of Verible's capabilities, being completely open source, well documented, fairly complex, and used in real applications.

Linter

One of the most common use cases for Verible is linting. The linter analyzes code for patterns and constructs that are deemed undesirable according to the implemented lint rules. The rules follow authoritative style guides that can be enforced on a project or company level in various SystemVerilog projects.

The rules range from simple ones, like making sure the module name matches the file name, to more sophisticated ones, like checking variable naming conventions (all caps, snake case, specific prefix or suffix etc.) or making sure the labels after the begin and end statements match.
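To make the simplest rule above concrete, here is a minimal Python sketch of a "module name matches file name" check. It is not how Verible implements the rule: Verible works on a full parse tree, while this toy uses a regular expression, and the function name `module_matches_file` is hypothetical.

```python
import re
from pathlib import Path
from typing import List

# Toy pattern: a real linter inspects the parse tree, not raw text,
# so comments, strings and macros would confuse this regex.
MODULE_RE = re.compile(r"^\s*module\s+([A-Za-z_][A-Za-z0-9_$]*)", re.MULTILINE)


def module_matches_file(path: str, source: str) -> List[str]:
    """Return a message if no declared module is named after the file."""
    stem = Path(path).stem
    names = MODULE_RE.findall(source)
    if names and stem not in names:
        found = ", ".join(names)
        return [f"{path}: no module named '{stem}' (found: {found})"]
    return []


if __name__ == "__main__":
    src = "module alu(input logic clk);\nendmodule\n"
    print(module_matches_file("core.sv", src))  # one message
    print(module_matches_file("alu.sv", src))   # no messages
```

The sketch only shows the shape of such a rule: extract a fact from the source, compare it against a convention, and report a message when they disagree.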

A full list of rules can be found in the Verible lint documentation and is constantly growing. Usage is very simple:

$ verible-verilog-lint --ruleset all core.sv

core.sv:3:11: Interface names must use lower_snake_case naming convention and end with _if. [Style: interface-conventions] [interface-name-style]

The output of the linter is easy to understand, as the way issues are reported to the user is modeled after popular programming language compilers.
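Because the report format resembles compiler diagnostics, it is straightforward to post-process, for example in a CI job. The Python sketch below parses a line shaped like the sample above. The pattern is inferred only from that single sample line, so other output variants may not match, and the `Finding` type and `parse_finding` function are hypothetical names.

```python
import re
from typing import NamedTuple, Optional


class Finding(NamedTuple):
    path: str
    line: int
    column: int
    message: str
    rule: str


# Assumed shape: <path>:<line>:<col>: <message> [<rule-name>]
# Derived from the one sample line in this post, not from a format spec.
LINE_RE = re.compile(
    r"^(?P<path>[^:]+):(?P<line>\d+):(?P<col>\d+): "
    r"(?P<msg>.*) \[(?P<rule>[\w-]+)\]$"
)


def parse_finding(text: str) -> Optional[Finding]:
    """Parse one linter report line, or return None if it does not match."""
    m = LINE_RE.match(text.strip())
    if m is None:
        return None
    return Finding(m["path"], int(m["line"]), int(m["col"]), m["msg"], m["rule"])


if __name__ == "__main__":
    sample = (
        "core.sv:3:11: Interface names must use lower_snake_case naming "
        "convention and end with _if. [Style: interface-conventions] "
        "[interface-name-style]"
    )
    print(parse_finding(sample))
```

A CI wrapper could group such findings by `rule` or by `path` before reporting them.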

The linter is highly configurable. It is possible to select which rules are checked, and some rules allow for detailed configuration (e.g. max line length).

Rules can also be selectively waived in specific files or at specific lines or even by regex matching. In addition, some rules can be automatically fixed by the linter itself.

Formatter

The Verible formatter complements the linter. It automatically detects and fixes formatting issues such as improper indentation or alignment. Unlike the linter, it only deals with issues that have no lexical impact on the source code.

The formatter also comes with useful helper scripts for selective and interactive reformatting (e.g. only format files that changed according to git, ask before applying changes to each chunk).

A toolset that consists of both the linter and the formatter can effectively remove all the discussions about styling, preferences and conventions from all pull requests. Developers can then focus solely on the technical aspects of the proposed changes.

Indexing is widely adopted for many larger open source software projects.

Thanks to Verible, it is now possible to do the same in the world of open source HDL designs, and of course private, company-wide deployments like this are also possible.

Surelog and UHDM

SystemVerilog is a powerful language but also complex. So far no open source tools have been able to support it in full. Implementing it separately for each project such as the Yosys synthesis tool or the Verilator simulator would take a colossal amount of time, and that’s where Surelog and UHDM come in.

Surelog, originally created and led by Alain Dargelas, aims to be a fully-featured SystemVerilog 2017 preprocessor, parser, and elaborator. It’s a modern tool and thus follows the current version of the SV standard without unnecessary deviations or legacy baggage.

What’s interesting is that Surelog is only a language frontend designed to integrate well with other tools—it outputs an elaborated design in an intermediate format called UHDM.

UHDM stands for Universal Hardware Data Model, and it’s both a file format for storing hardware designs and a library able to manipulate this format. A client application can access the data using VPI, which is a standard programming interface for SystemVerilog.

What this means is that the work required to create a SystemVerilog parser only needs to be done once, and other tools can use that parser via UHDM. This is much easier than implementing a full SystemVerilog parser within each tool. What’s more, any improvements in the unified parser will provide benefits for all client applications. Finally, any other parser is free to emit UHDM as well, so in the future we might see e.g. a UHDM backend for Verible.

Just like Verible, both Surelog and UHDM have recently been contributed to CHIPS Alliance to drive broader adoption. We are actively contributing to both projects, especially around the integrations with tooling such as Yosys and Verilator, and practical use in open source and customer projects.

Recent Antmicro contributions adding UHDM frontends for Yosys and Verilator enabled Ibex synthesis and simulation. The complete OpenTitan project is the next milestone.

The Surelog/UHDM/Yosys flow enabling SystemVerilog synthesis without the necessity of converting the HDL code to Verilog is a great improvement for open source ASIC build flows such as OpenROAD’s OpenLane flow (which we also support commercially). Removing the code conversion step enables the developers to perform e.g. circuit equivalence validation to check the correctness of the design.

More information about Surelog/UHDM and Verible can be found in a dedicated CHIPS Alliance presentation that was recently given by Henner Zeller, Google’s Verible lead.

UVM is in the picture

No open source ASIC design toolkit can be complete without support for Universal Verification Methodology, or UVM, which is one of the most widespread verification methodologies for large-scale ASIC design. This has also been an underrepresented area in open source tooling and changing that is an enormous undertaking, but working together with our customers, most notably Western Digital, we have been making progress on that front as well.

Across the ASIC development landscape, UVM verification is currently performed with proprietary simulators, but a more easily distributable, collaborative and open ecosystem is needed to close the feedback loop between (emerging) open source design approaches and verification. Verilator is an extremely popular choice for other system development use cases but it has historically not focused on UVM-style verification. Other styles of verification, such as the very interesting and popular Python-based cocotb framework maintained by FOSSi Foundation, have been enabled in Verilator. But support for UVM, partly due to the size and complexity of the methodology, has been notably absent.

One of the features missing from Verilator but needed for UVM is SystemVerilog stratified scheduling, which is a set of rules specified in the standard that govern the way time progresses in a simulation, as well as the order of operations. A SystemVerilog simulation is divided into smaller steps called time slots, and each time slot is further divided into multiple regions. Specific events can only happen in certain regions, and some regions can reoccur in a single time slot.
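The idea of regions inside a time slot can be illustrated with a small Python sketch. It models only a simplified subset of the regions named in the standard, and the `TimeSlot` class and `demo` function are hypothetical; it says nothing about how Verilator or any other simulator implements scheduling. The scheduler always runs the earliest non-empty region, so an update applied in the NBA (non-blocking assignment) region that wakes another process causes the active region to run again within the same time slot.

```python
from collections import deque
from typing import Callable, Deque, Dict, List

# Simplified subset of region names, in evaluation order.
REGIONS = ["active", "inactive", "nba", "observed", "reactive"]

Event = Callable[["TimeSlot"], None]


class TimeSlot:
    def __init__(self) -> None:
        self.queues: Dict[str, Deque[Event]] = {r: deque() for r in REGIONS}
        self.trace: List[str] = []  # order in which regions were visited

    def schedule(self, region: str, event: Event) -> None:
        self.queues[region].append(event)

    def run(self) -> None:
        while True:
            # Always restart from the earliest region that has work.
            region = next((r for r in REGIONS if self.queues[r]), None)
            if region is None:
                return
            self.trace.append(region)
            pending = self.queues[region]
            while pending:
                pending.popleft()(self)


def demo():
    state = {"a": 0, "b": 1}
    slot = TimeSlot()

    def on_change(s: TimeSlot) -> None:
        # Process woken by the update; it runs in the active region.
        state["seen"] = (state["a"], state["b"])

    def swap(s: TimeSlot) -> None:
        # Like `a <= b; b <= a;`: sample now, apply in the NBA region.
        new_a, new_b = state["b"], state["a"]

        def update(s2: TimeSlot) -> None:
            state["a"], state["b"] = new_a, new_b
            s2.schedule("active", on_change)

        s.schedule("nba", update)

    slot.schedule("active", swap)
    slot.run()
    return state, slot.trace


if __name__ == "__main__":
    print(demo())
```

In this toy run the active region is visited twice, once before and once after the NBA region, which is the kind of region re-entry described above.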

Until recently, Verilator had implemented only a small subset of these rules, as all scheduling was being done at compilation time. Spearheading a long-standing development effort within CHIPS Alliance, in collaboration with the maintainer of Verilator, Wilson Snyder, we have built a proof-of-concept version of Verilator with a dynamic scheduler, which manages the occurrence of certain events at runtime, extending the stratified scheduling support. More details can be found in Antmicro’s presentation for the inaugural CHIPS Alliance Deep Dive Cafe Talk.

Another feature required for UVM is constrained randomization, which allows generating random inputs to feed to a design in order to thoroughly test it. Unlike unconstrained randomization, which is already provided by Verilator, it allows the user to specify some rules for input generation, thus limiting the possible value space and making sure that the input makes sense. Work on adding this to Verilator has already started, although the feature is still in its infancy. There are many other features on the roadmap which will eventually enable practical UVM support—stay tuned with our CHIPS Alliance events to follow that development.
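As a rough illustration of the difference, the Python sketch below draws random values and keeps only those that satisfy user-supplied rules. This is plain rejection sampling, chosen for brevity; simulators typically use constraint solvers instead, and nothing here reflects Verilator's implementation. The field names (`addr`, `length`) and the `randomize` function are hypothetical.

```python
import random
from typing import Callable, Dict, List, Tuple

Constraint = Callable[[Dict[str, int]], bool]


def randomize(
    ranges: Dict[str, Tuple[int, int]],
    constraints: List[Constraint],
    rng: random.Random,
    max_tries: int = 10_000,
) -> Dict[str, int]:
    """Draw values uniformly per field until every constraint holds."""
    for _ in range(max_tries):
        candidate = {
            name: rng.randint(lo, hi) for name, (lo, hi) in ranges.items()
        }
        if all(check(candidate) for check in constraints):
            return candidate
    raise RuntimeError("no solution found within max_tries")


if __name__ == "__main__":
    rng = random.Random(1)  # seeded so runs are repeatable
    txn = randomize(
        ranges={"addr": (0, 255), "length": (1, 16)},
        constraints=[
            lambda v: v["addr"] % 4 == 0,               # word-aligned address
            lambda v: v["addr"] + v["length"] <= 256,   # stay inside the region
        ],
        rng=rng,
    )
    print(txn)
```

Dropping the `constraints` list gives the unconstrained case: every draw is accepted, including inputs that make no sense for the design under test.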

What next?

Support for SystemVerilog parsers, for the intermediate format, and for their respective backends and integrations with various tooling, as well as for UVM is now under heavy development. If you would like to see more effort put into a specific area, reach out to us at [email protected]. Antmicro offers commercial support services to extend the flows we’ve briefly presented here to various practical applications and designs, and to effectively integrate this approach into people’s workflows.

Combined with our cloud expertise, this lets Antmicro customers benefit from a complete and industry-proven methodology that scales between teams and across on-premise and cloud installations, transforming chip design workflows to be more software-driven and collaborative. To take advantage of open source solutions with tools like Verilator, Yosys, OpenROAD and others, tell us about your use case and we will see what can be done today.

If you are interested in collaborating on the development of SystemVerilog-focused and other open hardware tooling, join CHIPS Alliance and participate in our workgroups and help us push innovation in ASIC design forward.

Originally posted on the Antmicro blog.

By guest author Michael Gielda, Antmicro, and Tim Ansell, Software Engineer