LTS-114 is being updated in the LTS channel to 114.0.5735.343 (Platform Version: 15437.81.0) for most ChromeOS devices. Want to know more about Long Term Support? Click here.

Chrome Dev for Desktop Update

The Dev channel has been updated to 122.0.6170.3 for Windows, Mac and Linux.

A partial list of changes is available in the Git log. Interested in switching release channels? Find out how. If you find a new issue, please let us know by filing a bug. The community help forum is also a great place to reach out for help or learn about common issues.

Prudhvi Bommana

Google Chrome

Source: Google Chrome Releases

Sparsity-preserving differentially private training

Large embedding models have emerged as a fundamental tool for various applications in recommendation systems [1, 2] and natural language processing [3, 4, 5]. Such models enable the integration of non-numerical data into deep learning models by mapping categorical or string-valued input attributes with large vocabularies to fixed-length representation vectors using embedding layers. These models are widely deployed in personalized recommendation systems and achieve state-of-the-art performance in language tasks, such as language modeling, sentiment analysis, and question answering. In many such scenarios, privacy is an equally important feature when deploying those models. As a result, various techniques have been proposed to enable private data analysis. Among those, differential privacy (DP) is a widely adopted definition that limits exposure of individual user information while still allowing for the analysis of population-level patterns.

For training deep neural networks with DP guarantees, the most widely used algorithm is DP-SGD (DP stochastic gradient descent). One key component of DP-SGD is adding Gaussian noise to every coordinate of the gradient vectors during training. However, this creates scalability challenges for large embedding models: they rely on gradient sparsity for efficient training, and adding noise to all the coordinates destroys that sparsity.

To mitigate this gradient sparsity problem, in “Sparsity-Preserving Differentially Private Training of Large Embedding Models” (to be presented at NeurIPS 2023), we propose a new algorithm called adaptive filtering-enabled sparse training (DP-AdaFEST). At a high level, the algorithm maintains the sparsity of the gradient by selecting only a subset of feature rows to which noise is added at each iteration. The key is to make such selections differentially private so that a three-way balance is achieved among the privacy cost, the training efficiency, and the model utility. Our empirical evaluation shows that DP-AdaFEST achieves a substantially sparser gradient, with a reduction in gradient size of over 10⁵X compared to the dense gradient produced by standard DP-SGD, while maintaining comparable levels of accuracy. This gradient size reduction could translate into a 20X wall-clock time improvement.

Overview

To better understand the challenges and our solutions to the gradient sparsity problem, let us start with an overview of how DP-SGD works during training. As illustrated by the figure below, DP-SGD operates by clipping the gradient contribution from each example in the current random subset of samples (called a mini-batch), and adding coordinate-wise Gaussian noise to the average gradient during each iteration of stochastic gradient descent (SGD). DP-SGD has demonstrated its effectiveness in protecting user privacy while maintaining model utility in a variety of applications [6, 7].
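To make the DP-SGD step concrete, here is a minimal NumPy sketch. It assumes per-example gradients are already materialized as one dense array, and `dp_sgd_step`, `clip_norm`, and `noise_multiplier` are illustrative names, not the API of any particular library. The toy embedding table also previews the sparsity problem: the clipped sum touches only a few rows, but the noise makes every coordinate non-zero.

```python
import numpy as np

def dp_sgd_step(per_example_grads, clip_norm, noise_multiplier, rng):
    """Return one DP-SGD gradient estimate from per-example gradients of shape (B, ...)."""
    batch_size = per_example_grads.shape[0]
    # Clip each example's gradient so that its L2 norm is at most clip_norm.
    norms = np.linalg.norm(per_example_grads.reshape(batch_size, -1), axis=1)
    factors = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = per_example_grads * factors.reshape((batch_size,) + (1,) * (per_example_grads.ndim - 1))
    # Add N(0, (noise_multiplier * clip_norm)^2) to every coordinate of the summed gradient,
    # then average; equivalent to adding noise with std noise_multiplier * clip_norm / B to the mean.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=clipped.shape[1:])
    return (clipped.sum(axis=0) + noise) / batch_size

# A toy 1000 x 8 embedding table; each of 4 examples looks up a single row.
rng = np.random.default_rng(0)
grads = np.zeros((4, 1000, 8))
for i, row in enumerate([3, 3, 17, 512]):
    grads[i, row, :] = 1.0

print(np.count_nonzero(dp_sgd_step(grads, 1.0, 0.0, rng)))  # 24: only rows 3, 17 and 512
print(np.count_nonzero(dp_sgd_step(grads, 1.0, 1.0, rng)))  # 8000: noise fills every coordinate
```

Without noise, only 3 of the 1000 rows carry a gradient; with noise, a sparse-update API would have to write the whole table.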

The challenges of applying DP-SGD to large embedding models mainly stem from the inputs these models embed: 1) non-numerical feature fields such as user/product IDs and categories, and 2) words and tokens, both of which are transformed into dense vectors through an embedding layer. Because of the large vocabulary sizes of these features, the process requires large embedding tables with a substantial number of parameters. In contrast to the number of parameters, the gradient updates are usually extremely sparse, because each mini-batch of examples only activates a tiny fraction of embedding rows (the figure below visualizes the ratio of zero-valued coordinates, i.e., the sparsity, of the gradients under various batch sizes). This sparsity is heavily leveraged in industrial applications to efficiently handle the training of large-scale embeddings. For example, Google Cloud TPUs, custom-designed AI accelerators that are optimized for training and inference of large AI models, have dedicated APIs to handle large embeddings with sparse updates. This leads to significantly improved training throughput compared to training on GPUs, which at the time did not have specialized optimization for sparse embedding lookups. On the other hand, DP-SGD completely destroys the gradient sparsity because it requires adding independent Gaussian noise to all the coordinates. This creates a roadblock for private training of large embedding models, as the training efficiency would be significantly reduced compared to non-private training.

Embedding gradient sparsity (the fraction of zero-value gradient coordinates) in the Criteo pCTR model (see below). The figure reports the gradient sparsity, averaged over 50 update steps, of the top five categorical features (out of a total of 26) with the highest number of buckets, as well as the sparsity of all categorical features. The sparsity decreases with the batch size as more examples hit more rows in the embedding table, creating non-zero gradients. However, the sparsity is above 0.97 even for very large batch sizes. This pattern is consistently observed for all five features.
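The sparsity trend in the figure follows from the fact that only looked-up rows receive a gradient, and every coordinate of a looked-up row is typically non-zero, so row-level and coordinate-level sparsity coincide. The sketch below computes this for a synthetic Zipf-distributed ID stream, an assumption standing in for a real categorical feature such as those in Criteo; `embedding_grad_sparsity` is a hypothetical helper. Because larger batches here are prefixes of the same stream, they can only touch more distinct rows.

```python
import numpy as np

def embedding_grad_sparsity(batch_ids, vocab_size):
    """Fraction of embedding rows whose gradient is zero for one mini-batch.

    Only rows looked up by at least one example receive a gradient, so
    sparsity = 1 - (number of distinct IDs in the batch) / vocab_size.
    """
    return 1.0 - len(np.unique(batch_ids)) / vocab_size

# Hypothetical skewed ID stream standing in for one categorical feature.
rng = np.random.default_rng(0)
vocab_size = 100_000
stream = rng.zipf(1.3, size=65_536) % vocab_size

for batch_size in (1_024, 4_096, 16_384, 65_536):
    # Each larger batch contains the smaller one, so sparsity is non-increasing.
    print(batch_size, round(embedding_grad_sparsity(stream[:batch_size], vocab_size), 4))
```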

Algorithm

Our algorithm is built by extending standard DP-SGD with an extra mechanism at each iteration to privately select the “hot features”, which are the features that are activated by multiple training examples in the current mini-batch. As illustrated below, the mechanism works in a few steps:

- Compute how many examples contributed to each feature bucket (we call each of the possible values of a categorical feature a “bucket”).

- Restrict the total contribution from each example by clipping their counts.

- Add Gaussian noise to the contribution count of each feature bucket.

- Select only the feature buckets whose noisy count is above a given threshold (a sparsity-controlling parameter) to be included in the gradient update, thus maintaining sparsity. This mechanism is differentially private, and its privacy cost can be easily computed by composing it with the standard DP-SGD iterations.
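The four steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: each example contributes 1 to every distinct bucket it activates, the per-example contribution vector is clipped in L2 norm before Gaussian noise is added, and the names `select_hot_buckets`, `sparse_noisy_embedding_grad`, `clip_norm`, `noise_multiplier`, and `threshold` are hypothetical. The paper's exact clipping rule, noise calibration, and privacy accounting may differ.

```python
import numpy as np

def select_hot_buckets(batch_bucket_ids, vocab_size, clip_norm, noise_multiplier, threshold, rng):
    """Privately select 'hot' feature buckets for one mini-batch (illustrative sketch)."""
    counts = np.zeros(vocab_size)
    for ids in batch_bucket_ids:
        # Step 1: this example contributes 1 to each distinct bucket it activates.
        contrib = np.zeros(vocab_size)
        contrib[np.unique(np.asarray(ids, dtype=int))] = 1.0
        # Step 2: clip the example's contribution vector to L2 norm <= clip_norm.
        norm = np.linalg.norm(contrib)
        if norm > clip_norm:
            contrib *= clip_norm / norm
        counts += contrib
    # Step 3: add Gaussian noise to the contribution count of every bucket.
    noisy_counts = counts + rng.normal(0.0, noise_multiplier * clip_norm, size=vocab_size)
    # Step 4: keep only the buckets whose noisy count exceeds the threshold.
    return np.flatnonzero(noisy_counts > threshold)

def sparse_noisy_embedding_grad(summed_clipped_grad, selected_rows, noise_std, batch_size, rng):
    """Add Gaussian noise only to the selected embedding rows; all other rows stay zero."""
    grad = np.zeros_like(summed_clipped_grad)
    rows = summed_clipped_grad[selected_rows]
    grad[selected_rows] = rows + rng.normal(0.0, noise_std, size=rows.shape)
    return grad / batch_size
```

For example, with `clip_norm=1.0`, `noise_multiplier=0.0` and `threshold=1.5`, the batch `[[0, 1], [0], [0, 2], [3]]` selects only bucket 0 (clipped count of about 2.41), so noise is added to a single embedding row instead of the whole table. Raising `threshold` drops more gradient signal in exchange for sparser, cheaper updates.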

Theoretical motivation

We provide the theoretical motivation that underlies DP-AdaFEST by viewing it as optimization using stochastic gradient oracles. Standard analysis of stochastic gradient descent in a theoretical setting decomposes the test error of the model into “bias” and “variance” terms. The advantage of DP-AdaFEST can be viewed as reducing variance at the cost of slightly increasing the bias. The variance is reduced because DP-AdaFEST adds noise to a smaller set of coordinates than DP-SGD, which adds noise to all the coordinates. On the other hand, DP-AdaFEST introduces some bias to the gradients, since the gradients of some embedding features are dropped with some probability. We refer the interested reader to Section 3.4 of the paper for more details.
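A simplified one-step calculation illustrates this trade-off; it is not the analysis in Section 3.4 of the paper. Assume an embedding table with V rows of dimension m, a mini-batch of B examples, clip norm C, noise multiplier σ, and let g be the clipped, averaged embedding gradient of the mini-batch. DP-SGD releases ĝ = g + ξ/B with ξ ~ N(0, σ²C²I) over all Vm coordinates; the sparse variant releases only the rows in a selected set S, with S̄ the dropped rows. Conditional on S (drawn with independent noise), and ignoring the extra privacy budget spent on selection:

```latex
\begin{aligned}
\mathbb{E}\,\bigl\|\hat g_{\mathrm{DP\text{-}SGD}} - g\bigr\|_2^2
  &= \frac{V\, m\, \sigma^2 C^2}{B^2}, \\
\mathbb{E}\,\bigl\|\hat g_{S} - g\bigr\|_2^2
  &= \underbrace{\|g_{\bar S}\|_2^2}_{\text{squared bias (dropped rows)}}
   + \underbrace{\frac{|S|\, m\, \sigma^2 C^2}{B^2}}_{\text{noise variance}} .
\end{aligned}
```

The cross term vanishes because the dropped rows and the noised rows have disjoint supports and the noise has zero mean. When |S| ≪ V, the noise term shrinks by a factor V/|S|, and the price is the gradient mass on the rows that were not selected.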

Experiments

We evaluate the effectiveness of our algorithm on large embedding model applications using public datasets: one ad prediction dataset (Criteo-Kaggle) and one language understanding dataset (SST-2). We use DP-SGD with exponential selection as the baseline for comparison.

The effectiveness of DP-AdaFEST is evident in the figure below, where it achieves significantly higher gradient size reduction (i.e., gradient sparsity) than the baseline while maintaining the same level of utility (i.e., only minimal performance degradation).

Specifically, on the Criteo-Kaggle dataset, DP-AdaFEST reduces the gradient computation cost of regular DP-SGD by more than 5×10⁵ times while maintaining a comparable AUC (which we define as an AUC loss of less than 0.005). This reduction translates into a more efficient and cost-effective training process. In comparison, as shown by the green line below, the baseline method is not able to achieve reasonable cost reduction within such a small utility loss threshold.

In language tasks, there isn't as much potential for reducing the size of gradients, because the vocabulary used is often smaller and already quite compact (shown on the right below). However, the adoption of sparsity-preserving DP-SGD effectively obviates the dense gradient computation. Furthermore, in line with the bias-variance trade-off presented in the theoretical analysis, we note that DP-AdaFEST occasionally exhibits superior utility compared to DP-SGD when the reduction in gradient size is minimal. Conversely, when incorporating sparsity, the baseline algorithm faces challenges in maintaining utility.

A comparison of the best gradient size reduction (the ratio of the non-zero gradient value counts between regular DP-SGD and sparsity-preserving algorithms) achieved under ε = 1.0 by DP-AdaFEST (our algorithm) and the baseline algorithm (DP-SGD with exponential selection) compared to DP-SGD at different thresholds for utility difference. A higher curve indicates a better utility/efficiency trade-off.
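The gradient size reduction metric defined in the caption, and one way to obtain a point on a "best reduction at a given utility threshold" curve, can be sketched as follows. The helper names and the idea of picking the best run per threshold from a list of (reduction, utility loss) pairs are assumptions for illustration; the numbers in the usage lines are made up, not results from the paper.

```python
import numpy as np

def gradient_size_reduction(dense_grad, sparse_grad):
    """Ratio of non-zero gradient values: regular DP-SGD vs. a sparsity-preserving variant."""
    nnz_sparse = np.count_nonzero(sparse_grad)
    return float("inf") if nnz_sparse == 0 else np.count_nonzero(dense_grad) / nnz_sparse

def best_reduction_under_threshold(runs, max_utility_loss):
    """Largest reduction among runs whose utility loss vs. DP-SGD is within the threshold.

    `runs` is a list of (gradient_size_reduction, utility_loss) pairs, e.g. one per
    hyperparameter setting; returns None if no run meets the threshold.
    """
    feasible = [reduction for reduction, loss in runs if loss <= max_utility_loss]
    return max(feasible) if feasible else None

# DP-SGD noise makes all 1000 x 8 coordinates non-zero; a sparse update touches 2 rows.
dense = np.ones((1000, 8))
sparse = np.zeros((1000, 8))
sparse[[2, 7]] = 1.0
print(gradient_size_reduction(dense, sparse))  # 500.0

runs = [(10.0, 0.001), (500.0, 0.004), (5000.0, 0.02)]  # made-up (reduction, AUC loss) pairs
print(best_reduction_under_threshold(runs, 0.005))  # 500.0
```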

In practice, most ad prediction models are continuously trained and evaluated. To simulate this online learning setup, we also evaluate on time-series data, which is notoriously challenging because it is non-stationary. Our evaluation uses the Criteo-1TB dataset, which comprises real-world user-click data collected over 24 days. Here too, DP-AdaFEST reduces the gradient computation cost of regular DP-SGD by more than 10⁴ times while maintaining a comparable AUC.

Conclusion

We present a new algorithm, DP-AdaFEST, for preserving gradient sparsity in differentially private training, particularly in applications involving large embedding models, which are a fundamental tool for various applications in recommendation systems and natural language processing. Our algorithm achieves significant reductions in gradient size while maintaining accuracy on real-world benchmark datasets. Moreover, it offers flexible options for balancing utility and efficiency via sparsity-controlling parameters, while providing a much better privacy-utility trade-off.

Acknowledgements

This work was a collaboration with Badih Ghazi, Pritish Kamath, Ravi Kumar, Pasin Manurangsi and Amer Sinha.

Source: Google AI Blog

Easily fill in smart chips in Google Docs using placeholder chips

What’s changing

Getting started

- Admins: There is no admin control for this feature.

- End users: Placeholder chips are only editable on the web, but can be viewed on web and mobile. Visit the Help Center to learn more about inserting smart chips & building blocks in your Google Doc.

Rollout pace

- Rapid Release and Scheduled Release domains: Gradual rollout (up to 15 days for feature visibility) starting on December 8, 2023

Availability

- Available to all Google Workspace customers and users with personal Google Accounts

Resources

Source: Google Workspace Updates

10 Google Play apps to help with your holiday budget

Check out these top apps for keeping your finances, and stress, in check this holiday shopping season.

Source: The Official Google Blog

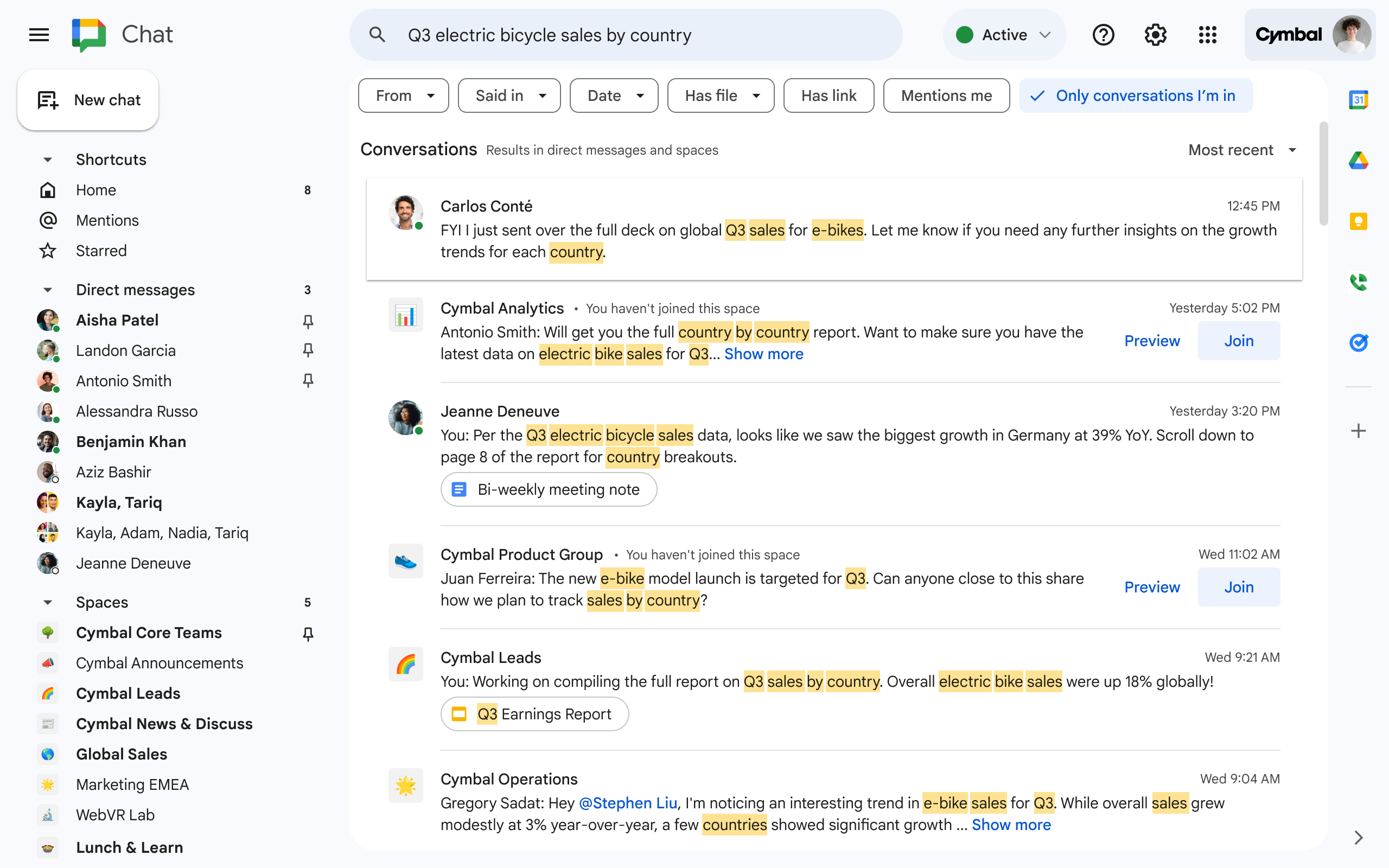

Additional enhancements to the search results page in Google Chat

What’s changing

Who’s impacted

Why it matters

Additional details

Getting started

- Admins: There is no admin control for this feature.

- End users: Visit the Help Center to learn more about searching for Google Chat messages.

Rollout pace

- Rapid Release and Scheduled Release domains: Full rollout (1–3 days for feature visibility) starting on December 8, 2023

- This feature is available now for all users.

Availability

- Available to all Google Workspace customers and users with personal Google Accounts

Resources

Source: Google Workspace Updates

NotebookLM adds more than a dozen new features

Now available in the U.S., NotebookLM has new features to help you easily read, take notes and organize your writing projects.

Source: AI

Chrome Beta for Android Update

Hi everyone! We've just released Chrome Beta 121 (121.0.6167.7) for Android. It's now available on Google Play.

You can see a partial list of the changes in the Git log. For details on new features, check out the Chromium blog, and for details on web platform updates, check here.

If you find a new issue, please let us know by filing a bug.

Krishna Govind

Google Chrome

Source: Google Chrome Releases

Optimally configure the Attribution Reporting API for ad measurement

Background

Ad-tech providers have historically used third-party cookies for conversion measurement, and for attributing conversions to ad interactions. Conversion measurement is critical for evaluating the performance of ad campaigns and automated bidding strategies. Now, with technology changes and privacy regulations on the rise, traditional ad-measurement systems must change in order to remain effective while protecting user privacy.

Chrome’s Attribution Reporting API (ARA), part of the Privacy Sandbox initiative, offers a new path to conversion measurement after Chrome’s planned third-party cookie deprecation in the second half of 2024, subject to addressing any remaining competition concerns of the UK’s Competition and Markets Authority (CMA). Google’s ads teams have made significant investments in learning to use the ARA more effectively, to help advertisers achieve more accurate measurement.

In a previous post, we provided a high-level overview of the approach Google’s ads teams are taking to effectively blend the ARA event-level and aggregate summary reports to maximize accuracy. A key point is that your configuration determines what data you query, and how you query it. It’s crucial for ad-tech providers to effectively configure the ARA for their use cases. Google’s ads teams have found that configuring specific ARA settings can lead to notable accuracy improvements. We encourage other ad-tech providers to integrate with the ARA to retrieve the conversion data they need, and process the ARA's output to help maintain accurate measurement in a post-third-party-cookie world.

The ARA is flexible enough to support various use cases. Google’s ads teams use this flexibility to configure unique ARA settings for each advertiser, so that ARA-based measurement adapts to each advertiser’s specific needs. For example, we’ve noticed that when advertisers differ in conversion volume, it’s better to have advertiser-specific configurations for the granularity of aggregation keys and the maximum observable conversions per ad interaction.

Google’s ads teams’ approach

Here's how Google's ads teams use the ARA to ensure the raw data we receive is as useful as possible for downstream blending. We configure ARA settings as explicit mathematical optimizations by defining objective functions to represent data quality, then choosing settings to optimize those functions. Ad-tech providers can choose their own approach. Google’s ads teams plan to continue sharing insights we learn from our own optimizations with the ad-tech community.
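As a toy illustration of treating one configuration choice as an explicit optimization, the sketch below picks an aggregation-key granularity per advertiser. It is not Google's objective function. It assumes an aggregatable-report L1 contribution budget of 65,536, Laplace noise with scale L1/ε added to each summary value, conversions spread evenly across key buckets, and each conversion spending the full budget; `relative_noise`, `choose_key_granularity`, and the 5% noise bound are hypothetical.

```python
import math

# Assumption: per-source L1 contribution budget for ARA aggregatable reports.
L1_BUDGET = 65_536

def relative_noise(total_conversions, num_buckets, epsilon, value_per_conversion=L1_BUDGET):
    """Noise std divided by the expected per-bucket sum (hypothetical data-quality measure).

    Assumes Laplace(L1_BUDGET / epsilon) noise per bucket and conversions spread
    evenly over `num_buckets` aggregation-key buckets.
    """
    noise_std = math.sqrt(2) * L1_BUDGET / epsilon  # std of Laplace(b) is sqrt(2) * b
    per_bucket_signal = (total_conversions / num_buckets) * value_per_conversion
    return noise_std / per_bucket_signal

def choose_key_granularity(total_conversions, epsilon, max_relative_noise, candidates):
    """Finest key granularity (most buckets) whose relative noise stays within the bound."""
    feasible = [k for k in candidates
                if relative_noise(total_conversions, k, epsilon) <= max_relative_noise]
    return max(feasible) if feasible else min(candidates)

candidates = [1, 10, 100, 1_000, 10_000]
print(choose_key_granularity(10_000, 10.0, 0.05, candidates))  # 1000: high-volume advertiser
print(choose_key_granularity(100, 10.0, 0.05, candidates))     # 10: low-volume advertiser
```

Under these assumptions the high-volume advertiser can afford much finer keys before noise dominates, which is one reason per-advertiser settings can beat a single global configuration.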

Please see our detailed technical explainer for more information about our approach to ARA configuration.

Source: Google Ads Developer Blog

eSignature is now generally available for Google Workspace Individual subscribers

What’s changing

- Request signatures, see the status of pending signatures, and find completed contracts.

- Sign official contracts directly within Google Drive, eliminating the need to switch apps or tabs.

- Create a copy of any given contract so it can be used as a template to initiate multiple eSignature requests.

- Audit trail: all completed contracts will automatically contain an audit trail report.

- Multi-signer: the ability to request a signature from more than one user.

- Non-Gmail users: the ability to request an eSignature from non-Gmail users.

- Initiating eSignature on PDF (beta): the ability to initiate an eSignature on PDF files stored in Drive.

We’ll also be introducing more features for eSignature in the next few months, including:

- PDF templates: the ability to easily reuse a PDF file as a contract template.

- Custom text fields: the ability to ask signers to add relevant information (e.g. job titles, email address) to the contract.

Additional details

Getting started

- End users: Workspace Individual subscribers: Visit the Help Center to learn more about sending signature requests and signing documents with eSignature.

Rollout pace

- Rapid Release and Scheduled Release domains: Extended rollout (potentially longer than 15 days for feature visibility) starting on December 7, 2023

Availability

- Available to Google Workspace Individual subscribers

- Eligible for beta: Google Workspace Business Standard, Business Plus, Enterprise Starter, Enterprise Standard, Enterprise Plus, Enterprise Essentials, Enterprise Essentials Plus, Education Plus, and Nonprofits customers