In 2015 we launched Google Handwriting Input, which enabled users to handwrite text on their Android mobile device as an additional input method for any Android app. In our initial launch, we managed to support 82 languages from French to Gaelic, Chinese to Malayalam. In order to provide a more seamless user experience and remove the need for switching input methods, last year we added support for handwriting recognition in more than 100 languages to Gboard for Android, Google's keyboard for mobile devices.

Since then, progress in machine learning has enabled new model architectures and training methodologies. This allowed us to revise our initial approach, which relied on hand-designed heuristics to cut the handwritten input into single characters, and instead build a single machine learning model that operates on the whole input and reduces error rates substantially compared to the old version. We launched those new models for all Latin-script-based languages in Gboard at the beginning of the year, and have published the paper "Fast Multi-language LSTM-based Online Handwriting Recognition", which explains the research behind this release in more detail. In this post, we give a high-level overview of that work.

Touch Points, Bézier Curves and Recurrent Neural Networks

The starting point for any online handwriting recognizer is the set of touch points. The drawn input is represented as a sequence of strokes, and each of those strokes is in turn a sequence of points, each with a timestamp attached. Since Gboard is used on a wide variety of devices and screen resolutions, our first step is to normalize the touch-point coordinates. Then, in order to capture the shape of the data accurately, we convert the sequence of points into a sequence of cubic Bézier curves to use as inputs to a recurrent neural network (RNN) that is trained to accurately identify the character being written (more on that step below). While Bézier curves have a long tradition of use in handwriting recognition, using them as inputs is novel, and allows us to provide a consistent representation of the input across devices with different sampling rates and accuracies. This approach differs significantly from our previous models, which used a so-called segment-and-decode approach: creating several hypotheses of how to decompose the strokes into characters (segment) and then finding the most likely sequence of characters from this decomposition (decode).
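To make the input representation concrete, here is a minimal Python sketch of strokes as lists of timestamped points, together with one possible normalization. The post does not spell out the exact normalization used, so the choice below (shift to the origin, scale so the ink height is 1, start time at 0) and the name `normalize_ink` are assumptions for illustration only.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, t): screen coordinates plus timestamp
Stroke = List[Point]                # one pen-down to pen-up movement


def normalize_ink(strokes: List[Stroke]) -> List[Stroke]:
    """Shift the ink to the origin and scale it to unit height.

    Assumed scheme (not taken from the post): x and y share one scale factor
    so the aspect ratio is preserved, and timestamps become offsets from the
    earliest point.
    """
    points = [p for stroke in strokes for p in stroke]
    min_x = min(p[0] for p in points)
    min_y = min(p[1] for p in points)
    max_y = max(p[1] for p in points)
    t0 = min(p[2] for p in points)

    height = max_y - min_y
    scale = 1.0 / height if height > 0 else 1.0  # avoid dividing by zero for flat ink

    return [
        [((x - min_x) * scale, (y - min_y) * scale, t - t0) for x, y, t in stroke]
        for stroke in strokes
    ]


# Two strokes recorded in raw pixel coordinates with millisecond timestamps.
ink = [[(100.0, 200.0, 1000.0), (150.0, 300.0, 1010.0)], [(200.0, 250.0, 1050.0)]]
normalized = normalize_ink(ink)
```

After this step the same handwriting produces similar coordinates regardless of the screen resolution it was captured on.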

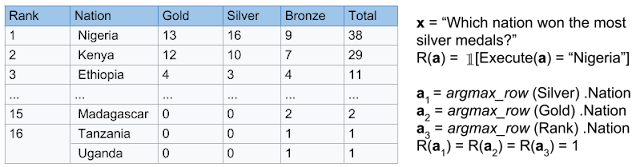

Another benefit of this method is that the sequence of Bézier curves is more compact than the underlying sequence of input points, which makes it easier for the model to pick up temporal dependencies along the input. Each curve is represented by a polynomial defined by its start and end points as well as two additional control points, which determine the shape of the curve. We use an iterative procedure that minimizes the squared distances (in x, y and time) between the normalized input coordinates and the curve in order to find a sequence of cubic Bézier curves that represents the input accurately. The figure above shows an example of the curve fitting process. The handwritten user input can be seen in black. It consists of 186 touch points and is clearly meant to be the word "go". In yellow, blue, pink and green we see its representation through a sequence of four cubic Bézier curves for the letter "g" (with their two control points each), and correspondingly orange, turquoise and white represent the three curves interpolating the letter "o".
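The following Python sketch illustrates the idea of fitting one cubic Bézier curve to a run of (x, y, t) points by least squares. It is a simplification, not the procedure described above: it performs a single least-squares solve with a fixed chord-length parameterization and fixed end points, rather than an iterative fit, and it does not decide where to split the input into several curves. The function names are hypothetical.

```python
import math


def bezier_point(ctrl, u):
    """Evaluate a cubic Bezier curve with control points ctrl at parameter u in [0, 1]."""
    p0, p1, p2, p3 = ctrl
    b0 = (1 - u) ** 3
    b1 = 3 * (1 - u) ** 2 * u
    b2 = 3 * (1 - u) * u ** 2
    b3 = u ** 3
    return tuple(b0 * a + b1 * b + b2 * c + b3 * d for a, b, c, d in zip(p0, p1, p2, p3))


def chord_length_params(points):
    """Assign each point a parameter in [0, 1] proportional to the path length so far."""
    dists = [0.0]
    for a, b in zip(points, points[1:]):
        dists.append(dists[-1] + math.dist(a, b))
    total = dists[-1]
    return [d / total if total > 0 else 0.0 for d in dists]


def fit_cubic_bezier(points):
    """Least-squares fit of the two inner control points; the end points stay fixed."""
    p0, p3 = points[0], points[-1]
    dims = len(p0)
    s11 = s12 = s22 = 0.0
    rhs1, rhs2 = [0.0] * dims, [0.0] * dims
    for p, u in zip(points, chord_length_params(points)):
        a1 = 3 * (1 - u) ** 2 * u  # basis weight of control point P1
        a2 = 3 * (1 - u) * u ** 2  # basis weight of control point P2
        s11 += a1 * a1
        s12 += a1 * a2
        s22 += a2 * a2
        for k in range(dims):
            # Residual after removing the contribution of the fixed end points.
            r = p[k] - (1 - u) ** 3 * p0[k] - u ** 3 * p3[k]
            rhs1[k] += a1 * r
            rhs2[k] += a2 * r
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        # Too few distinct points: fall back to a straight segment.
        p1 = tuple(a + (b - a) / 3 for a, b in zip(p0, p3))
        p2 = tuple(a + 2 * (b - a) / 3 for a, b in zip(p0, p3))
    else:
        # Solve the 2x2 normal equations with Cramer's rule, one coordinate at a time.
        p1 = tuple((s22 * rhs1[k] - s12 * rhs2[k]) / det for k in range(dims))
        p2 = tuple((s11 * rhs2[k] - s12 * rhs1[k]) / det for k in range(dims))
    return (p0, p1, p2, p3)


# Five evenly spaced (x, y, t) samples on a straight line.
samples = [(i * 1.0, i * 2.0, i * 10.0) for i in range(5)]
curve = fit_cubic_bezier(samples)
midpoint = bezier_point(curve, 0.5)
```

Whatever the number of touch points in a segment, the result is always four control points, which is what makes the curve sequence so much shorter than the raw point sequence.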

Character Decoding

The sequence of curves represents the input, but we still need to translate the sequence of input curves to the actual written characters. For that we use a multi-layer RNN to process the sequence of curves and produce an output decoding matrix with a probability distribution over all possible letters for each input curve, denoting what letter is being written as part of that curve.

We experimented with multiple types of RNNs, and finally settled on using a bidirectional version of quasi-recurrent neural networks (QRNN). QRNNs alternate between convolutional and recurrent layers, giving them the theoretical potential for efficient parallelization, and provide good predictive performance while keeping the number of weights comparatively small. The number of weights is directly related to the size of the model that needs to be downloaded, so the smaller the better.
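For readers unfamiliar with QRNNs, the recurrent part of a QRNN layer is a very light element-wise update, sometimes called f-pooling: h_t = f_t * h_{t-1} + (1 - f_t) * z_t, where the candidate values z_t and forget gates f_t come from the convolutional part. The Python sketch below shows only this recurrence for one direction, with made-up gate values; the convolutions, the backward direction and the actual layer configuration of the Gboard models are not shown, and the function name is hypothetical.

```python
def f_pooling(z_seq, f_seq, h0=None):
    """Run the QRNN f-pooling recurrence over a sequence.

    z_seq and f_seq are lists of per-timestep channel vectors (candidate
    values and forget gates in [0, 1]) that a convolutional layer would
    normally produce. Returns the hidden state at every timestep.
    """
    channels = len(z_seq[0])
    h = list(h0) if h0 is not None else [0.0] * channels
    states = []
    for z_t, f_t in zip(z_seq, f_seq):
        # Element-wise blend of the previous state and the new candidate.
        h = [f * h_prev + (1 - f) * z for z, f, h_prev in zip(z_t, f_t, h)]
        states.append(h)
    return states


# Illustrative values only: one channel, three timesteps.
states = f_pooling(z_seq=[[1.0], [1.0], [1.0]], f_seq=[[0.5], [0.5], [0.5]])
```

Because the gates for all timesteps can be computed in parallel and only this cheap blend is sequential, the expensive part of the layer does not depend on the previous hidden state.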

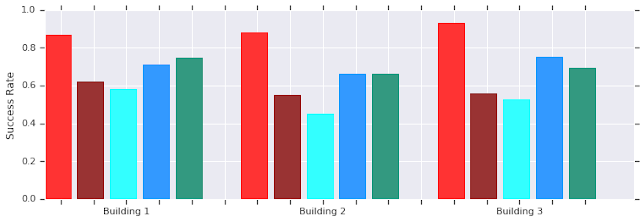

In order to "decode" the curves, the recurrent neural network produces a matrix, where each column corresponds to one input curve, and each row corresponds to a letter in the alphabet. The column for a specific curve can be seen as a probability distribution over all the letters of the alphabet. However, each letter can consist of multiple curves (the "g" and "o" above, for instance, consist of four and three curves, respectively). This mismatch between the length of the output sequence from the recurrent neural network (which always matches the number of Bézier curves) and the actual number of characters the input is supposed to represent is addressed by adding a special blank symbol to indicate no output for a particular curve, as in the Connectionist Temporal Classification (CTC) algorithm. We use a finite state machine decoder to combine the outputs of the neural network with a character-based language model encoded as a weighted finite-state acceptor. Character sequences that are common in a language (such as "sch" in German) receive bonuses and are more likely to be output, whereas uncommon sequences are penalized. The process is visualized in the figure above.

The sequence of touch points (color-coded by the curve segments as in the previous figure) is converted to a much shorter sequence of Bézier coefficients (seven, in our example), each of which corresponds to a single curve. The QRNN-based recognizer converts the sequence of curves into a sequence of character probabilities of the same length, shown in the decoder matrix with the rows corresponding to the letters "a" to "z" and the blank symbol, where the brightness of an entry corresponds to its relative probability. Going through the decoder matrix left to right, we see mostly blanks, and bright points for the characters "g" and "o", resulting in the text output "go".
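The role of the blank symbol can be illustrated with the simplest CTC-style decoding rule: pick the most likely symbol in each column, merge consecutive repeats, and drop blanks. The Python sketch below does exactly that on a toy 27 x 7 decoder matrix. It is a deliberately reduced stand-in: it ignores the language model and the finite-state decoder described above, and the matrix values and helper names are invented for illustration.

```python
BLANK = "_"
ALPHABET = list("abcdefghijklmnopqrstuvwxyz") + [BLANK]  # one row per symbol


def ctc_greedy_decode(matrix, alphabet=ALPHABET, blank=BLANK):
    """Greedy CTC decoding of a matrix with one row per symbol and one column per curve."""
    n_curves = len(matrix[0])
    best = []
    for c in range(n_curves):
        column = [matrix[r][c] for r in range(len(alphabet))]
        best.append(alphabet[column.index(max(column))])

    decoded = []
    previous = None
    for symbol in best:
        # Emit a symbol only when it is not blank and not a repeat of the previous column.
        if symbol != blank and symbol != previous:
            decoded.append(symbol)
        previous = symbol
    return "".join(decoded)


def matrix_from_labels(labels, peak=0.9):
    """Build a toy decoder matrix whose column c puts most probability on labels[c]."""
    rest = (1 - peak) / (len(ALPHABET) - 1)
    return [[peak if symbol == label else rest for label in labels] for symbol in ALPHABET]


# Seven curves: mostly blanks, with one bright entry each for "g" and "o".
toy_matrix = matrix_from_labels(["_", "_", "_", "g", "_", "_", "o"])
text = ctc_greedy_decode(toy_matrix)
```

A blank between two identical symbols keeps them separate, so the label sequence "o", "_", "o" decodes to "oo", while "o", "o" decodes to a single "o".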

Despite being significantly simpler, our new character recognition models not only make 20%-40% fewer mistakes than the old ones, they are also much faster. However, all this still needs to be performed on-device!

Making it Work, On-device

In order to provide the best user experience, accurate recognition models are not enough; they also need to be fast. To achieve the lowest latency possible in Gboard, we convert our recognition models (trained in TensorFlow) to TensorFlow Lite models. This involves quantizing all our weights during model training such that instead of using four bytes per weight we only use one, which leads to smaller models as well as lower inference times. Moreover, TensorFlow Lite allows us to reduce the APK size compared to using a full TensorFlow implementation, because it is optimized for small binary size by only including the parts which are required for inference.
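To see where the four-to-one size reduction comes from, here is a small Python sketch of 8-bit affine quantization applied to a list of float weights. It is only an illustration of the general idea: it quantizes after the fact rather than during training, and it does not reproduce TensorFlow Lite's actual quantization scheme or file format. The function names and the example weights are made up.

```python
import struct


def quantize(weights):
    """Map float weights to one unsigned byte each, using a shared scale and zero point."""
    lo = min(min(weights), 0.0)  # widen the range so that 0.0 is representable
    hi = max(max(weights), 0.0)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 when all weights are zero
    zero_point = round(-lo / scale)
    quantized = [max(0, min(255, round(w / scale) + zero_point)) for w in weights]
    return bytes(quantized), scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate float weights from their one-byte codes."""
    return [(q - zero_point) * scale for q in quantized]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
as_float32 = struct.pack(f"{len(weights)}f", *weights)  # four bytes per weight
as_uint8, scale, zero_point = quantize(weights)         # one byte per weight
restored = dequantize(as_uint8, scale, zero_point)
```

The restored weights differ from the originals by at most about one quantization step, which is the accuracy cost paid for the smaller model.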

More to Come

We will continue to push the envelope beyond improving the Latin-script language recognizers. The Handwriting Team is already hard at work launching new models for all our supported handwriting languages in Gboard.

Acknowledgements

We would like to thank everybody who contributed to improving the handwriting experience in Gboard. In particular, Jatin Matani from the Gboard team, David Rybach from the Speech & Language Algorithms Team, Prabhu Kaliamoorthi from the Expander Team, Pete Warden from the TensorFlow Lite team, as well as Henry Rowley, Li-Lun Wang, Mircea Trăichioiu, Philippe Gervais, and Thomas Deselaers from the Handwriting Team.