Today the 2024 March Meeting of the American Physical Society (APS) kicks off in Minneapolis, MN. A premier conference on topics ranging across physics and related fields, APS 2024 brings together researchers, students, and industry professionals to share their discoveries and build partnerships with the goal of realizing fundamental advances in physics-related sciences and technology.

This year, Google has a strong presence at APS with a booth hosted by the Google Quantum AI team, 50+ talks throughout the conference, and participation in conference organizing activities, special sessions and events. Attending APS 2024 in person? Come visit Google’s Quantum AI booth to learn more about the exciting work we’re doing to solve some of the field’s most interesting challenges.

You can learn more about the latest cutting edge work we are presenting at the conference along with our schedule of booth events below (Googlers listed in bold).

Organizing Committee

Session Chairs include: Aaron Szasz

Booth Activities

This schedule is subject to change. Please visit the Google Quantum AI booth for more information.

Crumble

Presenter: Matt McEwen

Tue, Mar 5 | 11:00 AM CST

Qualtran

Presenter: Tanuj Khattar

Tue, Mar 5 | 2:30 PM CST

Qualtran

Presenter: Tanuj Khattar

Thu, Mar 7 | 11:00 AM CST

$5M XPRIZE / Google Quantum AI competition to accelerate quantum applications Q&A

Presenter: Ryan Babbush

Thu, Mar 7 | 11:00 AM CST

Talks

Monday

Certifying highly-entangled states from few single-qubit measurements

Presenter: Hsin-Yuan Huang

Author: Hsin-Yuan Huang

Session A45: New Frontiers in Machine Learning Quantum Physics

Toward high-fidelity analog quantum simulation with superconducting qubits

Presenter: Trond Andersen

Authors: Trond I Andersen, Xiao Mi, Amir H Karamlou, Nikita Astrakhantsev, Andrey Klots, Julia Berndtsson, Andre Petukhov, Dmitry Abanin, Lev B Ioffe, Yu Chen, Vadim Smelyanskiy, Pedram Roushan

Session A51: Applications on Noisy Quantum Hardware I

Measuring circuit errors in context for surface code circuits

Presenter: Dripto M Debroy

Authors: Dripto M Debroy, Jonathan A Gross, Élie Genois, Zhang Jiang

Session B50: Characterizing Noise with QCVV Techniques

Quantum computation of stopping power for inertial fusion target design I: Physics overview and the limits of classical algorithms

Presenter: Andrew D. Baczewski

Authors: Nicholas C. Rubin, Dominic W. Berry, Alina Kononov, Fionn D. Malone, Tanuj Khattar, Alec White, Joonho Lee, Hartmut Neven, Ryan Babbush, Andrew D. Baczewski

Session B51: Heterogeneous Design for Quantum Applications

Link to Paper

Quantum computation of stopping power for inertial fusion target design II: Physics overview and the limits of classical algorithms

Presenter: Nicholas C. Rubin

Authors: Nicholas C. Rubin, Dominic W. Berry, Alina Kononov, Fionn D. Malone, Tanuj Khattar, Alec White, Joonho Lee, Hartmut Neven, Ryan Babbush, Andrew D. Baczewski

Session B51: Heterogeneous Design for Quantum Applications

Link to Paper

Calibrating Superconducting Qubits: From NISQ to Fault Tolerance

Presenter: Sabrina S Hong

Author: Sabrina S Hong

Session B56: From NISQ to Fault Tolerance

Measurement and feedforward induced entanglement negativity transition

Presenter: Ramis Movassagh

Authors: Alireza Seif, Yu-Xin Wang, Ramis Movassagh, Aashish A. Clerk

Session B31: Measurement Induced Criticality in Many-Body Systems

Link to Paper

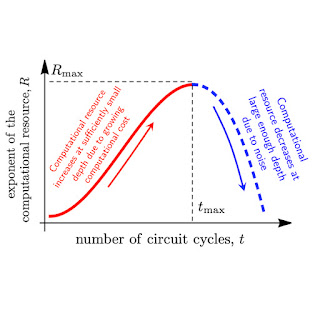

Effective quantum volume, fidelity and computational cost of noisy quantum processing experiments

Presenter: Salvatore Mandra

Authors: Kostyantyn Kechedzhi, Sergei V Isakov, Salvatore Mandra, Benjamin Villalonga, X. Mi, Sergio Boixo, Vadim Smelyanskiy

Session B52: Quantum Algorithms and Complexity

Link to Paper

Accurate thermodynamic tables for solids using Machine Learning Interaction Potentials and Covariance of Atomic Positions

Presenter: Mgcini K Phuthi

Authors: Mgcini K Phuthi, Yang Huang, Michael Widom, Ekin D Cubuk, Venkat Viswanathan

Session D60: Machine Learning of Molecules and Materials: Chemical Space and Dynamics

Tuesday

IN-Situ Pulse Envelope Characterization Technique (INSPECT)

Presenter: Zhang Jiang

Authors: Zhang Jiang, Jonathan A Gross, Élie Genois

Session F50: Advanced Randomized Benchmarking and Gate Calibration

Characterizing two-qubit gates with dynamical decoupling

Presenter: Jonathan A Gross

Authors: Jonathan A Gross, Zhang Jiang, Élie Genois, Dripto M Debroy, Ze-Pei Cian*, Wojciech Mruczkiewicz

Session F50: Advanced Randomized Benchmarking and Gate Calibration

Statistical physics of regression with quadratic models

Presenter: Blake Bordelon

Authors: Blake Bordelon, Cengiz Pehlevan, Yasaman Bahri

Session EE01: V: Statistical and Nonlinear Physics II

Improved state preparation for first-quantized simulation of electronic structure

Presenter: William J Huggins

Authors: William J Huggins, Oskar Leimkuhler, Torin F Stetina, Birgitta Whaley

Session G51: Hamiltonian Simulation

Controlling large superconducting quantum processors

Presenter: Paul V. Klimov

Authors: Paul V. Klimov, Andreas Bengtsson, Chris Quintana, Alexandre Bourassa, Sabrina Hong, Andrew Dunsworth, Kevin J. Satzinger, William P. Livingston, Volodymyr Sivak, Murphy Y. Niu, Trond I. Andersen, Yaxing Zhang, Desmond Chik, Zijun Chen, Charles Neill, Catherine Erickson, Alejandro Grajales Dau, Anthony Megrant, Pedram Roushan, Alexander N. Korotkov, Julian Kelly, Vadim Smelyanskiy, Yu Chen, Hartmut Neven

Session G30: Commercial Applications of Quantum Computing)

Link to Paper

Gaussian boson sampling: Determining quantum advantage

Presenter: Peter D Drummond

Authors: Peter D Drummond, Alex Dellios, Ned Goodman, Margaret D Reid, Ben Villalonga

Session G50: Quantum Characterization, Verification, and Validation II

Attention to complexity III: learning the complexity of random quantum circuit states

Presenter: Hyejin Kim

Authors: Hyejin Kim, Yiqing Zhou, Yichen Xu, Chao Wan, Jin Zhou, Yuri D Lensky, Jesse Hoke, Pedram Roushan, Kilian Q Weinberger, Eun-Ah Kim

Session G50: Quantum Characterization, Verification, and Validation II

Balanced coupling in superconducting circuits

Presenter: Daniel T Sank

Authors: Daniel T Sank, Sergei V Isakov, Mostafa Khezri, Juan Atalaya

Session K48: Strongly Driven Superconducting Systems

Resource estimation of Fault Tolerant algorithms using Qᴜᴀʟᴛʀᴀɴ

Presenter: Tanuj Khattar

Author: Tanuj Khattar

Session K49: Algorithms and Implementations on Near-Term Quantum Computers

Wednesday

Discovering novel quantum dynamics with superconducting qubits

Presenter: Pedram Roushan

Author: Pedram Roushan

Session M24: Analog Quantum Simulations Across Platforms

Deciphering Tumor Heterogeneity in Triple-Negative Breast Cancer: The Crucial Role of Dynamic Cell-Cell and Cell-Matrix Interactions

Presenter: Susan Leggett

Authors: Susan Leggett, Ian Wong, Celeste Nelson, Molly Brennan, Mohak Patel, Christian Franck, Sophia Martinez, Joe Tien, Lena Gamboa, Thomas Valentin, Amanda Khoo, Evelyn K Williams

Session M27: Mechanics of Cells and Tissues II

Toward implementation of protected charge-parity qubits

Presenter: Abigail Shearrow

Authors: Abigail Shearrow, Matthew Snyder, Bradley G Cole, Kenneth R Dodge, Yebin Liu, Andrey Klots, Lev B Ioffe, Britton L Plourde, Robert McDermott

Session N48: Unconventional Superconducting Qubits

Electronic capacitance in tunnel junctions for protected charge-parity qubits

Presenter: Bradley G Cole

Authors: Bradley G Cole, Kenneth R Dodge, Yebin Liu, Abigail Shearrow, Matthew Snyder, Andrey Klots, Lev B Ioffe, Robert McDermott, B.L.T. Plourde

Session N48: Unconventional Superconducting Qubits

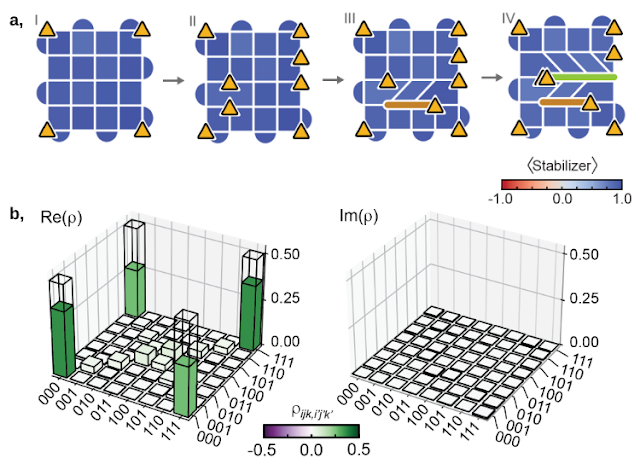

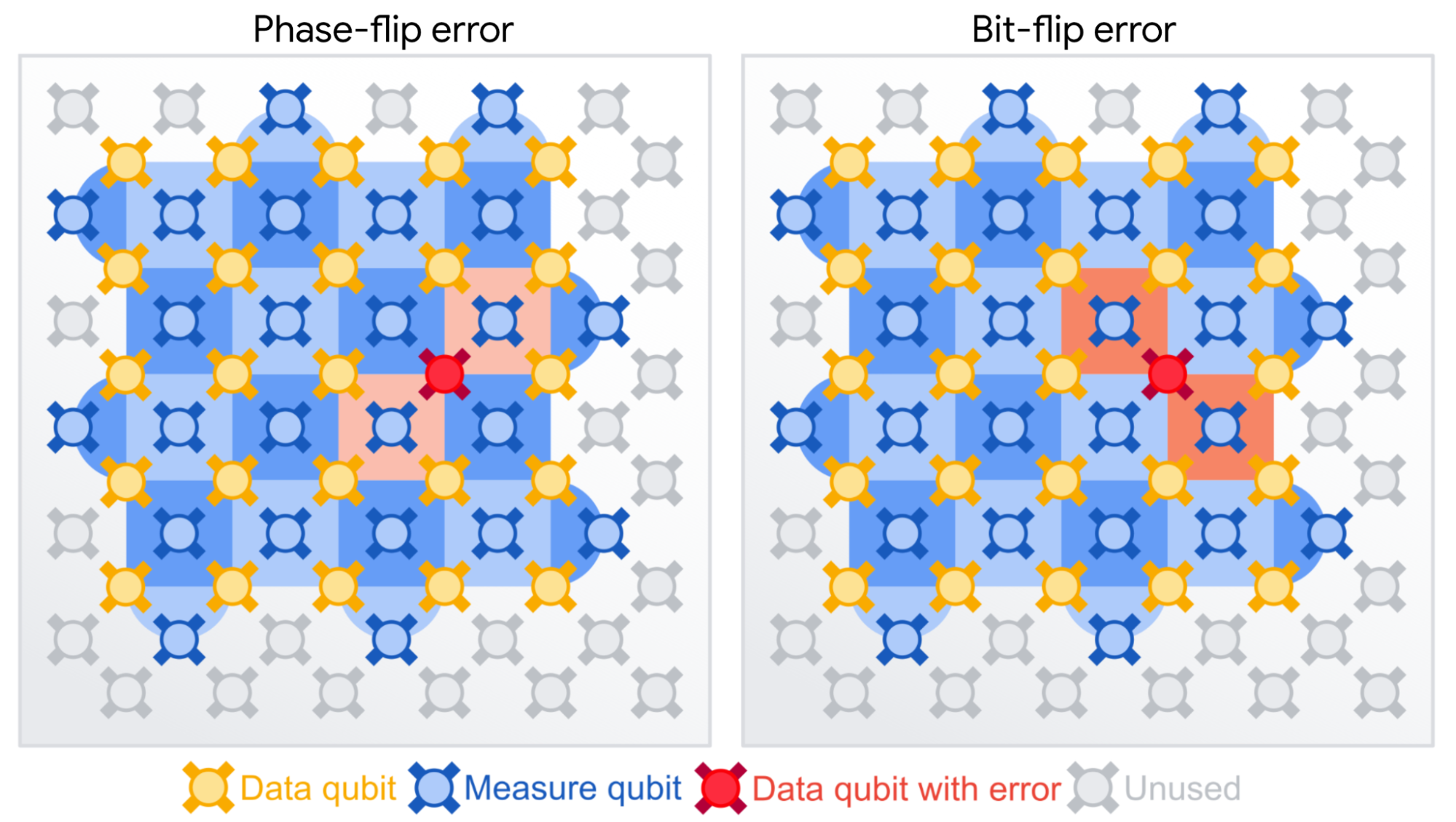

Overcoming leakage in quantum error correction

Presenter: Kevin C. Miao

Authors: Kevin C. Miao, Matt McEwen, Juan Atalaya, Dvir Kafri, Leonid P. Pryadko, Andreas Bengtsson, Alex Opremcak, Kevin J. Satzinger, Zijun Chen, Paul V. Klimov, Chris Quintana, Rajeev Acharya, Kyle Anderson, Markus Ansmann, Frank Arute, Kunal Arya, Abraham Asfaw, Joseph C. Bardin, Alexandre Bourassa, Jenna Bovaird, Leon Brill, Bob B. Buckley, David A. Buell, Tim Burger, Brian Burkett, Nicholas Bushnell, Juan Campero, Ben Chiaro, Roberto Collins, Paul Conner, Alexander L. Crook, Ben Curtin, Dripto M. Debroy, Sean Demura, Andrew Dunsworth, Catherine Erickson, Reza Fatemi, Vinicius S. Ferreira, Leslie Flores Burgos, Ebrahim Forati, Austin G. Fowler, Brooks Foxen, Gonzalo Garcia, William Giang, Craig Gidney, Marissa Giustina, Raja Gosula, Alejandro Grajales Dau, Jonathan A. Gross, Michael C. Hamilton, Sean D. Harrington, Paula Heu, Jeremy Hilton, Markus R. Hoffmann, Sabrina Hong, Trent Huang, Ashley Huff, Justin Iveland, Evan Jeffrey, Zhang Jiang, Cody Jones, Julian Kelly, Seon Kim, Fedor Kostritsa, John Mark Kreikebaum, David Landhuis, Pavel Laptev, Lily Laws, Kenny Lee, Brian J. Lester, Alexander T. Lill, Wayne Liu, Aditya Locharla, Erik Lucero, Steven Martin, Anthony Megrant, Xiao Mi, Shirin Montazeri, Alexis Morvan, Ofer Naaman, Matthew Neeley, Charles Neill, Ani Nersisyan, Michael Newman, Jiun How Ng, Anthony Nguyen, Murray Nguyen, Rebecca Potter, Charles Rocque, Pedram Roushan, Kannan Sankaragomathi, Christopher Schuster, Michael J. Shearn, Aaron Shorter, Noah Shutty, Vladimir Shvarts, Jindra Skruzny, W. Clarke Smith, George Sterling, Marco Szalay, Douglas Thor, Alfredo Torres, Theodore White, Bryan W. K. Woo, Z. Jamie Yao, Ping Yeh, Juhwan Yoo, Grayson Young, Adam Zalcman, Ningfeng Zhu, Nicholas Zobrist, Hartmut Neven, Vadim Smelyanskiy, Andre Petukhov, Alexander N. Korotkov, Daniel Sank, Yu Chen

Session N51: Quantum Error Correction Code Performance and Implementation I

Link to Paper

Modeling the performance of the surface code with non-uniform error distribution: Part 1

Presenter: Yuri D Lensky

Authors: Yuri D Lensky, Volodymyr Sivak, Kostyantyn Kechedzhi, Igor Aleiner

Session N51: Quantum Error Correction Code Performance and Implementation I

Modeling the performance of the surface code with non-uniform error distribution: Part 2

Presenter: Volodymyr Sivak

Authors: Volodymyr Sivak, Michael Newman, Cody Jones, Henry Schurkus, Dvir Kafri, Yuri D Lensky, Paul Klimov, Kostyantyn Kechedzhi, Vadim Smelyanskiy

Session N51: Quantum Error Correction Code Performance and Implementation I

Highly optimized tensor network contractions for the simulation of classically challenging quantum computations

Presenter: Benjamin Villalonga

Author: Benjamin Villalonga

Session Q51: Co-evolution of Quantum Classical Algorithms

Teaching modern quantum computing concepts using hands-on open-source software at all levels

Presenter: Abraham Asfaw

Author: Abraham Asfaw

Session Q61: Teaching Quantum Information at All Levels II

Thursday

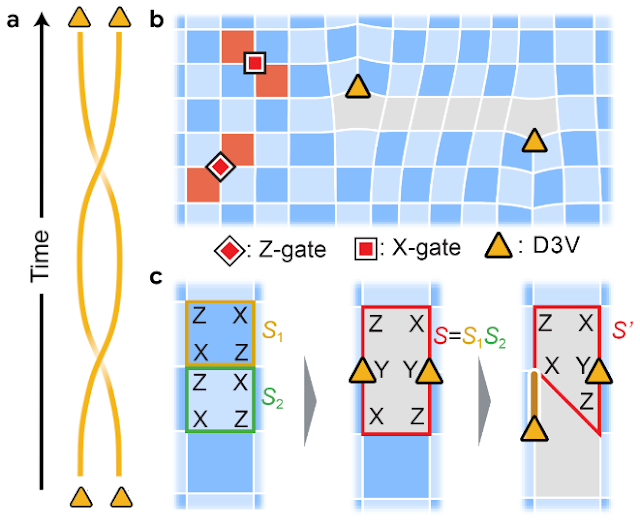

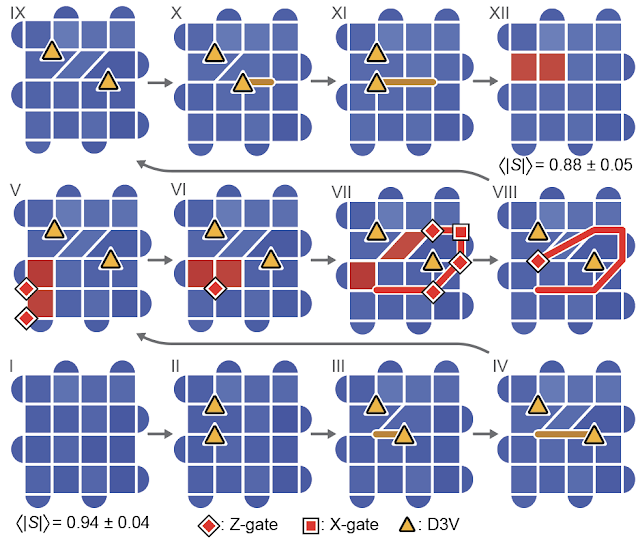

New circuits and an open source decoder for the color code

Presenter: Craig Gidney

Authors: Craig Gidney, Cody Jones

Session S51: Quantum Error Correction Code Performance and Implementation II

Link to Paper

Performing Hartree-Fock many-body physics calculations with large language models

Presenter: Eun-Ah Kim

Authors: Eun-Ah Kim, Haining Pan, Nayantara Mudur, William Taranto, Subhashini Venugopalan, Yasaman Bahri, Michael P Brenner

Session S18: Data Science, AI and Machine Learning in Physics I

New methods for reducing resource overhead in the surface code

Presenter: Michael Newman

Authors: Craig M Gidney, Michael Newman, Peter Brooks, Cody Jones

Session S51: Quantum Error Correction Code Performance and Implementation II

Link to Paper

Challenges and opportunities for applying quantum computers to drug design

Presenter: Raffaele Santagati

Authors: Raffaele Santagati, Alan Aspuru-Guzik, Ryan Babbush, Matthias Degroote, Leticia Gonzalez, Elica Kyoseva, Nikolaj Moll, Markus Oppel, Robert M. Parrish, Nicholas C. Rubin, Michael Streif, Christofer S. Tautermann, Horst Weiss, Nathan Wiebe, Clemens Utschig-Utschig

Session S49: Advances in Quantum Algorithms for Near-Term Applications

Link to Paper

Dispatches from Google's hunt for super-quadratic quantum advantage in new applications

Presenter: Ryan Babbush

Author: Ryan Babbush

Session T45: Recent Advances in Quantum Algorithms

Qubit as a reflectometer

Presenter: Yaxing Zhang

Authors: Yaxing Zhang, Benjamin Chiaro

Session T48: Superconducting Fabrication, Packaging, & Validation

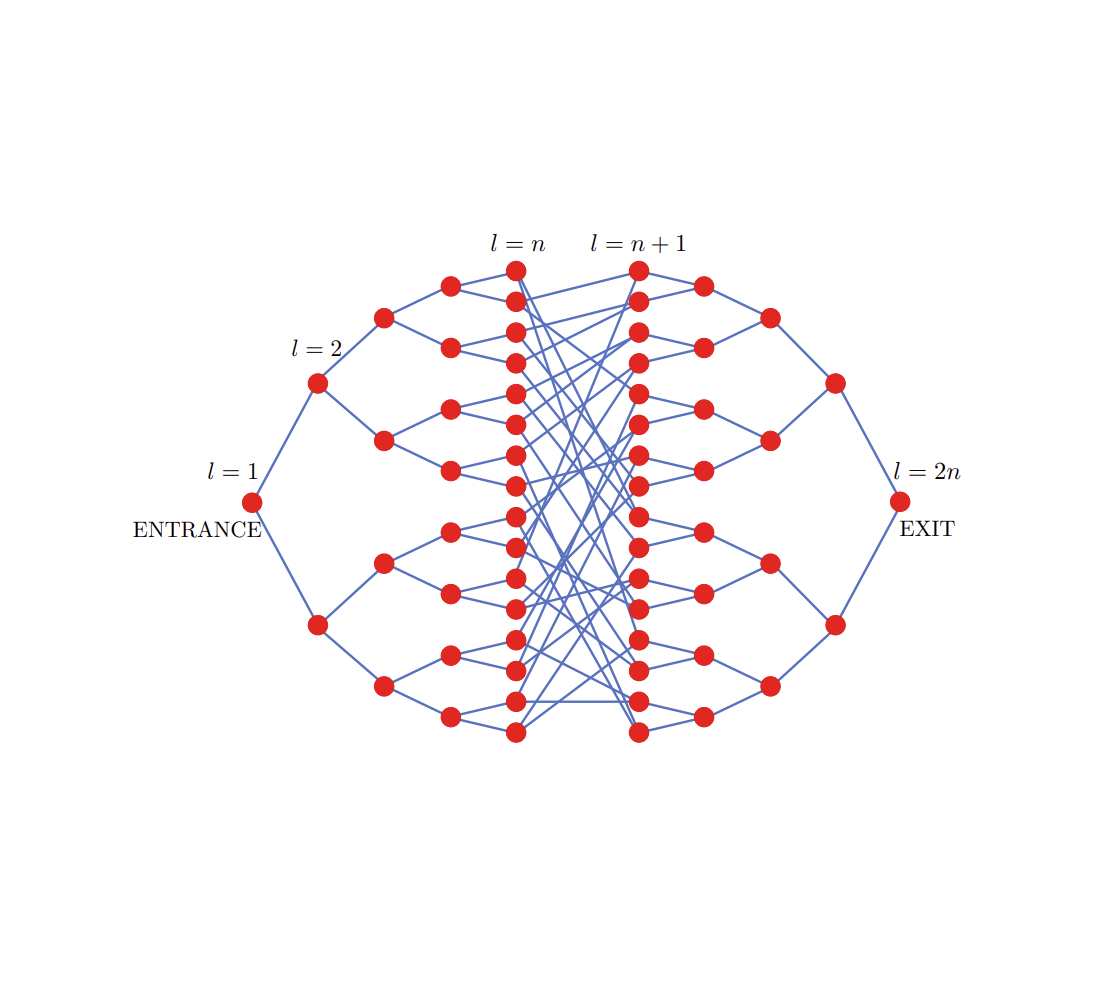

Random-matrix theory of measurement-induced phase transitions in nonlocal Floquet quantum circuits

Presenter: Aleksei Khindanov

Authors: Aleksei Khindanov, Lara Faoro, Lev Ioffe, Igor Aleiner

Session W14: Measurement-Induced Phase Transitions

Continuum limit of finite density many-body ground states with MERA

Presenter: Subhayan Sahu

Authors: Subhayan Sahu, Guifré Vidal

Session W58: Extreme-Scale Computational Science Discovery in Fluid Dynamics and Related Disciplines II

Dynamics of magnetization at infinite temperature in a Heisenberg spin chain

Presenter: Eliott Rosenberg

Authors: Eliott Rosenberg, Trond Andersen, Rhine Samajdar, Andre Petukhov, Jesse Hoke*, Dmitry Abanin, Andreas Bengtsson, Ilya Drozdov, Catherine Erickson, Paul Klimov, Xiao Mi, Alexis Morvan, Matthew Neeley, Charles Neill, Rajeev Acharya, Richard Allen, Kyle Anderson, Markus Ansmann, Frank Arute, Kunal Arya, Abraham Asfaw, Juan Atalaya, Joseph Bardin, A. Bilmes, Gina Bortoli, Alexandre Bourassa, Jenna Bovaird, Leon Brill, Michael Broughton, Bob B. Buckley, David Buell, Tim Burger, Brian Burkett, Nicholas Bushnell, Juan Campero, Hung-Shen Chang, Zijun Chen, Benjamin Chiaro, Desmond Chik, Josh Cogan, Roberto Collins, Paul Conner, William Courtney, Alexander Crook, Ben Curtin, Dripto Debroy, Alexander Del Toro Barba, Sean Demura, Agustin Di Paolo, Andrew Dunsworth, Clint Earle, E. Farhi, Reza Fatemi, Vinicius Ferreira, Leslie Flores, Ebrahim Forati, Austin Fowler, Brooks Foxen, Gonzalo Garcia, Élie Genois, William Giang, Craig Gidney, Dar Gilboa, Marissa Giustina, Raja Gosula, Alejandro Grajales Dau, Jonathan Gross, Steve Habegger, Michael Hamilton, Monica Hansen, Matthew Harrigan, Sean Harrington, Paula Heu, Gordon Hill, Markus Hoffmann, Sabrina Hong, Trent Huang, Ashley Huff, William Huggins, Lev Ioffe, Sergei Isakov, Justin Iveland, Evan Jeffrey, Zhang Jiang, Cody Jones, Pavol Juhas, D. Kafri, Tanuj Khattar, Mostafa Khezri, Mária Kieferová, Seon Kim, Alexei Kitaev, Andrey Klots, Alexander Korotkov, Fedor Kostritsa, John Mark Kreikebaum, David Landhuis, Pavel Laptev, Kim Ming Lau, Lily Laws, Joonho Lee, Kenneth Lee, Yuri Lensky, Brian Lester, Alexander Lill, Wayne Liu, William P. Livingston, A. Locharla, Salvatore Mandrà, Orion Martin, Steven Martin, Jarrod McClean, Matthew McEwen, Seneca Meeks, Kevin Miao, Amanda Mieszala, Shirin Montazeri, Ramis Movassagh, Wojciech Mruczkiewicz, Ani Nersisyan, Michael Newman, Jiun How Ng, Anthony Nguyen, Murray Nguyen, M. Niu, Thomas O'Brien, Seun Omonije, Alex Opremcak, Rebecca Potter, Leonid Pryadko, Chris Quintana, David Rhodes, Charles Rocque, N. Rubin, Negar Saei, Daniel Sank, Kannan Sankaragomathi, Kevin Satzinger, Henry Schurkus, Christopher Schuster, Michael Shearn, Aaron Shorter, Noah Shutty, Vladimir Shvarts, Volodymyr Sivak, Jindra Skruzny, Clarke Smith, Rolando Somma, George Sterling, Doug Strain, Marco Szalay, Douglas Thor, Alfredo Torres, Guifre Vidal, Benjamin Villalonga, Catherine Vollgraff Heidweiller, Theodore White, Bryan Woo, Cheng Xing, Jamie Yao, Ping Yeh, Juhwan Yoo, Grayson Young, Adam Zalcman, Yaxing Zhang, Ningfeng Zhu, Nicholas Zobrist, Hartmut Neven, Ryan Babbush, Dave Bacon, Sergio Boixo, Jeremy Hilton, Erik Lucero, Anthony Megrant, Julian Kelly, Yu Chen, Vadim Smelyanskiy, Vedika Khemani, Sarang Gopalakrishnan, Tomaž Prosen, Pedram Roushan

Session W50: Quantum Simulation of Many-Body Physics

Link to Paper

The fast multipole method on a quantum computer

Presenter: Kianna Wan

Authors: Kianna Wan, Dominic W Berry, Ryan Babbush

Session W50: Quantum Simulation of Many-Body Physics

Friday

The quantum computing industry and protecting national security: what tools will work?

Presenter: Kate Weber

Author: Kate Weber

Session Y43: Industry, Innovation, and National Security: Finding the Right Balance

Novel charging effects in the fluxonium qubit

Presenter: Agustin Di Paolo

Authors: Agustin Di Paolo, Kyle Serniak, Andrew J Kerman, William D Oliver

Session Y46: Fluxonium-Based Superconducting Quibits

Microwave Engineering of Parametric Interactions in Superconducting Circuits

Presenter: Ofer Naaman

Author: Ofer Naaman

Session Z46: Broadband Parametric Amplifiers and Circulators

Linear spin wave theory of large magnetic unit cells using the Kernel Polynomial Method

Presenter: Harry Lane

Authors: Harry Lane, Hao Zhang, David A Dahlbom, Sam Quinn, Rolando D Somma, Martin P Mourigal, Cristian D Batista, Kipton Barros

Session Z62: Cooperative Phenomena, Theory

*Work done while at Google