CameraX, the Android Jetpack camera library which helps you create a best-in-class experience that works consistently across Android versions and devices, is becoming even more helpful with its 1.3 release. CameraX is already used in a growing number of Android apps, encompassing a wide range of use cases from straightforward and performant camera interactions to advanced image processing and beyond.

CameraX 1.3 opens up even more advanced capabilities. With the dual concurrent camera feature, apps can operate two cameras at the same time. Additionally, 1.3 makes it simple to delight users with new HDR video capabilities. You can also now add graphics library transformations (for example, with OpenGL or Vulkan) to the Preview, ImageCapture, and VideoCapture UseCases to apply filters and effects. There are also many other video improvements.

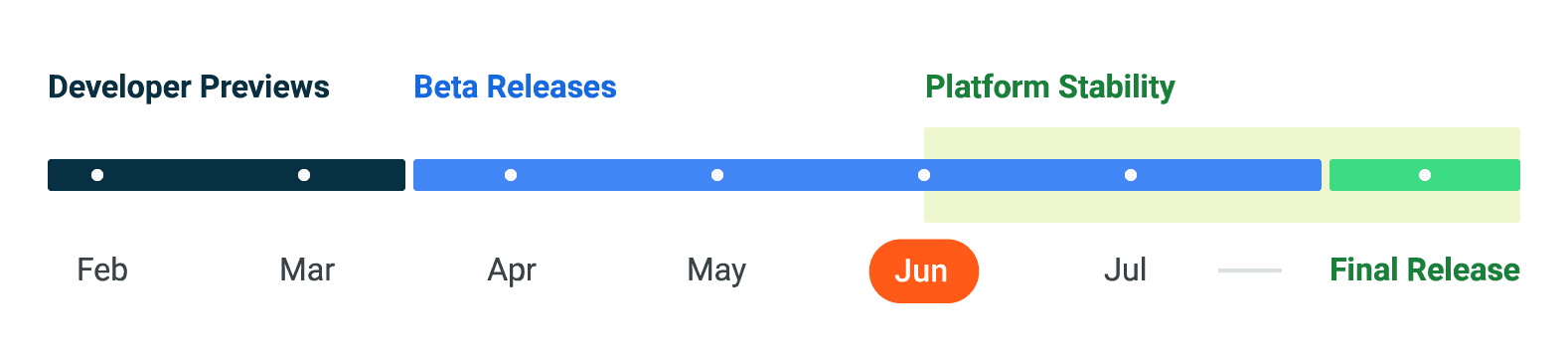

CameraX version 1.3 is officially in Beta as of today, so let’s get right into the details!

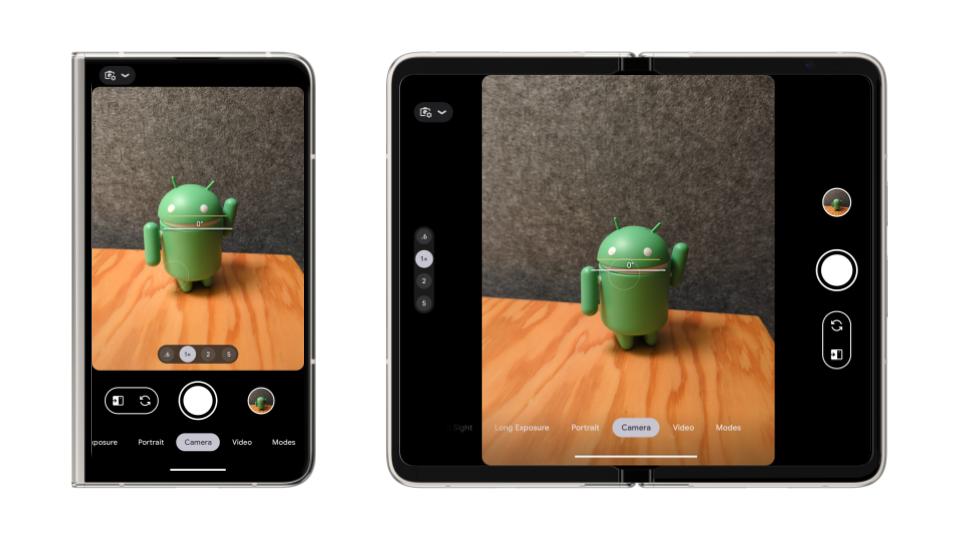

Dual concurrent camera

CameraX makes complex camera functionality easy to use, and the new dual concurrent camera feature is no exception. CameraX handles the low-level details like ensuring the concurrent camera streams are opened and closed in the correct order. In CameraX, binding dual concurrent cameras is not that different from binding a single camera.

First, check which cameras support a concurrent connection with getAvailableConcurrentCameraInfos(). A common scenario is to select a front-facing and a back-facing camera.

```kotlin
var primaryCameraSelector: CameraSelector? = null
var secondaryCameraSelector: CameraSelector? = null

for (cameraInfos in cameraProvider.availableConcurrentCameraInfos) {
    // firstOrNull() returns null instead of throwing when no camera matches.
    primaryCameraSelector = cameraInfos.firstOrNull {
        it.lensFacing == CameraSelector.LENS_FACING_FRONT
    }?.cameraSelector
    secondaryCameraSelector = cameraInfos.firstOrNull {
        it.lensFacing == CameraSelector.LENS_FACING_BACK
    }?.cameraSelector

    if (primaryCameraSelector == null || secondaryCameraSelector == null) {
        // If either a primary or secondary selector wasn't found, reset both
        // to move on to the next list of CameraInfos.
        primaryCameraSelector = null
        secondaryCameraSelector = null
    } else {
        // If both primary and secondary camera selectors were found, we can
        // conclude the search.
        break
    }
}

if (primaryCameraSelector == null || secondaryCameraSelector == null) {
    // Front and back concurrent camera not available. Handle accordingly.
}
```

Then, create a SingleCameraConfig for each camera, passing in the matching camera selector from before, a UseCaseGroup for that camera, and your LifecycleOwner. Finally, call bindToLifecycle() on your CameraProvider with both SingleCameraConfigs in a list.

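```kotlin
// Each camera gets its own UseCaseGroup: a UseCase instance can only be
// bound to one camera at a time.
val primary = ConcurrentCamera.SingleCameraConfig(
    requireNotNull(primaryCameraSelector),
    primaryUseCaseGroup,
    lifecycleOwner
)
val secondary = ConcurrentCamera.SingleCameraConfig(
    requireNotNull(secondaryCameraSelector),
    secondaryUseCaseGroup,
    lifecycleOwner
)

val concurrentCamera = cameraProvider.bindToLifecycle(
    listOf(primary, secondary)
)
```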

For compatibility reasons, dual concurrent camera limits each camera to at most two bound UseCases, with a maximum resolution of 720p or 1440p depending on the device.
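CameraX doesn't allow a single UseCase instance to be bound to two cameras at once, so each SingleCameraConfig needs its own UseCaseGroup. The sketch below builds one group per camera containing a single Preview, which stays well within the two-UseCase limit. The helper name `singlePreviewGroup` and the `frontPreviewView`/`backPreviewView` views in the usage comment are hypothetical.

```kotlin
import androidx.camera.core.Preview
import androidx.camera.core.UseCaseGroup

// Hypothetical helper: builds a UseCaseGroup holding one Preview that renders
// into the given SurfaceProvider (for example, previewView.surfaceProvider).
fun singlePreviewGroup(surfaceProvider: Preview.SurfaceProvider): UseCaseGroup {
    val preview = Preview.Builder().build()
    preview.setSurfaceProvider(surfaceProvider)
    return UseCaseGroup.Builder()
        .addUseCase(preview)
        .build()
}

// Usage (frontPreviewView and backPreviewView are hypothetical PreviewViews):
// val primaryUseCaseGroup = singlePreviewGroup(frontPreviewView.surfaceProvider)
// val secondaryUseCaseGroup = singlePreviewGroup(backPreviewView.surfaceProvider)
```

If you need a second UseCase per camera, such as an ImageCapture, add it to that camera's group with another addUseCase() call.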

HDR video

CameraX 1.3 also adds support for 10-bit video streaming along with HDR profiles, giving you the ability to capture video with greater detail, color, and contrast than previously available. You can use the VideoCapture.Builder.setDynamicRange() method to choose which dynamic range, and therefore which HDR encoding, VideoCapture records with. Several predefined DynamicRange values are available:

- HLG_10_BIT - A 10-bit high-dynamic range with HLG encoding. This is the recommended HDR encoding to use because every device that supports HDR capture supports HLG10. See the Check for HDR support guide for details.

- HDR10_10_BIT - A 10-bit high-dynamic range with HDR10 encoding.

- HDR10_PLUS_10_BIT - A 10-bit high-dynamic range with HDR10+ encoding.

- DOLBY_VISION_10_BIT - A 10-bit high-dynamic range with Dolby Vision encoding.

- DOLBY_VISION_8_BIT - An 8-bit high-dynamic range with Dolby Vision encoding.
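Since HLG_10_BIT is the recommended encoding, you may want to prefer it whenever a camera reports several HDR options. A minimal sketch, assuming you already have the camera's supported set from `Recorder.getVideoCapabilities(cameraInfo).getSupportedDynamicRanges()`; the helper name `preferredHdrRange` is hypothetical:

```kotlin
import androidx.camera.core.DynamicRange

// Hypothetical helper: prefers HLG10, falls back to any other non-SDR range,
// and returns null when the camera only supports SDR.
fun preferredHdrRange(supported: Set<DynamicRange>): DynamicRange? =
    if (DynamicRange.HLG_10_BIT in supported) {
        DynamicRange.HLG_10_BIT
    } else {
        supported.firstOrNull { it != DynamicRange.SDR }
    }
```

The result can be passed straight to VideoCapture.Builder.setDynamicRange() when it isn't null.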

First, loop through the available CameraInfos to find the first one that supports HDR. You can add additional camera selection criteria here.

```kotlin
var supportedHdrEncoding: DynamicRange? = null

val hdrCameraInfo = cameraProvider.availableCameraInfos
    .firstOrNull { cameraInfo ->
        val videoCapabilities = Recorder.getVideoCapabilities(cameraInfo)
        val supportedDynamicRanges =
            videoCapabilities.getSupportedDynamicRanges()
        supportedHdrEncoding = supportedDynamicRanges.firstOrNull {
            it != DynamicRange.SDR // Ensure an HDR encoding is found
        }
        // The lambda's last expression is its result: stop at this camera
        // only if it reported an HDR encoding.
        supportedHdrEncoding != null
    }

val cameraSelector = hdrCameraInfo?.cameraSelector ?:
    CameraSelector.DEFAULT_BACK_CAMERA
```

Then, set up a Recorder and a VideoCapture UseCase. If you found a supportedHdrEncoding earlier, also call setDynamicRange() to turn on HDR in your camera app.

```kotlin
// Create a Recorder with Quality.HIGHEST, which will select the highest
// resolution compatible with the chosen DynamicRange.
val recorder = Recorder.Builder()
    .setQualitySelector(QualitySelector.from(Quality.HIGHEST))
    .build()

val videoCaptureBuilder = VideoCapture.Builder(recorder)
// Only request HDR if a supported HDR encoding was found earlier.
supportedHdrEncoding?.let { videoCaptureBuilder.setDynamicRange(it) }
val videoCapture = videoCaptureBuilder.build()
```

Effects

While CameraX makes many camera tasks easy, it also provides hooks to accomplish advanced or custom functionality. The new effects methods enable custom graphics library transformations to be applied to frames for Preview, ImageCapture, and VideoCapture.

You can define a CameraEffect to inject code into the CameraX pipeline and apply visual effects, such as a custom portrait effect. When creating your own CameraEffect via the constructor, you must specify which use cases to target (from PREVIEW, VIDEO_CAPTURE, and IMAGE_CAPTURE). You must also specify a SurfaceProcessor to implement a GPU effect for the underlying Surface. It's recommended to use a graphics API such as OpenGL or Vulkan to access the Surface. This process will block the Executor associated with the ImageCapture. An internal I/O thread is used by default, or you can set one with ImageCapture.Builder.setIoExecutor(). Note: It’s the implementation’s responsibility to be performant. For a 30fps input, each frame should be processed in under 30 ms to avoid frame drops.
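A minimal sketch of a CameraEffect that targets both Preview and VideoCapture, defined by subclassing CameraEffect and forwarding the targets, executor, processor, and error listener to its constructor. It assumes you already have a SurfaceProcessor implementation (for example, an OpenGL renderer) and an Executor for the thread that owns its GL context; the class name `PreviewAndVideoEffect` is hypothetical.

```kotlin
import androidx.camera.core.CameraEffect
import androidx.camera.core.SurfaceProcessor
import androidx.core.util.Consumer
import java.util.concurrent.Executor

// Hypothetical effect: routes Preview and VideoCapture frames through
// `processor`, a GPU SurfaceProcessor you implement yourself.
class PreviewAndVideoEffect(
    glExecutor: Executor,          // thread that owns the processor's GL context
    processor: SurfaceProcessor,   // your OpenGL/Vulkan renderer
    onError: Consumer<Throwable>,  // receives errors raised during processing
) : CameraEffect(
    CameraEffect.PREVIEW or CameraEffect.VIDEO_CAPTURE, // use cases to target
    glExecutor,
    processor,
    onError,
)
```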

There is an alternative CameraEffect constructor for processing still images, since higher latency is more acceptable when processing a single image. For this constructor, you pass in an ImageProcessor and implement its process() method, which receives the input image through ImageProcessor.Request.getInputImage() and returns the processed image in an ImageProcessor.Response.
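For still images, the same subclassing pattern works with an ImageProcessor and the IMAGE_CAPTURE target. In this sketch, `applyStillEffect` is a hypothetical function standing in for your own image-editing code and is assumed to return an ImageProxy holding the processed result; `StillImageProcessor` and `StillImageEffect` are also illustrative names.

```kotlin
import androidx.camera.core.CameraEffect
import androidx.camera.core.ImageProcessor
import androidx.camera.core.ImageProxy
import androidx.core.util.Consumer
import java.util.concurrent.Executor

// Hypothetical processor: hands the captured image to applyStillEffect and
// wraps whatever it returns in an ImageProcessor.Response.
class StillImageProcessor(
    private val applyStillEffect: (ImageProxy) -> ImageProxy,
) : ImageProcessor {
    override fun process(request: ImageProcessor.Request): ImageProcessor.Response {
        val output = applyStillEffect(request.inputImage)
        return ImageProcessor.Response { output }
    }
}

// Hypothetical effect that applies the processor to ImageCapture only.
class StillImageEffect(
    executor: Executor,
    processor: ImageProcessor,
    onError: Consumer<Throwable>,
) : CameraEffect(CameraEffect.IMAGE_CAPTURE, executor, processor, onError)
```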

Once you’ve defined one or more CameraEffects, you can add them to your CameraX setup. If you’re using a CameraProvider, call UseCaseGroup.Builder.addEffect() for each CameraEffect, then build the UseCaseGroup and pass it in to bindToLifecycle(). If you’re using a CameraController, pass all of your CameraEffects into setEffects().
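Both setup paths can be sketched as follows. The function names, and the choice to call unbindAll() before rebinding, are assumptions for illustration rather than required API usage.

```kotlin
import androidx.camera.core.CameraEffect
import androidx.camera.core.CameraSelector
import androidx.camera.core.UseCase
import androidx.camera.core.UseCaseGroup
import androidx.camera.lifecycle.ProcessCameraProvider
import androidx.camera.view.CameraController
import androidx.lifecycle.LifecycleOwner

// CameraProvider path: add each use case and each effect to one
// UseCaseGroup, then bind that group.
fun bindWithEffects(
    cameraProvider: ProcessCameraProvider,
    lifecycleOwner: LifecycleOwner,
    cameraSelector: CameraSelector,
    useCases: List<UseCase>,
    effects: List<CameraEffect>,
) {
    val builder = UseCaseGroup.Builder()
    useCases.forEach { builder.addUseCase(it) }
    effects.forEach { builder.addEffect(it) }
    cameraProvider.unbindAll() // clear any earlier bindings first
    cameraProvider.bindToLifecycle(lifecycleOwner, cameraSelector, builder.build())
}

// CameraController path: hand the whole set of effects to the controller
// (call from the main thread).
fun applyEffects(controller: CameraController, effects: Set<CameraEffect>) {
    controller.setEffects(effects)
}
```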

Additional video features

CameraX 1.3 has many additional highly requested video features that we’re excited to add support for.

With VideoCapture.Builder.setMirrorMode(), you can control when video recordings are reflected horizontally. You can set MIRROR_MODE_OFF (the default), MIRROR_MODE_ON, and MIRROR_MODE_ON_FRONT_ONLY (useful for matching the mirror state of the Preview, which is mirrored on front-facing cameras). Note: in an app that only uses the front-facing camera, MIRROR_MODE_ON and MIRROR_MODE_ON_FRONT_ONLY are equivalent.
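For example, to mirror recordings only when the front camera is active, so they match what users saw in the Preview, you might configure VideoCapture like this. A minimal sketch; the function name is hypothetical.

```kotlin
import androidx.camera.core.MirrorMode
import androidx.camera.video.Recorder
import androidx.camera.video.VideoCapture

// Hypothetical helper: mirrors recordings from front-facing cameras only,
// matching the Preview's default mirroring behavior.
fun buildMirroredVideoCapture(recorder: Recorder): VideoCapture<Recorder> =
    VideoCapture.Builder(recorder)
        .setMirrorMode(MirrorMode.MIRROR_MODE_ON_FRONT_ONLY)
        .build()
```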

The PendingRecording.asPersistentRecording() method prevents a recording from being stopped by lifecycle events or by explicitly unbinding the VideoCapture use case that the recording's Recorder is attached to. This is useful if you want to bind to a different camera and continue the video recording with that camera. When this option is enabled, you must explicitly call Recording.stop() or Recording.close() to end the recording.
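A sketch of starting a persistent recording to a file. It assumes asPersistentRecording() requires opting in to the ExperimentalPersistentRecording annotation; drop the @OptIn line if your CameraX version doesn't mark it experimental. The function name is hypothetical, and `file` is a File your app provides.

```kotlin
import android.content.Context
import androidx.annotation.OptIn
import androidx.camera.video.ExperimentalPersistentRecording
import androidx.camera.video.FileOutputOptions
import androidx.camera.video.Recorder
import androidx.camera.video.Recording
import androidx.core.content.ContextCompat
import java.io.File

// Hypothetical helper: the returned Recording keeps running if VideoCapture
// is unbound (for example, while switching cameras) and must be ended with
// stop() or close().
@OptIn(ExperimentalPersistentRecording::class)
fun startPersistentRecording(context: Context, recorder: Recorder, file: File): Recording =
    recorder.prepareRecording(context, FileOutputOptions.Builder(file).build())
        .asPersistentRecording()
        .start(ContextCompat.getMainExecutor(context)) { /* observe VideoRecordEvents */ }
```

To switch cameras mid-recording, you could unbind VideoCapture, rebind it with the other CameraSelector, and call stop() on the returned Recording once the user is done.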

For videos that are set to record audio via PendingRecording.withAudioEnabled(), you can now call Recording.mute() while the recording is in progress. Pass in a boolean to specify whether to mute or unmute the audio, and CameraX will insert silence during the muted portions to ensure the audio stays aligned with the video.
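A minimal sketch of recording with audio and toggling mute, assuming the app has already been granted the RECORD_AUDIO permission; the function names are hypothetical.

```kotlin
import android.Manifest
import android.content.Context
import androidx.annotation.RequiresPermission
import androidx.camera.video.FileOutputOptions
import androidx.camera.video.Recorder
import androidx.camera.video.Recording
import androidx.core.content.ContextCompat
import java.io.File

// Hypothetical helper: starts a recording with audio enabled.
// The caller must already hold the RECORD_AUDIO permission.
@RequiresPermission(Manifest.permission.RECORD_AUDIO)
fun startRecordingWithAudio(context: Context, recorder: Recorder, file: File): Recording =
    recorder.prepareRecording(context, FileOutputOptions.Builder(file).build())
        .withAudioEnabled()
        .start(ContextCompat.getMainExecutor(context)) { /* observe VideoRecordEvents */ }

// Hypothetical helper: mutes (true) or unmutes (false) an in-progress recording.
// CameraX fills muted stretches with silence so audio stays aligned with video.
fun setRecordingMuted(recording: Recording, muted: Boolean) {
    recording.mute(muted)
}
```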

AudioStats now has a getAudioAmplitude() method, which is perfect for showing a visual indicator to users that audio is being recorded. While a video recording is in progress, each VideoRecordEvent can be used to access RecordingStats, which in turn contains the AudioStats object.
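One way to feed getAudioAmplitude() into a UI level meter is a VideoRecordEvent listener like the one below. The helper name `audioLevelListener` and the `onAmplitude` callback are hypothetical; you would pass the returned Consumer as the listener argument of PendingRecording.start().

```kotlin
import androidx.camera.video.VideoRecordEvent
import androidx.core.util.Consumer

// Hypothetical helper: forwards the latest audio amplitude from each
// VideoRecordEvent to onAmplitude, e.g. to drive a level meter.
fun audioLevelListener(onAmplitude: (Double) -> Unit): Consumer<VideoRecordEvent> =
    Consumer<VideoRecordEvent> { event ->
        // Every VideoRecordEvent carries RecordingStats, which hold AudioStats.
        onAmplitude(event.recordingStats.audioStats.audioAmplitude)
    }
```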

Next steps

Check the full release notes for CameraX 1.3 for more details on the features described here and more! If you’re ready to try out CameraX 1.3, update your project’s CameraX dependency to 1.3.0-beta01 (or the latest version at the time you’re reading this).
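For example, in a module-level build.gradle.kts the dependencies might look like the fragment below. Which artifacts you need depends on the features you use: camera-video provides Recorder and VideoCapture, and camera-view provides PreviewView and CameraController.

```kotlin
// build.gradle.kts (module level)
dependencies {
    val cameraxVersion = "1.3.0-beta01"
    implementation("androidx.camera:camera-core:$cameraxVersion")
    implementation("androidx.camera:camera-camera2:$cameraxVersion")
    implementation("androidx.camera:camera-lifecycle:$cameraxVersion")
    implementation("androidx.camera:camera-video:$cameraxVersion")
    implementation("androidx.camera:camera-view:$cameraxVersion")
}
```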

If you would like to provide feedback on any of these features or CameraX in general, please create a CameraX issue. As always, you can also reach out on our CameraX Discussion Group.

Posted by Donovan McMurray, Camera Developer Relations Engineer
