Posted by Nari Yoon, Hee Jung, DevRel Community Manager / Soonson Kwon, DevRel Program Manager

Let’s explore highlights and accomplishments of vast Google Machine Learning communities over the last quarter of 2022. We are enthusiastic and grateful about all the activities by the global network of ML communities. Here are the highlights!

ML at DevFest 2022

A large number of members of ML GDE, TFUG, and 3P ML communities participated in

DevFests 2022 worldwide covering various ML topics with Google products.

Machine Learning with Jax: Zero to Hero (DevFest Conakry) by ML GDE Yannick Serge Obam Akou (Cameroon) and

Easy ML on Google Cloud (DevFest Med) by ML GDE Nathaly Alarcon Torrico (Bolivia) hosted great sessions.

ML Community Summit 2022

ML Community Summit 2022 was hosted on Oct 22-23, 2022, in Bangkok, Thailand. Twenty-five most active community members (ML GDE or TFUG organizer) were invited and shared their past activities and thoughts on Google’s ML products.

A video sketch from ML Developer Programs team and

a blog posting by ML GDE Margaret Maynard-Reid (United States) help us revisit the moments.

TensorFlow

MAXIM in TensorFlow by ML GDE Sayak Paul (India) shows his implementation of the MAXIM family of models in TensorFlow.

gMLP: What it is and how to use it in practice with Tensorflow and Keras? by ML GDE Radostin Cholakov (Bulgaria) demonstrates the state-of-the-art results on NLP and computer vision tasks using a lot less trainable parameters than corresponding Transformer models. He also wrote Differentiable discrete sampling in TensorFlow.

Building Computer Vision Model using TensorFlow: Part 2 by TFUG Pune for the developers who want to deep dive into training an object detection model on Google Colab, inspecting the TF Lite model, and deploying the model on an Android application. ML GDE Nitin Tiwari (India) covered detailed aspects for end-to-end training and deployment of object model detection.

Advent of Code 2022 in pure TensorFlow (days 1-5) by ML GDE Paolo Galeone (Italy) solving the Advent of Code (AoC) puzzles using only TensorFlow. The articles contain a description of the solutions of the Advent of Code puzzles 1-5, in pure TensorFlow.

Build tensorflow-lite-select-tf-ops.aar and tensorflow-lite.aar files with Colab by ML GDE George Soloupis (Greece) guides how you can shrink the final size of your Android application’s .apk by building tensorflow-lite-select-tf-ops.aar and tensorflow-lite.aar files without the need of Docker or personal PC environment.

TensorFlow Lite and MediaPipe Application by ML GDE XuHua Hu (China) explains how to use TFLite to deploy an ML model into an application on devices. He shared experiences with developing a motion sensing game with MediaPipe, and how to solve problems that we may meet usually.

Keras

Let’s Generate Images with Keras based Stable Diffusion by ML GDE Chansung Park (Korea) delivered how to generate images with given text and what stable diffusion is. He also talked about Keras-based stable diffusion, basic building blocks, and the advantages of using Keras-based stable diffusion.

TFX

How startups can benefit from TFX by ML GDE Hannes Hapke (United States) explains how the San Francisco-based FinTech startup Digits has benefitted from applying TFX early, how TFX helps Digits grow, and how other startups can benefit from TFX too.

Usha Rengaraju (India) shared TensorFlow Extended (TFX) Tutorials (Part 1, Part 2, Part 3) and the following TF projects: TensorFlow Decision Forests Tutorial and FT Transformer TensorFlow Implementation.

Hyperparameter Tuning and ML Pipeline by ML GDE Chansung Park (Korea) explained hyperparam tuning, why it is important; Introduction to KerasTuner, basic usage; how to visualize hyperparam tuning results with TensorBoard; and integration within ML pipeline with TFX.

JAX/Flax

JAX High-performance ML Research by TFUG Taipei and ML GDE Jerry Wu (Taiwan) introduced JAX and how to start using JAX to solve machine learning problems.

Introduction to JAX with Flax (

slides) by ML GDE Phillip Lippe (Netherlands) reviewed from the basics of the requirements we have on a DL framework to what JAX has to offer. Further, he focused on the powerful function-oriented view JAX offers and how Flax allows you to use them in training neural networks.

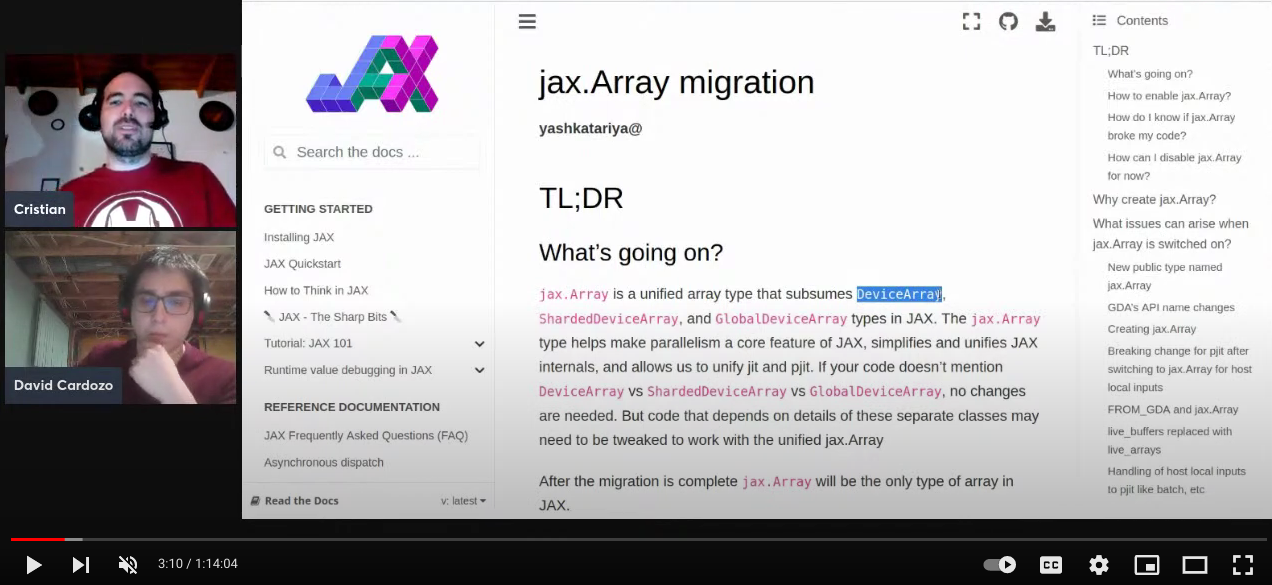

JAX Streams: Exploring JAX 0.4 by ML GDE David Cardozo (Canada) and Cristian Garcia (Colombia) showed a review of new features (specifically Shared Arrays) in the recent release of JAX and demonstrated live coding.

Kaggle

Low-light Image Enhancement using MirNetv2 by ML GDE Soumik Rakshit (India) demonstrated the task of Low-light Image Enhancement.

Cloud AI

Better Hardware Provisioning for ML Experiments on GCP by ML GDE Sayak Paul (India) discussed the pain points of provisioning hardware (especially for ML experiments) and how we can get better provision hardware with code using Vertex AI Workbench instances and Terraform.

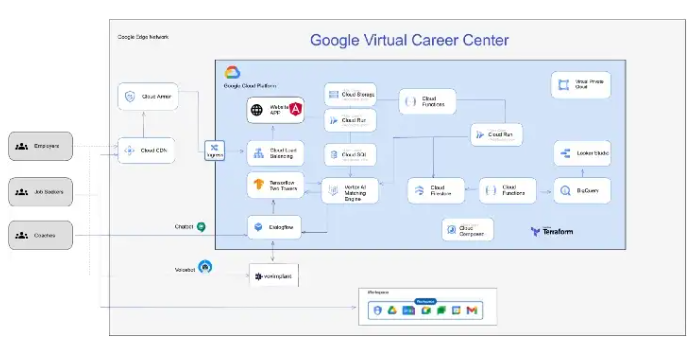

MLOps workshop with TensorFlow and Vertex AI by

TFUG Chennai targeted beginners and intermediate-level practitioners to give hands-on experience on the E2E MLOps pipeline with GCP. In the workshop, they shared the various stages of an ML pipeline, the top tools to build a solution, and how to design a workflow using an open-source framework like ZenML.

More practical time-series model with BQML by ML GDE JeongMin Kwon (Korea) introduced BQML and time-series modeling and showed some practical applications with BQML ARIMA+ and Python implementations.

Research & Ecosystem

AI in Healthcare by ML GDE Sara EL-ATEIF (Morocco) introduced AI applications in healthcare and the challenges facing AI in its adoption into the health system.

Women in AI APAC finished their journey at ML Paper Reading Club. During 10 weeks, participants gained knowledge on outstanding machine learning research, learned the latest techniques, and understood the notion of “ML research” among ML engineers. See their session here.

A Natural Language Understanding Model LaMDA for Dialogue Applications by ML GDE Jerry Wu (Taiwan) introduced the natural language understanding (NLU) concept and shared the operation mode of LaMDA, model fine-tuning, and measurement indicators.

Python library for Arabic NLP preprocessing (Ruqia) by ML GDE Ruqiya Bin (Saudi Arabia) is her first python library to serve Arabic NLP.

Anatomy of Capstone ML Projects 🫀by ML GDE Sayak Paul (India) discussed working on capstone ML projects that will stay with you throughout your career. He covered various topics ranging from problem selection to tightening up the technical gotchas to presentation. And in Improving as an ML Practitioner he shared his learning from experience in the field working on several aspects.

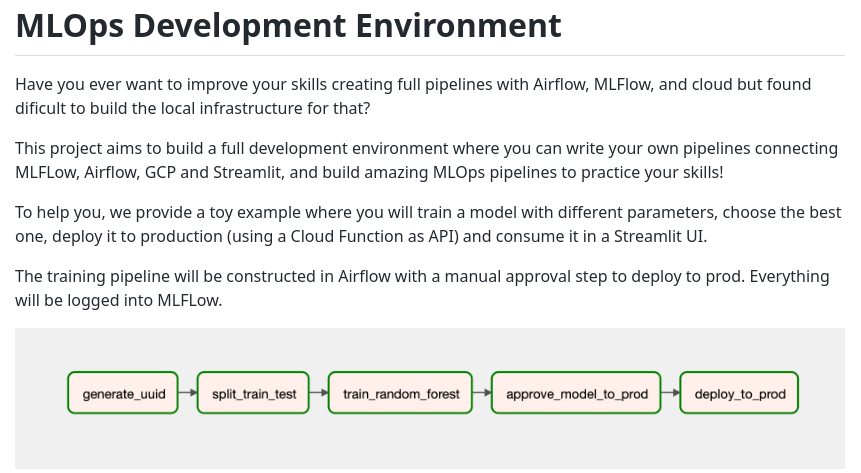

MLOps Development Environment by ML GDE Vinicius Caridá (Brazil) aims to build a full development environment where you can write your own pipelines connecting MLFLow, Airflow, GCP and Streamlit, and build amazing MLOps pipelines to practice your skills.

Transcending Scaling Laws with 0.1% Extra Compute by ML GDE Grigory Sapunov (UK) reviewed a recent Google article on UL2R. And his posting Discovering faster matrix multiplication algorithms with reinforcement learning explained how AlphaTensor works and why it is important.

Back in Person - Prompting, Instructions and the Future of Large Language Models by TFUG Singapore and ML GDE Sam Witteveen (Singapore) and Martin Andrews (Singapore). This event covered recent advances in the field of large language models (LLMs).

ML for Production: The art of MLOps in TensorFlow Ecosystem with GDG Casablanca by TFUG Agadir discussed the motivation behind using MLOps and how it can help organizations automate a lot of pain points in the ML production process. It also covered the tools used in the TensorFlow ecosystem.

For International Women’s Day, our latest #WeArePlay stories feature inspirational women building apps and games.

For International Women’s Day, our latest #WeArePlay stories feature inspirational women building apps and games.

For International Women’s Day, our latest #WeArePlay stories feature inspirational women building apps and games.

For International Women’s Day, our latest #WeArePlay stories feature inspirational women building apps and games.

Google Registry celebrates the first anniversary of .day and announces collaboration with United Nation organizations using this domain.

Google Registry celebrates the first anniversary of .day and announces collaboration with United Nation organizations using this domain.

People across the world are developing and starting websites that everyone loves, no matter who, where, and how. See why.

People across the world are developing and starting websites that everyone loves, no matter who, where, and how. See why.

Chryssa Jones, Women Techmakers Ambassador, shares her story about moving into tech and advocating for equity and inclusion.

Chryssa Jones, Women Techmakers Ambassador, shares her story about moving into tech and advocating for equity and inclusion.

Our latest #WeArePlay film features Filipe, a former researcher who co-founded a game company to share Brazilian culture with the world.

Our latest #WeArePlay film features Filipe, a former researcher who co-founded a game company to share Brazilian culture with the world.