Posted by Jen Person, DevRel Engineer, CoreML

If you're reading this blog, then you're probably interested in creating a custom machine learning (ML) model. I recently went through the process myself, creating a custom dog detector to go with a Codelab, Create a custom object detection web app with MediaPipe. As with any new coding task, it took some trial and error to figure out what I was doing. To minimize the error part of your "trial and error" experience, I'm happy to share five takeaways from my model training experience with you.

1. Preparing data takes a long time. Be sure to make the time

Preparing your data for training will look different depending on the type of model you're customizing. In general, there is a step for sourcing data and a step for annotating data.

Sourcing data

Finding enough data points that best represent your use case can be a challenge. For one, you want to make sure you have the right to use any images or text you include in your data. Check the licensing for your data before training. One way to resolve this is to provide your own data. I just so happen to have hundreds of photos of my dogs, so choosing them for my object detector was a no-brainer. You can also look for existing datasets on Kaggle. There are so many options on Kaggle covering a wide range of use cases. If you're lucky, you'll find an existing dataset that serves your needs and it might even already have annotations!

Annotating data

MediaPipe Model Maker accepts data where each input has a corresponding XML file listing its annotations.
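As a rough illustration of what such a file can contain, the sketch below parses a PASCAL VOC-style annotation using only Python's standard library. The sample XML, the file name `ben_001.jpg`, the label `ben`, and the helper `parse_annotation` are all made up for illustration; check the Model Maker documentation for the exact layout your task expects.

```python
import xml.etree.ElementTree as ET

# Hypothetical PASCAL VOC-style annotation for one image (illustrative values).
SAMPLE_XML = """
<annotation>
  <filename>ben_001.jpg</filename>
  <size><width>640</width><height>480</height></size>
  <object>
    <name>ben</name>
    <bndbox>
      <xmin>120</xmin><ymin>80</ymin><xmax>420</xmax><ymax>400</ymax>
    </bndbox>
  </object>
</annotation>
"""

def parse_annotation(xml_text):
    """Return (filename, [(label, xmin, ymin, xmax, ymax), ...])."""
    root = ET.fromstring(xml_text.strip())
    filename = root.findtext("filename")
    boxes = []
    for obj in root.iter("object"):
        box = obj.find("bndbox")
        # Read the four corner coordinates of the bounding box as integers.
        coords = tuple(
            int(box.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        boxes.append((obj.findtext("name"),) + coords)
    return filename, boxes

filename, boxes = parse_annotation(SAMPLE_XML)
print(filename, boxes)
```

A quick pass like this over every annotation file is a cheap way to catch missing labels or empty boxes before you start training.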

There are several software programs that can help with annotation. This is especially useful when you need to highlight specific areas in images. Some software programs are designed to enable collaboration: an intuitive UI and instructions for annotators mean you can enlist the help of others. A common open source option is Label Studio, which is what I used to annotate my images.

So expect this step to take a long time, and keep in mind that it will probably take even longer than you expect.

2. Simplify your custom model

If you're anything like me, you have a wonderfully grand idea planned for your first custom model. My dog Ben was the inspiration for my first model. He came from a local golden retriever rescue, but when I did a DNA test, it turned out that he's 0% golden retriever! My first idea was to create a golden retriever detector: a solution that could tell you if a dog was a "golden retriever" or "not golden retriever". I thought it could be fun to see what the model thought of Ben, but I quickly realized that I would have to source a lot more images of dogs than I had so I could run the model on other dogs as well. I'd also have to make sure that it could accurately identify golden retrievers of all shades. A few hours into this endeavor, I realized I needed to simplify. That's when I decided to try building a solution for just my three dogs. I had plenty of photos to choose from, so I picked the ones that best showed the dogs in detail. This was a much more successful solution, and a great proof of concept for my golden retriever model, because I refuse to abandon that idea.

Here are a few ways to simplify your first custom model:

- Start with fewer labels. Choose 2-5 classes to assign to your data.

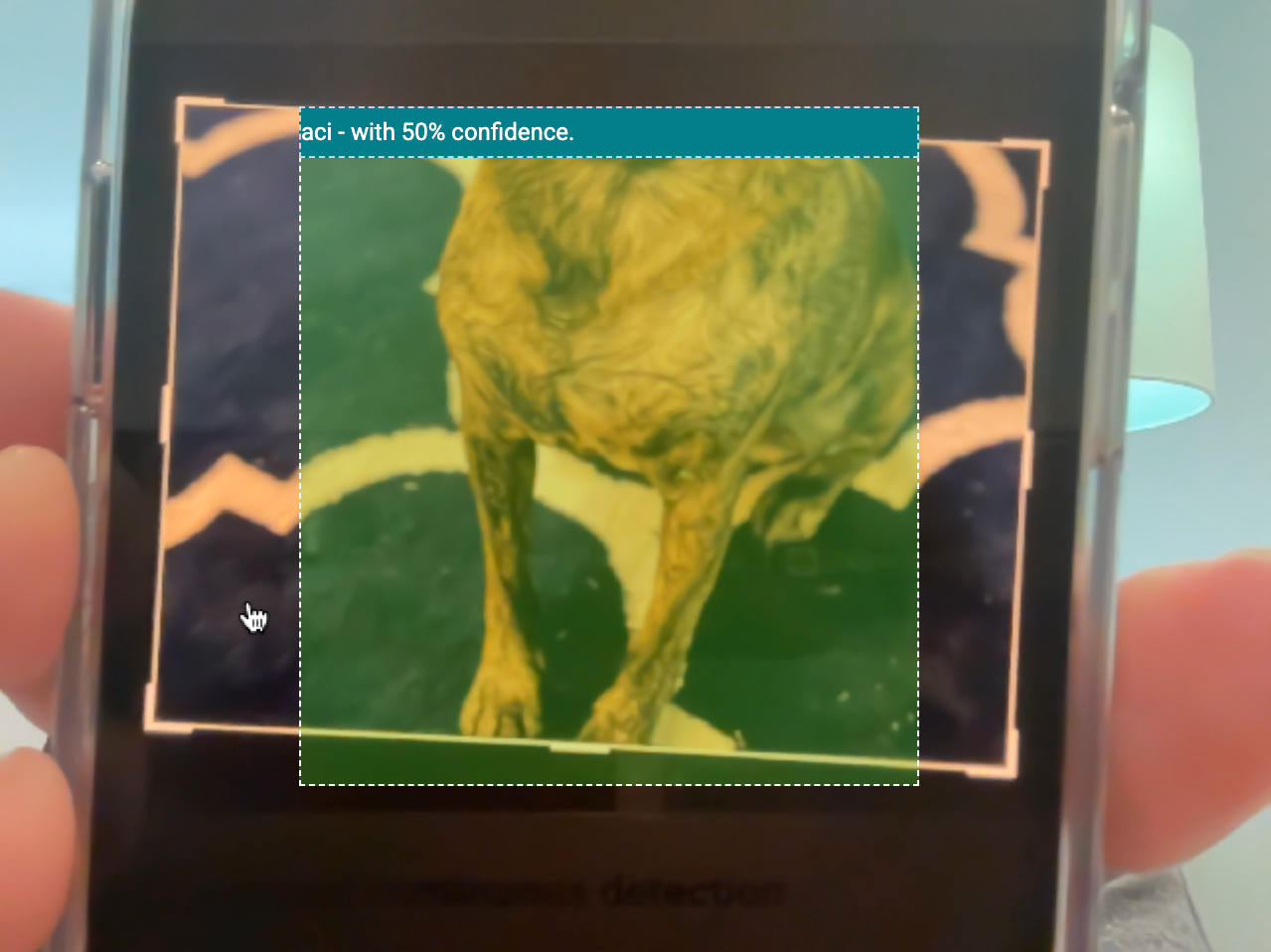

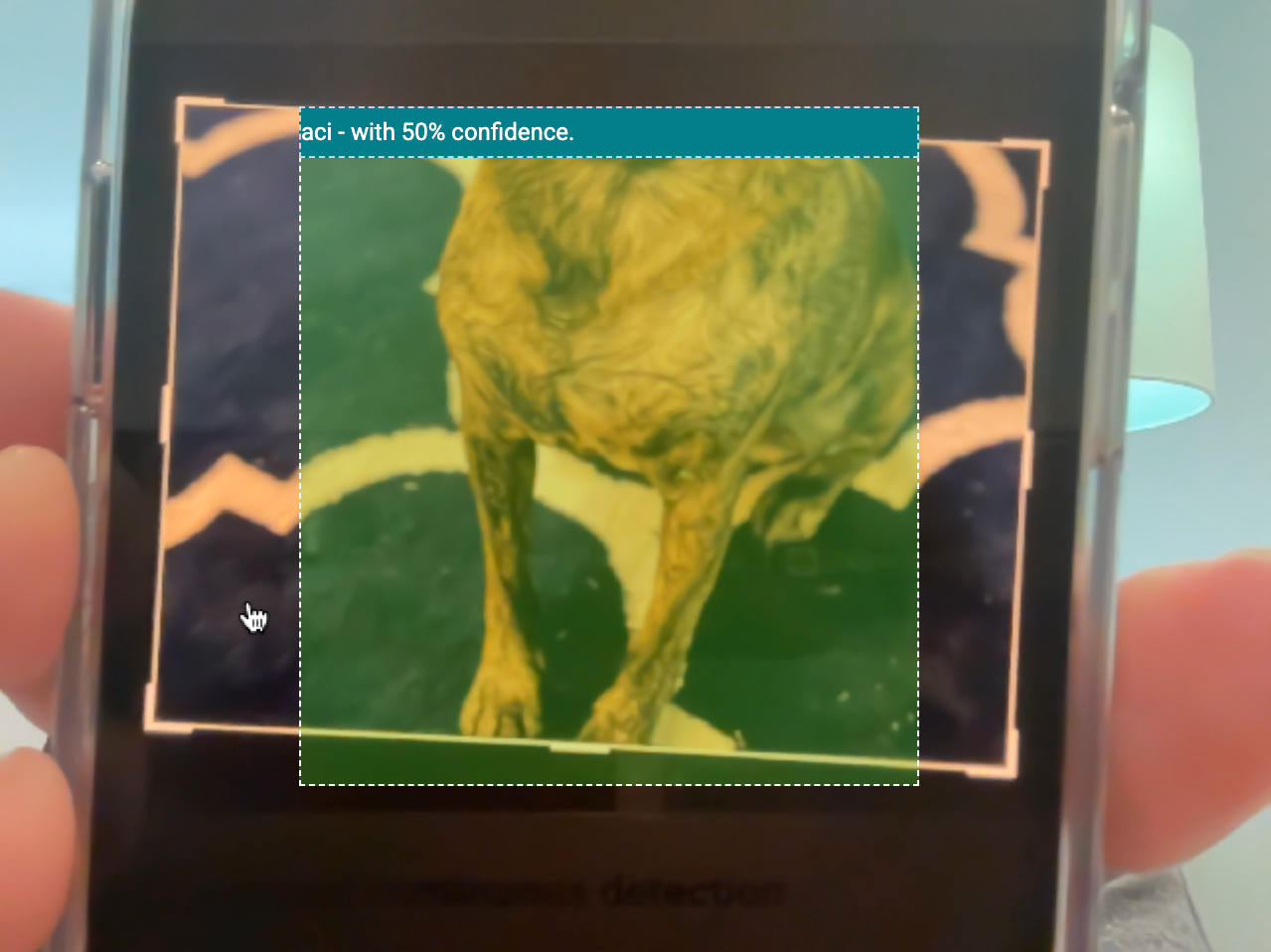

- Leave off the edge cases. If you're coming from a background in software engineering, then you're used to paying attention to and addressing any edge cases. In machine learning, you might introduce errors or strange behavior when you try to train for edge cases. For example, I didn't choose any photos where the dogs' heads aren't visible. Sure, I may want a model that can detect my dogs even from just the back half. But I left partial dog photos out of my training data, and it turns out that the model is still able to detect them.

The web app still identifies ACi in an image even when her head isn't visible.

- A little data goes a long way. Since MediaPipe Model Maker uses transfer learning, you need much less data to train than you would if you were training a model from scratch. Aim for 100 examples for each class. You might be able to train with fewer than 100 examples if there aren't many possible variations in the data. For example, my colleague trained a model to detect two different Android figurines. He didn't need too many photos because there are only so many angles at which to view the figurines. You might need more than 100 examples to start if the data varies a lot. For example, a golden retriever comes in many colors. You might need several dozen examples for each color to ensure the model can accurately identify them, resulting in over 100 examples.
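To check a dataset against the 100-examples-per-class starting point, a small counting script can help. This is a minimal sketch: the helper `classes_below_target` and the label names are hypothetical, and the target is a rule of thumb from this post, not a hard requirement.

```python
from collections import Counter

TARGET_PER_CLASS = 100  # starting point suggested in the text, not a hard rule

def classes_below_target(labels, target=TARGET_PER_CLASS):
    """Map each under-represented class to how many more examples it needs."""
    counts = Counter(labels)
    return {name: target - n for name, n in counts.items() if n < target}

# Hypothetical label list: one entry per annotated bounding box.
labels = ["ben"] * 120 + ["aci"] * 95 + ["dog_c"] * 60
print(classes_below_target(labels))
```

Classes that show up in the result are the first place to look when deciding which photos to add next.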

So when it comes to your first ML training experience, remember to simplify, simplify, simplify.

Simplify.

Simplify.

3. Expect several training iterations

As much as I'd like to confidently say you'll get the right results from your model the first time you train, it probably won't happen. Taking your time with choosing data samples and annotation will definitely improve your success rate, but there are so many factors that can change how the model behaves. You might find that you need to start with a different model architecture to reach your desired accuracy. Or, you might try a different split of training and validation data. You might need to add more samples to your dataset. Fortunately, transfer learning with MediaPipe Model Maker generally takes several minutes, so you can turn around new iterations fairly quickly.
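One of the knobs mentioned above is the split between training and validation data. The sketch below shows one way to produce a reproducible split in plain Python so you can compare iterations fairly. The helper `split_dataset`, the 20% default, and the file names are assumptions for illustration, not part of MediaPipe Model Maker.

```python
import random

def split_dataset(items, validation_fraction=0.2, seed=0):
    """Shuffle deterministically and split into (train, validation) lists."""
    if not 0 < validation_fraction < 1:
        raise ValueError("validation_fraction must be between 0 and 1")
    # Sort first so the result depends only on the seed, not on input order.
    shuffled = sorted(items)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, round(len(shuffled) * validation_fraction))
    return shuffled[n_val:], shuffled[:n_val]

# Hypothetical image file names.
images = [f"img_{i:03d}.jpg" for i in range(50)]
train, val = split_dataset(images, validation_fraction=0.2, seed=42)
print(len(train), len(val))
```

Keeping the seed fixed while you change something else, such as the model architecture, makes it easier to tell which change affected the results.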

4. Prototype outside of your app

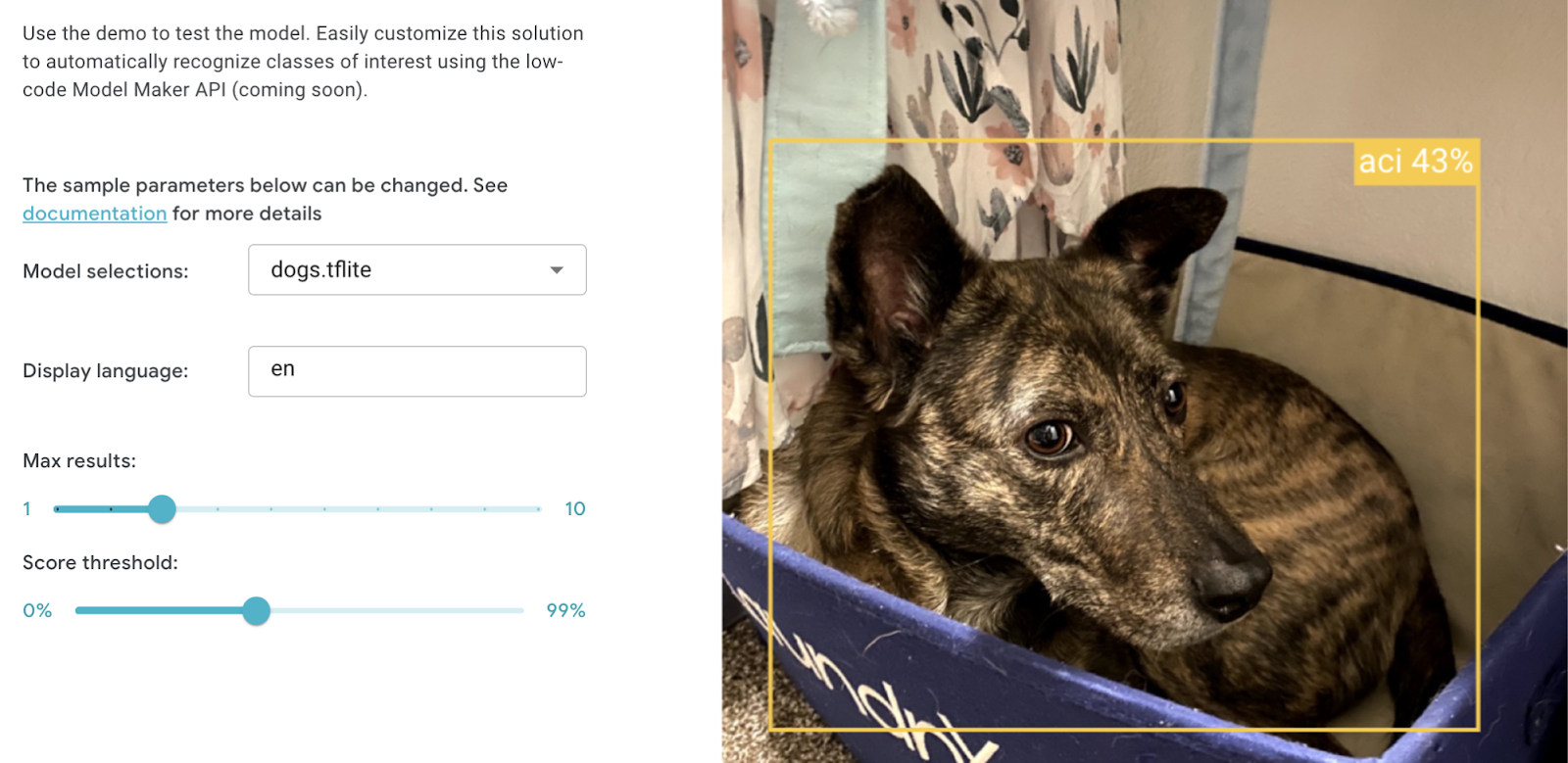

When you finish training a model, you're probably going to be very excited and eager to add it to your app. However, I encourage you to first try out your model in MediaPipe Studio for a couple of reasons:

- Any time you make a change to your app, you probably have to wait for some compile and/or build step to complete. Even with a hot reload, there can be a wait time. So if you decide you want to tweak a configuration option like score threshold, you'll be waiting through every tweak you make and that time can add up. It's not worth the extra time to wait for a whole app to build out when you're just trying to test one component. With MediaPipe Studio, you can try out options and see results with very low latency.

- If you don't get the expected results, you can't confidently determine if the issue is with your model, task configuration, or app.

With MediaPipe Studio, I was able to quickly try out different score thresholds on various images to determine what threshold I should use in my app. I also eliminated my own web app as a factor in this performance.
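If you want to reason about thresholds offline as well, the idea can be sketched in a few lines of Python. The scores below are invented, and `count_detections` is a hypothetical helper. It only mimics how a score threshold filters detections, not how MediaPipe Studio works internally.

```python
def count_detections(scores, thresholds):
    """For each threshold, count detections whose score meets or exceeds it."""
    return {t: sum(1 for s in scores if s >= t) for t in thresholds}

# Hypothetical confidence scores returned for one test image.
scores = [0.92, 0.71, 0.48, 0.33, 0.12]
for threshold, kept in count_detections(scores, [0.3, 0.5, 0.7]).items():
    print(f"threshold {threshold}: {kept} detections kept")
```

A higher threshold drops low-confidence boxes at the risk of missing real detections, which is the trade-off you are tuning when you adjust the setting.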

5. Make incremental changes

After sourcing quality data, simplifying your use case, training, and prototyping, you might find that you need to repeat the cycle to get the right result. When that happens, choose just one part of the process to change, and make a small change. In my case, many photos of my dogs were taken on the same blue couch. If the model started picking up on this couch since it's often inside the bounding box, that could be affecting how it categorized images where the dogs aren't on the couch. Rather than throwing out all the couch photos, I removed just a couple and added about 10 more of each dog where they aren't on the couch. This greatly improved my results. If you try to make a big change right away, you might end up introducing new issues rather than resolving them.

Go forth and customize!

With these tips in mind, it's time for you to customize your own ML solution! You can customize your image classification, gesture recognition, text classification, or object detection model to use in MediaPipe Tasks.

If you’d like to share some learnings from training your first model, post the details on LinkedIn along with a link to this blog post, and then tag me. I can't wait to see what you learn and what you build!
