Chrome Beta for iOS Update

Hi everyone! We've just released Chrome Beta 120 (120.0.6099.6) for iOS; it'll become available on the App Store in the next few days.

You can see a partial list of the changes in the Git log. If you find a new issue, please let us know by filing a bug.

Harry Souders

Google Chrome

Source: Google Chrome Releases

6 ways to celebrate Native American Heritage Month with Google

Here are ways that Google products are honoring Indigenous Peoples this Native American Heritage Month.


Source: Search

Chrome Beta for Android Update

Hi everyone! We've just released Chrome Beta 120 (120.0.6099.4) for Android. It's now available on Google Play.

You can see a partial list of the changes in the Git log. For details on new features, check out the Chromium blog, and for details on web platform updates, check here.

If you find a new issue, please let us know by filing a bug.

Harry Souders

Google Chrome

Source: Google Chrome Releases

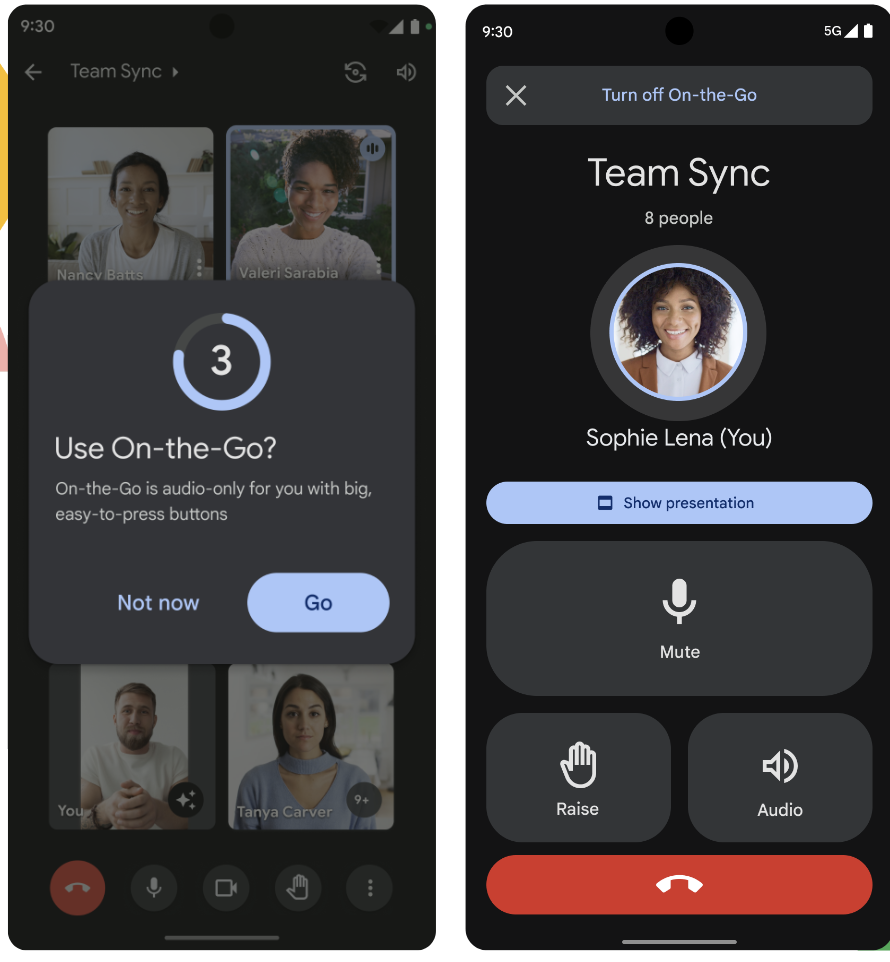

Take Google Meet on-the-go with ease

What’s changing

- A simplified user interface with access to the most critical features such as mute & unmute, hand raise, and audio device selection.

- Only key information is displayed, such as the active speaker and the number of people on the call.

- Your video is automatically turned off.

- You have the option to view presented content.

Who’s impacted

Why it’s important

Getting started

- Admins: There is no admin control for this feature.

- End users: This feature will be on by default and can be turned off by the user. Visit the Help Center to learn more about turning On-the-Go on or off for your account.

Rollout pace

- Rapid Release and Scheduled Release domains: Full rollout (1-3 days for feature availability) beginning on November 1, 2023

Availability

- Available to all Google Workspace customers and users with personal Google accounts

Resources

Source: Google Workspace Updates

Chrome Beta for Desktop Update

The Chrome team is excited to announce the promotion of Chrome 120 to the Beta channel for Windows, Mac and Linux. Chrome 120.0.6099.5 contains our usual under-the-hood performance and stability tweaks, but there are also some cool new features to explore - please head to the Chromium blog to learn more!

A partial list of changes is available in the Git log. Interested in switching release channels? Find out how. If you find a new issue, please let us know by filing a bug. The community help forum is also a great place to reach out for help or learn about common issues.

Srinivas Sista

Google Chrome

Source: Google Chrome Releases

MetNet-3: A state-of-the-art neural weather model available in Google products

Forecasting weather variables such as precipitation, temperature, and wind is key to numerous aspects of society, from daily planning and transportation to energy production. As we continue to see more extreme weather events such as floods, droughts, and heat waves, accurate forecasts can be essential to preparing for and mitigating their effects. The first 24 hours into the future are especially important as they are both highly predictable and actionable, which can help people make informed decisions in a timely manner and stay safe.

Today we present a new weather model called MetNet-3, developed by Google Research and Google DeepMind. Building on the earlier MetNet and MetNet-2 models, MetNet-3 provides high resolution predictions up to 24 hours ahead for a larger set of core variables, including precipitation, surface temperature, wind speed and direction, and dew point. MetNet-3 creates a temporally smooth and highly granular forecast, with lead time intervals of 2 minutes and spatial resolutions of 1 to 4 kilometers. MetNet-3 achieves strong performance compared to traditional methods, outperforming the best single- and multi-member physics-based numerical weather prediction (NWP) models — such as High-Resolution Rapid Refresh (HRRR) and ensemble forecast suite (ENS) — for multiple regions up to 24 hours ahead.

Finally, we’ve integrated MetNet-3’s capabilities across various Google products and technologies where weather is relevant. Currently available in the contiguous United States and parts of Europe with a focus on 12 hour precipitation forecasts, MetNet-3 is helping bring accurate and reliable weather information to people in multiple countries and languages.

MetNet-3 precipitation output summarized into actionable forecasts in Google Search on mobile.

Densification of sparse observations

Many recent machine learning weather models use the atmospheric state generated by traditional methods (e.g., data assimilation from NWPs) as the primary starting point to build forecasts. In contrast, a defining feature of the MetNet models has been to use direct observations of the atmosphere for training and evaluation. The advantage of direct observations is that they often have higher fidelity and resolution. However, direct observations come from a large variety of sensors at different altitudes, including weather stations at the surface level and satellites in orbit, and can be of varying degrees of sparsity. For example, precipitation estimates derived from radar such as NOAA’s Multi-Radar/Multi-Sensor System (MRMS) are relatively dense images, whereas weather stations located on the ground that provide measurements for variables such as temperature and wind are mere points spread over a region.

In addition to the data sources used in previous MetNet models, MetNet-3 includes point measurements from weather stations as both inputs and targets with the goal of making a forecast at all locations. To this end, MetNet-3’s key innovation is a technique called densification, which merges the traditional two-step process of data assimilation and simulation found in physics-based models into a single pass through the neural network. The main components of densification are illustrated below. Although the densification technique applies to a specific stream of data individually, the resulting densified forecast benefits from all the other input streams that go into MetNet-3, including topographical, satellite, radar, and NWP analysis features. No NWP forecasts are included in MetNet-3’s default inputs.

High resolution in space and time

A central advantage of using direct observations is their high spatial and temporal resolution. For example, weather stations and ground radar stations provide measurements every few minutes at specific points and at 1 km resolutions, respectively; this is in stark contrast with the assimilation state from the state-of-the-art model ENS, which is generated every 6 hours at a resolution of 9 km with hour-by-hour forecasts. To handle such a high resolution, MetNet-3 preserves another of the defining features of this series of models, lead time conditioning. The lead time of the forecast in minutes is directly given as input to the neural network. This allows MetNet-3 to efficiently model the high temporal frequency of the observations for intervals as brief as 2 minutes. Densification combined with lead time conditioning and high resolution direct observations produces a fully dense 24 hour forecast with a temporal resolution of 2 minutes, while learning from just 1,000 points from the One Minute Observation (OMO) network of weather stations spread across the United States.
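As a rough illustration of lead time conditioning, the sketch below enumerates the lead times of a 24 hour forecast at 2 minute intervals and encodes one of them as a vector that could be fed to a network alongside the observations. The one-hot encoding, the function names, and the choice to start at 2 minutes rather than 0 are assumptions made for illustration; the text above only states that the lead time in minutes is given as an input.

```python
def lead_times(horizon_minutes=24 * 60, step_minutes=2):
    """List every forecast lead time, in minutes, up to the horizon."""
    return list(range(step_minutes, horizon_minutes + 1, step_minutes))


def one_hot_lead_time(lead_minutes, horizon_minutes=24 * 60, step_minutes=2):
    """Encode a lead time as a one-hot vector (hypothetical encoding)."""
    if lead_minutes % step_minutes != 0 or not 0 < lead_minutes <= horizon_minutes:
        raise ValueError("lead time must be a positive multiple of the step within the horizon")
    size = horizon_minutes // step_minutes
    vector = [0.0] * size
    vector[lead_minutes // step_minutes - 1] = 1.0
    return vector


if __name__ == "__main__":
    print(len(lead_times()))                   # number of distinct lead times
    print(one_hot_lead_time(10).index(1.0))    # position of the 10 minute lead time
```

Under these assumptions, a single set of weights serves every lead time, and a dense 24 hour forecast is obtained by querying the same network once per lead time.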

MetNet-3 predicts a marginal multinomial probability distribution for each output variable and each location that provides rich information beyond just the mean. This allows us to compare the probabilistic outputs of MetNet-3 with the outputs of advanced probabilistic ensemble NWP models, including the ensemble forecast ENS from the European Centre for Medium-Range Weather Forecasts and the High Resolution Ensemble Forecast (HREF) from the National Oceanic and Atmospheric Administration of the US. Because the outputs of MetNet-3 and of these ensemble models are all probabilistic, we are able to compute scores such as the Continuous Ranked Probability Score (CRPS). The following graphics highlight densification results and illustrate that MetNet-3’s forecasts are not only of much higher resolution, but are also more accurate when evaluated at the overlapping lead times.

Top: MetNet-3’s forecast of wind speed for each 2 minutes over the future 24 hours with a spatial resolution of 4 km. Bottom: ENS’s hourly forecast with a spatial resolution of 18 km. The two distinct regimes in spatial structure are primarily driven by the presence of the Colorado mountain ranges. Darker corresponds to higher wind speed. More samples available here: 1, 2, 3, 4.
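To make the CRPS comparison mentioned above more concrete, here is a minimal sketch of how the score can be approximated for a forecast expressed as probabilities over ordered, equal-width bins, which is one way to read a multinomial output. The function name, the equal-width binning, and the example values are assumptions for illustration and are not taken from the MetNet-3 evaluation code.

```python
def crps_from_bins(probs, bin_width, observed_bin):
    """Approximate CRPS for a binned forecast (lower is better).

    probs        -- probabilities over ordered, equal-width bins (must sum to 1)
    bin_width    -- width of each bin in the variable's units (e.g. mm/h)
    observed_bin -- index of the bin that contains the observed value
    """
    if abs(sum(probs) - 1.0) > 1e-6:
        raise ValueError("probabilities must sum to 1")
    if not 0 <= observed_bin < len(probs):
        raise ValueError("observed_bin is out of range")

    score = 0.0
    forecast_cdf = 0.0
    for k, p in enumerate(probs):
        forecast_cdf += p
        # The observation's CDF is a step function: 0 below the observed bin, 1 from it onward.
        observed_cdf = 1.0 if k >= observed_bin else 0.0
        score += (forecast_cdf - observed_cdf) ** 2 * bin_width
    return score


if __name__ == "__main__":
    # A confident, correct forecast scores 0; a uniform forecast scores higher.
    print(crps_from_bins([0.0, 0.0, 1.0, 0.0], bin_width=1.0, observed_bin=2))
    print(crps_from_bins([0.25, 0.25, 0.25, 0.25], bin_width=1.0, observed_bin=2))
```

The same function can score an ensemble forecast once its members are histogrammed into the same bins, which is what makes a like-for-like comparison between a multinomial output and an ensemble possible.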

In contrast to weather station variables, precipitation estimates are more dense as they come from ground radar. MetNet-3’s modeling of precipitation is similar to that of MetNet-1 and 2, but extends the high resolution precipitation forecasts with a 1 km spatial granularity to the same 24 hours of lead time as the other variables, as shown in the animation below. MetNet-3’s performance on precipitation achieves a better CRPS value than ENS’s throughout the 24 hour range.

Case study for Thu Jan 17 2019 00:00 UTC showing the probability of instantaneous precipitation rate being above 1 mm/h on CONUS. Darker corresponds to a higher probability value. The maps also show the prediction threshold when optimized towards Critical Success Index CSI (dark blue contours). This specific case study shows the formation of a new large precipitation pattern in the central US; it is not just forecasting of existing patterns. Top: ENS’s hourly forecast. Center: Ground truth, source NOAA’s MRMS. Bottom: Probability map as predicted by MetNet-3. Native resolution available here.

Performance comparison between MetNet-3 and NWP baseline for instantaneous precipitation rate on CRPS (lower is better).

Delivering realtime ML forecasts

Training and evaluating a weather forecasting model like MetNet-3 on historical data is only a part of the process of delivering ML-powered forecasts to users. There are many considerations when developing a real-time ML system for weather forecasting, such as ingesting real-time input data from multiple distinct sources, running inference, implementing real-time validation of outputs, building insights from the rich output of the model that lead to an intuitive user experience, and serving the results at Google scale — all on a continuous cycle, refreshed every few minutes.

We developed such a real-time system that is capable of producing a precipitation forecast every few minutes for the entire contiguous United States and for 27 countries in Europe for a lead time of up to 12 hours.

Illustration of the process of generating precipitation forecasts using MetNet-3.

The system's uniqueness stems from its use of near-continuous inference, which allows the model to constantly create full forecasts based on incoming data streams. This mode of inference is different from traditional inference systems, and is necessary due to the distinct characteristics of the incoming data. The model takes in various data sources as input, such as radar, satellite, and numerical weather prediction assimilations. Each of these inputs has a different refresh frequency and spatial and temporal resolution. Some data sources, such as weather observations and radar, have characteristics similar to a continuous stream of data, while others, such as NWP assimilations, are similar to batches of data. The system is able to align all of these data sources spatially and temporally, allowing the model to create an updated understanding of the next 12 hours of precipitation at a very high cadence.

With the above process, the model is able to predict arbitrary discrete probability distributions. We developed novel techniques to transform this dense output space into user-friendly information that enables rich experiences throughout Google products and technologies.
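The temporal alignment of stream-like and batch-like inputs described in this section can be pictured with a small sketch: at each inference time, pick the most recent frame from every source that is not newer than that time. This is only an illustrative assumption about how such alignment might work; the source names, timestamps (in minutes), and function names are hypothetical and do not describe the production system.

```python
from bisect import bisect_right


def latest_at_or_before(timestamps, t):
    """Return the newest timestamp <= t from a sorted list, or None if there is none."""
    i = bisect_right(timestamps, t)
    return timestamps[i - 1] if i else None


def align_sources(sources, t):
    """For each named source, select the frame to use for an inference run at time t."""
    return {name: latest_at_or_before(ts, t) for name, ts in sources.items()}


if __name__ == "__main__":
    # Hypothetical refresh patterns: radar every 2 minutes, NWP assimilation every 6 hours.
    sources = {
        "radar": [0, 2, 4, 6],
        "nwp_assimilation": [0, 360],
    }
    print(align_sources(sources, t=5))
```

With frequent inference runs, the stream-like inputs change on almost every run while the batch-like inputs are reused until their next refresh arrives.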

Weather features in Google products

People around the world rely on Google every day to provide helpful, timely, and accurate information about the weather. This information is used for a variety of purposes, such as planning outdoor activities, packing for trips, and staying safe during severe weather events.

The state-of-the-art accuracy, high temporal and spatial resolution, and probabilistic nature of MetNet-3 make it possible to create unique hyperlocal weather insights. For the contiguous United States and Europe, MetNet-3 is operational and produces real-time 12 hour precipitation forecasts that are now served across Google products and technologies where weather is relevant, such as Search. The rich output from the model is synthesized into actionable information and instantly served to millions of users.

For example, a user who searches for weather information for a precise location from their mobile device will receive highly localized precipitation forecast data, including timeline graphs with granular minute breakdowns depending on the product.

MetNet-3 precipitation output in weather on the Google app on Android (left) and mobile web Search (right).

Conclusion

MetNet-3 is a new deep learning model for weather forecasting that outperforms state-of-the-art physics-based models for 24-hour forecasts of a core set of weather variables. It has the potential to create new possibilities for weather forecasting and to improve the safety and efficiency of many activities, such as transportation, agriculture, and energy production. MetNet-3 is operational and its forecasts are served across several Google products where weather is relevant.

Acknowledgements

Many people were involved in the development of this effort. We would like to especially thank those from Google DeepMind (Di Li, Jeremiah Harmsen, Lasse Espeholt, Marcin Andrychowicz, Zack Ontiveros), Google Research (Aaron Bell, Akib Uddin, Alex Merose, Carla Bromberg, Fred Zyda, Isalo Montacute, Jared Sisk, Jason Hickey, Luke Barrington, Mark Young, Maya Tohidi, Natalie Williams, Pramod Gupta, Shreya Agrawal, Thomas Turnbull, Tom Small, Tyler Russell), and Google Search (Agustin Pesciallo, Bill Myers, Danny Cheresnick, Lior Cohen, Maca Piombi, Maia Diamant, Max Kamenetsky, Maya Ekron, Mor Schlesinger, Neta Gefen-Doron, Nofar Peled Levi, Ofer Lehr, Or Hillel, Rotem Wertman, Vinay Ruelius Shah, Yechie Labai).

Source: Google AI Blog

Beta Channel Update for ChromeOS / ChromeOS Flex

The Beta channel is being updated to OS version: 15633.30.0 Browser version: 119.0.6045.104 for most ChromeOS devices.

If you find new issues, please let us know in one of the following ways:

- File a bug

- Visit our ChromeOS communities

- General: Chromebook Help Community

- Beta Specific: ChromeOS Beta Help Community

- Report an issue or send feedback on Chrome

Interested in switching channels? Find out how.

Daniel Gagnon

Google ChromeOS

Source: Google Chrome Releases

Extended Stable Channel Update for Desktop

The Extended Stable channel has been updated to 118.0.5993.129 for Windows and Mac, which will roll out over the coming days/weeks.

A full list of changes in this build is available in the log. Interested in switching release channels? Find out how here. If you find a new issue, please let us know by filing a bug. The community help forum is also a great place to reach out for help or learn about common issues.

Source: Google Chrome Releases

Open source and CI-driven RTL testing and verification for Caliptra’s RISC-V VeeR core

As part of CHIPS Alliance’s mission to enable a software-driven approach to silicon, Google, Antmicro and other CHIPS members have been developing and improving a growing number of open source tools to enable effective, CI-driven silicon development.

Fully reproducible and scalable workflows based on open source tooling are especially beneficial for efforts that span multiple industrial and academic actors. One such effort is Caliptra, a Root of Trust project driven by Google, AMD, NVIDIA and Microsoft, which joined CHIPS in order to host the ongoing development and provide the necessary structure, working environment and support for the reference implementation of the standard, originally hosted by Open Compute Project.

In this blog post, we describe Antmicro and Google’s collaborative effort focused on introducing a Continuous Integration (CI) based pipeline for code quality checks, code indexing, coverage and functional testing into the RISC-V VeeR core family, as used within the Caliptra project.

Caliptra and VeeR

VeeR (Very Efficient & Elegant RISC-V) is an open source production-grade RISC-V core family hosted by CHIPS Alliance and comes in three variants:

- EH1 - a high-performance single threaded RV32IMCZ core, the original implementation,

- EH2 - a dual-threaded successor to EH1, originally the world’s first dual-threaded commercial, embedded RISC-V core designed for IoT and AI systems,

- EL2 - a tiny and low-power RV32IMC (with partial support for Z extension) core, which is the variant used in the Caliptra project.

Caliptra’s hardware block structure with an EL2 VeeR CPU core includes the following elements:

As can be seen in the diagram, VeeR EL2 plays a central role in the implementation and, while it is a mature and well-tested technology, keeping both the core itself and its integration with Caliptra consistently tested is important.

Advanced code processing with Verible and Kythe

Many of Antmicro’s efforts focus on building not only the end products but also the scalable CI solutions for collaborative hardware development environments that power them. Caliptra’s need for a more open process ties in perfectly with Antmicro’s and all of CHIPS Alliance’s open source-based approach to tooling.

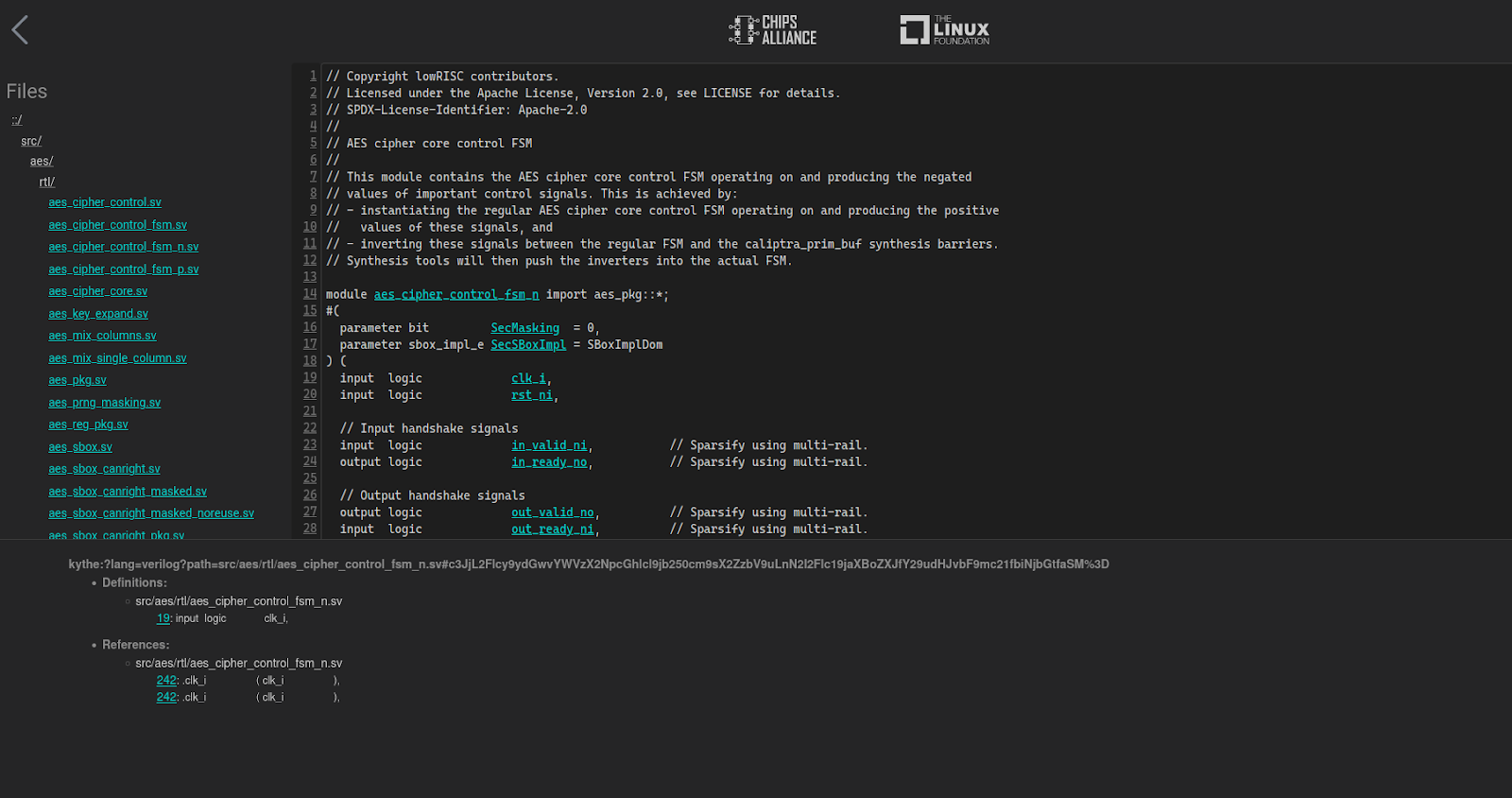

One of the core parts of this effort involved Verible, an open source SystemVerilog parser developed by Google in collaboration with Antmicro within CHIPS Alliance, offering a number of code processing functionalities, including linting, formatting, indexing and producing a Kythe schema. Verible comes with a Language Server Protocol implementation, which enables integration with popular text editors such as VS Code, Vim, Neovim, Emacs, Sublime, Kakoune and Kate, described in detail in a separate article on Antmicro's blog.

Antmicro’s work for Google around Caliptra involved adding the Verible formatter to the VeeR CI, which marks non-compliant formatting changes and uses the reviewdog bot to add comments in the Pull Request Discussion with suggested fixes. Furthermore, we added a Verible linting Action that helps developers maintain good coding practices by providing lint rules for continuous validation of the code, before it even reaches the compilation phase. Notably, the provided lint rules are flexible and can be adjusted based on the project's requirements, or even turned off completely through creation of a waiver file or by an inline directive.

Thanks to Verible’s ability to output a Kythe schema, besides linting and formatting code changes we can also provide an indexed overview of the entire codebase, viewable online. The Kythe Verible Indexer, using Verible Indexing Action, enables the user to select multiple repositories to create a set of indexed webpages. The source code is available in the Verible Indexer GitHub repo.

The workflow also checks if a newer revision is available for any of the defined repositories and, if needed, performs indexing. The indexed code browser webpages were deployed for Cores-VeeR-EL2 and Caliptra-rtl.

Putting riscv-dv to use

The riscv-dv framework is another tool hosted by CHIPS Alliance helping address the complexities of SoC design and verification. It is an SV/UVM based open source instruction generator for RISC-V processors, originally developed for Google’s own needs but currently in use by a wide array of organizations and companies working with verification of RISC-V cores.

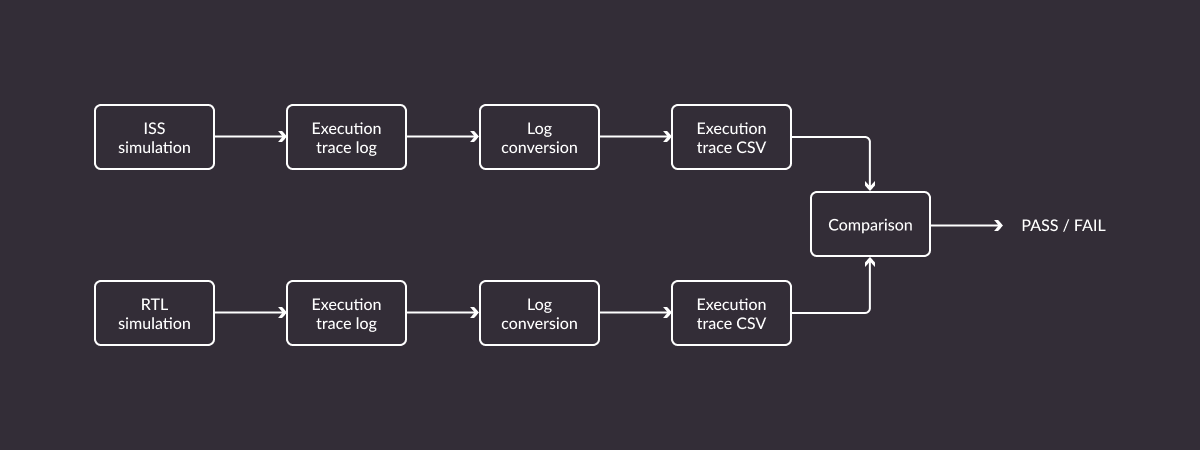

The riscv-dv framework generates random instruction chains to exercise certain core features. These instructions are then simultaneously executed by the core (through RTL simulation) and by a reference RISC-V ISS (instruction set simulator), for example Spike or Renode, Antmicro’s open source simulation framework.

Core states of both are then compared after each executed instruction and an error is reported in case of a mismatch.
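The per-instruction comparison described above can be sketched as follows. This is a simplified illustration and not the riscv-dv implementation: the `TraceEntry` fields and the `first_mismatch` helper are hypothetical names, and real traces carry more state than a single destination register.

```python
from typing import List, NamedTuple, Optional


class TraceEntry(NamedTuple):
    """One executed instruction and the architectural state it changed (hypothetical layout)."""
    pc: int
    instr: str
    rd: Optional[str]        # destination register name, if any
    rd_value: Optional[int]  # value written to the destination register


def first_mismatch(core_trace: List[TraceEntry], iss_trace: List[TraceEntry]) -> Optional[int]:
    """Return the index of the first differing instruction, or None if the traces agree."""
    for index, (core, reference) in enumerate(zip(core_trace, iss_trace)):
        if core != reference:
            return index
    if len(core_trace) != len(iss_trace):
        # One side executed more instructions than the other.
        return min(len(core_trace), len(iss_trace))
    return None


if __name__ == "__main__":
    reference = [
        TraceEntry(0x80000000, "addi a0, zero, 5", "a0", 5),
        TraceEntry(0x80000004, "add a1, a0, a0", "a1", 10),
    ]
    # Simulate an RTL bug that writes the wrong value in the second instruction.
    core = [reference[0], reference[1]._replace(rd_value=11)]
    print(first_mismatch(core, reference))
```

Reporting the first divergent instruction, rather than only the final state, points directly at the instruction where the core and the reference simulator first disagree.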

While working on the Caliptra project, Antmicro introduced the riscv-dv framework for testing the VeeR-EL2 core as well as a GH Actions CI flow which builds or downloads all the dependencies (Verilator, Spike, VeeR-ISS and Renode) and runs the tests. For the purpose of using riscv-dv with VeeR we had to write a VeeR-specific execution trace log parser. The task of this parser is to translate the log to the format understandable by the riscv-dv framework.

As an interesting detail, VeeR implements the division (div) and remainder (rem) instructions in such a way that it delegates the calculation to the division logic and proceeds with the execution of the program. Once the division logic finishes, the result is written back to the div/rem instruction result register. This flow takes into account the situation where an instruction following div/rem requires the div/rem result: in such cases the pipeline is stalled until the result is available. If an instruction following div/rem overwrites the result register before the division logic finishes, the division operation is canceled.

To handle the first case, where the division result becomes available only after a few other instructions have been executed, we’ve developed a lazy parsing method for the VeeR trace log that catches the result register update even if it is not immediate. The second case, cancellation of the division calculation, has been handled by adding a code post-processing script. It detects situations where a cancellation would happen and prevents them by injecting a number of NOP instructions (allowing the division logic to finish).
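A post-processing pass of the kind described for the cancellation case could look roughly like the sketch below. It is not the actual script: the instruction representation, the `inject_nops` name, and the `latency` parameter (the number of instruction slots the division is assumed to need) are all hypothetical, and the real latency of the VeeR divider is not stated here.

```python
DIV_OPS = {"div", "divu", "rem", "remu"}
NOP = ("nop", None, ())


def inject_nops(program, latency):
    """Insert NOPs after div/rem instructions whose result would otherwise be overwritten.

    program -- list of (mnemonic, destination_register, source_registers) tuples
    latency -- assumed number of following instruction slots the division needs (hypothetical)
    """
    output = []
    for index, (op, rd, sources) in enumerate(program):
        output.append((op, rd, sources))
        if op not in DIV_OPS:
            continue
        window = program[index + 1:index + 1 + latency]
        for distance, (_, next_rd, next_sources) in enumerate(window, start=1):
            if rd in next_sources:
                # A read of the result stalls the pipeline, so the division completes.
                break
            if next_rd == rd:
                # An overwrite this early would cancel the division: push it past the window.
                output.extend([NOP] * (latency - distance + 1))
                break
    return output


if __name__ == "__main__":
    program = [
        ("div", "a0", ("a1", "a2")),
        ("addi", "a0", ("zero",)),   # overwrites a0 right after the div
        ("add", "a3", ("a0", "a1")),
    ]
    for instruction in inject_nops(program, latency=3):
        print(instruction)
```

The pass leaves sequences untouched when the result is read before it is overwritten, since the stall described above already guarantees that the division finishes.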

Custom GitHub Actions runners for greater scalability and more flexibility to mix tools

Much like a large part of the industry, the Caliptra project uses Universal Verification Methodology (UVM) as its verification methodology. While Antmicro’s ongoing work on enabling fully open source UVM support in Verilator should ultimately enable completely open source verification, today UVM testbenches or tools like RISC-V DV cannot be run using open source tools only.

Fortunately, this problem already has a solution, also developed within the CHIPS Alliance - custom GitHub Actions Runners that are already in use by a large number of CHIPS projects.

A custom runner setup, currently in development for Caliptra, allows mixing and matching open and closed source tools for CI testing purposes, exposing only the results (such as pass/fail or coverage) with fine-grained control.

What is more, given that RTL design testing and verification of RISC-V based cores and SoCs often require long, memory-consuming and computationally demanding simulations, the custom runners will play another very important role in Caliptra. While GitHub is the obvious choice for hosting the reference RTL, the processing power and throughput of the CI machines available in GitHub Actions are simply not enough to cover the needs of simulation of complex designs, especially in a highly dynamic, collaborative environment with lots of CI angles.

In order to enable public-facing yet secure CI, and improve the flexibility and scalability of Caliptra’s/VeeR’s pipelines, the custom runners will be deployed for the respective repositories. This setup will enable us to precisely select machines to be used for specific workloads (i.e. the architecture, virtual CPU count, memory size and disk space) but also to use tools stored on an external cloud disk that can be attached to a virtual machine running the job workload.

Seeding other verification methods

The Caliptra SoC is meant as a macro for use in a variety of chip designs, big and small. Various teams adopting Caliptra/VeeR as their Root of Trust solution will need to plug it into a larger ecosystem of tools used in their organization (of course hopefully using Caliptra as a good reference and role model).

As part of the project, on top of the original Caliptra test suite we implemented more specific tests around the VeeR integration in cocotb, a co-simulation testbench library that enables connecting Python coroutines with your HDL simulator of choice. We prepared a cocotb testbench that is able to not only run programs from the generator, but also apply dedicated stimuli and monitor the results in a Python coroutine.

Furthermore, for projects that prefer a more UVM-like testing methodology but need an open source option today, we also provide some example tests using pyuvm, a Pythonic library that mirrors the industry accepted SystemVerilog implementation. We have implemented a minimal UVM Agent for the programmable interrupt controller of the VeeR-EL2 Core, which will be used to verify handling of the interrupt service routines triggered by external or local-to-core timer interrupts. The verification environment is expected to grow as more test cases are added, covering the DMA controller, close coupled memory buses or the debug interface.

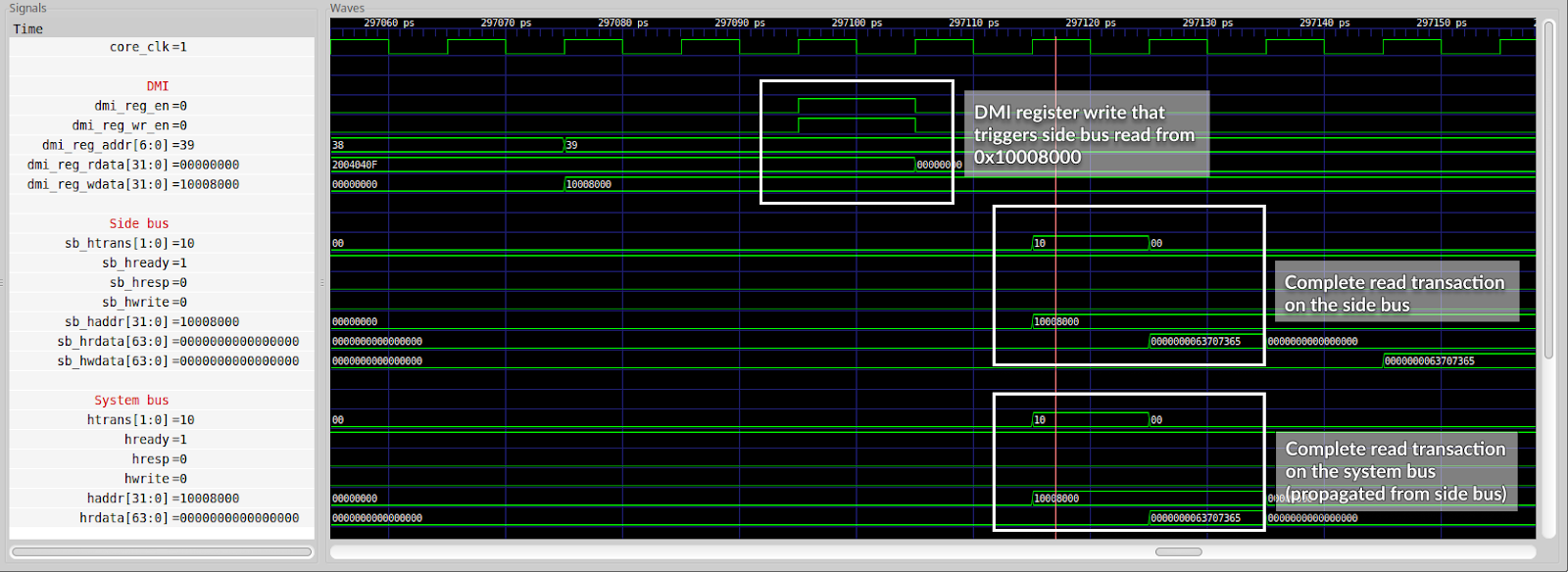

For system level tests we decided to connect to an interactive simulation of the complete design via JTAG with commonly used tools: Open On-Chip Debugger OpenOCD and the GNU Project Debugger (GDB). The simulation exposes a virtual JTAG port, which is used to establish a connection with OpenOCD. Then the OpenOCD instance connects to the GNU debugger. Finally, test scripts are run in GDB, which verify core registers content, memory access and peripheral accesses.

With this testing methodology we exposed an actual problem in the design which prevented accessing system peripherals via JTAG. As it turned out, the issue was caused by the side AHB bus of the debug core being disconnected.

Once a connection of the side bus had been made it became possible to access all the peripherals. A 2-to-1 AHB multiplexer was used to join the system and side AHB master ports and forward requests to the peripherals.

To verify the effectiveness of all kinds of tests, both ISS and RTL level, and help ensure that all design states are properly tested, we use coverage analysis. While open source tools and frameworks have some support for gathering and presenting these metrics, e.g. Verilator supports line, toggle and functional coverage, some additional work needs to be done to integrate all of those and present them in a comprehensive, visual form, which will be part of our future efforts.

Transparent and open source-driven hardware ecosystem

In addition to the efforts described in this article, there are other interesting developments within CHIPS Alliance, including improving Verilator to better handle large designs and verification tasks, which are helping bring more open source-driven development and verification solutions to the Caliptra project and the entire open hardware ecosystem.

To learn more about the Caliptra project, watch a recording from a joint talk by Google and Antmicro given at this year’s RISC-V Summit Europe. You can also join the contributors at the upcoming 2023 OCP Global Summit for several talks about the latest developments in the project and future plans.

If you are interested in the work of CHIPS Alliance, keep an eye out for updates during their next Technology Update coinciding with the RISC-V Summit this Fall.

By Michael Gielda – Antmicro