Posted by the Flutter Team at Google

This week at Google I/O, we're announcing the third beta release of Flutter, our mobile app SDK for creating high-quality, native user experiences on iOS and Android. We're also showcasing new tooling partners, highlighting usage of Flutter by several high-profile customers, and announcing official support from the Material team.

We believe mobile development needs an upgrade. All too often, developers are forced to compromise between quality and productivity: either building the same application twice on both iOS and Android, or settling for a cross-platform solution that makes it hard to deliver the native experience that customers demand. This is why we built Flutter: to offer a new path for mobile development, focused foremost on native performance, advanced visuals, and dramatically improving developer velocity and productivity.

Just twelve months ago at Google I/O 2017, we announced Flutter and delivered an early alpha of the toolkit. Over the last year, we've invested tens of thousands of engineering hours preparing Flutter for production use. We've rewritten major parts of the engine for performance; added support for developing on Windows; published tooling for Android Studio and Visual Studio Code; integrated Dart 2; added support for more Firebase APIs, inline video, ads and charts, internationalization, and accessibility; addressed thousands of bugs; and published hundreds of pages of documentation. It's been a busy year and we're thrilled to share the latest beta release with you!

Flutter offers:

- High-velocity development with features like stateful hot reload, which helps you quickly and easily experiment with your application without having to rebuild from scratch.

- Expressive and flexible designs with a layered, extensible architecture of rich, composable, customizable UI widget sets and animation libraries that enables designers' dreams to come to life.

- High-quality experiences across devices and platforms with our portable, GPU-accelerated renderer and ahead-of-time compilation to lightning-fast machine code.

Empowering Developers and Designers

As evidence of the power that Flutter can offer applications, 2Dimensions are this week releasing a preview of a new tool for creating powerful interactive animations with Flutter. Here's an example of the output of their software:

What you are seeing here is Flutter rendering 2D skeletal mesh animations on the phone in real time. This level of graphical horsepower is possible thanks to Flutter's use of the hardware-accelerated Skia engine, which draws every pixel to the screen, paired with the blazingly fast ahead-of-time compiled Dart language. But it gets better: note how the demo slider widget is translucently overlaid on the animation. Flutter seamlessly combines user interface widgets with 60fps animated graphics generated in real time, with the same code running on iOS and Android.

Here's what Luigi Rosso, co-founder of 2Dimensions, says about Flutter:

"I love the friction-free iteration with Flutter. Hot Reload sets me in a feedback loop that keeps me focused and in tune with my work. One of my biggest productivity inhibitors are tools that run slower than the developer. Flutter finally resets that bar."

One common challenge for mobile application creators is the transition from early design sketches to an interactive prototype that can be piloted or tested with customers. This week at Google I/O, Infragistics, one of the largest providers of developer tooling and components, are announcing their commitment to Flutter and demonstrating how they've set out to close the designer/developer gap even further with supportive tooling. Indigo Design to Code Studio enables designers to add interactivity to a Sketch design, and generate a pixel-perfect Flutter application.

Customer Adoption

We launched Flutter Beta 1 just ten weeks ago at Mobile World Congress, and it is exciting to see the momentum since then, both on GitHub and in the number of published Flutter applications. Even though we're still building out Flutter, we're pleasantly surprised to see strong early adoption of the SDK, with some high-profile customer examples already published. One of the most popular is the companion app to the award-winning Hamilton Broadway musical, built by Posse Digital, with millions of monthly users, and an average rating of 4.6 on the Play Store.

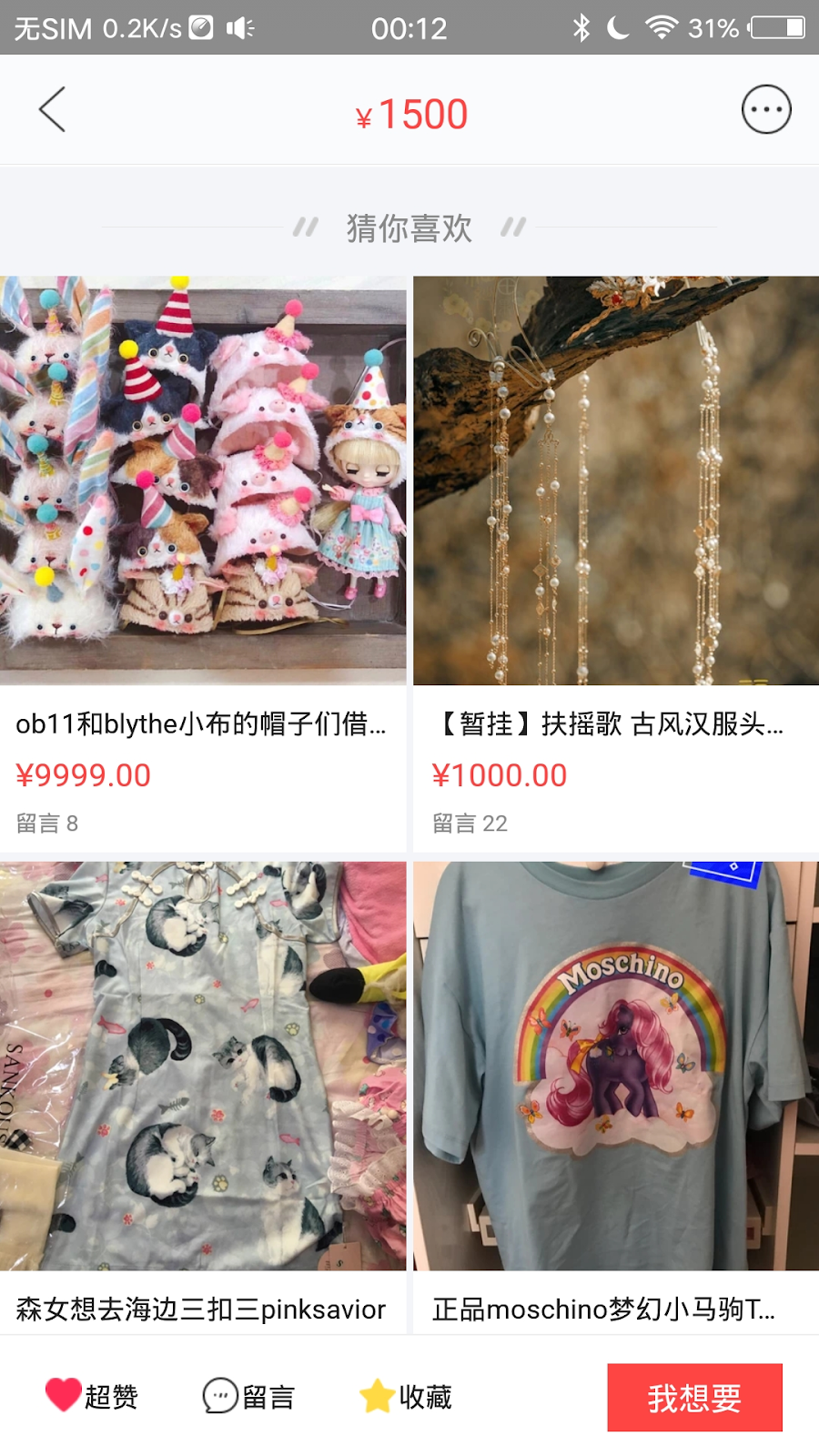

This week, Alibaba is announcing their adoption of Flutter for Xianyu, one of their flagship applications with over twenty million monthly active users. Alibaba praises Flutter for its consistency across platforms, the ease of generating UI code from designer redlines, and the ease with which their native developers have learned Flutter. They are currently rolling out this updated version to their customers.

Another company now using Flutter is Groupon, which is prototyping and building new code for its merchant application. Here's what they say about using it:

"I love the fact that Flutter integrates with our existing app and our team has to write code just once to provide a native experience for both our apps. This significantly reduces our time to market and helps us deliver more features to our customers." Varun Menghani, Head of Merchant Product Management, Groupon

In the short time since the Beta 1 launch, we've seen hundreds of Flutter apps published to the app stores, across a wide variety of application categories. Here are a few examples of the diversity of apps being created with Flutter:

- Abbey Road Studios are previewing Topline, a new version of their music production app.

- AppTree provides a low-code enterprise app platform for brands like McDonald's, Stanford, Wayfair & Fermilab.

- Birch Finance lets you manage and optimize your existing credit cards.

- Coach Yourself offers mindfulness and cognitive-behavioral training.

- OfflinePal collects nearby activities in one place, from concerts and theaters, to mountain hiking and tourist attractions.

Closer to home, Google continues to use Flutter extensively. One new example announced at I/O comes from Google Ads, who are previewing their new Flutter-based AdWords app that allows businesses to track and optimize their online advertising campaigns. Sridhar Ramaswamy, SVP for Ads and Commerce, says:

"Flutter provides a modern reactive framework that enabled us to unify the codebase and teams for our Android and iOS applications. It's allowed the team to be much more productive, while still delivering a native application experience to both platforms. Stateful hot reload has been a game changer for productivity."

New in Flutter Beta 3

Flutter Beta 3, shipping today at I/O, keeps us on the glide path toward our eventual 1.0 release with new features that complete core scenarios. Dart 2, our reboot of the Dart language with a focus on client development, is now fully enabled with a terser syntax for building Flutter UIs. Beta 3 is world-ready with localization support including right-to-left languages, and also provides significantly improved support for building highly accessible applications. New tooling provides a powerful widget inspector that makes it easier to see the visual tree for your UI and preview how widgets will look during development. We have emerging support for integrating ads through Firebase. And Visual Studio Code is now fully supported as a first-class development tool, with a dedicated Flutter extension.

The Material Design team has worked with us extensively since the start. We're happy to announce that as of today, Flutter is a first-class toolkit for Material, which means the Material and Flutter teams will partner to deliver even more support for Material Design. Of course, you can continue to use Flutter to build apps with a wide range of design aesthetics to express your brand.

More information about the new features in Flutter Beta 3 can be found at the Flutter blog on Medium. If you already have Flutter installed, just one command -- flutter upgrade -- gets you on the latest build. Otherwise, you can follow our getting started guide to install Flutter on macOS, Windows or Linux.

Roadmap to Release

Flutter has long been used in production at Google and by the public, even though we haven't yet released "1.0." We're approaching our 1.0 quality bar, and in the coming months you'll see us focus on some specific areas:

- Performance and size. We'll work on improving both the speed and consistency of Flutter's performance, and offer additional tools and documentation for diagnosing potential issues. We'll also reduce the minimum size of a Flutter application.

- Compatibility. We are continuing to grow our support for a broad range of device types, including older 32-bit devices, and to expand our set of out-of-the-box iOS widgets. We're also working to make it easier to add Flutter to your existing Android or iOS codebase.

- Ecosystem. In partnership with the broader community, we continue to build out an ecosystem of packages that make it easy to integrate with the broad set of platform APIs and SDKs.

As with every software project, the trade-offs are between time, quality and features. We are targeting a 1.0 release within the next year, but we will continue to adjust the schedule as necessary. As we're an open source project, our open issues are public and work scheduled for upcoming milestones can be viewed on our GitHub repo at any time. We welcome your help along this journey to make mobile development amazing.

Whether you're at Google I/O in person or watching remotely, we have plenty of technical content to help you get up and running. In particular, we have numerous sessions on Flutter and Material Design, as well as a new series of Flutter codelabs and a Udacity course that is now open for registration.

Since last year, we've been on a great journey together with a community of early adopters. We get an electric feeling when we see the range of apps, experiments, plug-ins, and supporting tools that developers are starting to produce using Flutter, and we're only just getting started. Now is a great time to join us. Connect with us through the website at https://flutter.io, via Twitter at @flutterio, and in our Google group and Gitter chat room. We're excited to see what you build!

Posted by Christina Chiou Yeh, Google Registry