Posted by Payam Shodjai, Director, Product Management, Google Assistant

With 2020 coming to a close, we wanted to reflect on everything we have launched this year to help you, our developers and partners, create powerful voice experiences with Google Assistant.

Today, many top brands and developers turn to Google Assistant to help users get things done on their phones and on Smart Displays. Over the last year, the number of Actions built by third-party developers has more than doubled. Below is a snapshot of some of our partners who’ve integrated with Google Assistant:

2020 Highlights

Below are a few highlights of what we have launched in 2020:

1. Integrate your Android mobile apps with Google Assistant

App Actions let your users jump right into existing functionality in your Android app with the help of Google Assistant, making it easier for them to find what they're looking for in your app naturally, using their voice. Because we take care of all the Natural Language Understanding (NLU) processing, you can build an integration in only a few days. In 2020, we announced that App Actions are now available for all Android developers to voicify their apps and integrate with Google Assistant.

For common tasks such as opening your apps, opening specific pages in your apps, or searching within apps, we introduced Common Intents. For a deeper integration, we’ve expanded our vertical-specific built-in intents (BIIs) to cover more than 60 intents across 10 verticals, adding new categories such as Social, Games, Travel & Local, Productivity, Shopping, and Communications.

When there isn't a built-in intent for your app's functionality, you can instead create custom intents that are unique to your Android app. Like BIIs, custom intents follow the actions.xml schema and act as connection points between Assistant and the fulfillments you define.
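To make the "connection point" idea more concrete: as we understand it, an actions.xml fulfillment is typically declared with a URL template, and Assistant fills that template's variables from the intent's parameters to produce a deep link into your app. The Python below is a minimal sketch of that expansion step, assuming RFC 6570-style templates and supporting only the simple `{var}` and form-style `{?a,b}` forms. The function name and the `myapp://` URLs are hypothetical, and this is not Assistant's own implementation.

```python
import re
from urllib.parse import quote

# Matches "{name}" (simple expansion) and "{?a,b}" (form-style query expansion).
_EXPR = re.compile(r"\{(\??)([A-Za-z0-9_.,]+)\}")


def expand_url_template(template: str, params: dict) -> str:
    """Expand a small subset of RFC 6570 URI templates (hypothetical helper).

    Only "{var}" and "{?var1,var2}" are supported. Variables missing from
    `params` are skipped, as RFC 6570 specifies for undefined values.
    """

    def replace(match: re.Match) -> str:
        operator = match.group(1)
        names = match.group(2).split(",")
        # Keep only the variables the (hypothetical) intent actually supplied.
        defined = [(name, params[name]) for name in names if name in params]
        if operator == "?":
            if not defined:
                return ""
            # Percent-encode everything except RFC 3986 unreserved characters.
            pairs = [f"{name}={quote(value, safe='')}" for name, value in defined]
            return "?" + "&".join(pairs)
        # Simple expansion: comma-join the encoded values.
        return ",".join(quote(value, safe="") for _, value in defined)

    return _EXPR.sub(replace, template)


# Hypothetical deep links for an app with a search page and an order page.
print(expand_url_template("myapp://search{?q}", {"q": "running shoes"}))
# prints myapp://search?q=running%20shoes
print(expand_url_template("myapp://order/{id}", {"id": "A/1"}))
# prints myapp://order/A%2F1
```

Encoding every value keeps user-spoken text, such as a search query containing spaces or slashes, from breaking the structure of the deep link your app receives.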

Learn more about how to integrate your app with Google Assistant here.

2. Create new experiences for Smart Displays

We also announced new developer tools to help you build high-quality, engaging experiences that reach users at home on Smart Displays.

Actions Builder is a new web-based IDE that provides a graphical interface showing the entire conversation flow. It lets you manage Natural Language Understanding (NLU) training data and provides advanced debugging tools. It is also fully integrated into the Actions Console, so you can now build, debug, test, release, and analyze your Actions all in one place.

The Actions SDK provides a file-based representation of your Action and lets you use a local IDE. The SDK not only enables local authoring of NLU and conversation schemas, but also allows bulk import and export of training data to improve conversation quality. The Actions SDK is accompanied by a command-line interface, so you can build and manage an Action entirely in code using your favorite source control and continuous integration tools.

Interactive Canvas lets you add visual, immersive experiences to Conversational Actions. Earlier this year, we announced the expansion of Interactive Canvas to support the Storytelling and Education verticals.

Continuous Match Mode lets Assistant respond immediately to a user’s speech by recognizing words and phrases that you define, creating more fluid experiences.
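Continuous Match Mode itself runs inside Assistant. The Python below is only a hypothetical sketch of the general idea: check each partial speech-recognition result against a developer-defined phrase list, and respond as soon as one matches instead of waiting for the end of the utterance. The function names, the whole-word matching rule, and the list standing in for a stream of partial transcripts are all our assumptions, not the Assistant API.

```python
import re
from typing import Iterable, List, Optional


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    return " ".join(re.findall(r"\w+", text.lower()))


def first_match(partial_transcripts: Iterable[str], phrases: List[str]) -> Optional[str]:
    """Return the first developer-defined phrase heard in a stream of partial results.

    Each item in `partial_transcripts` is the recognizer's running hypothesis
    so far. We return as soon as any phrase appears as whole words, instead of
    waiting for the user to finish speaking. Returns None if nothing matched.
    """
    targets = [(phrase, normalize(phrase)) for phrase in phrases]
    for partial in partial_transcripts:
        # Pad with spaces so "red" does not match inside "reddish".
        padded = f" {normalize(partial)} "
        for original, target in targets:
            if target and f" {target} " in padded:
                return original
    return None


# Hypothetical game: the player picks a door by saying its color.
stream = ["I think", "I think the red", "I think the red door, please"]
print(first_match(stream, ["blue door", "red door"]))  # prints red door
```

Restricting matches to a short, known phrase list is what makes an immediate response safe here: the sketch never has to guess at open-ended input before the user has finished speaking.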

We also created a central hub for you to find resources to build games on Smart Displays. This site is filled with a game design playbook, interviews with game creators, code samples, tools access, and everything you need to create awesome games for Smart Displays.

The Actions API provides a new programmatic way to test your critical user journeys more thoroughly and effectively, helping you ensure your Action's conversations run smoothly.
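One way to organize such tests is to script each critical user journey as a sequence of user queries, each paired with text you expect in the Action's response. The Python below is a minimal sketch that assumes a hypothetical `send_query` callable; in practice it would wrap whatever client you use to call the Actions API, but here a stub Action stands in so the example runs on its own. The `Turn`, `run_journey`, and `fake_action` names are ours, not part of the Actions API.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Turn:
    """One step of a critical user journey: what the user says, and text we expect back."""
    query: str
    expected_substring: str


def run_journey(send_query: Callable[[str], str], turns: List[Turn]) -> List[str]:
    """Replay a scripted conversation and describe every turn that failed.

    `send_query` is a hypothetical stand-in for a client that sends one user
    query to your Action and returns the Action's text response.
    """
    failures = []
    for number, turn in enumerate(turns, start=1):
        response = send_query(turn.query)
        if turn.expected_substring.lower() not in response.lower():
            failures.append(f"turn {number}: {turn.query!r} -> {response!r}")
    return failures


def fake_action(query: str) -> str:
    """Hypothetical stateless stub Action, used only to exercise the harness."""
    text = query.lower()
    if "start" in text:
        return "Welcome! Which level would you like?"
    if "level" in text:
        return "Starting level one."
    return "Sorry, I didn't get that."


journey = [
    Turn("Talk to my test app and start", "welcome"),
    Turn("Level one", "starting level"),
]
print(run_journey(fake_action, journey))  # prints []
```

The case-insensitive substring check is deliberately loose, so small wording changes in responses do not break every test. Running journeys like this in continuous integration is one way to catch conversation regressions early.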

The Dialogflow migration tool inside the Actions Console automates much of the work to move projects to the new and improved Actions Builder tool.

We also worked with partners such as Voiceflow and Jovo to launch integrations that support voice application development on Assistant. This effort is part of our commitment to let you use your favorite development tools while building for Google Assistant.

We launched several other new features that help you build high-quality experiences for the home, such as the Media APIs, new and improved voices (available in the Actions Console), and the home storage API.

Get started building for Smart Displays here.

3. Discovery features

Once you build high-quality Actions, you are ready for your users to discover them. We have designed new touch points to help your users easily learn about your Actions.

For example, on Android mobile, we’ll recommend relevant App Actions by showing suggestions, even when the user doesn't explicitly mention the app’s name. Google Assistant will also suggest apps proactively, based on individual app usage patterns. Android mobile users will also be able to customize their experience with app shortcuts, setting up quick phrases that trigger the app functions they use most often to automate their most common tasks. By simply saying "Hey Google, shortcuts", they can set up and explore suggested shortcuts in the settings screen. We’ll also make proactive shortcut suggestions throughout Google Assistant’s mobile experience, tailored to how people use their phones.

Assistant Links deep link to your Conversational Actions, bringing rich Google Assistant experiences to your websites so you can send your users directly to your Actions from anywhere on the web.

We also recently opened two new built-in intents (BIIs) for public registration: Education and Storytelling. Registering your Actions for these intents allows your users to discover them in a simple, natural way through general requests to Google Assistant on Smart Displays. People will then be able to say "Hey Google, teach me something new" and they will be presented with a browsable selection of different education experiences. For stories, users can simply say "Hey Google, tell me a story".

We know you build personalized and premium experiences for your users and need to make it easy for them to connect their accounts to your Actions. To streamline this process, we opened two betas for improved account linking flows that allow simple, streamlined authentication via apps.

- Link with Google lets users who are already signed in to your Android or iOS app complete the linking flow with just a few clicks, without needing to re-enter credentials.

- App Flip helps you build a better mobile account linking experience, so your users can seamlessly link their accounts to Google without having to re-enter their credentials.

What to expect in 2021

Looking ahead, we will double down on enabling you, our developers and partners, to build great experiences for Google Assistant and to help you reach your users on the go and at home. You can expect to hear more from us about how we are improving the Google Assistant experience to make it easy for Android developers to integrate their apps with Google Assistant, and about how we will help developers succeed through discovery and monetization.

We are excited to see what you will build with these new features and tools. Thank you for being a part of the Google Assistant ecosystem. We can’t wait to launch even more features and tools for Android developers and Smart Display experiences in 2021.

Want to stay in the know with announcements from the Google Assistant team? Sign up for our monthly developer newsletter here.

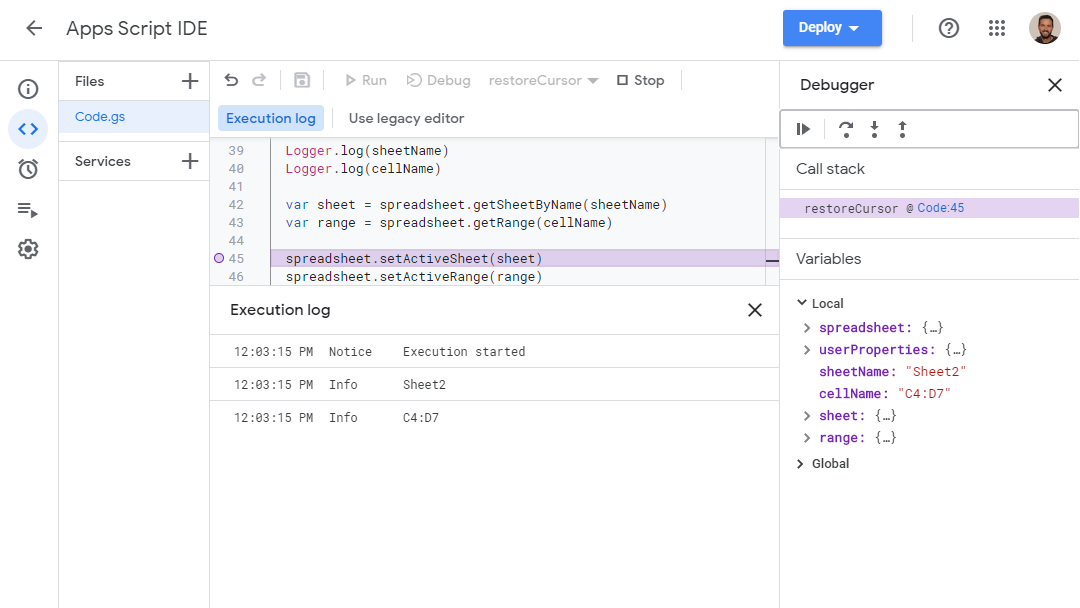

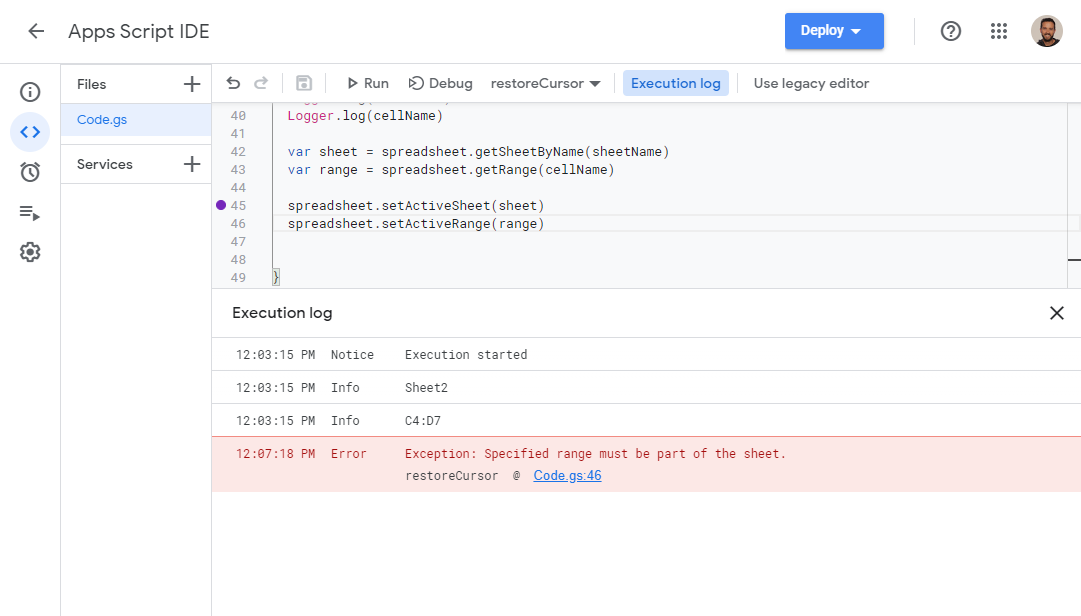

Posted by Charles Maxson, Developer Advocate, Google Cloud

Posted by Kenny Sulaimon, Product Manager, ML Kit, Tory Voight, Product Manager, ML Kit, Daniel Furlong, Lei Yu, Software Engineers, ML Kit, Dong Chen, Technical Lead, MLKit