Flutter is Google's new mobile app toolkit for crafting beautiful native interfaces on iOS and Android in record time. Today, during the keynote of Google Developer Days in Shanghai, we are announcing Flutter Release Preview 2: our last major milestone before Flutter 1.0.

This release continues the work of completing core scenarios and improving quality that began with our initial beta release in February and carried through to our first Release Preview earlier this summer. The team is now fully focused on completing our 1.0 release.

What's New in Release Preview 2

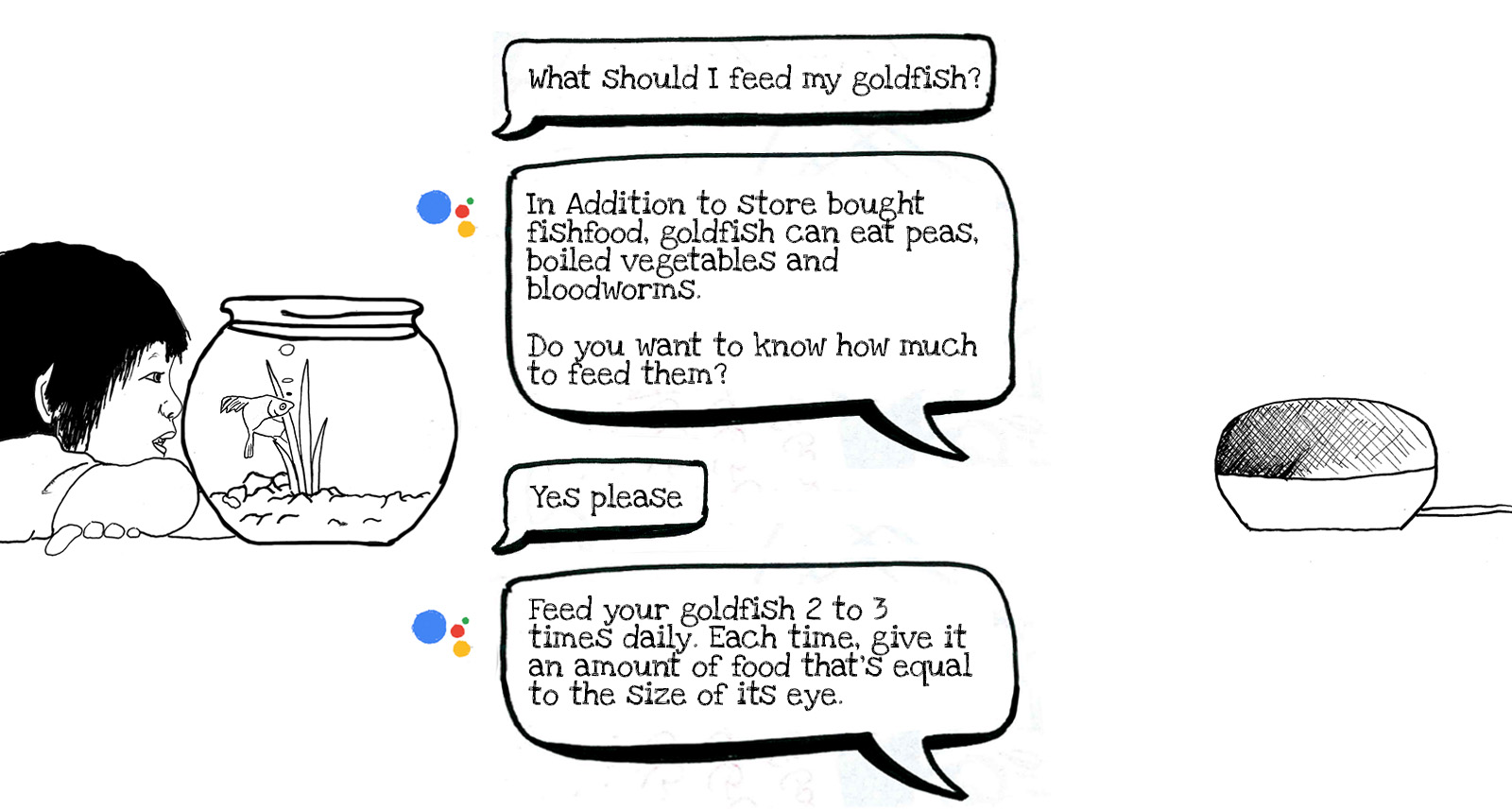

The theme for this release is pixel-perfect iOS apps. While we designed Flutter with highly brand-driven, tailored experiences in mind, we heard feedback from some of you who wanted to build applications that closely follow the Apple interface guidelines. So in this release we've greatly expanded our support for the "Cupertino" themed controls in Flutter, with an extensive library of widgets and classes that make it easier than ever to build with iOS in mind.

A reproduction of the iOS Settings home page, built with Flutter

Here are a few of the new iOS-themed widgets added in Flutter Release Preview 2:

- CupertinoApp: a top-level widget for creating iOS-styled apps;
- CupertinoTimerPicker for a countdown timer picker;
- CupertinoSegmentedControl for horizontal segmented controls;
- CupertinoActionSheet for iOS-styled, bottom pop-up sheets.
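To give a feel for how these widgets fit together, here is a minimal sketch rather than an official sample. It assumes a recent, null-safe Flutter SDK (so the syntax is newer than what shipped with this release), and the class names DemoApp and DemoPage are hypothetical, chosen only for illustration.

```dart
import 'package:flutter/cupertino.dart';

void main() => runApp(const DemoApp());

/// Hypothetical demo app; the name is illustrative only.
class DemoApp extends StatelessWidget {
  const DemoApp({super.key});

  @override
  Widget build(BuildContext context) {
    // CupertinoApp provides iOS-styled navigation and text defaults.
    return const CupertinoApp(home: DemoPage());
  }
}

class DemoPage extends StatefulWidget {
  const DemoPage({super.key});

  @override
  State<DemoPage> createState() => _DemoPageState();
}

class _DemoPageState extends State<DemoPage> {
  int _selected = 0;

  // Shows an iOS-styled sheet that slides up from the bottom.
  void _showSheet() {
    showCupertinoModalPopup<void>(
      context: context,
      builder: (BuildContext sheetContext) => CupertinoActionSheet(
        title: const Text('Options'),
        actions: <Widget>[
          CupertinoActionSheetAction(
            onPressed: () => Navigator.pop(sheetContext),
            child: const Text('Share'),
          ),
        ],
        cancelButton: CupertinoActionSheetAction(
          onPressed: () => Navigator.pop(sheetContext),
          child: const Text('Cancel'),
        ),
      ),
    );
  }

  @override
  Widget build(BuildContext context) {
    return CupertinoPageScaffold(
      navigationBar: const CupertinoNavigationBar(middle: Text('Demo')),
      child: SafeArea(
        child: Column(
          children: <Widget>[
            // Horizontal segmented control keyed by int values.
            CupertinoSegmentedControl<int>(
              groupValue: _selected,
              children: const <int, Widget>{
                0: Text('First'),
                1: Text('Second'),
              },
              onValueChanged: (int value) =>
                  setState(() => _selected = value),
            ),
            CupertinoButton(
              onPressed: _showSheet,
              child: const Text('Show action sheet'),
            ),
          ],
        ),
      ),
    );
  }
}
```

Note that the sketch imports only package:flutter/cupertino.dart, so no Material widgets are involved.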

And more have been updated, too:

- CupertinoNavigationBar and CupertinoSliverNavigationBar
  - Perform parallax transitions when navigating between pages.
  - Auto-populate their title and back button labels based on CupertinoPageRoute.title.
- CupertinoPageScaffold
  - Inset content when the keyboard displays.
- CupertinoScrollbar
  - Improved visual fidelity during overscrolls.
- CupertinoPicker
  - Adds support for infinite scrolling and looped scrolling.
  - Adds multicolumn support for off-axis cylindrical projection.
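Two of these updates are easy to try out. The sketch below assumes a recent, null-safe Flutter SDK; the helper names openDetails and buildLoopingPicker are hypothetical. It pushes a CupertinoPageRoute with a title, which a navigation bar without an explicit middle widget picks up, and builds a CupertinoPicker with looping enabled.

```dart
import 'package:flutter/cupertino.dart';

/// Hypothetical helper: pushes a page whose navigation bar title
/// comes from the route's `title` instead of a `middle` widget.
void openDetails(BuildContext context) {
  Navigator.of(context).push(
    CupertinoPageRoute<void>(
      title: 'Details',
      builder: (BuildContext context) => const CupertinoPageScaffold(
        // No `middle` is given, so the bar falls back to the route title.
        navigationBar: CupertinoNavigationBar(),
        child: Center(child: Text('Details page')),
      ),
    ),
  );
}

/// Hypothetical helper: a picker whose items wrap around when scrolled.
Widget buildLoopingPicker() {
  return CupertinoPicker(
    itemExtent: 32.0,
    looping: true,
    onSelectedItemChanged: (int index) {
      // React to the newly selected index here.
    },
    children: List<Widget>.generate(10, (int i) => Text('Item $i')),
  );
}
```

If the page that calls openDetails was itself pushed with a titled CupertinoPageRoute, the back button on the details page should pick up that previous title as its label.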

As ever, the Flutter documentation is the place to go for detailed information on the Cupertino* classes. (Note that at the time of writing, we were still working to add some of these new Cupertino widgets to the visual widget catalog.)

We've also made progress on completing other scenarios. Under the hood, we've added support for executing Dart code in the background, even while the application is suspended. Plugin authors can take advantage of this to create new plugins that execute code when an event is triggered, such as the firing of a timer or the receipt of a location update. For a more detailed introduction, read this Medium article, which demonstrates how to use background execution to create a geofencing plugin.
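As a rough illustration of the Dart side only, the sketch below uses PluginUtilities from dart:ui to turn a callback into a raw handle that native plugin code could store, and to look the callback up again later. The function names are hypothetical, the native platform code that starts a background isolate is omitted entirely, and the syntax assumes a recent, null-safe SDK.

```dart
import 'dart:ui';

/// Hypothetical callback to run in the background. It must be a
/// top-level or static function so that a handle can be obtained.
@pragma('vm:entry-point') // Keeps the function reachable in release builds.
void onBackgroundEvent() {
  print('Event received in the background');
}

/// Returns a raw integer handle that native plugin code could persist,
/// or null if no handle is available for the callback.
int? registerCallback() {
  final CallbackHandle? handle =
      PluginUtilities.getCallbackHandle(onBackgroundEvent);
  return handle?.toRawHandle();
}

/// Resolves a previously stored raw handle back to the Dart function.
Function? lookupCallback(int rawHandle) {
  return PluginUtilities.getCallbackFromHandle(
    CallbackHandle.fromRawHandle(rawHandle),
  );
}
```

A real plugin would pass the raw handle to platform code over a method channel and have that code invoke the callback when the event fires.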

Another improvement is a reduction of up to 30% in our application package size on both Android and iOS. Our minimal Flutter app on Android now weighs in at just 4.7MB when built in release mode, a savings of 2MB since we started the effort, and we're continuing to identify further potential optimizations. (Note that while the improvements affect both iOS and Android, you may see different results on iOS because of how iOS packages are built.)

Growing Momentum

As many new developers continue to discover Flutter, we're humbled to note that Flutter is now one of the top 50 active software repositories on GitHub:

We declared Flutter "production ready" at Google I/O this year; with Flutter getting ever closer to the stable 1.0 release, many new Flutter applications are being released, with thousands of Flutter-based apps already appearing in the Apple and Google Play stores. These include some of the largest applications on the planet by usage, such as Alibaba (Android, iOS), Tencent Now (Android, iOS), and Google Ads (Android, iOS). Here's a video on how Alibaba used Flutter to build their Xianyu app (Android, iOS), currently used by over 50 million customers in China:

We take customer satisfaction seriously and regularly survey our users. We promised to share the results back with the community, and our most recent survey shows that 92% of developers are satisfied or very satisfied with Flutter and would recommend Flutter to others. When it comes to fast development and beautiful UIs, 79% found Flutter extremely helpful or very helpful in both reaching their maximum engineering velocity and implementing an ideal UI. And 82% of Flutter developers are satisfied or very satisfied with the Dart programming language, which recently celebrated hitting the release milestone for Dart 2.

Flutter's strong community growth can be felt in other ways, too. On Stack Overflow, we see fast-growing interest in Flutter, with lots of new questions being posted, answered and viewed, as this chart shows:

Number of views of Stack Overflow questions tagged with each of four popular UI frameworks over time

Flutter has been open source from day one. That's by design. Our goal is to be transparent about our progress and encourage contributions from individuals and other companies who share our desire to see beautiful user experiences on all platforms.

Getting Started

How do you upgrade to Flutter Release Preview 2? If you're on the beta channel already, it just takes one command:

$ flutter upgrade

You can check that you have Release Preview 2 installed by running flutter --version from the command line. If you have version 0.8.2 or later, you have everything described in this post.

If you haven't tried Flutter yet, now is the perfect time, and flutter.io has all the details to download Flutter and get started with your first app.

When you're ready, there's a whole ecosystem of example apps and code snippets to help you get going. You can find samples from the Flutter team in the flutter/samples repo on GitHub, covering things like how to use Material and Cupertino, approaches for deserializing data encoded in JSON, and more. There's also a curated list of samples that links out to some of the best examples created by the Flutter community.

You can also learn and stay up to date with Flutter through our hands-on videos, newsletters, community articles and developer shows. There are discussion groups, chat rooms, community support, and a weekly online hangout available to you to help you along the way as you build your application. Release Preview 2 is our last release preview. Next stop: 1.0!

Posted by the Flutter Team at Google

Posted by Jan-Felix Schmakeit, Google Photos Developer Lead