Posted by The Android ML Platform Team

The use of on-device ML in Android is growing faster than ever thanks to its unique benefits over server-based ML, such as offline availability, lower latency, improved privacy, and lower inference costs.

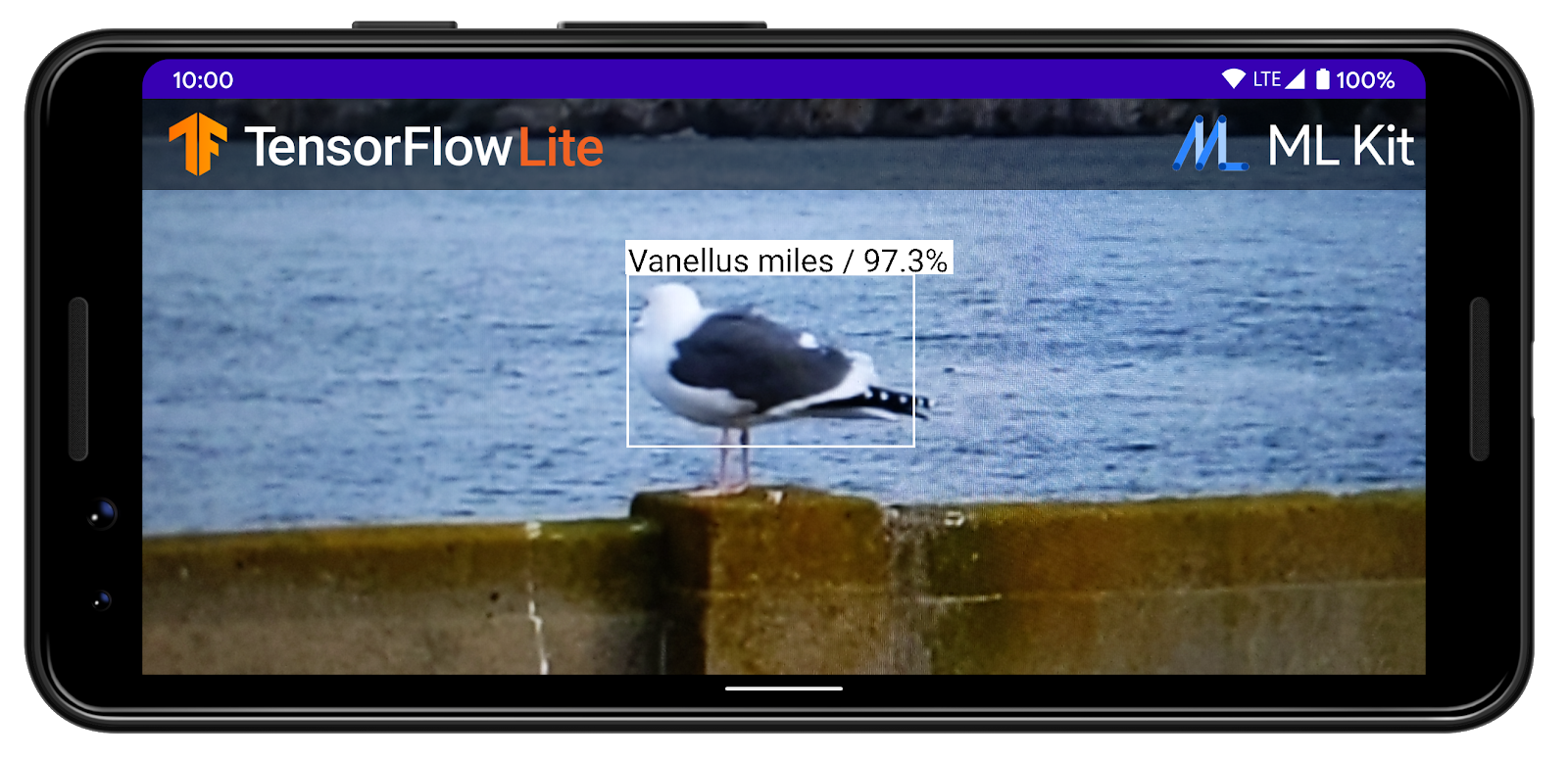

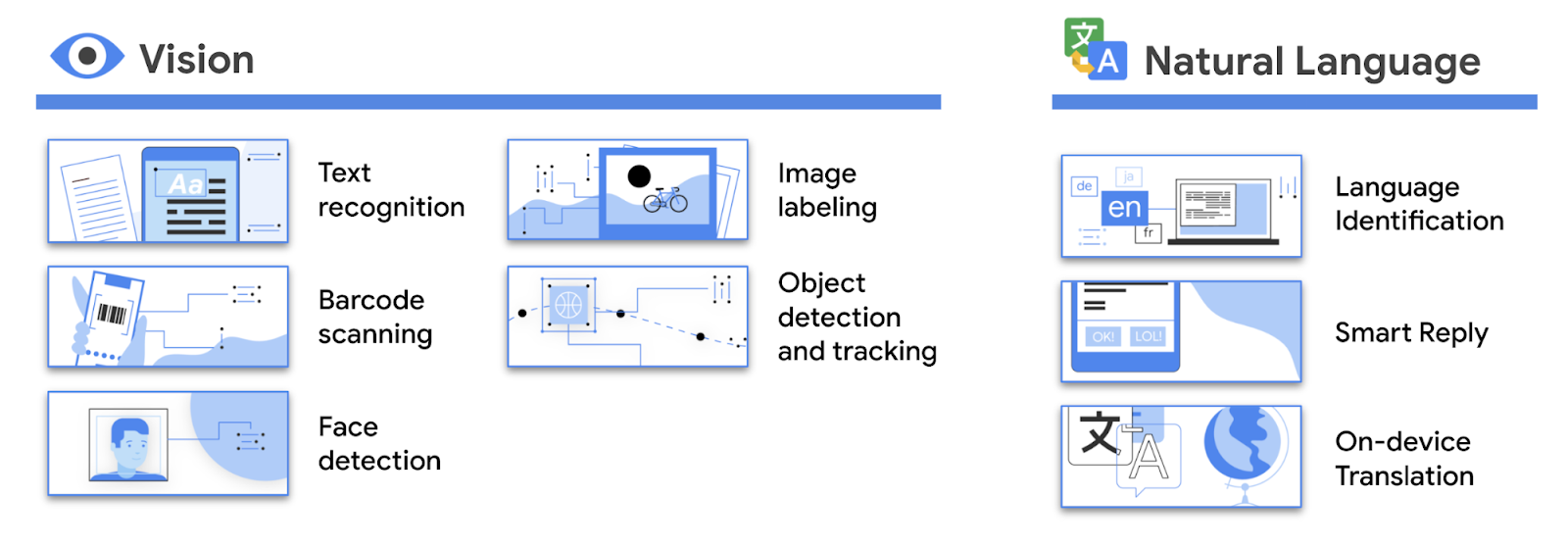

When building on-device ML-based features, Android developers usually choose between two options: using a production-ready SDK that comes with pre-trained and optimized ML models, such as ML Kit, or, if they need more control, deploying their own custom ML models and features.

Today, we have some updates on Android’s custom ML stack, a set of essential APIs and services for deploying custom ML features on Android.

TensorFlow Lite in Google Play services is now Android’s official ML inference engine

We first announced TensorFlow Lite in Google Play services in Early Access Preview at Google I/O '21 as an alternative to standalone TensorFlow Lite. Since then, it has grown to serve billions of users every month via tens of thousands of apps. Last month, we released the stable version of TensorFlow Lite in Google Play services, and we are excited to make it the official ML inference engine on Android.

Using TensorFlow Lite in Google Play services lets you reduce your app’s binary size and benefit from performance improvements delivered through automatic updates. It also ensures that you can easily integrate with future APIs and services from Android’s custom ML stack, because they will be built on top of our official inference engine.

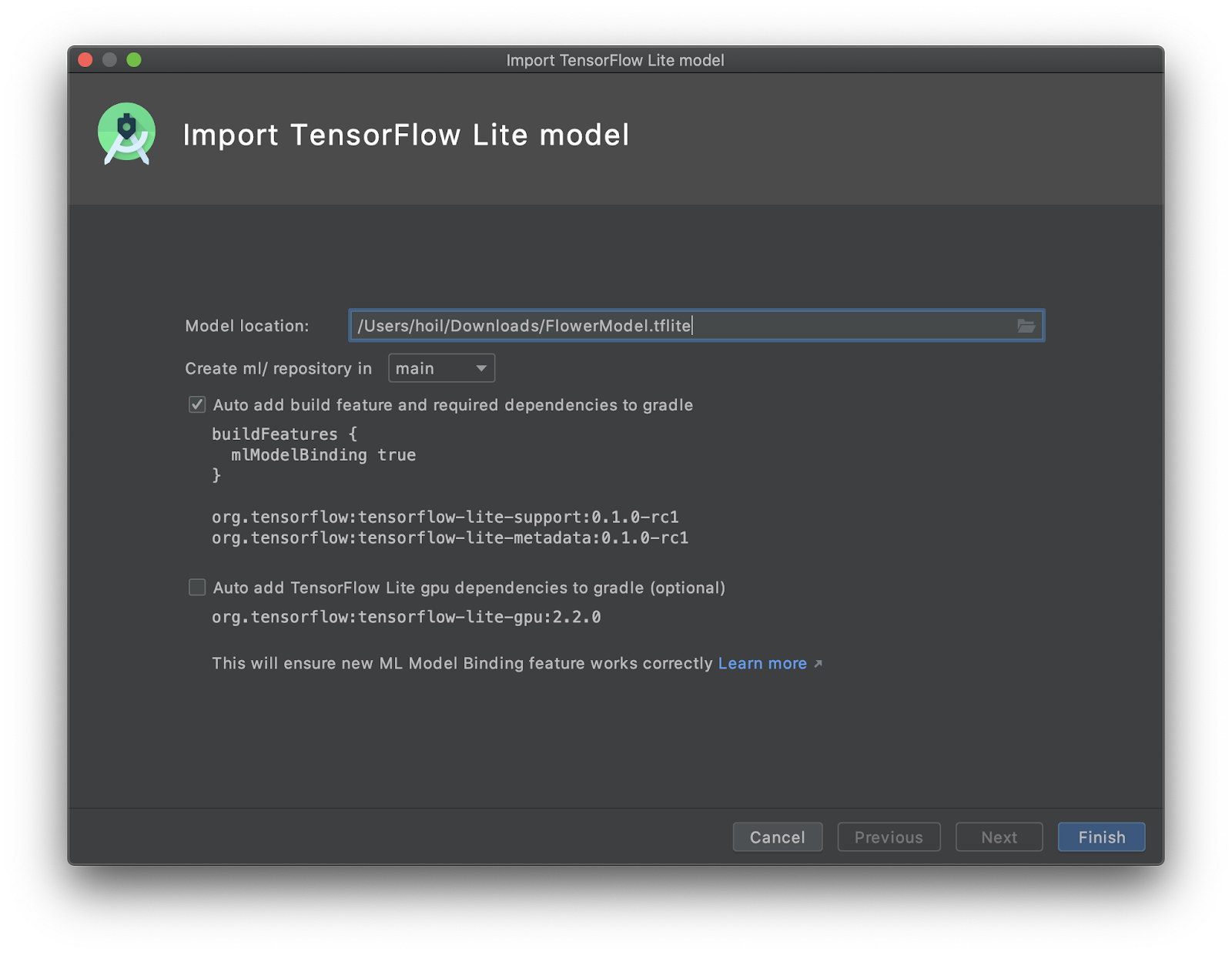

If you are currently bundling TensorFlow Lite with your app, check out the documentation to migrate.

TensorFlow Lite Delegates now distributed via Google Play services

Released a few years ago, the GPU delegate and the NNAPI delegate let you leverage the processing power of specialized hardware such as GPUs, DSPs, or NPUs. Both delegates are now distributed via Google Play services.

We are also aware that, for advanced use cases, some developers want to use custom delegates directly. We’re working with our hardware partners on expanding access to their custom delegates via Google Play services.

Acceleration Service will help you pick the best TensorFlow Lite Delegate for optimal performance at runtime

Identifying the best delegate for each user can be a complex task on Android due to hardware heterogeneity. To help you overcome this challenge, we are building a new API that allows you to safely optimize the hardware acceleration configuration at runtime for your TensorFlow Lite models.

We are currently accepting applications for early access to the Acceleration Service and aim for a public launch early next year.
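To make the selection problem concrete, here is a minimal sketch in plain Java of the general idea: run each candidate configuration on a sample input, discard any that fail or disagree with a CPU reference, and keep the fastest of the rest. This is not the Acceleration Service API, which is not described in this post. Every name below (`DelegateSelectionSketch`, `Candidate`, `pick`, the fake latencies) is hypothetical, and the "models" are simple functions standing in for real TensorFlow Lite interpreters.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;
import java.util.function.ToLongFunction;

// Hypothetical sketch: none of these types belong to TensorFlow Lite
// or to the Acceleration Service.
final class DelegateSelectionSketch {

    /** A named way to run the model, e.g. "cpu" or "gpu" (stand-in for a delegate config). */
    static final class Candidate {
        final String name;
        final Function<float[], float[]> run;

        Candidate(String name, Function<float[], float[]> run) {
            this.name = name;
            this.run = run;
        }
    }

    /** True when both outputs have equal length and differ by at most tolerance per element. */
    static boolean matches(float[] expected, float[] actual, float tolerance) {
        if (actual == null || actual.length != expected.length) return false;
        for (int i = 0; i < expected.length; i++) {
            // Written so that a NaN difference is also rejected.
            if (!(Math.abs(expected[i] - actual[i]) <= tolerance)) return false;
        }
        return true;
    }

    /**
     * Returns the fastest candidate whose output agrees with the CPU reference.
     * Falls back to the CPU candidate when no accelerated option qualifies.
     */
    static Candidate pick(Candidate cpu, List<Candidate> accelerated, float[] sample,
                          float tolerance, ToLongFunction<Candidate> latency) {
        float[] reference = cpu.run.apply(sample);
        Candidate best = cpu;
        long bestLatency = latency.applyAsLong(cpu);
        for (Candidate candidate : accelerated) {
            float[] output;
            try {
                output = candidate.run.apply(sample);
            } catch (RuntimeException e) {
                continue; // candidate is unsupported or failed on this device
            }
            if (!matches(reference, output, tolerance)) continue; // fast but wrong
            long candidateLatency = latency.applyAsLong(candidate);
            if (candidateLatency < bestLatency) {
                best = candidate;
                bestLatency = candidateLatency;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        Candidate cpu = new Candidate("cpu", input -> new float[] {input[0] * 2f});
        Candidate gpu = new Candidate("gpu", input -> new float[] {input[0] * 2f + 0.0001f});
        Candidate nnapi = new Candidate("nnapi", input -> {
            throw new UnsupportedOperationException("no driver");
        });
        Candidate dsp = new Candidate("dsp", input -> new float[] {input[0] * 3f});

        // Fixed fake latencies keep the example deterministic; a real app
        // would time repeated inference runs instead.
        ToLongFunction<Candidate> fakeLatency =
                c -> c.name.equals("cpu") ? 40 : c.name.equals("gpu") ? 12 : 5;

        Candidate chosen = pick(cpu, Arrays.asList(gpu, nnapi, dsp),
                new float[] {1.5f}, 0.01f, fakeLatency);
        System.out.println(chosen.name); // prints "gpu"
    }
}
```

In this sketch the latency measurement is passed in as a function so the selection logic stays deterministic and easy to test. Checking outputs against a CPU reference is one way to interpret "safely" here: a configuration that is fast but produces different results is never chosen.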

We will keep investing in Android’s custom ML stack

We are committed to providing the essentials for high-performance custom on-device ML on Android.

In summary, Android’s custom ML stack currently includes:

- TensorFlow Lite in Google Play services for high-performance on-device inference

- TensorFlow Lite Delegates for accessing hardware acceleration

Soon, we will release an Acceleration Service, which will help pick the optimal delegate for you at runtime.

You can read about and stay up to date with Android’s custom ML stack at developer.android.com/ml.