How well do you know Halloween 2023? Take our Google Search Trends quiz and find out.

Quiz: Test your Halloween search trend knowledge

We are announcing new ways across Search and Lens for learners to explore new topics and get unstuck on complex problems.

A list of spooky books that you can read free of charge on Google Books.

Many people with questions about grammar turn to Google Search for guidance. While existing features, such as “Did you mean”, already handle simple typo corrections, more complex grammatical error correction (GEC) is beyond their scope. What makes the development of new Google Search features challenging is that they must have high precision and recall while outputting results quickly.

The conventional approach to GEC is to treat it as a translation problem and use autoregressive Transformer models to decode the response token-by-token, conditioning on the previously generated tokens. However, although Transformer models have proven to be effective at GEC, they aren’t particularly efficient because the generation cannot be parallelized due to autoregressive decoding. Often, only a few modifications are needed to make the input text grammatically correct, so another possible solution is to treat GEC as a text editing problem. If we could run the autoregressive decoder only to generate the modifications, that would substantially decrease the latency of the GEC model.

To this end, in “EdiT5: Semi-Autoregressive Text-Editing with T5 Warm-Start”, published in Findings of EMNLP 2022, we describe a novel text-editing model that is based on the T5 Transformer encoder-decoder architecture. EdiT5 powers the new Google Search grammar check feature that allows you to check if a phrase or sentence is grammatically correct and provides corrections when needed. Grammar check shows up when the phrase “grammar check” is included in a search query and the underlying model is confident about the correction. Additionally, it shows up for some queries that don’t contain the “grammar check” phrase when Search understands that this is the likely intent.

For low-latency applications at Google, Transformer models are typically run on TPUs. Due to their fast matrix multiplication units (MMUs), these devices are optimized for performing large matrix multiplications quickly, for example running a Transformer encoder on hundreds of tokens in only a few milliseconds. In contrast, Transformer decoding makes poor use of a TPU’s capabilities, because it forces it to process only one token at a time. This makes autoregressive decoding the most time-consuming part of a translation-based GEC model.

In the EdiT5 approach, we reduce the number of decoding steps by treating GEC as a text editing problem. The EdiT5 text-editing model is based on the T5 Transformer encoder-decoder architecture with a few crucial modifications. Given an input with grammatical errors, the EdiT5 model uses an encoder to determine which input tokens to keep or delete. The kept input tokens form a draft output, which is optionally reordered using a non-autoregressive pointer network. Finally, a decoder outputs the tokens that are missing from the draft, and uses a pointing mechanism to indicate where each new token should be placed to generate a grammatically correct output. The decoder is only run to produce tokens that were missing in the draft, and as a result, it runs for far fewer steps than would be needed in the translation approach to GEC.
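The keep/delete, reorder, and insert steps can be illustrated with a toy sketch. This is not the EdiT5 implementation: the helper name `apply_edits`, its arguments, and the hand-written edit decisions are assumptions made for illustration. In the real model these decisions come from the encoder, the pointer network, and the decoder.

```python
from typing import Dict, List, Optional, Sequence, Tuple


def apply_edits(
    tokens: Sequence[str],
    keep: Sequence[bool],
    insertions: Sequence[Tuple[int, str]],
    order: Optional[Sequence[int]] = None,
) -> List[str]:
    """Toy text-editing step (hypothetical helper, not the EdiT5 code)."""
    # 1) Tagging: keep or delete each input token to form the draft.
    draft = [tok for tok, k in zip(tokens, keep) if k]
    # 2) Optional reordering of the kept tokens.
    if order is not None:
        draft = [draft[i] for i in order]
    # 3) Insertion: each new token points at a slot in the draft,
    #    meaning "place this token before draft[slot]".
    by_slot: Dict[int, List[str]] = {}
    for slot, tok in insertions:
        by_slot.setdefault(slot, []).append(tok)
    output: List[str] = []
    for i in range(len(draft) + 1):
        output.extend(by_slot.get(i, []))
        if i < len(draft):
            output.append(draft[i])
    return output


source = "I need a efficient card and reliable .".split()
keep = [True, True, False, True, True, True, True, True]  # delete "a"
order = [0, 1, 2, 4, 5, 3, 6]  # move "card" after "reliable"
insertions = [(2, "an")]  # the only token that must be generated
corrected = apply_edits(source, keep, insertions, order)
print(" ".join(corrected))
```

In this example only one token ("an") has to be generated, whereas a translation-style decoder would emit all eight output tokens one by one.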

To further decrease the decoder latency, we reduce the decoder down to a single layer, and we compensate by increasing the size of the encoder. Overall, this decreases latency significantly because the extra work in the encoder is efficiently parallelized.

We applied the EdiT5 model to the public BEA grammatical error correction benchmark, comparing different model sizes. The experimental results show that an EdiT5 large model with 391M parameters yields a higher F0.5 score, which measures the accuracy of the corrections, while delivering a 9x speedup compared to a T5 base model with 248M parameters. The mean latency of the EdiT5 model was merely 4.1 milliseconds.
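For readers unfamiliar with the metric, F0.5 is the F-beta score with beta = 0.5, which weights precision more heavily than recall. The sketch below computes it from edit counts; the function name and the example counts are illustrative assumptions, not figures from the benchmark.

```python
def f_beta(true_pos: int, false_pos: int, false_neg: int, beta: float = 0.5) -> float:
    """F-beta score from counts of correct, spurious, and missed edits."""
    if true_pos == 0:
        return 0.0
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Made-up counts: 8 correct edits, 2 spurious edits, 8 missed edits.
score = f_beta(true_pos=8, false_pos=2, false_neg=8)
print(round(score, 3))
```

With high precision (0.8) and lower recall (0.5), F0.5 comes out higher than F1 would for the same counts, reflecting the preference for corrections that are right over corrections that are exhaustive.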

Our earlier research, as well as the results above, shows that model size plays a crucial role in generating accurate grammatical corrections. To combine the advantages of large language models (LLMs) and the low latency of EdiT5, we leverage a technique called hard distillation. First, we train a teacher LLM using datasets similar to those used for the Gboard grammar model. The teacher model is then used to generate training data for the student EdiT5 model.

Training sets for grammar models consist of ungrammatical source / grammatical target sentence pairs. Some of the training sets have noisy targets that contain grammatical errors, unnecessary paraphrasing, or unwanted artifacts. Therefore, we generate new pseudo-targets with the teacher model to get cleaner and more consistent training data. Then, we re-train the teacher model with the pseudo-targets using a technique called self-training. Finally, we found that when the source sentence contains many errors, the teacher sometimes corrects only some of them. Thus, we can further improve the quality of the pseudo-targets by feeding them to the teacher LLM a second time, a technique called iterative refinement.
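The pseudo-target generation with iterative refinement can be sketched as a simple loop. Everything here is a stand-in: `toy_teacher` is a rule-based placeholder for the teacher LLM that fixes only one error per pass, and `make_pseudo_targets` is a hypothetical helper. The self-training step (re-training the teacher on its own outputs) is not shown.

```python
from typing import Callable, List, Sequence, Tuple

Teacher = Callable[[str], str]


def make_pseudo_targets(
    sources: Sequence[str], teacher: Teacher, passes: int = 2
) -> List[Tuple[str, str]]:
    """Build (source, pseudo-target) pairs for training the student."""
    pairs = []
    for src in sources:
        target = src
        # Iterative refinement: feed the teacher its own output again.
        for _ in range(passes):
            target = teacher(target)
        pairs.append((src, target))
    return pairs


# Toy stand-in for the teacher LLM (assumption): one fix per pass.
FIXES = [("a efficient", "an efficient"), ("live of", "live off")]


def toy_teacher(text: str) -> str:
    for wrong, right in FIXES:
        if wrong in text:
            return text.replace(wrong, right, 1)
    return text


sources = ["I live of my profession and need a efficient card"]
one_pass = make_pseudo_targets(sources, toy_teacher, passes=1)
two_pass = make_pseudo_targets(sources, toy_teacher, passes=2)
print(one_pass[0][1])
print(two_pass[0][1])
```

After one pass the pseudo-target still contains "live of"; the second pass removes the remaining error, which is the effect the refinement step is meant to achieve.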

Using the improved GEC data, we train two EdiT5-based models: a grammatical error correction model, and a grammaticality classifier. When the grammar check feature is used, we run the query first through the correction model, and then we check if the output is indeed correct with the classifier model. Only then do we surface the correction to the user.

The reason to have a separate classifier model is to more easily trade off between precision and recall. Additionally, for ambiguous or nonsensical queries to the model where the best correction is unclear, the classifier reduces the risk of serving erroneous or confusing corrections.
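A minimal sketch of this two-stage serving logic is shown below. The function names, the score range, and the threshold value are assumptions made for illustration; the lambdas are toy stand-ins for the two EdiT5-based models.

```python
from typing import Callable, Optional


def grammar_check(
    query: str,
    correct: Callable[[str], str],
    grammaticality: Callable[[str], float],
    threshold: float = 0.9,
) -> Optional[str]:
    """Return a correction only if the classifier accepts it (hypothetical)."""
    candidate = correct(query)
    # Raising the threshold favors precision; lowering it favors recall.
    if grammaticality(candidate) >= threshold:
        return candidate
    return None


# Toy stand-ins for the correction model and the grammaticality classifier.
toy_corrections = {"I live of my profession.": "I live off my profession."}
toy_correct = lambda q: toy_corrections.get(q, q)
toy_score = lambda s: 0.95 if s == "I live off my profession." else 0.2

print(grammar_check("I live of my profession.", toy_correct, toy_score))
print(grammar_check("colorless green ideas sleep", toy_correct, toy_score))
```

Keeping the threshold outside the correction model means the precision/recall trade-off can be tuned without retraining the corrector.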

We have developed an efficient grammar correction model based on the state-of-the-art EdiT5 model architecture. This model allows users to check the grammaticality of their queries in Google Search by including the “grammar check” phrase in the query.

We gratefully acknowledge the key contributions of the other team members, including Akash R, Aliaksei Severyn, Harsh Shah, Jonathan Mallinson, Mithun Kumar S R, Samer Hassan, Sebastian Krause, and Shikhar Thakur. We’d also like to thank Felix Stahlberg, Shankar Kumar, and Simon Tong for helpful discussions and pointers.

Today, we’re announcing three new ways that you can get more context about the images and sources you’re finding online.

Learning a language can open up new opportunities in a person’s life. It can help people connect with those from different cultures, travel the world, and advance their career. English alone is estimated to have 1.5 billion learners worldwide. Yet proficiency in a new language is difficult to achieve, and many learners cite a lack of opportunity to practice speaking actively and receiving actionable feedback as a barrier to learning.

We are excited to announce a new feature of Google Search that helps people practice speaking and improve their language skills. Within the next few days, Android users in Argentina, Colombia, India (Hindi), Indonesia, Mexico, and Venezuela can get even more language support from Google through interactive speaking practice in English — expanding to more countries and languages in the future. Google Search is already a valuable tool for language learners, providing translations, definitions, and other resources to improve vocabulary. Now, learners translating to or from English on their Android phones will find a new English speaking practice experience with personalized feedback.

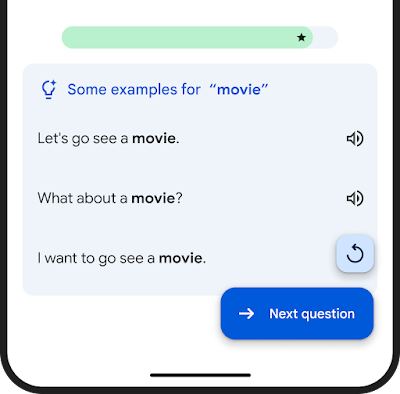

| A new feature of Google Search allows learners to practice speaking words in context. |

Learners are presented with real-life prompts and then form their own spoken answers using a provided vocabulary word. They engage in practice sessions of 3-5 minutes, getting personalized feedback and the option to sign up for daily reminders to keep practicing. With only a smartphone and some quality time, learners can practice at their own pace, anytime, anywhere.

Designed to be used alongside other learning services and resources, like personal tutoring, mobile apps, and classes, the new speaking practice feature on Google Search is another tool to assist learners on their journey.

We have partnered with linguists, teachers, and ESL/EFL pedagogical experts to create a speaking practice experience that is effective and motivating. Learners practice vocabulary in authentic contexts, and material is repeated over dynamic intervals to increase retention — approaches that are known to be effective in helping learners become confident speakers. As one partner of ours shared:

"Speaking in a given context is a skill that language learners often lack the opportunity to practice. Therefore this tool is very useful to complement classes and other resources." - Judit Kormos, Professor, Lancaster University

We are also excited to be working with several language learning partners to surface content they are helping create and to connect them with learners around the world. We look forward to expanding this program further and working with any interested partner.

Every learner is different, so delivering personalized feedback in real time is a key part of effective practice. Responses are analyzed to provide helpful, real-time suggestions and corrections.

The system gives semantic feedback, indicating whether the learner’s response was relevant to the question and may be understood by a conversation partner. Grammar feedback provides insights into possible grammatical improvements, and a set of example answers at varying levels of language complexity gives concrete suggestions for alternative ways to respond in this context.

| The feedback is composed of three elements: Semantic analysis, grammar correction, and example answers. |

Among the several new technologies we developed, contextual translation provides the ability to translate individual words and phrases in context. During practice sessions, learners can tap on any word they don’t understand to see the translation of that word considering its context.

| Example of contextual translation feature. |

This is a difficult technical task, since individual words in isolation often have multiple alternative meanings, and multiple words can form clusters of meaning that need to be translated in unison. Our novel approach translates the entire sentence, then estimates how the words in the original and the translated text relate to each other. This is commonly known as the word alignment problem.

| Example of a translated sentence pair and its word alignment. A deep learning alignment model connects the different words that create the meaning to suggest a translation. |
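Once a sentence pair has been aligned, looking up the in-context translation of a tapped word reduces to following its alignment links. The sketch below assumes the alignment is given as a set of (source index, target index) pairs; the function name and the English/Spanish example are illustrative and not taken from the product.

```python
from typing import List, Set, Tuple


def translate_in_context(
    target_tokens: List[str], links: Set[Tuple[int, int]], source_index: int
) -> str:
    """Return the target words aligned to one source word (hypothetical helper)."""
    # A source word may align to several target words, so collect them all
    # and keep them in target-sentence order.
    target_positions = sorted(t for s, t in links if s == source_index)
    return " ".join(target_tokens[t] for t in target_positions)


source = ["The", "white", "house"]
target = ["La", "casa", "blanca"]
# Hand-written alignment for illustration; a real system would predict it.
links = {(0, 0), (1, 2), (2, 1)}

print(translate_in_context(target, links, source.index("white")))
```

Because the whole sentence is translated first, the word order difference between "white house" and "casa blanca" is handled by the alignment rather than by a word-by-word dictionary lookup.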

The key technology piece that enables this functionality is a novel deep learning model developed in collaboration with the Google Translate team, called Deep Aligner. The basic idea is to take a multilingual language model trained on hundreds of languages, then fine-tune a novel alignment model on a set of word alignment examples (see the figure above for an example) provided by human experts, for several language pairs. From this, the single model can then accurately align any language pair, reaching state-of-the-art alignment error rate (AER, a metric to measure the quality of word alignments, where lower is better). This single new model has led to dramatic improvements in alignment quality across all tested language pairs, reducing average AER from 25% to 5% compared to alignment approaches based on Hidden Markov models (HMMs).
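For reference, AER is conventionally computed from a predicted link set and human annotations split into "sure" and "possible" links. The sketch below follows that conventional definition; the post does not state which exact variant was used, so treat the formula and the toy link sets as assumptions.

```python
from typing import Set, Tuple

Link = Tuple[int, int]  # (source token index, target token index)


def alignment_error_rate(
    predicted: Set[Link], sure: Set[Link], possible: Set[Link]
) -> float:
    """AER = 1 - (|A & S| + |A & P|) / (|A| + |S|), lower is better."""
    possible = possible | sure  # sure links are always possible links
    if not predicted and not sure:
        return 0.0
    matched = len(predicted & sure) + len(predicted & possible)
    return 1.0 - matched / (len(predicted) + len(sure))


# Toy annotation: two sure links, one extra possible link.
sure = {(0, 0), (1, 1)}
possible = {(2, 2)}
predicted = {(0, 0), (1, 1), (2, 3)}  # the last link is wrong
print(round(alignment_error_rate(predicted, sure, possible), 2))
```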

| Alignment error rates (lower is better) between English (EN) and other languages. |

This model is also incorporated into Google’s translation APIs, greatly improving, for example, the formatting of translated PDFs and websites in Chrome, the translation of YouTube captions, and enhancing Google Cloud’s translation API.

To enable grammar feedback for accented spoken language, our research teams adapted grammar correction models for written text (see the blog and paper) to work on automatic speech recognition (ASR) transcriptions, specifically for the case of accented speech. The key step was fine-tuning the written text model on a corpus of human and ASR transcripts of accented speech, with expert-provided grammar corrections. Furthermore, inspired by previous work, the teams developed a novel edit-based output representation that leverages the high overlap between inputs and outputs, which makes it particularly well-suited to the short input sentences common in language learning settings.

The edit representation can be explained using an example:

In the above, “at” is the word that is inserted at position 4 and “PREPOSITION” denotes this is an error involving prepositions. We used the error tag to select tag-dependent acceptance thresholds that improved the model further. The model increased the recall of grammar problems from 4.6% to 35%.
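To make the idea of tagged edits with tag-dependent acceptance thresholds concrete, here is a small sketch. The data layout, the tag names other than PREPOSITION, the scores, and the threshold values are all assumptions for illustration and do not reproduce the model's actual output format. Only insertion edits are covered.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class InsertEdit:
    position: int  # index in the input tokens before which the word goes
    word: str
    tag: str       # error category, e.g. "PREPOSITION"
    score: float   # model confidence (hypothetical)


def apply_accepted(
    tokens: Sequence[str],
    edits: Sequence[InsertEdit],
    thresholds: Dict[str, float],
    default_threshold: float = 0.5,
) -> List[str]:
    """Apply only edits whose score clears the threshold for their tag."""
    accepted = [
        e for e in edits if e.score >= thresholds.get(e.tag, default_threshold)
    ]
    out = list(tokens)
    # Apply right to left so earlier input positions stay valid.
    for e in sorted(accepted, key=lambda e: e.position, reverse=True):
        out.insert(e.position, e.word)
    return out


tokens = "We arrived the station late".split()
edits = [
    InsertEdit(position=2, word="at", tag="PREPOSITION", score=0.8),
    InsertEdit(position=4, word="very", tag="ADVERB", score=0.3),
]
thresholds = {"PREPOSITION": 0.6, "ADVERB": 0.7}
result = apply_accepted(tokens, edits, thresholds)
print(" ".join(result))
```

Because each edit carries its error category, a stricter threshold can be set for categories where the model is less reliable without affecting the others.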

Some example output from our model and a model trained on written corpora:

| | Example 1 | Example 2 |
| --- | --- | --- |
| User input (transcribed speech) | I live of my profession. | I need a efficient card and reliable. |
| Text-based grammar model | I live by my profession. | I need an efficient card and a reliable. |
| New speech-optimized model | I live off my profession. | I need an efficient and reliable card. |

A primary goal of conversation is to communicate one’s intent clearly. Thus, we designed a feature that visually communicates to the learner whether their response was relevant to the context and would be understood by a partner. This is a difficult technical problem, since early language learners’ spoken responses can be syntactically unconventional. We had to carefully balance this technology to focus on the clarity of intent rather than correctness of syntax.

Our system utilizes a combination of two approaches:

| The system provides feedback about whether the response was relevant to the prompt, and would be understood by a communication partner. |

Our available practice activities present a mix of human-expert created content, and content that was created with AI assistance and human review. This includes speaking prompts, focus words, as well as sets of example answers that showcase meaningful and contextual responses.

| A list of example answers is provided when the learner receives feedback and when they tap the help button. |

Since learners have different levels of ability, the language complexity of the content has to be adjusted appropriately. Prior work on language complexity estimation focuses on text of paragraph length or longer, which differs significantly from the type of responses that our system processes. Thus, we developed novel models that can estimate the complexity of a single sentence, phrase, or even individual words. This is challenging because even a phrase composed of simple words can be hard for a language learner (e.g., “Let’s cut to the chase”). Our best model is based on BERT and achieves complexity predictions closest to human expert consensus. The model was pre-trained using a large set of LLM-labeled examples, and then fine-tuned using a human expert–labeled dataset.

| Mean squared error of various approaches’ performance estimating content difficulty on a diverse corpus of ~450 conversational passages (text / transcriptions). Top row: Human raters labeled the items on a scale from 0.0 to 5.0, roughly aligned to the CEFR scale (from A1 to C2). Bottom four rows: Different models performed the same task, and we show the difference to the human expert consensus. |
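As a reminder of how such a comparison is computed, the sketch below evaluates one model's difficulty predictions against human consensus labels on the 0.0 to 5.0 scale using mean squared error. The numbers are made up for illustration and are not results from the evaluation.

```python
from typing import Sequence


def mean_squared_error(predicted: Sequence[float], reference: Sequence[float]) -> float:
    """Average squared difference between predictions and reference labels."""
    if len(predicted) != len(reference) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - r) ** 2 for p, r in zip(predicted, reference)) / len(reference)


# Made-up difficulty scores for two passages (0.0 = easiest, 5.0 = hardest).
human_consensus = [2.0, 5.0]
model_scores = [1.0, 3.0]
print(mean_squared_error(model_scores, human_consensus))
```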

Using this model, we can evaluate the difficulty of text items, offer a diverse range of suggestions, and most importantly challenge learners appropriately for their ability levels. For example, using our model to label examples, we can fine-tune our system to generate speaking prompts at various language complexity levels.

Vocabulary focus words, to be elicited by the questions:

| Complexity level | guitar | apple | lion |
| --- | --- | --- | --- |
| Simple | What do you like to play? | Do you like fruit? | Do you like big cats? |
| Intermediate | Do you play any musical instruments? | What is your favorite fruit? | What is your favorite animal? |
| Complex | What stringed instrument do you enjoy playing? | Which type of fruit do you enjoy eating for its crunchy texture and sweet flavor? | Do you enjoy watching large, powerful predators? |

Furthermore, content difficulty estimation is used to gradually increase the task difficulty over time, adapting to the learner’s progress.

With these latest updates, which will roll out over the next few days, Google Search has become even more helpful. If you are an Android user in India (Hindi), Indonesia, Argentina, Colombia, Mexico, or Venezuela, give it a try by translating to or from English with Google.

We look forward to expanding to more countries and languages in the future, and to offering partner practice content soon.

Many people were involved in the development of this project. Among many others, we thank our external advisers in the language learning field: Jeffrey Davitz, Judit Kormos, Deborah Healey, Anita Bowles, Susan Gaer, Andrea Revesz, Bradley Opatz, and Anne Mcquade.

We’re testing new ways to get started on something you need to do — like creating an image that can bring an idea to life, or a written draft when you need a starting po…

These new product features and expansions help people, city planners and policy makers take action toward building a sustainable future.

By partnering with Google Search, the Aks Foundation helped more survivors of domestic violence and sexual abuse with their 24/7 crisis hotline.

Search Generative Experience is rolling out to teens, so they can test out generative AI in Google Search.
