The 10th International Conference on Learning Representations (ICLR 2022) kicks off this week, bringing together researchers, entrepreneurs, engineers and students alike to discuss and explore the rapidly advancing field of deep learning. Entirely virtual this year, ICLR 2022 offers conference and workshop tracks that present some of the latest research in deep learning and its applications to areas ranging from computer vision, speech recognition and text understanding to robotics, computational biology, and more.

As a Platinum Sponsor of ICLR 2022 and Champion DEI Action Fund contributor, Google will have a robust presence with nearly 100 accepted publications and extensive participation on organizing committees and in workshops. If you have registered for ICLR 2022, we hope you’ll watch our talks and learn about the work done at Google to address complex problems that affect billions of people. Here you can learn more about the research we will be presenting as well as our general involvement at ICLR 2022 (those with Google affiliations in bold).

Senior Area Chairs:

Includes: Been Kim, Dale Schuurmans, Sergey Levine

Area Chairs:

Includes: Adam White, Aditya Menon, Aleksandra Faust, Amin Karbasi, Amir Globerson, Andrew Dai, Balaji Lakshminarayanan, Behnam Neyshabur, Ben Poole, Bhuwan Dhingra, Bo Dai, Boqing Gong, Cristian Sminchisescu, David Ha, David Woodruff, Denny Zhou, Dipanjan Das, Dumitru Erhan, Dustin Tran, Emma Strubell, Eunsol Choi, George Dahl, George Tucker, Hanie Sedghi, Heinrich Jiang, Hossein Mobahi, Hugo Larochelle, Izhak Shafran, Jasper Snoek, Jean-Philippe Vert, Jeffrey Pennington, Justin Gilmer, Karol Hausman, Kevin Swersky, Krzysztof Choromanski, Mathieu Blondel, Matt Kusner, Michael Ryoo, Ming-Hsuan Yang, Minmin Chen, Mirella Lapata, Mohammad Ghavamzadeh, Mohammad Norouzi, Naman Agarwal, Nicholas Carlini, Olivier Bachem, Piyush Rai, Prateek Jain, Quentin Berthet, Richard Nock, Rose Yu, Sewoong Oh, Silvio Lattanzi, Slav Petrov, Srinadh Bhojanapalli, Tim Salimans, Ting Chen, Tong Zhang, Vikas Sindhwani, Weiran Wang, William Cohen, Xiaoming Liu

Workflow Chairs:

Includes: Yaguang Li

Diversity, Equity & Inclusion Chairs:

Includes: Rosanne Liu

Invited Talks

Beyond Interpretability: Developing a Language to Shape Our Relationships with AI

Google Speaker: Been Kim

Do You See What I See? Large-Scale Learning from Multimodal Videos

Google Speaker: Cordelia Schmid

Publications

Hyperparameter Tuning with Renyi Differential Privacy – 2022 Outstanding Paper Award

Nicolas Papernot, Thomas Steinke

MIDI-DDSP: Detailed Control of Musical Performance via Hierarchical Modeling

Yusong Wu, Ethan Manilow, Yi Deng, Rigel Swavely, Kyle Kastner, Tim Cooijmans, Aaron Courville, Cheng-Zhi Anna Huang, Jesse Engel

The Information Geometry of Unsupervised Reinforcement Learning

Benjamin Eysenbach, Ruslan Salakhutdinov, Sergey Levine

Learning Strides in Convolutional Neural Networks – 2022 Outstanding Paper Award

Rachid Riad*, Olivier Teboul, David Grangier, Neil Zeghidour

Poisoning and Backdooring Contrastive Learning

Nicholas Carlini, Andreas Terzis

Coordination Among Neural Modules Through a Shared Global Workspace

Anirudh Goyal, Aniket Didolkar, Alex Lamb, Kartikeya Badola, Nan Rosemary Ke, Nasim Rahaman, Jonathan Binas, Charles Blundell, Michael Mozer, Yoshua Bengio

Fine-Tuned Language Models Are Zero-Shot Learners (see the blog post)

Jason Wei, Maarten Bosma, Vincent Y. Zhao, Kelvin Guu, Adams Wei Yu, Brian Lester, Nan Du, Andrew M. Dai, Quoc V. Le

Large Language Models Can Be Strong Differentially Private Learners

Xuechen Li, Florian Tramèr, Percy Liang, Tatsunori Hashimoto

Progressive Distillation for Fast Sampling of Diffusion Models

Tim Salimans, Jonathan Ho

Exploring the Limits of Large Scale Pre-training

Samira Abnar, Mostafa Dehghani, Behnam Neyshabur, Hanie Sedghi

Scarf: Self-Supervised Contrastive Learning Using Random Feature Corruption

Dara Bahri, Heinrich Jiang, Yi Tay, Donald Metzler

Scalable Sampling for Nonsymmetric Determinantal Point Processes

Insu Han, Mike Gartrell, Jennifer Gillenwater, Elvis Dohmatob, Amin Karbasi

When Vision Transformers Outperform ResNets without Pre-training or Strong Data Augmentations

Xiangning Chen, Cho-Jui Hsieh, Boqing Gong

ViTGAN: Training GANs with Vision Transformers

Kwonjoon Lee, Huiwen Chang, Lu Jiang, Han Zhang, Zhuowen Tu, Ce Liu

Generalized Decision Transformer for Offline Hindsight Information Matching

Hiroki Furuta, Yutaka Matsuo, Shixiang Shane Gu

The MultiBERTs: BERT Reproductions for Robustness Analysis

Thibault Sellam, Steve Yadlowsky, Ian Tenney, Jason Wei, Naomi Saphra, Alexander D’Amour, Tal Linzen, Jasmijn Bastings, Iulia Turc, Jacob Eisenstein, Dipanjan Das, Ellie Pavlick

Scaling Laws for Neural Machine Translation

Behrooz Ghorbani, Orhan Firat, Markus Freitag, Ankur Bapna, Maxim Krikun, Xavier Garcia, Ciprian Chelba, Colin Cherry

Interpretable Unsupervised Diversity Denoising and Artefact Removal

Mangal Prakash, Mauricio Delbracio, Peyman Milanfar, Florian Jug

Understanding Latent Correlation-Based Multiview Learning and Self-Supervision: An Identifiability Perspective

Qi Lyu, Xiao Fu, Weiran Wang, Songtao Lu

Memorizing Transformers

Yuhuai Wu, Markus N. Rabe, DeLesley Hutchins, Christian Szegedy

Churn Reduction via Distillation

Heinrich Jiang, Harikrishna Narasimhan, Dara Bahri, Andrew Cotter, Afshin Rostamizadeh

DR3: Value-Based Deep Reinforcement Learning Requires Explicit Regularization

Aviral Kumar, Rishabh Agarwal, Tengyu Ma, Aaron Courville, George Tucker, Sergey Levine

Path Auxiliary Proposal for MCMC in Discrete Space

Haoran Sun, Hanjun Dai, Wei Xia, Arun Ramamurthy

On the Relation Between Statistical Learning and Perceptual Distances

Alexander Hepburn, Valero Laparra, Raul Santos-Rodriguez, Johannes Ballé, Jesús Malo

Possibility Before Utility: Learning And Using Hierarchical Affordances

Robby Costales, Shariq Iqbal, Fei Sha

MT3: Multi-Task Multitrack Music Transcription

Josh Gardner*, Ian Simon, Ethan Manilow*, Curtis Hawthorne, Jesse Engel

Bayesian Neural Network Priors Revisited

Vincent Fortuin, Adrià Garriga-Alonso, Sebastian W. Ober, Florian Wenzel, Gunnar Rätsch, Richard E. Turner, Mark van der Wilk, Laurence Aitchison

GradMax: Growing Neural Networks using Gradient Information

Utku Evci, Bart van Merrienboer, Thomas Unterthiner, Fabian Pedregosa, Max Vladymyrov

Scene Transformer: A Unified Architecture for Predicting Future Trajectories of Multiple Agents

Jiquan Ngiam, Benjamin Caine, Vijay Vasudevan, Zhengdong Zhang, Hao-Tien Lewis Chiang, Jeffrey Ling, Rebecca Roelofs, Alex Bewley, Chenxi Liu, Ashish Venugopal, David Weiss, Ben Sapp, Zhifeng Chen, Jonathon Shlens

The Role of Pretrained Representations for the OOD Generalization of RL Agents

Frederik Träuble, Andrea Dittadi, Manuel Wüthrich, Felix Widmaier, Peter Gehler, Ole Winther, Francesco Locatello, Olivier Bachem, Bernhard Schölkopf, Stefan Bauer

Autoregressive Diffusion Models

Emiel Hoogeboom, Alexey A. Gritsenko, Jasmijn Bastings, Ben Poole, Rianne van den Berg, Tim Salimans

The Role of Permutation Invariance in Linear Mode Connectivity of Neural Networks

Rahim Entezari, Hanie Sedghi, Olga Saukh, Behnam Neyshabur

DISSECT: Disentangled Simultaneous Explanations via Concept Traversals

Asma Ghandeharioun, Been Kim, Chun-Liang Li, Brendan Jou, Brian Eoff, Rosalind W. Picard

Anisotropic Random Feature Regression in High Dimensions

Gabriel C. Mel, Jeffrey Pennington

Open-Vocabulary Object Detection via Vision and Language Knowledge Distillation

Xiuye Gu, Tsung-Yi Lin*, Weicheng Kuo, Yin Cui

MCMC Should Mix: Learning Energy-Based Model with Flow-Based Backbone

Erik Nijkamp*, Ruiqi Gao, Pavel Sountsov, Srinivas Vasudevan, Bo Pang, Song-Chun Zhu, Ying Nian Wu

Effect of Scale on Catastrophic Forgetting in Neural Networks

Vinay Ramasesh, Aitor Lewkowycz, Ethan Dyer

Incremental False Negative Detection for Contrastive Learning

Tsai-Shien Chen, Wei-Chih Hung, Hung-Yu Tseng, Shao-Yi Chien, Ming-Hsuan Yang

Towards Evaluating the Robustness of Neural Networks Learned by Transduction

Jiefeng Chen, Xi Wu, Yang Guo, Yingyu Liang, Somesh Jha

What Do We Mean by Generalization in Federated Learning?

Honglin Yuan*, Warren Morningstar, Lin Ning, Karan Singhal

ViDT: An Efficient and Effective Fully Transformer-Based Object Detector

Hwanjun Song, Deqing Sun, Sanghyuk Chun, Varun Jampani, Dongyoon Han, Byeongho Heo, Wonjae Kim, Ming-Hsuan Yang

Measuring CLEVRness: Black-Box Testing of Visual Reasoning Models

Spyridon Mouselinos, Henryk Michalewski, Mateusz Malinowski

Wisdom of Committees: An Overlooked Approach To Faster and More Accurate Models (see the blog post)

Xiaofang Wang, Dan Kondratyuk, Eric Christiansen, Kris M. Kitani, Yair Alon (prev. Movshovitz-Attias), Elad Eban

Leveraging Unlabeled Data to Predict Out-of-Distribution Performance

Saurabh Garg*, Sivaraman Balakrishnan, Zachary C. Lipton, Behnam Neyshabur, Hanie Sedghi

Data-Driven Offline Optimization for Architecting Hardware Accelerators (see the blog post)

Aviral Kumar, Amir Yazdanbakhsh, Milad Hashemi, Kevin Swersky, Sergey Levine

Diurnal or Nocturnal? Federated Learning of Multi-branch Networks from Periodically Shifting Distributions

Chen Zhu*, Zheng Xu, Mingqing Chen, Jakub Konecny, Andrew Hard, Tom Goldstein

Policy Gradients Incorporating the Future

David Venuto, Elaine Lau, Doina Precup, Ofir Nachum

Discrete Representations Strengthen Vision Transformer Robustness

Chengzhi Mao*, Lu Jiang, Mostafa Dehghani, Carl Vondrick, Rahul Sukthankar, Irfan Essa

SimVLM: Simple Visual Language Model Pretraining with Weak Supervision (see the blog post)

Zirui Wang, Jiahui Yu, Adams Wei Yu, Zihang Dai, Yulia Tsvetkov, Yuan Cao

Neural Stochastic Dual Dynamic Programming

Hanjun Dai, Yuan Xue, Zia Syed, Dale Schuurmans, Bo Dai

PolyLoss: A Polynomial Expansion Perspective of Classification Loss Functions

Zhaoqi Leng, Mingxing Tan, Chenxi Liu, Ekin Dogus Cubuk, Xiaojie Shi, Shuyang Cheng, Dragomir Anguelov

Information Prioritization Through Empowerment in Visual Model-Based RL

Homanga Bharadhwaj*, Mohammad Babaeizadeh, Dumitru Erhan, Sergey Levine

Value Function Spaces: Skill-Centric State Abstractions for Long-Horizon Reasoning

Dhruv Shah, Peng Xu, Yao Lu, Ted Xiao, Alexander Toshev, Sergey Levine, Brian Ichter

Understanding and Leveraging Overparameterization in Recursive Value Estimation

Chenjun Xiao, Bo Dai, Jincheng Mei, Oscar Ramirez, Ramki Gummadi, Chris Harris, Dale Schuurmans

The Efficiency Misnomer

Mostafa Dehghani, Anurag Arnab, Lucas Beyer, Ashish Vaswani, Yi Tay

On the Role of Population Heterogeneity in Emergent Communication

Mathieu Rita, Florian Strub, Jean-Bastien Grill, Olivier Pietquin, Emmanuel Dupoux

No One Representation to Rule Them All: Overlapping Features of Training Methods

Raphael Gontijo-Lopes, Yann Dauphin, Ekin D. Cubuk

Data Poisoning Won’t Save You From Facial Recognition

Evani Radiya-Dixit, Sanghyun Hong, Nicholas Carlini, Florian Tramèr

AdaMatch: A Unified Approach to Semi-Supervised Learning and Domain Adaptation

David Berthelot, Rebecca Roelofs, Kihyuk Sohn, Nicholas Carlini, Alex Kurakin

Maximum Entropy RL (Provably) Solves Some Robust RL Problems

Benjamin Eysenbach, Sergey Levine

Auto-scaling Vision Transformers Without Training

Wuyang Chen, Wei Huang, Xianzhi Du, Xiaodan Song, Zhangyang Wang, Denny Zhou

Optimizing Few-Step Diffusion Samplers by Gradient Descent

Daniel Watson, William Chan, Jonathan Ho, Mohammad Norouzi

ExT5: Towards Extreme Multi-Task Scaling for Transfer Learning

Vamsi Aribandi, Yi Tay, Tal Schuster, Jinfeng Rao, Huaixiu Steven Zheng, Sanket Vaibhav Mehta, Honglei Zhuang, Vinh Q. Tran, Dara Bahri, Jianmo Ni, Jai Gupta, Kai Hui, Sebastian Ruder, Donald Metzler

Fortuitous Forgetting in Connectionist Networks

Hattie Zhou, Ankit Vani, Hugo Larochelle, Aaron Courville

Evading Adversarial Example Detection Defenses with Orthogonal Projected Gradient Descent

Oliver Bryniarski, Nabeel Hingun, Pedro Pachuca, Vincent Wang, Nicholas Carlini

Benchmarking the Spectrum of Agent Capabilities

Danijar Hafner

Charformer: Fast Character Transformers via Gradient-Based Subword Tokenization

Yi Tay, Vinh Q. Tran, Sebastian Ruder, Jai Gupta, Hyung Won Chung, Dara Bahri, Zhen Qin, Simon Baumgartner, Cong Yu, Donald Metzler

Mention Memory: Incorporating Textual Knowledge into Transformers Through Entity Mention Attention

Michiel de Jong, Yury Zemlyanskiy, Nicholas FitzGerald, Fei Sha, William Cohen

Eigencurve: Optimal Learning Rate Schedule for SGD on Quadratic Objectives with Skewed Hessian Spectrums

Rui Pan, Haishan Ye, Tong Zhang

Scale Efficiently: Insights from Pre-training and Fine-Tuning Transformers

Yi Tay, Mostafa Dehghani, Jinfeng Rao, William Fedus, Samira Abnar, Hyung Won Chung, Sharan Narang, Dani Yogatama, Ashish Vaswani, Donald Metzler

Omni-Scale CNNs: A Simple and Effective Kernel Size Configuration for Time Series Classification

Wensi Tang, Guodong Long, Lu Liu, Tianyi Zhou, Michael Blumenstein, Jing Jiang

Embedded-Model Flows: Combining the Inductive Biases of Model-Free Deep Learning and Explicit Probabilistic Modeling

Gianluigi Silvestri, Emily Fertig, Dave Moore, Luca Ambrogioni

Post Hoc Explanations May be Ineffective for Detecting Unknown Spurious Correlation

Julius Adebayo, Michael Muelly, Hal Abelson, Been Kim

Axiomatic Explanations for Visual Search, Retrieval, and Similarity Learning

Mark Hamilton, Scott Lundberg, Stephanie Fu, Lei Zhang, William T. Freeman

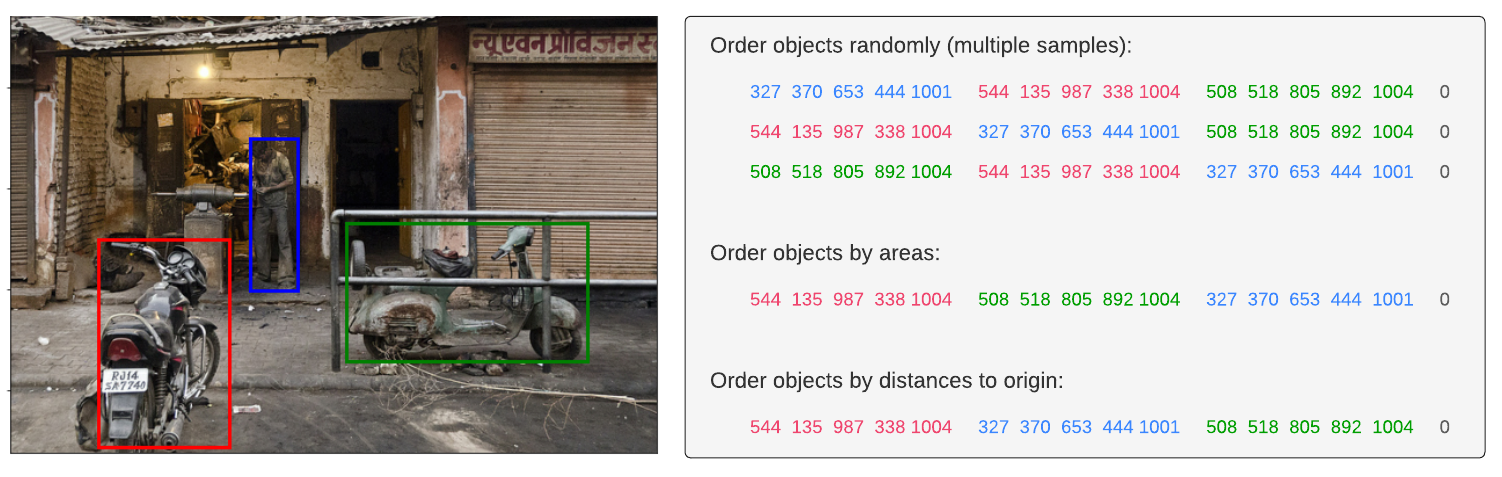

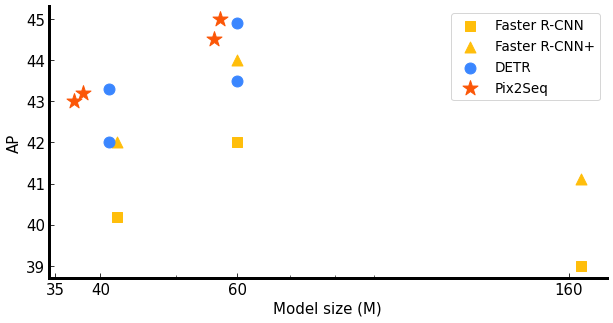

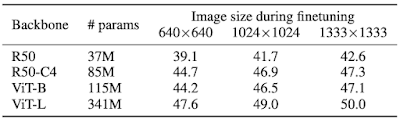

Pix2seq: A Language Modeling Framework for Object Detection (see the blog post)

Ting Chen, Saurabh Saxena, Lala Li, David J. Fleet, Geoffrey Hinton

Mirror Descent Policy Optimization

Manan Tomar, Lior Shani, Yonathan Efroni, Mohammad Ghavamzadeh

CodeTrek: Flexible Modeling of Code Using an Extensible Relational Representation

Pardis Pashakhanloo, Aaditya Naik, Yuepeng Wang, Hanjun Dai, Petros Maniatis, Mayur Naik

Conditional Object-Centric Learning From Video

Thomas Kipf, Gamaleldin F. Elsayed, Aravindh Mahendran, Austin Stone, Sara Sabour, Georg Heigold, Rico Jonschkowski, Alexey Dosovitskiy, Klaus Greff

A Loss Curvature Perspective on Training Instabilities of Deep Learning Models

Justin Gilmer, Behrooz Ghorbani, Ankush Garg, Sneha Kudugunta, Behnam Neyshabur, David Cardoze, George E. Dahl, Zack Nado, Orhan Firat

Autonomous Reinforcement Learning: Formalism and Benchmarking

Archit Sharma, Kelvin Xu, Nikhil Sardana, Abhishek Gupta, Karol Hausman, Sergey Levine, Chelsea Finn

TRAIL: Near-Optimal Imitation Learning with Suboptimal Data

Mengjiao Yang, Sergey Levine, Ofir Nachum

Minimax Optimization With Smooth Algorithmic Adversaries

Tanner Fiez, Lillian J. Ratliff, Chi Jin, Praneeth Netrapalli

Unsupervised Semantic Segmentation by Distilling Feature Correspondences

Mark Hamilton, Zhoutong Zhang, Bharath Hariharan, Noah Snavely, William T. Freeman

InfinityGAN: Towards Infinite-Pixel Image Synthesis

Chieh Hubert Lin, Hsin-Ying Lee, Yen-Chi Cheng, Sergey Tulyakov, Ming-Hsuan Yang

Shuffle Private Stochastic Convex Optimization

Albert Cheu, Matthew Joseph, Jieming Mao, Binghui Peng

Hybrid Random Features

Krzysztof Choromanski, Haoxian Chen, Han Lin, Yuanzhe Ma, Arijit Sehanobish, Deepali Jain, Michael S. Ryoo, Jake Varley, Andy Zeng, Valerii Likhosherstov, Dmitry Kalashnikov, Vikas Sindhwani, Adrian Weller

Vector-Quantized Image Modeling With Improved VQGAN

Jiahui Yu, Xin Li, Jing Yu Koh, Han Zhang, Ruoming Pang, James Qin, Alexander Ku, Yuanzhong Xu, Jason Baldridge, Yonghui Wu

On the Benefits of Maximum Likelihood Estimation for Regression and Forecasting

Pranjal Awasthi, Abhimanyu Das, Rajat Sen, Ananda Theertha Suresh

Surrogate Gap Minimization Improves Sharpness-Aware Training

Juntang Zhuang*, Boqing Gong, Liangzhe Yuan, Yin Cui, Hartwig Adam, Nicha C. Dvornek, Sekhar Tatikonda, James S. Duncan, Ting Liu

Online Target Q-learning With Reverse Experience Replay: Efficiently Finding the Optimal Policy for Linear MDPs

Naman Agarwal, Prateek Jain, Dheeraj Nagaraj, Praneeth Netrapalli, Syomantak Chaudhuri

CrossBeam: Learning to Search in Bottom-Up Program Synthesis

Kensen Shi, Hanjun Dai, Kevin Ellis, Charles Sutton

Workshops

Workshop on the Elements of Reasoning: Objects, Structure, and Causality (OSC)

Organizers include: Klaus Greff, Thomas Kipf

Workshop on Agent Learning in Open-Endedness

Organizers include: Krishna Srinivasan

Speakers include: Natasha Jaques, Danijar Hafner

Wiki-M3L: Wikipedia and Multi-modal & Multi-lingual Research

Organizers include: Klaus Greff, Thomas Kipf

Speakers include: Jason Baldridge, Tom Duerig

Setting Up ML Evaluation Standards to Accelerate Progress

Organizers include: Rishabh Agarwal

Speakers and Panelists include: Katherine Heller, Sara Hooker, Corinna Cortes

From Cells to Societies: Collective Learning Across Scales

Organizers include: Mark Sandler, Max Vladymyrov

Speakers include: Blaise Aguera y Arcas, Alexander Mordvintsev, Michael Mozer

Emergent Communication: New Frontiers

Speakers include: Natasha Jaques

Deep Learning for Code

Organizers include: Jonathan Herzig

GroundedML: Anchoring Machine Learning in Classical Algorithmic Theory

Speakers include: Gintare Karolina Dziugaite

Generalizable Policy Learning in the Physical World

Speakers and Panelists include: Mrinal Kalakrishnan

CoSubmitting Summer (CSS) Workshop

Organizers include: Rosanne Liu

*Work done while at Google.