Visual Transfer Learning for Robotic Manipulation

The idea that robots can learn to directly perceive the affordances of actions on objects (i.e., what the robot can or cannot do with an object) is called affordance-based manipulation, explored in research on learning complex vision-based manipulation skills including grasping, pushing, and throwing. In these systems, affordances are represented as dense pixel-wise action-value maps that estimate how good it is for the robot to execute one of several predefined motions at each location. For example, given an RGB-D image, an affordance-based grasping model might infer grasping affordances per pixel with a convolutional neural network. The grasping affordance value at each pixel would represent the success rate of performing a corresponding motion primitive (e.g. grasping action), which would then be executed by the robot at the position with the highest value.
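As a minimal sketch of the selection step described above, the following picks the pixel with the highest predicted value from a dense affordance map. The map is a hand-written toy grid and `best_action_pixel` is a hypothetical helper name; in a real system the values would come from the network's output for an RGB-D image.

```python
from typing import List, Tuple


def best_action_pixel(affordance_map: List[List[float]]) -> Tuple[int, int, float]:
    """Return (row, col, value) of the highest-valued pixel in a dense map."""
    best = (0, 0, affordance_map[0][0])
    for r, row in enumerate(affordance_map):
        for c, value in enumerate(row):
            if value > best[2]:
                best = (r, c, value)
    return best


# Toy 3x4 map of predicted grasp success rates (illustrative values only).
toy_map = [
    [0.10, 0.20, 0.05, 0.00],
    [0.15, 0.80, 0.30, 0.10],
    [0.05, 0.25, 0.60, 0.20],
]

row, col, value = best_action_pixel(toy_map)
print(f"execute grasp at pixel ({row}, {col}) with predicted success {value:.2f}")
```

With several motion primitives, one map per primitive could be scanned the same way and the overall maximum chosen.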

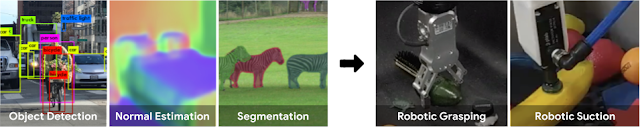

Overview of affordance-based manipulation.

In “Learning to See before Learning to Act: Visual Pre-training for Manipulation”, a collaboration with researchers from MIT to be presented at ICRA 2020, we investigate whether existing pre-trained deep learning visual feature representations can improve the efficiency of learning robotic manipulation tasks, like grasping objects. By studying how we can intelligently transfer neural network weights between vision models and affordance-based manipulation models, we can evaluate how different visual feature representations benefit the exploration process and enable robots to quickly acquire manipulation skills using different grippers. We present practical techniques to pre-train deep learning models, which enable robots to learn to pick and grasp arbitrary objects in unstructured settings in less than 10 minutes of trial and error.

Affordance-based manipulation is essentially a way to reframe a manipulation task as a computer vision task, but rather than mapping pixels to object labels, we instead associate pixels with the value of actions. Because the structures of computer vision models and affordance models are so similar, one can leverage techniques from transfer learning in computer vision to enable affordance models to learn faster with less data. This approach re-purposes pre-trained neural network weights (i.e., feature representations) learned from large-scale vision datasets to initialize network weights of affordance models for robotic grasping.

In computer vision, many deep model architectures are composed of two parts: a “backbone” and a “head”. The backbone consists of weights that are responsible for early-stage image processing, e.g., filtering edges, detecting corners, and distinguishing between colors, while the head consists of network weights that are used in later-stage processing, such as identifying high-level features, recognizing contextual cues, and executing spatial reasoning. The head is often much smaller than the backbone and is also more task specific. Hence, it is common practice in transfer learning to pre-train a backbone (e.g., ResNet) and share its weights between tasks, while randomly initializing the weights of the model head for each new task.
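The initialization recipe can be sketched roughly as follows. This is a framework-free illustration, not the code used in the paper: weights are plain lists keyed by parameter name, and the names `init_from_pretrained`, `backbone.conv1`, and `head.mask` are hypothetical. Parameters whose names match a shared prefix are copied from the pre-trained model, and everything else is randomly initialized.

```python
import random
from typing import Dict, List

Weights = Dict[str, List[float]]


def init_from_pretrained(pretrained: Weights, target_shapes: Dict[str, int],
                         shared_prefixes: List[str], seed: int = 0) -> Weights:
    """Copy parameters under a shared prefix; randomly initialize the rest."""
    rng = random.Random(seed)
    weights: Weights = {}
    for name, size in target_shapes.items():
        shared = any(name.startswith(prefix) for prefix in shared_prefixes)
        if shared and name in pretrained and len(pretrained[name]) == size:
            weights[name] = list(pretrained[name])  # transferred weights
        else:
            # Fresh small random values for task-specific parameters.
            weights[name] = [rng.gauss(0.0, 0.01) for _ in range(size)]
    return weights


# Toy "pre-trained vision model" with one backbone and one head parameter.
pretrained = {"backbone.conv1": [0.5, -0.2, 0.1], "head.mask": [1.0, 2.0]}
target_shapes = {"backbone.conv1": 3, "head.mask": 2}

backbone_only = init_from_pretrained(pretrained, target_shapes, ["backbone."])
backbone_and_head = init_from_pretrained(pretrained, target_shapes, ["backbone.", "head."])
print(backbone_only["head.mask"])      # freshly sampled values
print(backbone_and_head["head.mask"])  # copied from the pre-trained model
```

The prefix list is the only thing that changes between sharing the backbone alone and sharing more of the model.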

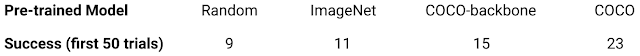

Following this recipe, we initialized our affordance-based manipulation models with backbones based on the ResNet-50 architecture and pre-trained on different vision tasks, including a classification model from ImageNet and a segmentation model from COCO. With different initializations, the robot was then tasked with learning to grasp a diverse set of objects through trial and error.

Initially, with only the pre-trained backbone transferred, we did not see any significant gains in performance compared with training from scratch – grasping success rates on training objects were only able to rise to 77% after 1,000 trial and error grasp attempts, outperforming training from scratch by 2%. However, upon transferring network weights from both the backbone and the head of the pre-trained COCO vision model, we saw a substantial improvement in training speed – grasping success rates reached 73% in just 500 trial and error grasp attempts, and jumped to 86% by 1,000 attempts. In addition, we tested our model on new objects unseen during training and found that models with the pre-trained backbone from COCO generalize better. The grasping success rates reach 83% with the pre-trained backbone alone and further improve to 90% with both the pre-trained backbone and head, outperforming the 46% reached by a model trained from scratch.

In our experiments with the grasping robot, we observed that the distribution of successful grasps versus failures in the generated datasets was far more balanced when network weights from both the backbone and head of pre-trained vision models were transferred to the affordance models, as opposed to only transferring the backbone.

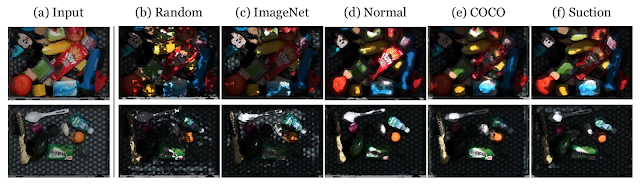

To better understand this, we visualize the neural activations that are triggered by different pre-trained models and a converged affordance model trained from scratch using a suction gripper. Interestingly, we find that the intermediate network representations learned from the head of vision models used for segmentation from the COCO dataset activate on objects in ways that are similar to the converged affordance model. This aligns with the idea that transferring as much of the vision model as possible (both backbone and head) can lead to more object-centric exploration by leveraging model weights that are better at picking up visual features and localizing objects.

Affordances predicted by different models from images of cluttered objects (a). (b) Random refers to a randomly initialized model. (c) ImageNet is a model with backbone pre-trained on ImageNet and a randomly initialized head. (d) Normal refers to a model pre-trained to detect pixels with surface normals close to the anti-gravity axis. (e) COCO is the modified segmentation model (MaskRCNN) trained on the COCO dataset. (f) Suction is a converged model learned from robot-environment interactions using the suction gripper.

Many of the methods that we use today for end-to-end robot learning are effectively the same as those being used for computer vision tasks. Our work here on visual pre-training illuminates this connection and demonstrates that it is possible to leverage techniques from visual pre-training to improve the learning efficiency of affordance-based manipulation applied to robotic grasping tasks. While our experiments point to a better understanding of deep learning for robots, there are still many interesting questions that have yet to be explored. For example, how do we leverage large-scale pre-training for additional modes of sensing (e.g. force-torque or tactile)? How do we extend these pre-training techniques towards more complex manipulation tasks that may not be as object-centric as grasping? These areas are promising directions for future research.

You can learn more about this work in the summary video below.

Acknowledgements

This research was done by Yen-Chen Lin (Ph.D. student at MIT), Andy Zeng, Shuran Song, Phillip Isola (faculty at MIT), and Tsung-Yi Lin, with special thanks to Johnny Lee and Ivan Krasin for valuable managerial support, Chad Richards for helpful feedback on writing, and Jonathan Thompson for fruitful technical discussions.

Source: Google AI Blog

Helping educators and students stay connected

As many educational institutions around the globe are undergoing, extending or planning closures due to COVID-19, half of the world’s student population is unable to attend school. Educators face the challenge of teaching remotely at an unprecedented scale, and in some cases, for the first time.

In the last week we’ve created new distance learning resources including a collection of training materials, a list of useful apps, a new Learn@Home YouTube resource designed for families, as well as a series of blog posts and webinars. We’ve also made our premium Meet features free for schools through July 1, 2020. This includes the ability to have 250 people in a call together, record lessons and livestream. And thanks to feedback from educators, we’re also constantly making product improvements, like these new educator controls for Hangouts Meet.

We’ve continued to listen to the challenges teachers are facing during these uncertain times and today we’re announcing two new resources to help teachers and students stay connected.

Teach from Home

Teach From Home is a central hub of information, tips, training and tools from across Google for Education to help teachers keep teaching, even when they aren’t in the classroom.

To start, we’re providing an overview of how to get started with distance learning—for example how to teach online, make lessons accessible to students, and collaborate with other educators.

The resource will continue to evolve. We've built the hub with the support and cooperation of UNESCO Institute for Information Technologies in Education, which is also working with other education partners to respond to this emergency. As we continue receiving feedback from teachers and partners on what’s most helpful, we’ll continue to build and improve this. Teach From Home is currently available in English, with downloadable toolkits available in Danish, German, Spanish, French, Italian, Arabic and Polish, with additional languages coming soon.

Supporting organizations who are helping to reduce barriers to distance learning

When facing these challenges, we’re at our best when we respond as a community. As part of our $50 million Google.org COVID-19 response, we’re announcing a $10 million Distance Learning Fund to support organizations around the globe that help educators access the resources they need to provide high quality learning opportunities to children, particularly those from underserved communities.

The Google.org Distance Learning Fund’s first grant will be $1 million to help Khan Academy provide remote learning opportunities to students affected by COVID-19 related school closures. Along with the grant, Google volunteers are planning to help Khan Academy provide educator resources in more than 15 languages, and through their platform, they'll reach over 18 million learners a month from communities around the globe. We hope to announce additional organizations soon.

We’re inspired by the ideas and resources educational leaders are sharing with each other during this time. To continue the conversation, join a Google Educator Group, share your distance learning tips and tricks, and connect with us on Twitter and Facebook. We hope you’ll keep passing along your ideas and feedback so we can continue to evolve and build this together.

Source: The Official Google Blog

COVID-19: Resources to help people learn on YouTube

We’re fortunate to have an incredible community of learning creators on YouTube. From CrashCourse to Physics Girl, the EduTuber community has been helping people around the world learn and keep up with their studies. We wanted to take a moment to provide an update around how we’re supporting their efforts.

Learn@Home

Starting today, we’re launching Learn@Home, a website with learning resources and content for families. From Khan Academy to Sesame Street to code.org, Learn@Home will spotlight content across math, science, history and arts from popular learning channels. We’ll also have a dedicated section for families with kids under 13, where parents and kids can watch videos together that encourage kids' creativity, curiosity, playfulness and offline activities, such as how to build a model volcano. The website is launching today in English and will continue to evolve. We’re working to expand to more languages in the coming days, such as Italian, French, Korean, Spanish, Japanese and more.

YouTube Learning Destination

The YouTube Learning destination is designed to inspire and help students with high-quality learning content on YouTube. The destination regularly features supplemental learning content, celebrates learning moments, and shares tips for learners. The destination is available in English today and will expand to Italian, French, Korean, Spanish, Japanese and more in the coming days. You can find the Learning destination at youtube.com/learning or in the brand new Explore tab on the YouTube app.

#StudyWithMe

As people #StayHome to work and study, it can feel like an isolating time. We’ve been inspired by the #StudyWithMe movement, where students share their study experiences with each other online. Whether reading or listening to music, it helps to feel less alone when you study together.

YouTube Kids

YouTube Kids provides kids under 13 with a safer environment where they can explore their interests and curiosity on their own while giving parents the tools to customize the experience. The app features a range of timely content, such as healthy habits, indoor fun and learning.

We understand this is an unprecedented situation facing families across the globe. We’re humbled by the incredible EduTuber community that’s sharing knowledge with the world, and we hope you find these resources helpful in these challenging times.

Malik Ducard, VP of Content Partnerships, Learning, Social Impact, Family, Film & TV

Source: YouTube Blog

Google and Binomial partner to open source high quality basis universal

Basis Universal allows you to have state of the art web performance with your images, keeping images compressed even on the GPU. Older systems like JPEG and PNG may look small in storage size, but once they hit the GPU they are processed as uncompressed data! The original Basis Universal codec created images that were 6-8 times smaller than JPEG on the GPU while maintaining a similar storage size.

Today we release a high quality Basis Universal codec that utilizes the highest quality formats modern GPUs support, finally bringing the web up to modern GPU texture standards—with cross platform support. The textures are larger in storage size and GPU compressed size, but are still 3-4 times smaller than sending a JPEG or PNG file to be processed on the GPU, and can transcode to a lower quality format for older GPUs.
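The size ratios quoted above are consistent with simple per-pixel arithmetic, sketched below. The bit depths are assumptions, since the post does not say which formats the figures refer to: 24 or 32 bits per pixel for an uncompressed image on the GPU, 4 bits per pixel for block formats such as ETC1 or BC1, and 8 bits per pixel for formats such as BC7 or ASTC 4x4. The 1024x1024 size and the `texture_bytes` helper are illustrative only.

```python
def texture_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Size in bytes of a single-level texture at the given bit depth."""
    return width * height * bits_per_pixel // 8


WIDTH = HEIGHT = 1024

# Assumed bit depths; the post does not state which formats it compares.
uncompressed_rgb = texture_bytes(WIDTH, HEIGHT, 24)
uncompressed_rgba = texture_bytes(WIDTH, HEIGHT, 32)
compressed_4bpp = texture_bytes(WIDTH, HEIGHT, 4)
compressed_8bpp = texture_bytes(WIDTH, HEIGHT, 8)

for label, compressed in (("4 bpp", compressed_4bpp), ("8 bpp", compressed_8bpp)):
    low = uncompressed_rgb // compressed
    high = uncompressed_rgba // compressed
    print(f"{label}: {low}x to {high}x smaller than uncompressed")
```

Under these assumptions the 4 bpp case gives a 6x to 8x reduction and the 8 bpp case gives 3x to 4x, which matches the ranges in the text.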

Original Image by Erol Ahmed from Unsplash.com

Visual comparison of Basis Universal High Quality

Best of all, we are actively working on standardizing Basis Universal with the Khronos Group.

Since our original release in Summer 2019 we’ve seen widespread adoption of Basis Universal in engines like three.js, Babylon.js, Godot, and more, changing what is possible for people to create on the web. Now that a high quality option is available, we expect to see even more adoption and groundbreaking applications created with it.

Please feel free to join our community on GitHub and check out the full demo there as well. You can also follow standardization efforts via Khronos Group events and forums.

Source: Google Open Source Blog

In Mexico, one Googler gives girls their “tümü” moment

When a butterfly comes out of its cocoon, it uses the most fragile part of its body, its wings, to break free. In the Otomi dialect, which is spoken in the central region of Mexico, this magical moment is called Tümü. So when Paola Escalante and her co-founder decided to create an organization to help support young women, they decided that Tümü was a fitting name for it.

“The idea was to create content that promotes determination, self-esteem and assertiveness during a moment in girls’ lives that's constantly changing,” Paola Escalante, Head of Google Mexico’s creative consulting branch, called the Zoo, says. The pre-teen and teen years are challenging, and in recent years, social media has made this time even more complicated. “Adolescence has always been the same, what has changed is technology,” says Paola. “With so much access to information, decision making can be overwhelming and social media is setting new standards not just regarding beauty, but also lifestyle and accomplishments. There’s a new layer of vulnerability that grows at a very fast pace.”

Tümü began as an after-work project that Paola started about two years ago. She and her co-founder, Zarina Rivera, had noticed that instead of reaching out to family and friends with their questions or problems, more and more often girls turn to internet communities. So they created a platform where girls can find content as well as ask questions and get answers from experts in a friendly way, and hopefully navigate what can be a complicated time more smoothly.

Paola never imagined how big Tümü would become or how much responsibility she’d feel for the girls using it. Some of them ask questions about eating disorders, or about being pressured into sex or into sending intimate photos. Some girls ask about depression. Sometimes, their mothers even turn to Tümü’s experts for answers.

Tümü has become more than just an online resource. The organization also hosts workshops and small events, which Paola hopes they’ll be able to offer to more communities in the country, and bring in more speakers to talk to the girls. At Tümü’s first offline event, Paola invited 19-year-old astronaut Alyssa Carson to speak. “That day I cried so much. I couldn’t believe that more than a thousand girls had gathered to hear her speak. And then I couldn’t believe that they had stayed for all the activities,” Paola says. “We gave them a journal and the girls were filling it willingly, writing down their reflections, how they saw themselves in five years, what they wanted to learn.”

The way Paola sees it, what girls need has less to do with empowerment and everything to do with being given the space to get to know themselves and their self-worth. “As grown-up women, we have different movements focused on women empowerment, and we need them because we are a generation of women who need to regain the power that culture has taken away,” she explains. “But younger generations have that power. They don’t need to be empowered—they need to be pushed to believe in themselves and figure out how to become the best version of themselves.” And, she says, young women should be given the opportunity to realize what they want before being pushed to get it. “I also don’t think that the message for them should be achieving their dreams. Very few girls know what their dreams are, and they don’t need the added pressure to have one and go after it. In order to figure out what they want they need to be happy with who they are now and understand themselves.”

Paola is proud of the work she’s doing through Tümü because she knows how important these kinds of resources are for young women. “I would’ve liked to have a helping hand when I was that age. It took me a while to have my ‘tümü moment’ as I call it, I don’t think I had it until I was 30,” says Paola. “I want to help build a better world for future generations.”

Source: The Official Google Blog

Hangouts Meet improvements for remote learning

What’s changing

We recently extended Hangouts Meet premium features to all G Suite customers through July 1, 2020 to support employees, educators, and students as they move to work and learn remotely. To improve the remote learning experience for teachers and students using Hangouts Meet, we’re making several improvements:

- Only meeting creators and calendar owners can mute or remove other participants in a meeting

- Meeting participants will not be able to re-join nicknamed meetings once the final participant has left

Who’s impacted

Admins and end users

Why it’s important

We hope these added controls and improvements will enhance Hangouts Meet for our Education users.

Additional details

Improved meeting control features: 'mute' and 'remove'

For our Education customers, only the meeting creator, Calendar event owner, or person who creates a meeting on an in-room hardware device will be allowed to mute or remove other participants in a meeting. This ensures a teacher, as a meeting creator or Calendar event owner, can't be removed or muted by students participating in the event.

Check out our Help Center to learn how to assign meeting creation privileges to teachers and staff members. We recommend that you assign these privileges to the organizational units (OUs) that contain your faculty and staff members.

Improved teacher controls for nicknamed meetings

Participants will not be able to re-join a meeting after the final participant has left if:

- The meeting was created using a short link like g.co/meet/nickname

- The meeting was created at meet.google.com by entering a meeting nickname in the "Join or start a meeting” field

- The meeting was created in the Meet app by entering a nickname in the “Meeting code” field

We’ve also heard from some admins that they don’t want students to be able to create meetings. To prevent this, use this Help Center article to assign meeting creation privileges to teachers and staff only.

Additional resources for getting started with Hangouts Meet

Visit our Help Center to learn more about using Meet with low bandwidth and tips for training teachers and students.

Getting started

Admins: Visit the Help Center to learn more about assigning meeting creation privileges to users in your domain.

End Users: No action is required.

Rollout pace

- Rapid and Scheduled Release domains: These features have started rolling out and should be available to all G Suite for Education and G Suite Enterprise for Education customers within 2-3 weeks.

Availability

- Available to G Suite for Education and G Suite Enterprise for Education customers

- Not available to G Suite Basic, G Suite Enterprise, G Suite Business and G Suite for Nonprofits customers

Resources

- G Suite Updates Blog: Extending Hangouts Meet premium features to all G Suite customers through July 1, 2020

- Google Help: Live stream a video meeting

- Google Help: Record a video meeting

- Google Help: Start a video meeting

- Google Help: Troubleshoot issues with Hangouts Meet

- Google Help: Use captions in a video meeting

- Google Help: Accept and present audience questions

- G Suite Admin Help: Set up Meet for distance learning

- G Suite Admin Help: Prepare your network

- G Suite Admin Help: Turn on Meet video calling

Source: G Suite Updates Blog

Pigweed: A collection of embedded libraries

Getting Started with Pigweed

See Pigweed in action

- Setup: Get started faster with simplified setup via a virtual environment with all required tools

- Development: Accelerated edit-compile-flash-test cycles with watchers and distributed testing

- Code Submission: Pre-configured code formatting and integrated presubmit checks

SETUP

A classic challenge in the embedded space is reducing the time from running git clone to having a binary executing on a device. Oftentimes, an entire suite of tools is needed for non-trivial production embedded projects. For example, your project likely needs:

- A C++ compiler for your target device, and also for your host

- A build system (or three); for example, GN, Ninja, CMake, Bazel

- A code formatting program like clang-format

- A debugger like OpenOCD to flash and debug your embedded device

- A known Python version with known modules installed for scripting

- … and so on

DEVELOPMENT

In typical embedded development, adding even a small change involves the following additional manual steps:

- Re-building the image

- Flashing the image to a device

- Ensuring that the change works as expected

- Verifying that existing tests continue to pass

Pigweed’s pw_watch module solves this inefficiency directly, providing a watcher that automatically invokes a build when a file is saved, and also runs the specific tests affected by the code changes. This drastically reduces the edit-compile-flash-test cycle for changes.

In the demo above, the pw_watch module (on the right) does the following:

- Detects source file changes

- Builds the affected libraries, tests, and binaries

- Flashes the tests to the device (in this case a STM32F429i Discovery board)

- Runs the specific unit tests
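The watch loop described above can be approximated with a simple polling sketch. This is not how pw_watch is implemented; it is a stdlib-only illustration in which `snapshot`, `changed_files`, and `watch` are hypothetical helper names, and the caller supplies whatever build or test step should run when files change.

```python
import os
import time
from typing import Callable, Dict, List, Optional


def snapshot(root: str) -> Dict[str, int]:
    """Map every file under root to its last-modified time in nanoseconds."""
    mtimes: Dict[str, int] = {}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            mtimes[path] = os.stat(path).st_mtime_ns
    return mtimes


def changed_files(before: Dict[str, int], after: Dict[str, int]) -> List[str]:
    """Files that are new or whose modification time differs."""
    return sorted(path for path, mtime in after.items() if before.get(path) != mtime)


def watch(root: str, on_change: Callable[[List[str]], None],
          poll_seconds: float = 1.0, max_polls: Optional[int] = None) -> None:
    """Poll root and call on_change with the changed paths after each change."""
    previous = snapshot(root)
    polls = 0
    while max_polls is None or polls < max_polls:
        time.sleep(poll_seconds)
        current = snapshot(root)
        changed = changed_files(previous, current)
        if changed:
            on_change(changed)  # e.g. rebuild, flash, and run affected tests
        previous = current
        polls += 1
```

A caller might pass a callback that shells out to the project's build command, for example `watch("src", rebuild)`, where `rebuild` is a function the project defines. Polling is the simplest approach; a production watcher would more likely use operating system file notifications.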

CODE SUBMISSION

When developing code as a part of a team, consistent code is an important part of a healthy codebase. However, setting up linters, configuring code formatting, and adding automated presubmit checks is work that often gets delayed indefinitely.

Pigweed’s pw_presubmit module provides an off-the-shelf integrated suite of linters, based on tools that you’ve probably already used, that are pre-configured for immediate use for microcontroller developers. This means that your project can have linting and automatic formatting presubmit checks from its inception.

And a bunch of other modules

There are many modules in addition to the ones highlighted above...

- pw_tokenizer – A module that converts strings to binary tokens at compile time. This enables logging with dramatically less overhead in flash, RAM, and CPU usage.

- pw_string – Provides the flexibility, ease-of-use, and safety of C++-style string manipulation, but with no dynamic memory allocation and a much smaller binary size impact. Using pw_string in place of the standard C functions eliminates issues related to buffer overflow or missing null terminators.

- pw_bloat – A module to generate memory reports for output binaries empowering developers with information regarding the memory impact of any change.

- pw_unit_test – Unit testing is important and Pigweed offers a portable library that’s broadly compatible with Google Test. Unlike Google Test, pw_unit_test is built on top of embedded friendly primitives; for example, it does not use dynamic memory allocation. Additionally, it is easy to port to new target platforms by implementing the test event handler interface.

- pw_kvs – A key-value-store implementation for flash-backed persistent storage with integrated wear levelling. This is a lightweight alternative to a file system for embedded devices.

- pw_cpu_exception_armv7m – Robust low level hardware fault handler for ARM Cortex-M; the handler even has unit tests written in assembly to verify nested-hardware-fault handling!

- pw_protobuf – An early preview of our wire-format-oriented protocol buffer implementation. This protobuf compiler makes a different set of implementation tradeoffs than the most popular protocol buffer library in this space, nanopb.
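To illustrate the idea behind string tokenization, here is a rough sketch of replacing a log format string with a fixed-size token. It is not pw_tokenizer's algorithm or wire format: Pigweed does this at compile time in C++, whereas this Python sketch computes tokens at run time, uses CRC-32 as a stand-in hash, and handles only 32-bit integer arguments. The names `token_for`, `encode`, and `decode` are hypothetical.

```python
import struct
import zlib
from typing import Dict


def token_for(fmt: str) -> int:
    """32-bit token for a format string (CRC-32 is an illustrative choice)."""
    return zlib.crc32(fmt.encode("utf-8")) & 0xFFFFFFFF


def encode(fmt: str, *args: int) -> bytes:
    """Pack the token and integer arguments as little-endian 32-bit values."""
    return struct.pack(f"<I{len(args)}i", token_for(fmt), *args)


def decode(message: bytes, database: Dict[int, str]) -> str:
    """Look up the token on the host and re-insert the arguments."""
    (token,) = struct.unpack_from("<I", message)
    arg_count = (len(message) - 4) // 4
    args = struct.unpack_from(f"<{arg_count}i", message, 4)
    return database[token] % args


FMT = "Battery at %d percent"
database = {token_for(FMT): FMT}  # kept on the host, not on the device

message = encode(FMT, 87)
print(len(FMT), "bytes of text ->", len(message), "bytes on the wire")
print(decode(message, database))
```

The device only ever stores and sends the token and arguments, while the full strings live in a database on the host, which is where the flash and RAM savings come from.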

Why name the project Pigweed?

Pigweed, also known as amaranth, is a nutritious grain and leafy salad green that is also a rapidly growing weed. When developing the project that eventually became Pigweed, we wanted to find a name that was fun, playful, and reflective of how we saw Pigweed growing. Teams would start out using one module that catches their eye, and after that goes well, they’d quickly start using more.

So far, so good.

What’s next?

We’re continuing to evolve the collection and add new modules. It’s our hope that others in the embedded community find these modules helpful for their projects.

By Keir Mierle and Mohammed Habibulla, on behalf of the Pigweed team