Announcing the Objectron Dataset

The state of the art in machine learning (ML) has achieved exceptional accuracy on many computer vision tasks solely by training models on photos. Building on these successes, advancing 3D object understanding has great potential to power a wider range of applications, such as augmented reality, robotics, autonomy, and image retrieval. For example, earlier this year we released MediaPipe Objectron, a set of real-time 3D object detection models designed for mobile devices that predict objects’ 3D bounding boxes and were trained on a fully annotated, real-world 3D dataset.

Yet, understanding objects in 3D remains challenging because large real-world datasets are scarce compared to those available for 2D tasks (e.g., ImageNet, COCO, and Open Images). To support continued progress in 3D object understanding, the research community needs object-centric video datasets. These datasets capture more of an object’s 3D structure while matching the data format used for many vision tasks (i.e., video or camera streams), which helps with training and benchmarking machine learning models.

Today, we are excited to release the Objectron dataset, a collection of short, object-centric video clips that capture a broader set of common objects from different angles. Each video clip is accompanied by AR session metadata that includes camera poses and sparse point clouds. The data also contain manually annotated 3D bounding boxes for each object, describing the object’s position, orientation, and dimensions. The dataset consists of 15K annotated video clips supplemented with over 4M annotated images, collected from a geo-diverse sample covering 10 countries across five continents.

Example videos in the Objectron dataset.

A 3D Object Detection Solution

Along with the dataset, we are also sharing a 3D object detection solution for four categories of objects: shoes, chairs, mugs, and cameras. These models are released in MediaPipe, Google’s open-source framework for building cross-platform, customizable ML solutions for live and streaming media. MediaPipe also powers on-device, real-time hand, iris, and body pose tracking.

Sample results of the 3D object detection solution running on mobile.

Unlike the previously released single-stage Objectron model, these newest versions use a two-stage architecture. The first stage uses a TensorFlow Object Detection model to find the 2D crop of the object. The second stage uses that image crop to estimate the 3D bounding box. At the same time, it computes the object’s 2D crop for the next frame, so the object detector does not need to run on every frame. The second-stage 3D bounding box predictor runs at 83 FPS on an Adreno 650 mobile GPU.

Diagram of a reference 3D object detection solution.
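The control flow of the two-stage design can be sketched as a simple per-frame loop. This is a minimal illustration under our own assumptions, not MediaPipe’s implementation. detect_2d and predict_3d are hypothetical stand-ins for the first-stage detector and the second-stage 3D box predictor, and crops are plain tuples. The point it shows is that the detector runs only when no crop was carried over from the previous frame.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Optional, Tuple

# Hypothetical 2D crop: (x_min, y_min, x_max, y_max) in normalized image coordinates.
Crop = Tuple[float, float, float, float]


@dataclass
class StageTwoOutput:
    """What the (hypothetical) second-stage model returns for one frame."""
    box_3d: Any                # estimated 3D bounding box for this frame
    next_crop: Optional[Crop]  # crop to use on the next frame; None if tracking is lost


def run_two_stage(
    frames: List[Any],
    detect_2d: Callable[[Any], Optional[Crop]],
    predict_3d: Callable[[Any, Crop], StageTwoOutput],
) -> Tuple[List[Any], int]:
    """Return per-frame 3D boxes (None where nothing was found) and the detector call count."""
    crop: Optional[Crop] = None
    detector_calls = 0
    boxes: List[Any] = []
    for frame in frames:
        if crop is None:
            # Stage 1: run the 2D detector only when no crop was carried over.
            crop = detect_2d(frame)
            detector_calls += 1
            if crop is None:
                boxes.append(None)  # no object found in this frame
                continue
        # Stage 2: estimate the 3D box and predict the crop for the next frame.
        out = predict_3d(frame, crop)
        boxes.append(out.box_3d)
        crop = out.next_crop
    return boxes, detector_calls
```

Suppose the detector always succeeds and the second stage always returns a crop for the next frame. In that case the detector runs once for the whole clip. Each time the second stage loses track and returns no crop, the detector runs again on the following frame.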

Evaluation Metric for 3D Object Detection

Using the ground-truth annotations, we evaluate 3D object detection models with 3D intersection over union (IoU). IoU is a similarity metric commonly used in computer vision that measures how closely predicted bounding boxes match the ground truth.
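Once the volumes are known, the metric itself is simple: IoU = V(A ∩ B) / (V(A) + V(B) - V(A ∩ B)). The sketch below illustrates it for the special case of axis-aligned boxes only, where the intersection is itself a box. It is not the general oriented-box algorithm, and the function names are our own.

```python
from typing import Sequence


def box_volume(min_corner: Sequence[float], max_corner: Sequence[float]) -> float:
    """Volume of an axis-aligned box; an inverted (empty) box has volume 0."""
    vol = 1.0
    for lo, hi in zip(min_corner, max_corner):
        vol *= max(0.0, hi - lo)
    return vol


def iou_3d_axis_aligned(a_min, a_max, b_min, b_max) -> float:
    """3D IoU of two axis-aligned boxes: V(A ∩ B) / (V(A) + V(B) - V(A ∩ B))."""
    # The intersection of two axis-aligned boxes is again an axis-aligned box
    # (possibly empty), bounded by the larger mins and the smaller maxes.
    inter_min = [max(p, q) for p, q in zip(a_min, b_min)]
    inter_max = [min(p, q) for p, q in zip(a_max, b_max)]
    inter = box_volume(inter_min, inter_max)
    union = box_volume(a_min, a_max) + box_volume(b_min, b_max) - inter
    return inter / union if union > 0 else 0.0
```

For two unit cubes offset by half a unit along x, the overlap is 0.5 and the union is 1.5, giving an IoU of 1/3.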

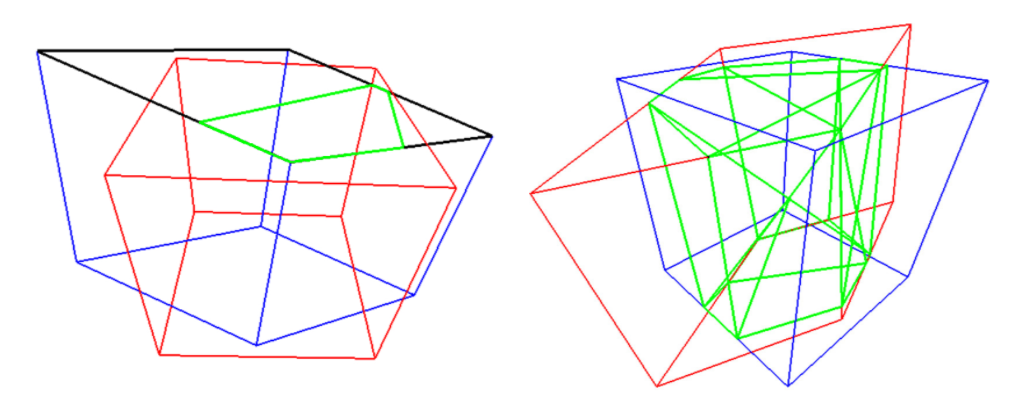

We propose an algorithm for computing accurate 3D IoU values for general, arbitrarily oriented 3D boxes. First, we compute the intersection points between the faces of the two boxes using the Sutherland-Hodgman polygon clipping algorithm, which is similar to frustum culling, a technique used in computer graphics. Next, we compute the volume of the intersection from the convex hull of all the clipped polygons. Finally, we compute the IoU by dividing the intersection volume by the volume of the union of the two boxes, which equals the sum of the two box volumes minus the intersection volume. We are releasing the source code for the evaluation metrics along with the dataset.
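The clipping step can be sketched with one Sutherland-Hodgman pass per bounding plane of the other box. This is a minimal sketch under our own assumptions, not the released evaluation code. Each box is described by a center, three orthonormal axes, and half-sizes, and the helper names are hypothetical. It covers only the clipping. The vertices from both directions (faces of A clipped by B, and faces of B clipped by A) would then go to a convex-hull routine to obtain the intersection volume.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[Vec3, float]  # (normal, offset): the half-space dot(normal, p) <= offset


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def oriented_box_planes(center: Vec3, axes: Sequence[Vec3], half_sizes: Vec3) -> List[Plane]:
    """The six half-spaces whose intersection is the box (axes must be orthonormal)."""
    planes: List[Plane] = []
    for axis, h in zip(axes, half_sizes):
        c = _dot(axis, center)
        planes.append((tuple(axis), c + h))              # face on the +axis side
        planes.append((tuple(-a for a in axis), h - c))  # face on the -axis side
    return planes


def clip_polygon_by_plane(poly: List[Vec3], plane: Plane) -> List[Vec3]:
    """One Sutherland-Hodgman pass: keep the part of `poly` inside the half-space."""
    normal, offset = plane
    out: List[Vec3] = []
    for i, cur in enumerate(poly):
        nxt = poly[(i + 1) % len(poly)]
        d_cur = _dot(normal, cur) - offset
        d_nxt = _dot(normal, nxt) - offset
        if d_cur <= 0:
            out.append(cur)  # current vertex is inside (or on) the plane
        if (d_cur <= 0) != (d_nxt <= 0):
            # The edge crosses the plane: emit the crossing point.
            t = d_cur / (d_cur - d_nxt)
            out.append(tuple(c + t * (n - c) for c, n in zip(cur, nxt)))
    return out


def clip_polygon_by_box(poly: List[Vec3], planes: List[Plane]) -> List[Vec3]:
    """Clip a face polygon against every bounding plane of a convex box in turn."""
    for plane in planes:
        if not poly:
            break  # fully clipped away
        poly = clip_polygon_by_plane(poly, plane)
    return poly
```

As an example, take a 2-by-2 square lying in the z = 0 plane and clip it against a unit cube centered at (1, 1, 0). The result is the 1-by-1 square spanning 0.5 to 1.5 on both x and y.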

Dataset Format

The technical details of the Objectron dataset, including usage and tutorials, are available on the dataset website. The dataset covers nine categories: bikes, books, bottles, cameras, cereal boxes, chairs, cups, laptops, and shoes. It is stored in the objectron bucket on Google Cloud Storage with the following assets:

- The video sequences

- The annotation labels (3D bounding boxes for objects)

- AR metadata (such as camera poses, point clouds, and planar surfaces)

- Processed dataset: a shuffled version of the annotated frames, in tf.Example format for images and tf.SequenceExample format for videos

- Supporting scripts to run evaluation based on the metric described above

- Supporting scripts to load the data into TensorFlow, PyTorch, and JAX and to visualize the dataset, including “Hello World” examples
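The assets live in a Google Cloud Storage bucket named objectron, so individual files can be addressed as gs:// URIs for tools such as gsutil. Assuming the bucket is publicly readable, they can also be reached through GCS’s standard https://storage.googleapis.com/<bucket>/<object> URL form. This is a minimal sketch. The object path in the example comment is a made-up placeholder, so consult the dataset website for the actual layout.

```python
from urllib.parse import quote

BUCKET = "objectron"  # bucket name given in the post


def gs_uri(object_path: str, bucket: str = BUCKET) -> str:
    """gs:// URI for an object, as used by gsutil and the Cloud Storage client libraries."""
    return f"gs://{bucket}/{object_path.lstrip('/')}"


def public_url(object_path: str, bucket: str = BUCKET) -> str:
    """HTTPS URL for an object in a publicly readable bucket (path segments percent-encoded)."""
    return f"https://storage.googleapis.com/{bucket}/{quote(object_path.lstrip('/'))}"


# Example (placeholder path): public_url("v1/some/object")
# returns "https://storage.googleapis.com/objectron/v1/some/object"
```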

With the dataset, we are also open-sourcing a data pipeline to parse the dataset in the popular TensorFlow, PyTorch, and JAX frameworks. Example Colab notebooks are also provided.

By releasing the Objectron dataset, we hope to enable the research community to push the limits of 3D object geometry understanding. We also hope to foster new research and applications, such as view synthesis, improved 3D representation, and unsupervised learning. Stay tuned for future activities and developments by joining our mailing list and visiting our GitHub page.

Acknowledgements

The research described in this post was done by Adel Ahmadyan, Liangkai Zhang, Jianing Wei, Artsiom Ablavatski, Mogan Shieh, Ryan Hickman, Buck Bourdon, Alexander Kanaukou, Chuo-Ling Chang, Matthias Grundmann, and Tom Funkhouser. We thank Aliaksandr Shyrokau, Sviatlana Mialik, Anna Eliseeva, and the annotation team for their high-quality annotations. We would also like to thank Jonathan Huang and Vivek Rathod for their guidance on the TensorFlow Object Detection API.

Source: Google AI Blog

Google Play’s Best of 2020 is back: Vote for your favorites

Google Play’s 2020 Best of awards are back—capping off a year unlike any other. Once again, we want to hear about your favorite content on Google Play.

Starting today until November 23, you can help pick our Users’ Choice winners by voting for your favorites from a shortlist of this year’s most loved and trending apps, games, movies, and books.

To cast your vote, visit this page before submissions close on November 23. Don’t forget to come back when the Users’ Choice winners, along with the rest of the Best of 2020 picks from our Google Play editors, are announced on December 1.

Source: The Official Google Blog

Fixing a Test Hourglass

describe('Terms of service are handled', () => {

describe('Terms of service are handled', () => {

public class FakeTermsOfService implements TermsOfService.Service {

describe('Terms of service are handled', () => {
  it('accepts terms of service', async () => {

Source: Google Testing Blog

Security scorecards for open source projects

Scorecards is one of the first projects released under the OpenSSF since its inception in August 2020. The goal of the Scorecards project is to auto-generate a “security score” for open source projects. The score helps users assess a project’s trust, risk, and security posture for their use case. Scorecards defines an initial set of evaluation criteria that will be used to generate a scorecard for an open source project in a fully automated way. Every scorecard check is actionable. The evaluation metrics include a well-defined security policy, a code review process, and continuous test coverage with fuzzing and static code analysis tools. Each security check returns a boolean along with a confidence score. Over time, Google will improve these metrics with community contributions through the OpenSSF.
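Each check yields a boolean plus a confidence rather than a single number, so a consumer has to decide how to treat low-confidence results. The sketch below is our own illustration, not the Scorecards output schema. The CheckResult fields, the 0-10 confidence scale, and the threshold of 7 are all assumptions, and any check names you pass in are illustrative.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class CheckResult:
    """Hypothetical record for one check: a pass/fail flag plus a confidence."""
    name: str
    passed: bool
    confidence: int  # assumed 0-10 scale for this sketch


def summarize(results: List[CheckResult], min_confidence: int = 7) -> Dict[str, List[str]]:
    """Split checks into confident passes, confident failures, and inconclusive ones."""
    summary: Dict[str, List[str]] = {"pass": [], "fail": [], "inconclusive": []}
    for r in results:
        if r.confidence < min_confidence:
            summary["inconclusive"].append(r.name)  # too uncertain to act on
        elif r.passed:
            summary["pass"].append(r.name)
        else:
            summary["fail"].append(r.name)
    return summary
```

Treating low-confidence results as inconclusive, instead of as passes or failures, keeps an uncertain automated check from silently raising or lowering trust in a project.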

Source: Google Open Source Blog

Meet the Googlers breaking down language barriers for migrants

Googler Ariel Koren was at the U.S.-Mexico border in 2018 when more than 7,000 people from Central America were arriving in the area. Ariel, who speaks nine languages, was there serving as an interpreter for asylum seekers fighting their cases.

Ariel, who leads Marketing for Google for Education in Latin America, knew language skills would continue to be an essential resource for migrants and refugees. She decided to team up with fellow Googler Fernanda Montes de Oca, who is also multilingual and speaks four languages. “We knew that our language skills are only valuable to the extent that we are using them actively to mobilize for others,” says Fernanda. The two began working to create a network of volunteer translators, which they eventually called Respond Crisis Translation.

In addition to her job leading Google for Education Ecosystems in Google Mexico, Fernanda is responsible for recruiting and training Respond’s volunteer translators. Originally, the group saw an average of five new volunteers sign up each week; now, they sometimes receive more than 20 applications a day. Fernanda thinks the increased time at home may be driving the numbers. “Many of them are looking to do something that can have a social impact while they're staying at home,” she says. Today, Respond consists of about 1,400 volunteers and offers services in 53 languages.

Fernanda says she looks for people who are passionate about the cause, have experience in legal translations and have a commitment to building out a strong emotional support network. “Volunteers have to fight against family separation and support folks who have experienced disparate types of violence and abuse,” she says. “It’s also important to have a support network and be able to take care of yourself.” Volunteers have access to a therapist should they need it.

In January 2020, the group officially became an NGO. To date, Respond Crisis Translation has worked on about 1,600 cases, some of which have helped asylum seekers win their cases. Respond Crisis Translation largely works on cases at the Mexico-U.S. border but is increasingly lending its efforts in Southern Mexico and Europe as well. The COVID-19 pandemic also prompted the group to explore more ways to help. Volunteers have created translated medical resources, supported domestic violence hotlines, and translated educational materials for migrant parents who are now helping their children with distance learning.

One challenge for the team is meeting increasing demand. “We weren’t just concerned about growing, but ensuring the quality of our work as we grew,” says Ariel. “Small language details like a typo or misspelled word are frequently used to disqualify an entire asylum case. The quality of our translation work is very important because it can impact whether a case is won or lost, which can literally mean the difference between life and death or a deportation. Every time there’s a story about someone who won their case we feel a sense of relief. That’s what motivates us to keep going.”

Ariel and Fernanda also hope Respond Crisis Translation can become an income source for indigenous language translators. Whenever they work with indigenous language speakers, Respond asks the NGO they’re working with to provide compensation to the translator for their labor.

Although Ariel and Fernanda didn’t expect their project to grow as quickly as it has, they’re thrilled to see the progress they’ve made. “Being a multilingual person is a very important part of my identity, so when I see that language is being used as a tool to systematically limit the fundamental right to freedom of mobility,” says Ariel. “I feel a responsibility to resist, and work alongside the language community to find solutions.”

Source: The Official Google Blog

Announcing v6 of the Google Ads API

Here are the highlights:

- The API now has Change History, similar to the Google Ads UI, through ChangeEvent, which includes which interface was used and who made the changes.

- You can now manage user access in your Google Ads account.

- Maximize conversion value and maximize conversions are now available as portfolio bidding strategies, including for Search.

- The new Customer.optimization_score_weight helps you calculate your overall optimization score for your manager account.

- New audience types are available, including CombinedAudience and CustomAudience.
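The weight lets scores from client accounts of different sizes be combined into one number. In this minimal sketch, each client account contributes a pair: its optimization score (Customer.optimization_score) and its Customer.optimization_score_weight. We assume the manager-level score is the weighted average of those scores, and the values are plain numbers rather than results of an actual API query. If the weights already sum to 1, the normalization step leaves the result unchanged. Check the exact aggregation rule against the Google Ads API reference.

```python
from typing import Iterable, Tuple


def manager_optimization_score(customers: Iterable[Tuple[float, float]]) -> float:
    """Weighted average of (optimization_score, optimization_score_weight) pairs.

    Assumption for this sketch: the aggregate is the weight-normalized average.
    Returns 0.0 when the total weight is zero (e.g., no eligible client accounts).
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for score, weight in customers:
        weighted_sum += score * weight
        total_weight += weight
    return weighted_sum / total_weight if total_weight > 0 else 0.0
```

For example, a client with score 0.8 and weight 3 plus a client with score 0.6 and weight 1 combine to 0.75, since the larger account dominates.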

The following resources can help you get started:

If you have any questions or need additional help, contact us via the forum.

Source: Google Ads Developer Blog

New in Web Stories: Discover, WordPress and quizzes

Things are moving fast around Web Stories. To keep you in the loop, we’ll be rounding up the latest news every month. You’ll find new product integration and tooling updates, examples of great stories in the wild and educational content around Web Stories.

Web Stories arrive in Google Discover

Last month we announced that Web Stories are now available in Discover, part of the Google app on Android and iOS that’s used by more than 800 million people each month. The Stories carousel, now available in the United States, India and Brazil at the top of Discover, helps you find some of the best visual content from around the web. In the future, we intend to expand Web Stories in Discover to more countries and Google products.

New tools for the WordPress ecosystem

There are more and more ways to create Web Stories, and WordPress users now have access to not just one but two visual story editors integrated into the WordPress CMS: Google’s Web Stories for WordPress and MakeStories for WordPress.

MakeStories also gained six new languages (English, German, French, Italian, Portuguese, and Russian) and new features, including new templates and preset rulers. They’ve also made it easier to publish your Web Stories with a new publishing flow that highlights critical pieces like metadata, analytics, and ads setup. You can also now host stories on MakeStories.com but serve them from your own publisher domain.

MakeStories’ new publishing workflow in action in WordPress.

There are many different options out there to build Web Stories, so pick the one that works best for you from amp.dev’s Tools section.

Quizzes and polls are coming to Web Stories

We’ll also cover Web Story format updates from the Stories team here, since they’re at the forefront of Web Stories innovation. Features they add to the format can be expected to appear in the visual editor of your choice some time later.

Web Stories are getting more interactive with quizzes and polls, which the Stories team calls Interactive Components. These features are currently available in the format, and you can learn more about them in the developer documentation. Several visual editors are working on supporting these new features so you can use them without writing any code.

Learn how to create beautiful Web Stories with Storytime

One of the best ways to learn the ins and outs of Web Story-telling is our educational Storytime series, with a new episode arriving every week. If you haven’t started watching yet, we encourage you to give it a try.

In October, we interviewed the Product Manager behind the Google Discover integration; talked about the art of writing, remixing, optimizing and promoting Stories; analyzed the fabric of a great Web Story; and covered all sorts of editorial patterns that work for Web Stories.

And you can check out all of these videos as Web Stories: