Posted by:Charmaine D’Silva, Product Lead, Android Privacy and FrameworkNarayan Kamath, Engineering Lead, Android Privacy and FrameworkStephan Somogyi, Product Lead, Android SecuritySudhi Herle, Engineering Lead, Android Security

This blog post is part of a weekly series for #11WeeksOfAndroid. For each #11WeeksOfAndroid, we’re diving into a key area so you don’t miss anything. This week, we spotlighted Privacy and Security; here’s a look at what you should know.

Privacy and security is core to how we design Android, and with every new release we increase our investment in this space. Android 11 continues to make important strides in these areas, and this week we’ll be sharing a series of updates and resources about Android privacy and security. But first, let’s take a quick look at some of the most important changes we’ve made in Android 11 to protect user privacy and make the platform more secure.

As shared in the “All things privacy in Android 11” video, we’re giving users even more control over sensitive permissions. Throughout the development of this release, we have engaged deeply and frequently with our developer community to design these features in a balanced way - amplifying user privacy while minimizing developer impact. Let’s go over some of these features:

One-time permission: In Android 10, we introduced a granular location permission that allows users to limit access to location only when an app is in use (aka foreground only). When presented with the new runtime permissions options, users choose foreground only location more than 50% of the time. This demonstrated to us that users really wanted finer controls for permissions. So in Android 11, we’ve introduced one time permissions that let users give an app access to the device microphone, camera, or location, just that one time. As an app developer, there are no changes that you need to make to your app for it to work with one time permissions, and the app can request permissions again the next time the app is used. Learn more about building privacy-friendly apps with these new changes in this video.

Background location: In Android 10 we added a background location usage reminder so users can see how apps are using this sensitive data on a regular basis. Users who interacted with the reminder either downgraded or denied the location permission over 75% of the time. In addition, we have done extensive research and believe that there are very few legitimate use cases for apps to require access to location in the background.

In Android 11, background location will no longer be a permission that a user can grant via a run time prompt and it will require a more deliberate action. If your app needs background location, the system will ensure that the app first asks for foreground location. The app can then broaden its access to background location through a separate permission request, which will cause the system to take the user to Settings in order to complete the permission grant.

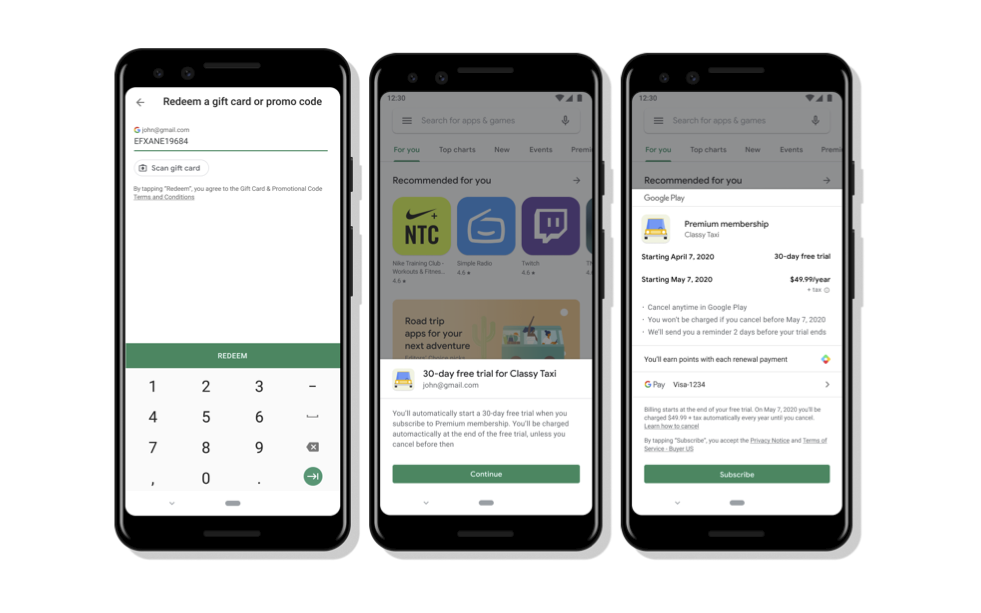

In February, we announced that Google Play developers will need to get approval to access background location in their app to prevent misuse. We're giving developers more time to make changes and won't be enforcing the policy for existing apps until 2021. Check out this helpful video to find possible background location usage in your code.

Permissions auto-reset: Most users tend to download and install over 60 apps on their device but interact with only a third of these apps on a regular basis. If users haven’t used an app that targets Android 11 for an extended period of time, the system will “auto-reset” all of the granted runtime permissions associated with the app and notify the user. The app can request the permissions again the next time the app is used. If you have an app that has a legitimate need to retain permissions, you can prompt users to turn this feature OFF for your app in Settings.

Data access auditing APIs: Android encourages developers to limit their access to sensitive data, even if they have been granted permission to do so. In Android 11, developers will have access to new APIs that will give them more transparency into their app’s usage of private and protected data. The APIs will enable apps to track when the system records the app’s access to private user data.

Scoped Storage: In Android 10, we introduced scoped storage which provides a filtered view into external storage, giving access to app-specific files and media collections. This change protects user privacy by limiting broad access to shared storage in many ways including changing the storage permission to only give read access to photos, videos and music and improving app storage attribution. Since Android 10, we’ve incorporated developer feedback and made many improvements to help developers adopt scoped storage, including: updated permission UI to enhance user experience, direct file path access to media to improve compatibility with existing libraries, updated APIs for modifying media, Manage External Storage permission to enable select use cases that need broad files access, and protected external app directories. In Android 11, scoped storage will be mandatory for all apps that target API level 30. Learn more in this video and check out the developer documentation for further details.

Google Play system updates: Google Play system updates were introduced with Android 10 as part of Project Mainline. Their main benefit is to increase the modularity and granularity of platform subsystems within Android so we can update core OS components without needing a full OTA update from your phone manufacturer. Earlier this year, thanks to Project Mainline, we were able to quickly fix a critical vulnerability in the media decoding subsystem. Android 11 adds new modules, and maintains the security properties of existing ones. For example, Conscrypt, which provides cryptographic primitives, maintained its FIPS validation in Android 11 as well.

BiometricPrompt API: Developers can now use the BiometricPrompt API to specify the biometric authenticator strength required by their app to unlock or access sensitive parts of the app. We are planning to add this to the Jetpack Biometric library to allow for backward compatibility and will share further updates on this work as it progresses.

Identity Credential API: This will unlock new use cases such as mobile drivers licences, National ID, and Digital ID. It’s being built by our security team to ensure this information is stored safely, using security hardware to secure and control access to the data, in a way that enhances user privacy as compared to traditional physical documents. We’re working with various government agencies and industry partners to make sure that Android 11 is ready for such digital-first identity experiences.

Thank you for your flexibility and feedback as we continue to build an increasingly more private and secure platform. You can learn about more features in the Android 11 Beta developer site. You can also learn about general best practices related to privacy and security.

Please follow Android Developers on Twitter and Youtube to catch helpful content and materials in this area all this week.

Resources

You can find the entire playlist of #11WeeksOfAndroid video content here, and learn more about each week here. We’ll continue to spotlight new areas each week, so keep an eye out and follow us on Twitter and YouTube. Thanks so much for letting us be a part of this experience with you!

Posted by Angela Ying, Product Manager, Google Play

Posted by Angela Ying, Product Manager, Google Play