Posted by Stephanie Cuthbertson, Director, Product Management

Android 11 is here! Today we’re pushing the source to the Android Open Source Project (AOSP) and officially releasing the newest version of Android. We built Android 11 with a focus on three themes: a People-centric approach to communication, Controls to let users quickly get to and control all of their smart devices, and Privacy to give users more ways to control how data on devices is shared. Read more in our Keyword post.

For developers, Android 11 has a ton of new capabilities. You’ll want to check out conversation notifications, device and media controls, one-time permissions, enhanced 5G support, IME transitions, and so much more. To help you work and develop faster, we also added new tools like compatibility toggles, ADB incremental installs, app exit reasons API, data access auditing API, Kotlin nullability annotations, and many others. We worked to make Android 11 a great release for you, and we can’t wait to see what you’ll build!

Watch for official Android 11 coming to a device near you, starting today with Pixel 2, 3, 3a, 4, and 4a devices. To get started, visit the Android 11 developer site.

People, Controls, Privacy

People

Android 11 is people-centric and expressive, reimagining the way we have conversations on our phones, and building an OS that can recognize and prioritize the most important people in our lives. For developers, Android 11 helps you build deeper conversational and personal interactions into your apps.

- Conversation notifications appear in a dedicated section at the top of the shade, with a people-forward design and conversation specific actions, such as opening the conversation as a bubble, creating a conversation shortcut on the home screen, or setting a reminder.

- Bubbles - Bubbles help users keep conversations in view and accessible while multitasking on their devices. Messaging and chat apps should use the Bubbles API on notifications to enable this in Android 11.

- Consolidated keyboard suggestions let Autofill apps and Input Method Editors (IMEs) securely offer users context-specific entities and strings directly in an IME’s suggestion strip, where they are most convenient for users.

Bubbles and people-centric conversations.

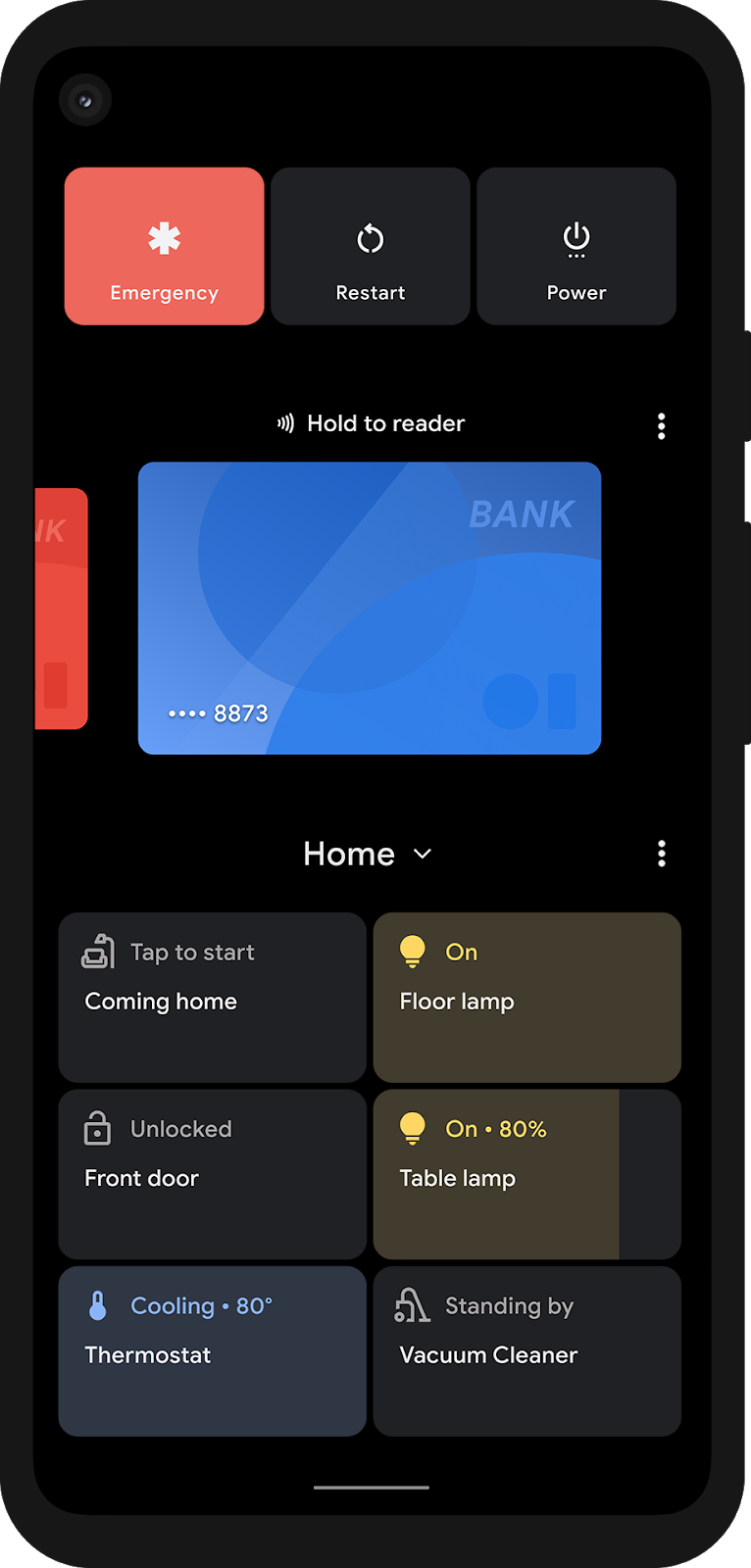

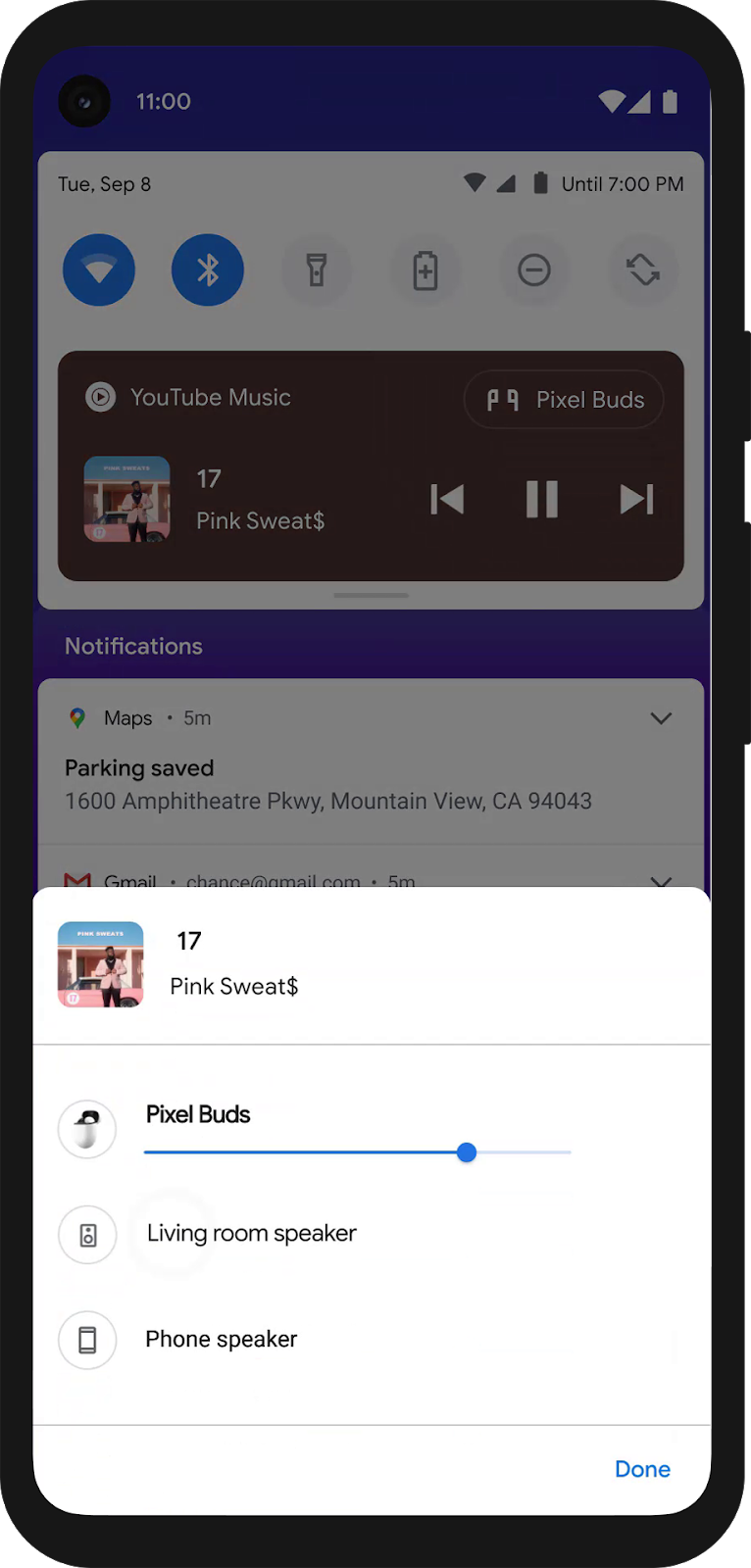

Controls

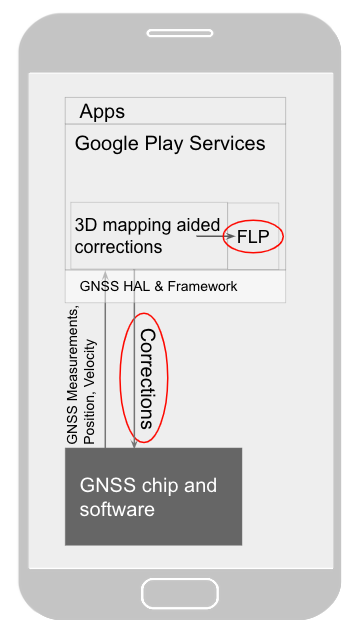

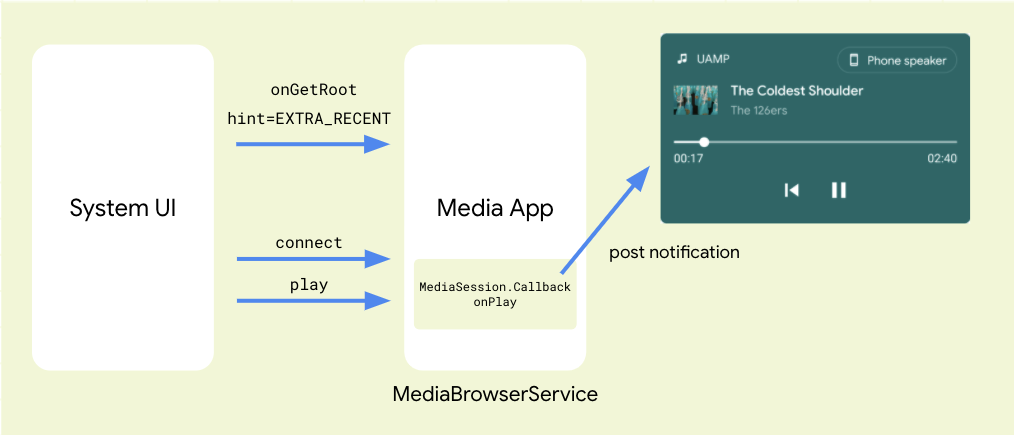

Android 11 lets users quickly get to and control all of their smart devices in one space. Developers can use new APIs to help users surface smart devices and control media:

- Device Controls make it faster and easier than ever for users to access and control their connected devices. Now, by simply long pressing the power button, they’re able to bring up device controls instantly, and in one place. Apps can use a new API to appear in the controls. More here.

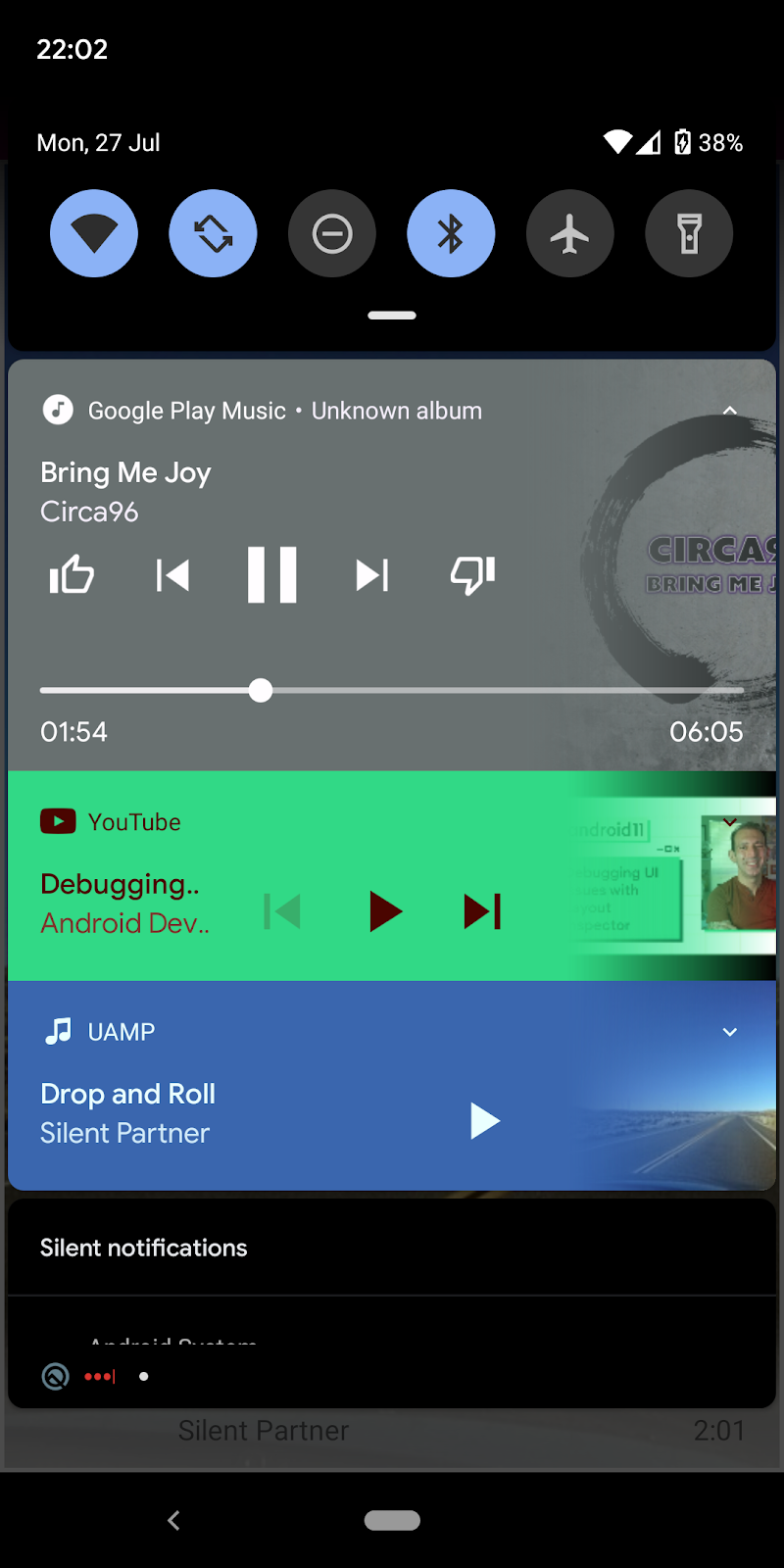

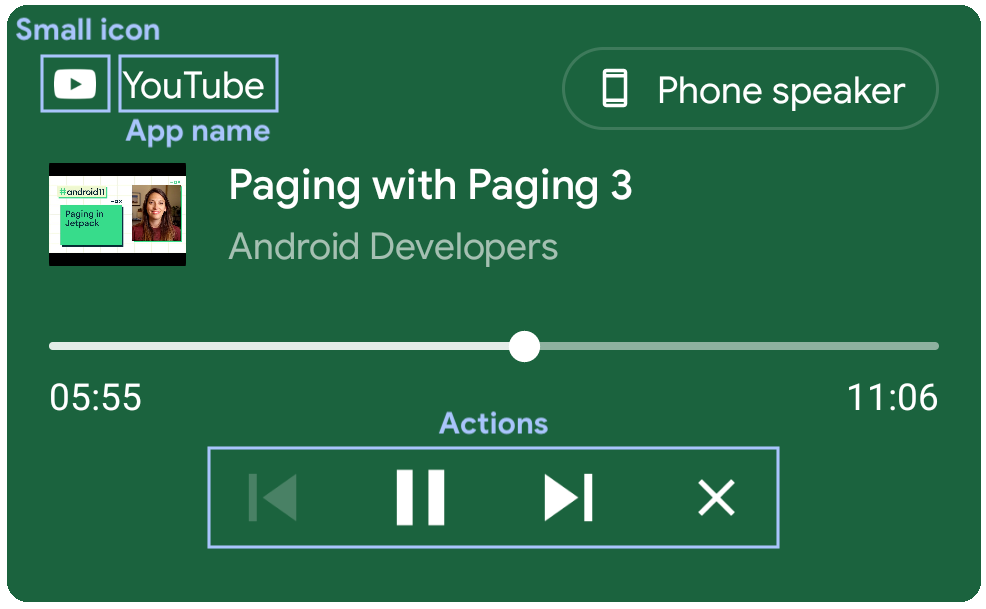

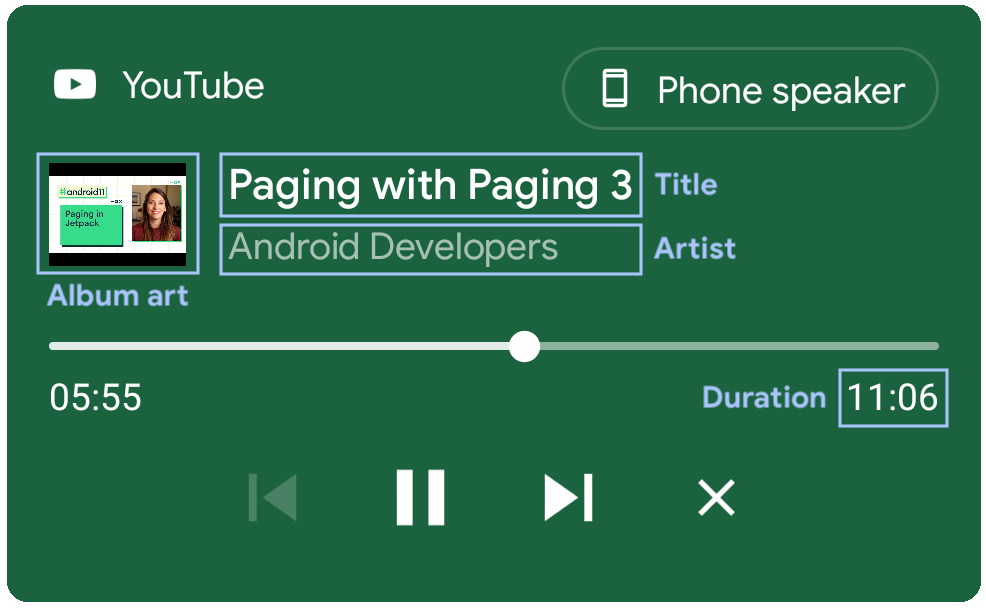

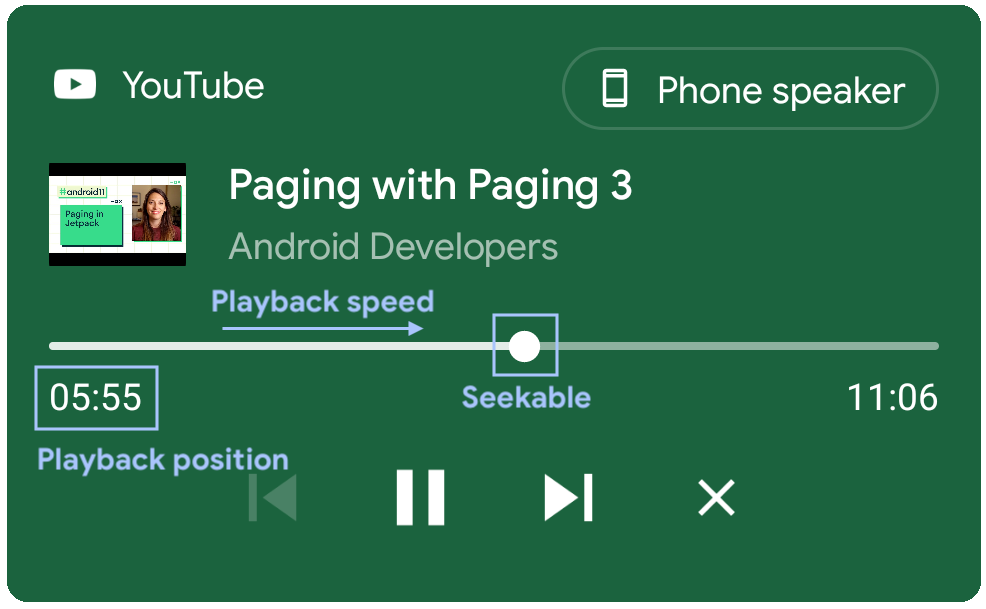

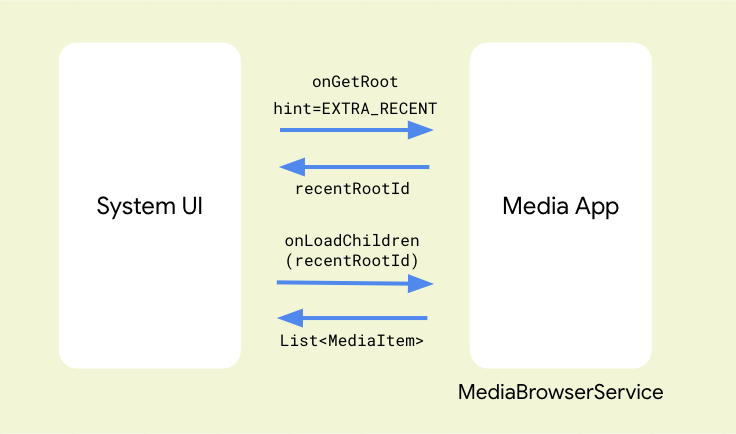

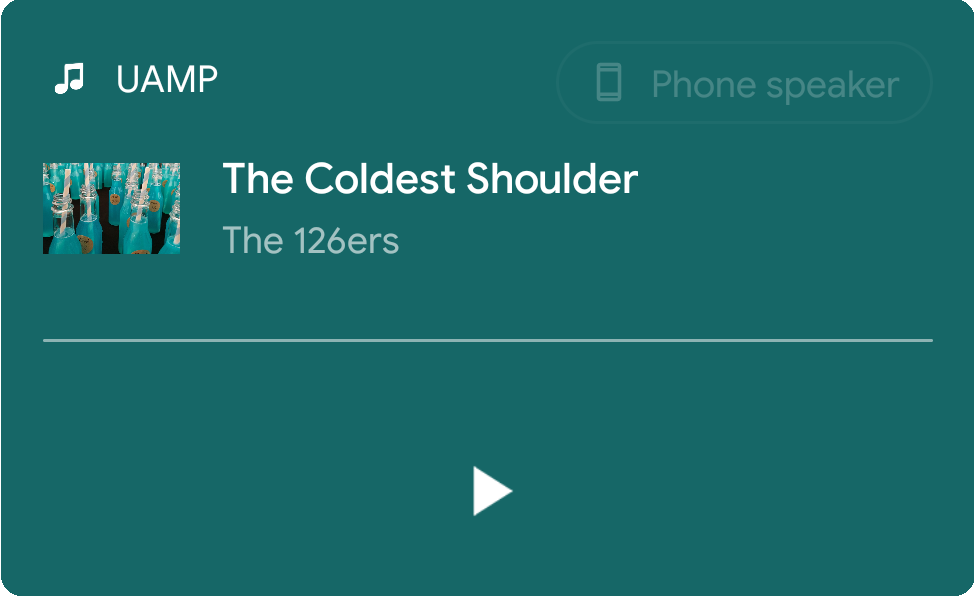

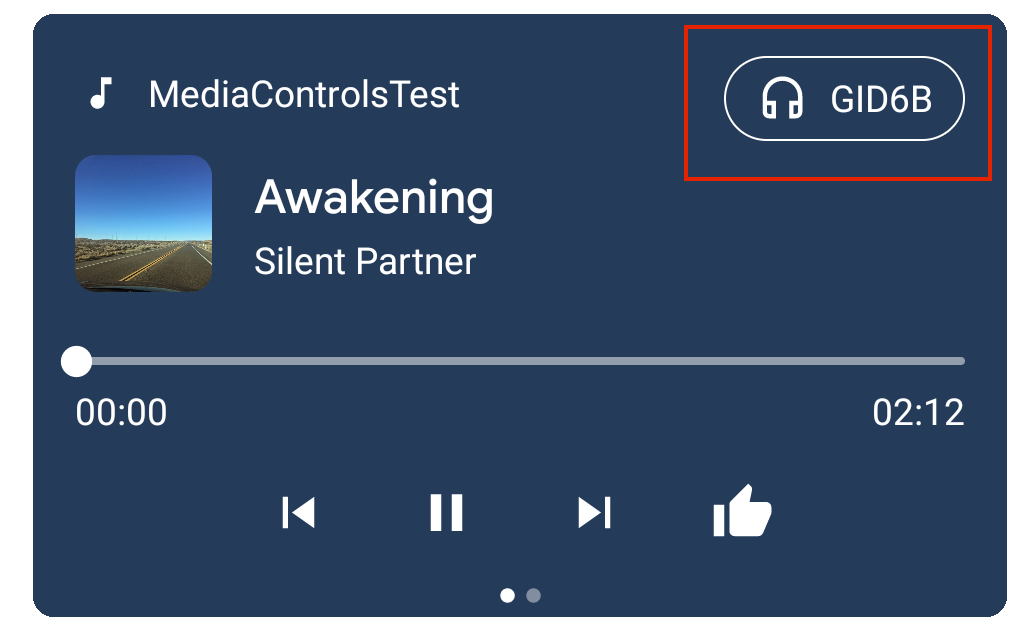

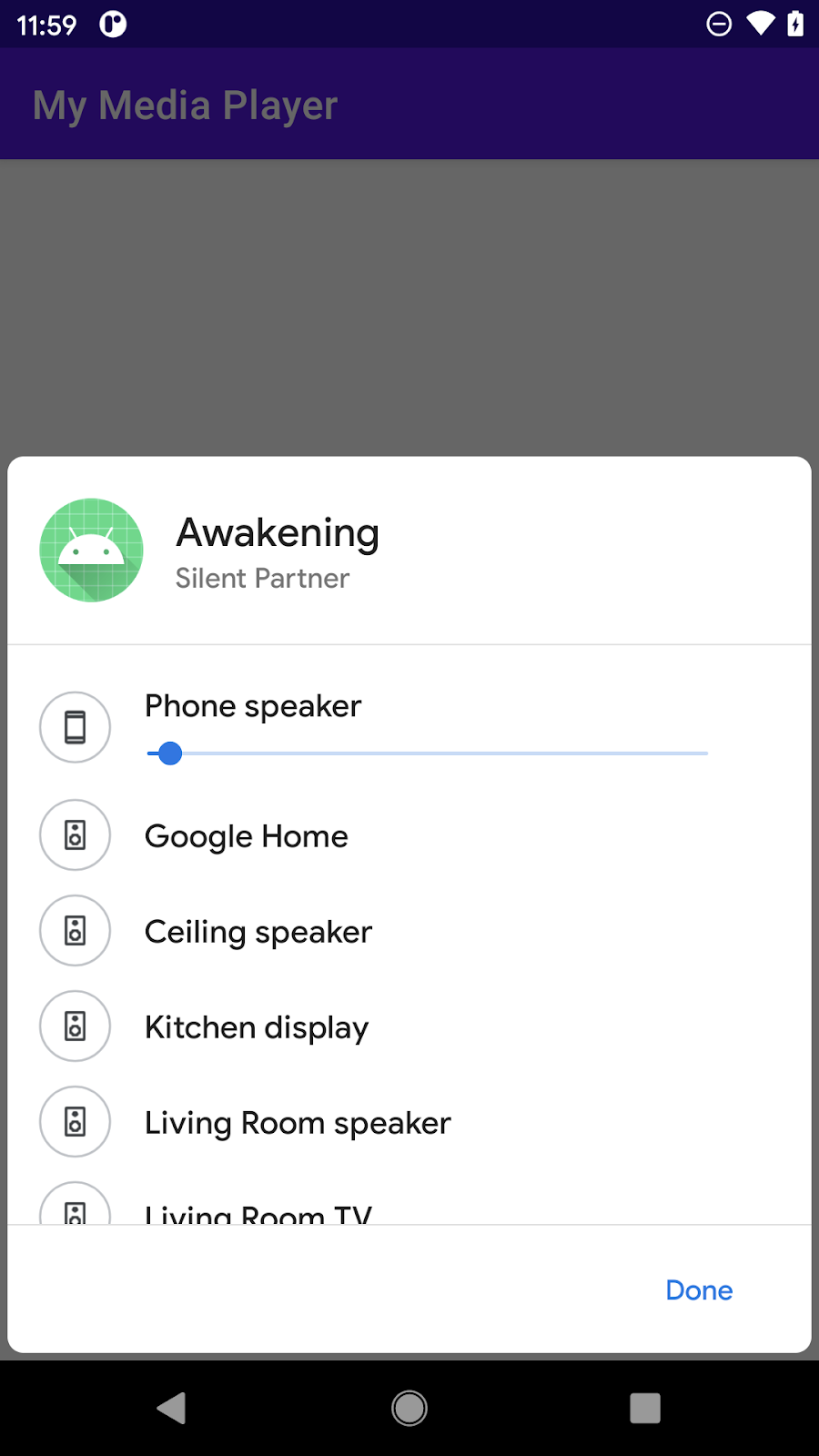

- Media Controls make it quick and convenient for users to switch the output device for their audio or video content, whether it be headphones, speakers or even their TV. More here.

Device controls and media controls.

Privacy

In Android 11, we’re giving users even more control and transparency over sensitive permissions and working to keep devices more secure through faster updates.

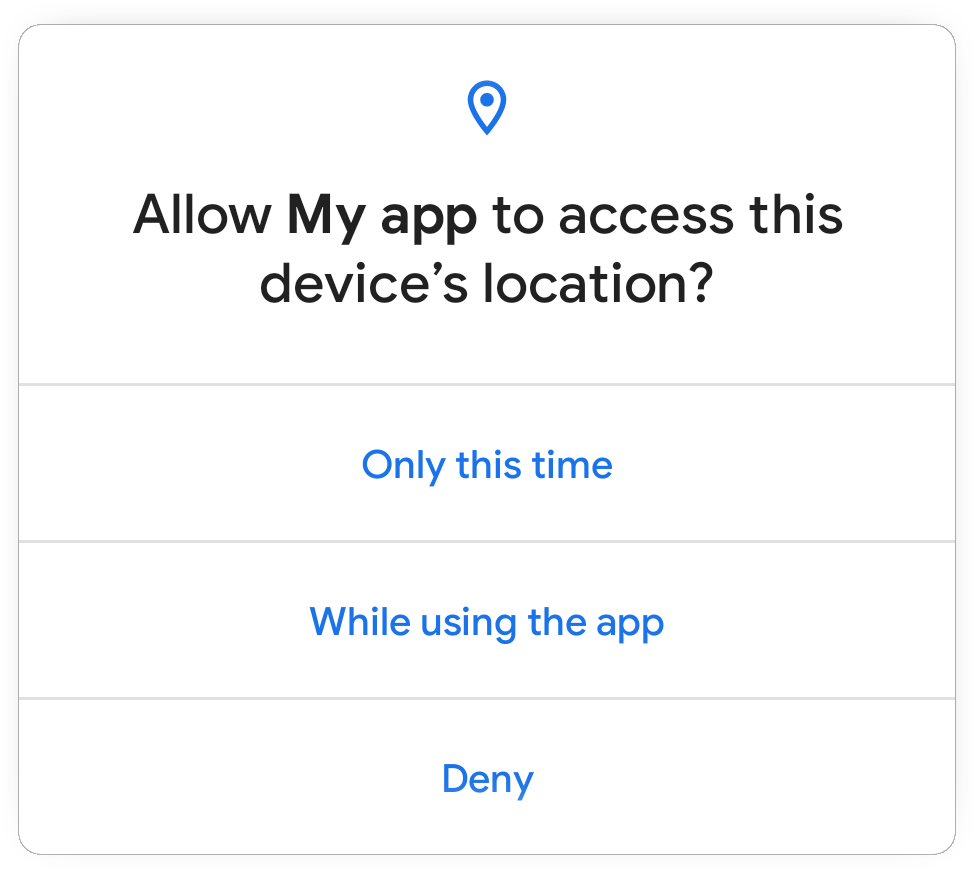

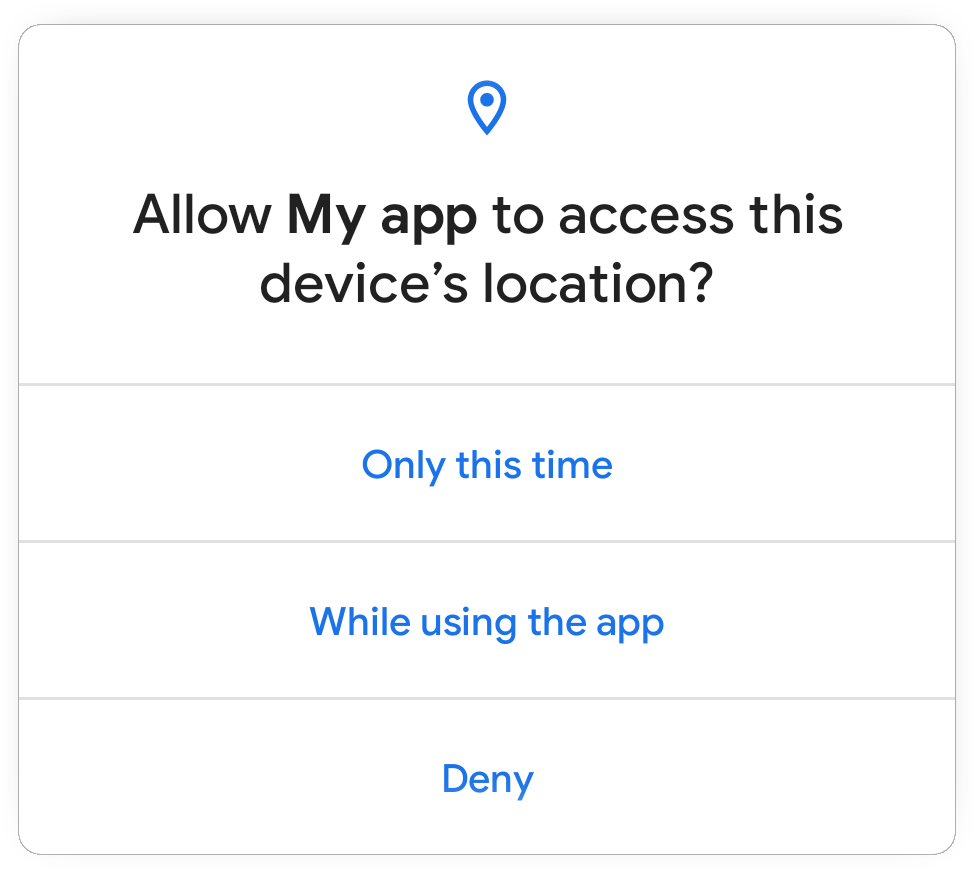

One-time permission - Now users can give an app access to the device microphone, camera, or location, just for one time. The app can request permissions again the next time the app is used. More here.

One-time permission dialog in Android 11.

Background location - Background location now requires additional steps from the user beyond granting a runtime permission. If your app needs background location, the system will ensure that you first ask for foreground location. You can then broaden your access to background location through a separate permission request, and the system will take the user to Settings to complete the permission grant.

Also note that in February we announced that Google Play developers will need to get approval to access background location in their app to prevent misuse. We're giving developers more time to make changes and won't be enforcing the policy for existing apps until 2021.

Permissions auto-reset - if users haven’t used an app for an extended period of time, Android 11 will “auto-reset” all of the runtime permissions associated with the app and notify the user. The app can request the permissions again the next time the app is used. More here.

Scoped storage - We’ve continued our work to better protect app and user data on external storage, and made further improvements to help developers more easily migrate. More here.

Google Play system updates - Launched last year, Google Play system updates help us expedite updates of core OS components to devices in the Android ecosystem. In Android 11, we more than doubled the number of updatable modules, including 12 new modules that will help improve privacy, security, and consistency for users and developers.

BiometricPrompt API - Developers can now use the BiometricPrompt API to specify the biometric authenticator strength required by their app to unlock or access sensitive parts of the app. For backward compatibility, we’ve just added these capabilities to the Jetpack Biometric library. We’ll share further updates as the work progresses.

Identity Credential API - This will unlock new use cases such as mobile drivers licences, National ID, and Digital ID. We’re working with various government agencies and industry partners to make sure that Android 11 is ready for digital-first identity experiences.

You can read about all of the Android 11 privacy features here.

Helpful innovation

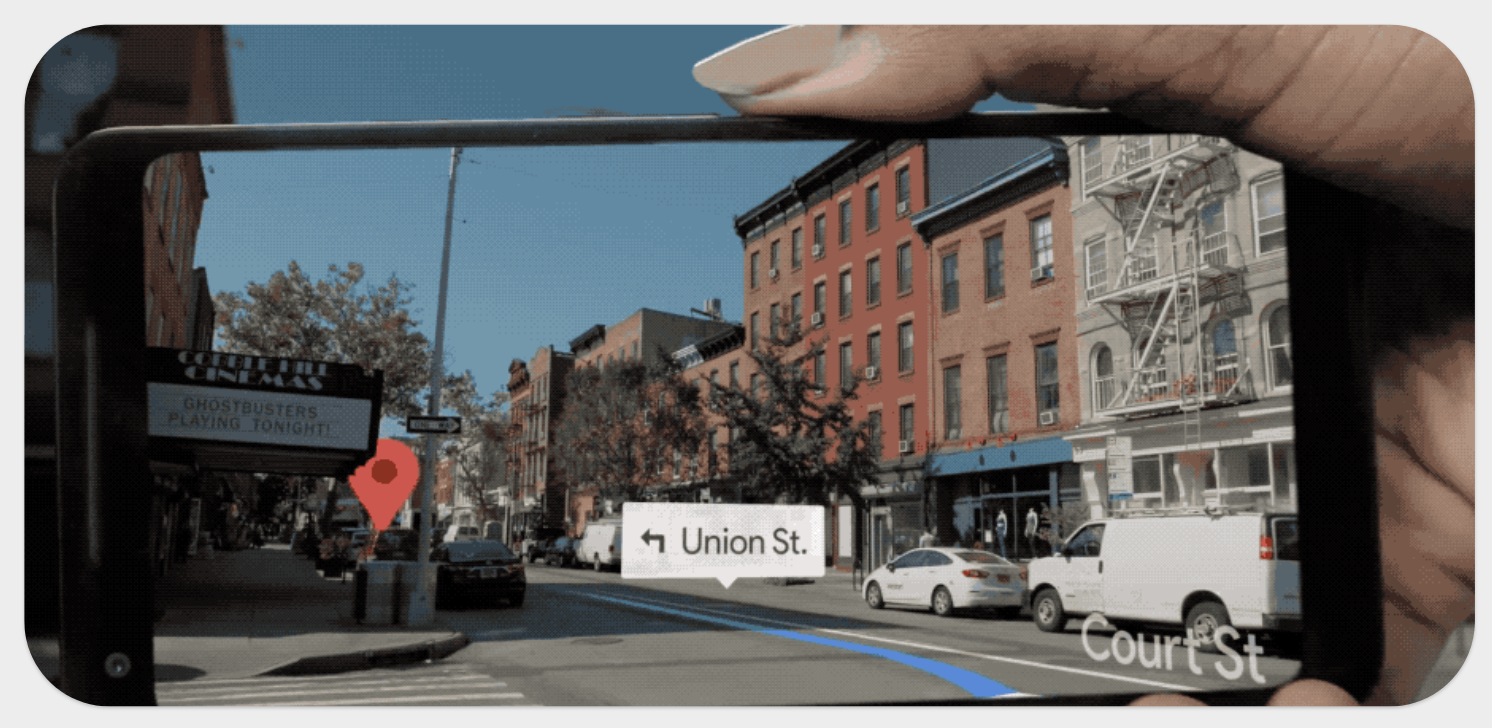

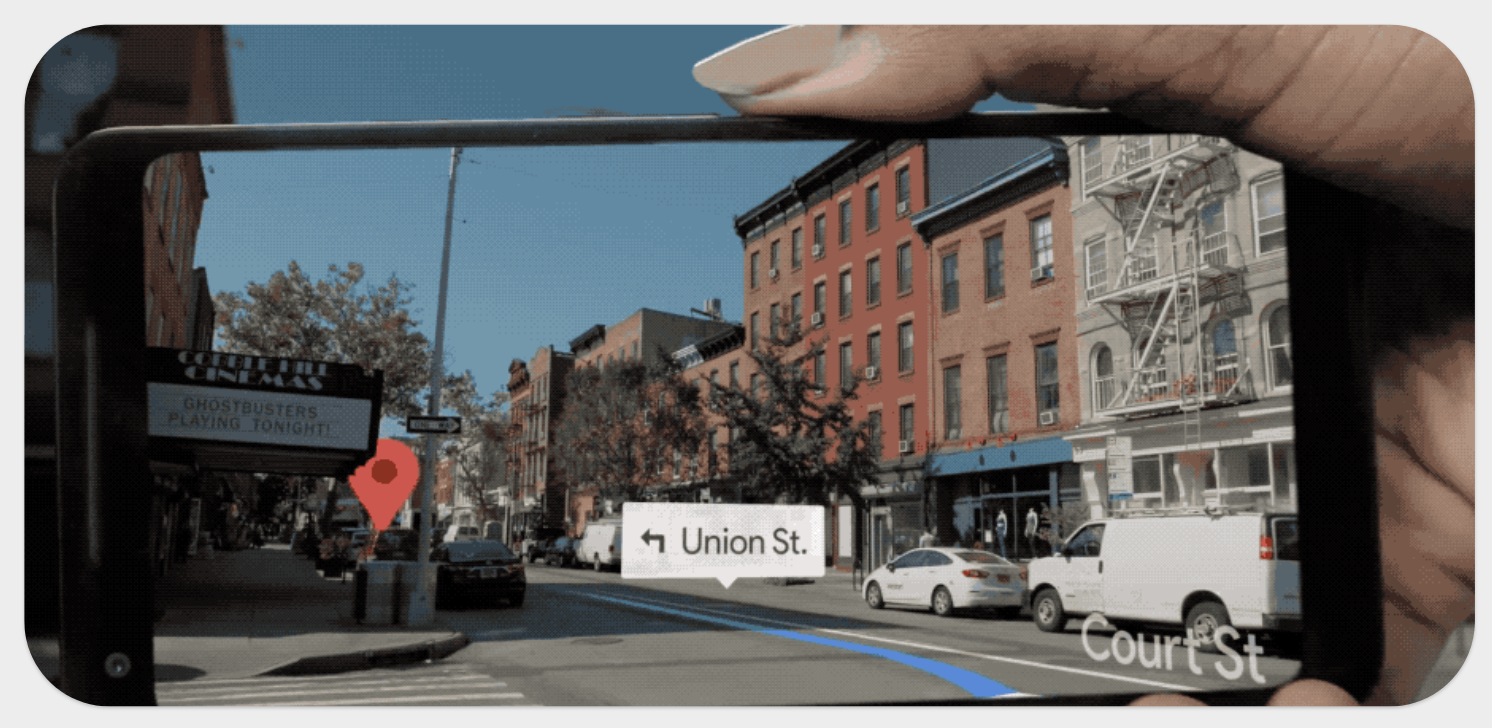

Enhanced 5G support - Android 11 includes updated developer support to help you take advantage of the faster speeds and lower latency of 5G networks. You can learn when the user is connected to a 5G network, check whether the connection is metered, and get an estimate of the connection bandwidth. To help you build experiences now for 5G, we’ve also added 5G support in the Android Emulator. To get started with 5G on Android, visit the 5G developer page.

Moving beyond the home, 5G can for example let you enhance your “on-the-go” experience by providing seamless interactions with the world around you from friends and family to businesses.

New screen types - Device makers are continuing to innovate by bringing exciting new device screens to market, such as hole-punch and waterfall screens. Android 11 adds support for these in the platform, with APIs to let you optimize your apps. You can manage both hole-punch and waterfall screens using the existing display cutout APIs. You can set a new window layout attribute to use the entire waterfall screen, and a new waterfall insets API helps you manage interaction near the edges.

Call screening support - Android 11 helps call-screening apps do more to manage robocalls. Apps can verify an incoming call’s STIR/SHAKEN status (standards that protect against caller ID spoofing) as part of the call details, and they can report a call rejection reason. Apps can also customize a system-provided post call screen to let users perform actions such as marking a call as spam or adding to contacts.

Polish and quality

OS resiliency - In Android 11 we’ve made the OS more dynamic and resilient as a whole by fine-tuning memory reclaiming processes, such as forcing user-imperceptible restarts of processes based on RSS HWM thresholds. Also, to improve performance and memory, Android 11 adds Binder caching, which optimizes highly used IPC calls to system services by caching data for those that retrieve relatively static data. Binder caching also improves battery life by reducing CPU time.

Synchronized IME transitions - New APIs let you synchronize your app’s content with the IME (input method editor, or on-screen keyboard) and system bars as they animate on and offscreen, making it much easier to create natural, intuitive and jank-free IME transitions. For frame-perfect transitions, a new WindowInsetsAnimation.Callback API notifies apps of per-frame changes to insets while the system bars or the IME animate. Additionally, you can use a new WindowInsetsAnimationController API to control system UI types like system bars, IME, immersive mode, and others. More here.

Synchronized IME transition through insets animation listener.

App-driven IME experience through WindowInsetsAnimationController.

HEIF animated drawables - The ImageDecoder API now lets you decode and render image sequence animations stored in HEIF files, so you can make use of high-quality assets while minimizing impact on network data and APK size. HEIF image sequences can offer drastic file-size reductions for image sequences when compared to animated GIFs.

Native image decoder - New NDK APIs let apps decode and encode images (such as JPEG, PNG, WebP) from native code for graphics or post processing, while retaining a smaller APK size since you don’t need to bundle an external library. The native decoder also takes advantage of Android’s process for ongoing platform security updates. See the NDK sample code for examples of how to use the APIs.

Low-latency video decoding in MediaCodec - Low latency video is critical for real-time video streaming apps and services like Stadia. Video codecs that support low latency playback return the first frame of the stream as quickly as possible after decoding starts. Apps can use new APIs to check and configure low-latency playback for a specific codec.

Variable refresh rate - Apps and games can use a new API to set a preferred frame rate for their windows. Most Android devices refresh the display at 60Hz refresh rate, but some support multiple refresh rates, such as 90Hz as well as 60Hz, with runtime switching. On these devices, the system uses the app’s preferred frame rate to choose the best refresh rate for the app. The API is available in both the SDK and NDK. See the details here.

Dynamic resource loader - Android 11 includes a new public API to let apps load resources and assets dynamically at runtime. With the Resource Loader framework you can include a base set of resources in your app or game and then load additional resources, or modify the loaded resources, as needed at runtime.

Neural Networks API (NNAPI) 1.3 - We continue to add ops and controls to support machine learning on Android devices. To optimize common use-cases, NNAPI 1.3 adds APIs for priority and timeout, memory domains, and asynchronous command queue. New ops for advanced models include signed integer asymmetric quantization, branching and loops, and a hard-swish op that helps accelerate next-generation on-device vision models such as MobileNetV3.

Developer friendliness

App compatibility tools - We worked to minimize compatibility impacts on your apps by making most Android 11 behavior changes opt-in, so they won’t take effect until you change the apps’ targetSdkVersion to 30. If you are distributing through Google Play, you’ll have more than a year to opt-in to these changes, but we recommend getting started testing early. To help you test, Android 11 lets you enable or disable many of the opt-in changes individually. More here.

App exit reasons - When your app exits, it’s important to understand why the app exited and what the state was at the time -- across the many device types, memory configurations, and user scenarios that your app runs in. Android 11 makes this easier with an exit reasons API that you can use to request details of the app’s recent exits.

Data access auditing - data access auditing lets you instrument your app to better understand how it accesses user data and from which user flows. For example, it can help you identify any inadvertent access to private data in your own code or within any SDKs you might be using. More here.

ADB Incremental - Installing very large APKs with ADB (Android Debug Bridge) during development can be slow and impact your productivity, especially those developers working on Android Games. With ADB Incremental in Android 11, installing large APKs (2GB+) from your development computer to an Android 11 device is up to 10x faster. More here.

Kotlin nullability annotations - Android 11 adds nullability annotations to more methods in the public API. And, it upgrades a number of existing annotations from warnings to errors. These help you catch nullability issues at build time, rather than at runtime. More here.

Get your apps ready for Android 11

With Android 11 on its way to users, now is the time to finish your compatibility testing and publish your updates.

Here are some of the top behavior changes to watch for (these apply regardless of your app’s targetSdkVersion):

- One-time permission - Users can now grant single-use permission to access location, device microphone and camera. More here.

- External storage access - Apps can no longer access other apps’ files in external storage. More here.

- Scudo hardened allocator - Scudo is now the heap memory allocator for native code in apps. More here.

- File descriptor sanitizer - Fdsan is now enabled by default to detect file descriptor handling issues for native code in apps. More here.

Android 11 also includes opt-in behavior changes - these affect your app once it’s targeting the new platform. We recommend assessing these changes as soon as you’ve published the compatible version of your app. For more information on compatibility testing and tools, check out the resources we shared for Android 11 Compatibility week and visit the Android 11 developer site for technical details.

Enhance your app with new features and APIs

Next, when you're ready, dive into Android 11 and learn about the new features and APIs that you can use. Here are some of the top features to get started with.

We recommend these for all apps:

- Dark theme (from Android 10) - Make sure to provide a consistent experience for users who enable system-wide dark theme by adding a Dark theme or enabling Force Dark.

- Gesture navigation (from Android 10) - Support gesture navigation by going edge-to-edge and ensure that custom gestures work well with gestures. More here.

- Sharing shortcuts (from Android 10) - Apps that want to receive shared data should use the sharing shortcuts APIs to create share targets. Apps that want to send shared data should make sure to use the system share sheet.

- Synchronized IME transitions - Provide seamless transitions to your users using the new WindowInsets and related APIs. More here.

- New screen types - for devices with hole-punch or waterfall screens, make sure to test and adjust your content for these screens as needed. More here.

We recommend these if relevant for your app:

- Conversations - Messaging and communication apps can participate in the conversation experience by providing long-lived sharing shortcuts and surfacing conversations in notifications. More here.

- Bubbles - Bubbles are a way to keep conversations in view and accessible while multitasking. Use the Bubbles API on notifications to enable this.

- 5G - If your app or content can benefit from the faster speeds and lower latency of 5G, explore our developer resources to see what you can build.

- Device controls - If your app supports external smart devices, make sure those devices are accessible from the new Android 11 device controls area. More here.

- Media controls - For media apps, we recommend supporting the Android 11 media controls so users can manage playback and resumption from the Quick Settings shade. More here.

Read more about all of the Android 11 features at developer.android.com/11.

Coming to a device near you!

Android 11 will begin rolling out today on select Pixel, OnePlus, Xiaomi, OPPO and realme phones, with more partners launching and upgrading devices over the coming months. If you have a Pixel 2, 3, 3a, 4, or 4a phone, including those enrolled in this year’s Beta program, watch for the over-the-air update arriving soon!

Android 11 factory system images for Pixel devices are also available through the Android Flash Tool, or you can download them here. As always, you can get the latest Android Emulator system images via the SDK Manager in Android Studio. For broader testing on other Treble-compliant devices, Generic System Images (GSI) are available here.

If you're looking for the Android 11 source code, you'll find it here in the Android Open Source Project repository under the Android 11 branches.

What’s next?

We’ll soon be closing the preview issue tracker and retiring open bugs logged against Developer Preview or Beta builds, but please keep the feedback coming! If you still see an issue that you filed in the preview tracker, just file a new issue against Android 11 in the AOSP issue tracker.

Thanks again to the many developers and early adopters who participated in the preview program this year! You gave us great feedback to help shape the release, and you filed thousands of issues that have made Android 11 a better platform for everyone.

We're looking forward to seeing your apps on Android 11!