Posted by Jeremy Walker, Developer Relations Engineer

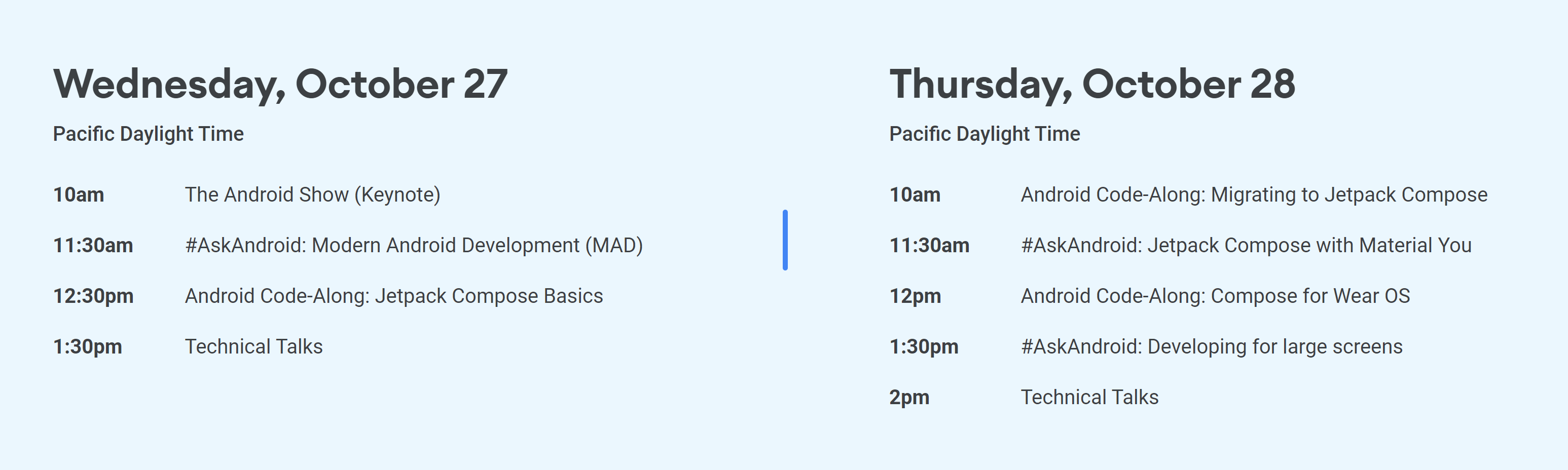

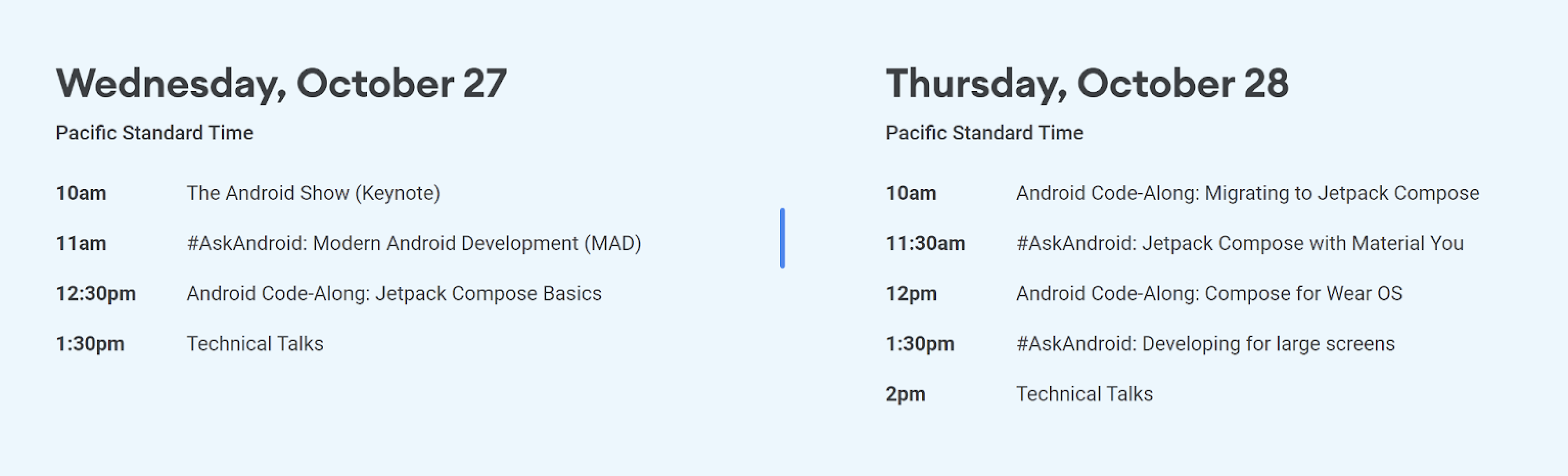

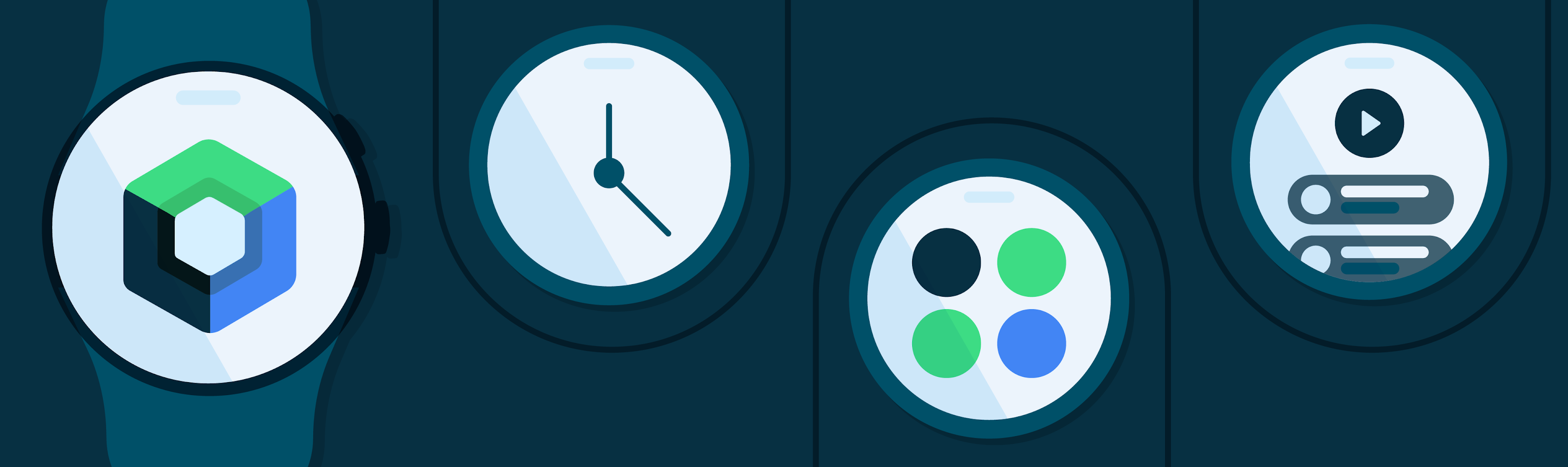

This year’s Android Dev Summit had many exciting announcements for Android developers, including some major updates for the Wear OS platform. At Google I/O, we announced the launch of the new Wear OS. Since then, Wear OS Powered by Samsung has launched on the Galaxy Watch4 series. Many developers, such as Strava, Spotify, and Calm, have already created helpful experiences for the latest version of Wear OS, and we’re looking forward to seeing what new experiences developers will help bring to the watch. To create better apps for the wrist, read on for updates to our APIs, design tools, and the Play Store.

Compose for Wear OS

The Jetpack Compose library simplifies and accelerates UI development, and we’re bringing Compose support to Wear OS. You can design your app with familiar UI components, adapted for the watch. These components include Material You, so you can create beautiful apps with less code.

Compose for Wear OS is now in developer preview. To learn more and get started:

- Watch the Compose for Wear OS talk

- Read an overview of Compose for Wear OS for more details

- Create a beginner app with our quick start guide

- Explore simple and complex samples on GitHub

Try it out and share your feedback here, or join the #compose-wear channel on the JetBrains Slack and let us know there. Please share it before we finalize the APIs during beta!

Watch Face Studio

Watch faces are one of the most visible ways that users can express themselves on their smartwatches. Creating a watch face is a great way to showcase your brand for users on Wear OS. We’ve partnered with Samsung to provide better tools for watch face creation and make it easier to design watch faces for the Wear OS ecosystem.

Watch Face Studio is a design tool created by Samsung that allows you to produce and distribute your own watch faces without any coding. It includes intuitive graphics tools that make it easy to design watch faces. You can create watch faces for your personal use, or upload them in Google Play Console to share with your users on Wear OS devices that support API level 28 and above.

Library updates

We recently released a number of Android Jetpack Wear OS libraries to help you follow best practices, reduce boilerplate, and create performant, glanceable experiences for your users.

Tiles are now enabled for most devices in the market, providing predictable, glanceable access to information and quick actions. The API is now in beta, so check it out!

For developers who want more fine-grained control of their watch faces (outside of Watch Face Studio), we've launched the new Jetpack Watch Face APIs in beta, built from the ground up in Kotlin.

The new API offers a number of new features:

- Watch face styling that persists across both the watch and phone (no need for your own database).

- Support for a WYSIWYG watch face configuration UI on the phone.

- Smaller, separate libraries (only include what you need).

- Battery improvements by encouraging good battery usage patterns out of the box; for example, reducing the interactive frame rate when battery is low.

- New Screenshot APIs so users can see their watch face changes in real time.

- And many more...
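To make the battery bullet above concrete, here is a minimal sketch in plain Kotlin of the kind of pattern it describes: drawing less often in interactive mode once the battery is low. Everything in it is an assumption for illustration. The function `interactiveFrameIntervalMs`, the 20% threshold, and the two frame intervals are hypothetical and are not part of the Jetpack Watch Face library, which applies its own logic.

```kotlin
// Hypothetical values for illustration only; not taken from the Watch Face library.
const val NORMAL_FRAME_INTERVAL_MS = 16L       // roughly 60 frames per second
const val LOW_BATTERY_FRAME_INTERVAL_MS = 100L // 10 frames per second
const val LOW_BATTERY_THRESHOLD_PERCENT = 20

/**
 * Returns how long to wait between frames while the watch face is interactive.
 * A longer interval means a lower frame rate and less battery drain.
 */
fun interactiveFrameIntervalMs(batteryPercent: Int, isCharging: Boolean): Long {
    require(batteryPercent in 0..100) { "batteryPercent must be in 0..100" }
    // Only throttle when the watch is running on a low battery and not charging.
    val batteryIsLow = !isCharging && batteryPercent <= LOW_BATTERY_THRESHOLD_PERCENT
    return if (batteryIsLow) LOW_BATTERY_FRAME_INTERVAL_MS else NORMAL_FRAME_INTERVAL_MS
}

fun main() {
    println(interactiveFrameIntervalMs(batteryPercent = 80, isCharging = false))
    println(interactiveFrameIntervalMs(batteryPercent = 15, isCharging = false))
}
```

With the new library you would not normally write this yourself; the sketch only shows the trade-off the library encourages out of the box.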

This is a great time to start moving from the older Watch Face Support Library to this new version.

Play Store updates

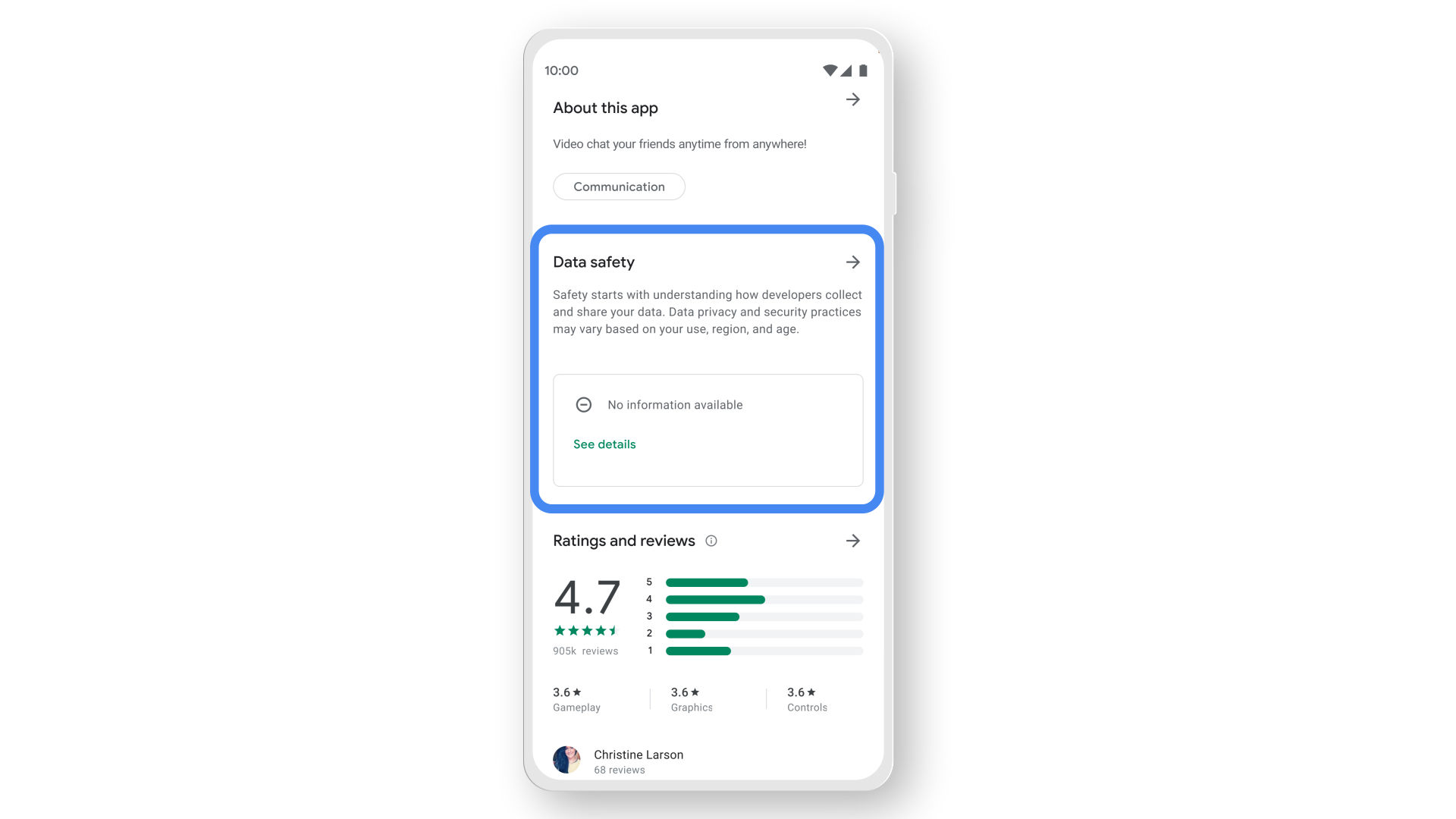

We’re making it easier for people to discover your Wear OS apps in the Google Play Store. Earlier this year, we enabled searching for watch faces and made it easier for people to find your apps in the Wear category. We also launched the capability for people to download apps onto their watches directly from the mobile Play Store. You can read more about these changes here.

We’ve also released updated Wear OS quality guidelines to help you meet your users’ expectations, as well as new screenshot guidelines to give your users a better understanding of what your app will look like. To help people better understand how your app would work on their device in their location, we will be launching ratings specific to form factor and location in 2022.

To learn more about developing for Wear OS, check out the developer website.