The following is a guest post from Google Analytics Certified Partner Feras Alhlou, Partner & Principal Consultant at E-Nor Inc.

Marketers and sales professionals want to know who’s visiting their site, what content the target audience is consuming and what converts site visitors into paying customers.

In a B2B environment, where long sales cycles and multiple stakeholders affect sales decisions, “knowing who’s coming to your site” takes on another dimension.

Say you’re in charge of marketing an eLearning system, and your target market includes telecom, hi-tech/software companies and universities. Your sales cycle could span several months, and there are multiple personas/stakeholders who will evaluate your company and your product.

Some key personas include:

- Trainers, professors and teachers evaluating user experience and ease of uploading curricula and content

- Management/administrators evaluating your company, pricing, client testimonials, case studies, etc.

- IT assessing technical aspects of products, maintainability, your technical support processes, etc.

As a marketer, your job is to ensure your site addresses the needs of each stakeholder, while recognizing that each group of stakeholders is likely to have different interests and questions. It’s critical that the message and content (that you invested so much in creating) “sticks” with the unique personas in each market segment.

Easier said than done; measuring and optimizing all the above isn’t for the faint of heart.

But don’t fret. Integrating Google Analytics with account-based marketing and firmographic data has come to the rescue.

B2B Measurement Framework

Let’s walk through a typical scenario and highlight key performance indicators (KPIs). The measurement framework our eLearning marketing manager has in mind includes (and yes, they follow GA’s ABC!):

Acquisition

- What percent of my traffic comes from the industries I target:

  - Telecom

  - Hi-tech/software companies

  - .edu’s

- Percentage increase or decrease in traffic from industries I’m not targeting

- Traffic volume and frequency from organizations our sales team targets offline

Behavior

- Landing page stickiness by industry and organization

- What content is most popular

- What content is most shared

- All the above segmented by the three targeted industries

Conversion

- Number of whitepaper downloads by industry and company

- Number of demo requests

- Sales follow-up call requests

- All the above segmented by the three targeted industries
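Two of the acquisition KPIs above are simple arithmetic once visits are broken down by industry. The sketch below assumes you have exported visit counts per industry for two periods (the industry labels and numbers are made up for illustration) and computes the share of traffic from the targeted industries plus the period-over-period change in non-targeted traffic.

```python
from __future__ import annotations

# Assumption: the three targeted industries as they might be labeled in an
# "Industry" custom variable report.
TARGETED = {"Telecom", "Hi-Tech/Software", "Education"}


def targeted_share(visits_by_industry: dict[str, int]) -> float:
    """Percent of all visits that come from the targeted industries."""
    total = sum(visits_by_industry.values())
    if total == 0:
        return 0.0
    targeted = sum(v for k, v in visits_by_industry.items() if k in TARGETED)
    return 100.0 * targeted / total


def untargeted_change(previous: dict[str, int], current: dict[str, int]) -> float:
    """Percent increase (+) or decrease (-) in visits from non-targeted industries."""
    def untargeted(counts: dict[str, int]) -> int:
        return sum(v for k, v in counts.items() if k not in TARGETED)

    before, after = untargeted(previous), untargeted(current)
    if before == 0:
        raise ValueError("no non-targeted visits in the previous period")
    return 100.0 * (after - before) / before


if __name__ == "__main__":
    # Made-up visit counts for two consecutive months.
    last_month = {"Telecom": 200, "Hi-Tech/Software": 150, "Education": 50, "Retail": 100}
    this_month = {"Telecom": 240, "Hi-Tech/Software": 160, "Education": 60, "Retail": 80}
    print(f"Targeted share: {targeted_share(this_month):.1f}%")  # Targeted share: 85.2%
    print(f"Non-targeted change: {untargeted_change(last_month, this_month):+.1f}%")  # -20.0%
```

The same two functions can be run on a per-organization breakdown to track the "organizations our sales team targets offline" KPI.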

If your site visitors aren’t providing you with company and industry data, you can’t report on that data in Google Analytics. Hello, Insightera! Insightera, a marketing personalization platform, enables you to enrich your customers’ onsite journeys with firmographic data in a seamless, integrated fashion. (Note: Demandbase, another product in the Google Analytics app gallery, offers similar functionality.)

Rich Firmographic Data in Google Analytics

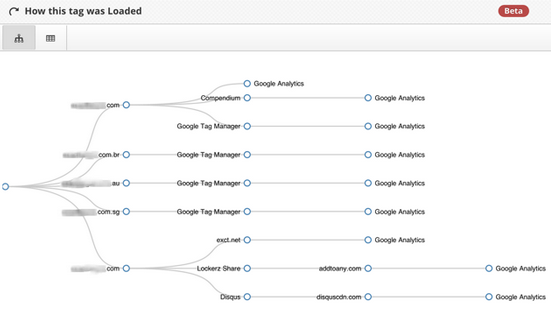

Insightera derives its firmographic data in two steps: 1) identifying each site visitor’s ISP, and 2) looking up that organization’s information, including location and industry (company size and company revenue will soon be available as well).
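To make the two-step idea concrete, here is a minimal sketch of a lookup from a visitor’s network to an organization and then to its firmographic profile. It is not Insightera’s implementation: it assumes matching on the visitor’s IP address against known network ranges, and the tables, organization names and the `lookup` helper are all hypothetical.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Firmographics:
    organization: str
    industry: str
    country: str


# Step 1 data (hypothetical): network ranges and the organization behind them.
# The ranges are RFC 5737 documentation blocks, used purely for illustration.
NETWORK_OWNERS = {
    ipaddress.ip_network("192.0.2.0/24"): "Example Telecom Co.",
    ipaddress.ip_network("198.51.100.0/24"): "Example University",
}

# Step 2 data (hypothetical): organization -> firmographic attributes.
ORG_PROFILES = {
    "Example Telecom Co.": Firmographics("Example Telecom Co.", "Telecom", "US"),
    "Example University": Firmographics("Example University", "Education", "US"),
}


def lookup(ip: str) -> Firmographics | None:
    """Resolve a visitor address to firmographics, or None if unknown."""
    addr = ipaddress.ip_address(ip)
    for network, org in NETWORK_OWNERS.items():  # step 1: who owns this network?
        if addr in network:
            return ORG_PROFILES.get(org)         # step 2: what do we know about them?
    return None  # e.g. a residential ISP or an unlisted network


if __name__ == "__main__":
    print(lookup("198.51.100.7"))
    # Firmographics(organization='Example University', industry='Education', country='US')
    print(lookup("203.0.113.5"))  # None
```

A linear scan keeps the example readable; a real provider would use a much larger database and a faster longest-prefix-match structure.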

With easy-to-navigate firmographic data readily available, analytics data takes on a new dimension: advertising dollars can be better targeted, and you can customize a visitor’s experience in several new ways.

Here are a few examples of the rich (and super cool) data you can access with Insightera, nicely integrated into Google Analytics reports as Custom Variables:

1- Traffic Distribution by Industry

Within the GA interface, you get a clear view of your traffic by industry. In the report below, Telecom seems strong (24.1% of traffic), while Education could use some love from your marketing team.

2- Engagement By Industry

You can also report on your KPIs by industry (e.g., note how “Education” is the number 2 industry in the report below).
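If you export visit-level data and want to rank industries on an engagement KPI outside the GA interface, a grouped average is all it takes. This sketch assumes rows of (industry, pageviews, seconds on site); the rows and the helper names are hypothetical.

```python
from collections import defaultdict


def engagement_by_industry(rows):
    """Aggregate per-visit rows of (industry, pageviews, seconds_on_site)."""
    totals = defaultdict(lambda: [0, 0, 0])  # [visits, pageviews, seconds]
    for industry, pageviews, seconds in rows:
        t = totals[industry]
        t[0] += 1
        t[1] += pageviews
        t[2] += seconds
    return {
        industry: {"visits": n, "pages_per_visit": pv / n, "avg_seconds": s / n}
        for industry, (n, pv, s) in totals.items()
    }


def rank_industries(report, kpi):
    """Industries ordered from best to worst on the chosen KPI."""
    return sorted(report, key=lambda industry: report[industry][kpi], reverse=True)


if __name__ == "__main__":
    # Made-up visits; in practice these would come from a GA export.
    visits = [
        ("Telecom", 5, 300), ("Telecom", 3, 120),
        ("Education", 8, 600), ("Education", 6, 420),
        ("Retail", 1, 10),
    ]
    report = engagement_by_industry(visits)
    print(rank_industries(report, "pages_per_visit"))  # ['Education', 'Telecom', 'Retail']
```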

3- Traffic & Engagement By Organization

The report below shows the platform’s ability to take segmentation a step further, highlighting specific organizations within an industry that visit the website (e.g., Yale University).

With firmographic data integrated into Google Analytics, you can optimize paid campaigns (Google AdWords, LinkedIn, banner ads, etc.) and pinpoint how many companies from a specified list visited your site, as well as which industries and what size companies the visits came from. You can then target paid campaigns at those visitors and channels, or increase efforts to reach untapped segments of your target audience.
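Checking a sales target list against the organizations that actually visited is the core of the account-based view described above. A minimal sketch, assuming you have the target list and a per-organization visit count (for example from an ‘Organization’ custom variable report); the function name and sample data are hypothetical, and matching is a naive case-insensitive name comparison.

```python
def match_target_accounts(target_accounts, visits_by_org):
    """Split a sales target list into accounts that visited and accounts that did not.

    target_accounts: list of organization names from sales (hypothetical input).
    visits_by_org: mapping of organization name -> visit count.
    """
    # Naive normalization: case- and whitespace-insensitive exact match;
    # counts for names that normalize to the same key are summed.
    seen = {}
    for org, count in visits_by_org.items():
        key = org.strip().lower()
        seen[key] = seen.get(key, 0) + count

    visited, not_visited = {}, []
    for account in target_accounts:
        count = seen.get(account.strip().lower(), 0)
        if count > 0:
            visited[account] = count
        else:
            not_visited.append(account)
    return visited, not_visited


if __name__ == "__main__":
    targets = ["Yale University", "Example Telecom Co.", "Example Software Inc."]
    visits = {"yale university": 12, "Example Telecom Co.": 3, "Other Corp": 7}
    hit, miss = match_target_accounts(targets, visits)
    print(f"{len(hit)} of {len(targets)} target accounts visited: {hit}")
    print("Candidates for extra outreach:", miss)
```

The `not_visited` list is the "untapped segment" that paid campaigns could be pointed at; real company names rarely match this cleanly, so fuzzy matching or a shared account ID would be needed in practice.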

Technical Considerations

Not a whole lot of considerations: Insightera makes it easy to plug and play. In your ‘Admin’ interface, select the Custom Variable slots for ‘Industry’ and ‘Organization’, and let the rich data flow. Double-check that the selected slots are empty and that you’re not already using them for something else in your Google Analytics implementation.
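The “double-check your slots” step is easy to get wrong on a site with an existing implementation. Below is a small, hypothetical pre-flight check you could run against an inventory of the custom variables your site already sets. It assumes the standard (non-Premium) range of slots 1 to 5; the slot assignments themselves are still made in Insightera’s Admin interface.

```python
from __future__ import annotations

# Assumption: standard (non-Premium) GA exposes custom variable slots 1-5.
MAX_SLOTS = 5


def check_slots(in_use: dict[int, str], requested: dict[str, int]) -> None:
    """Raise ValueError if any requested slot is invalid, taken, or requested twice.

    in_use: slot index -> name of the custom variable your site already sets there.
    requested: custom variable name (e.g. 'Industry') -> slot you plan to give it.
    """
    claimed: dict[int, str] = {}
    for name, slot in requested.items():
        if not 1 <= slot <= MAX_SLOTS:
            raise ValueError(f"{name}: slot {slot} is outside 1-{MAX_SLOTS}")
        if slot in in_use:
            raise ValueError(f"{name}: slot {slot} already holds '{in_use[slot]}'")
        if slot in claimed:
            raise ValueError(f"{name}: slot {slot} is also requested for '{claimed[slot]}'")
        claimed[slot] = name


if __name__ == "__main__":
    existing = {1: "Member Type"}  # hypothetical existing implementation
    check_slots(existing, {"Industry": 4, "Organization": 5})  # passes silently
    try:
        check_slots(existing, {"Industry": 1, "Organization": 2})
    except ValueError as err:
        print(err)  # Industry: slot 1 already holds 'Member Type'
```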

Content Personalization

Equipped with this new data, you can automate and personalize remarketing efforts and create targeted ads based on any given criteria. For example, an education-specific whitepaper can be presented to your higher-ed visitors, while hi-tech/software content is presented to your hi-tech/software visitors.
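The simplest form of this personalization is a rule table keyed on the Industry value. A minimal sketch, with hypothetical asset names and a default for visitors whose industry is unknown:

```python
from __future__ import annotations

# Hypothetical mapping from the 'Industry' value to the asset to feature.
CONTENT_BY_INDUSTRY = {
    "Education": "elearning-for-higher-ed-whitepaper",
    "Hi-Tech/Software": "elearning-for-software-teams-whitepaper",
    "Telecom": "elearning-for-telecom-whitepaper",
}
DEFAULT_CONTENT = "elearning-overview-whitepaper"


def pick_content(industry: str | None) -> str:
    """Return the asset to show; unknown or missing industries get the default."""
    if industry is None:
        return DEFAULT_CONTENT
    return CONTENT_BY_INDUSTRY.get(industry, DEFAULT_CONTENT)


if __name__ == "__main__":
    print(pick_content("Education"))  # elearning-for-higher-ed-whitepaper
    print(pick_content(None))         # elearning-overview-whitepaper
```

A fixed table is easy to audit and change by hand; it does not learn from results, which is where a recommendation engine comes in.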

Insightera’s recommendation engine filters visitors by location, industry, content preferences, CRM data and digital behavior patterns, then predicts which content or channel works best for each visitor.
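How Insightera’s engine makes its predictions isn’t described here, so the following is only an illustration of the general idea using a much simpler, swapped-in technique: a hand-weighted attribute-match score. The weights, fields and items are hypothetical, and CRM data is left out to keep it short.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical weights; a production engine would learn these from outcomes.
WEIGHTS = {"industry": 3.0, "location": 1.0, "topic": 2.0}


@dataclass
class ContentItem:
    title: str
    industries: set[str] = field(default_factory=set)
    locations: set[str] = field(default_factory=set)
    topics: set[str] = field(default_factory=set)


def score(item: ContentItem, visitor: dict) -> float:
    """Weighted count of attributes the visitor shares with the content item."""
    s = 0.0
    if visitor.get("industry") in item.industries:
        s += WEIGHTS["industry"]
    if visitor.get("location") in item.locations:
        s += WEIGHTS["location"]
    # Content preferences: add the topic weight once per topic the visitor already viewed.
    s += WEIGHTS["topic"] * len(item.topics & set(visitor.get("viewed_topics", [])))
    return s


def recommend(items: list[ContentItem], visitor: dict) -> ContentItem:
    """Highest-scoring item; ties go to the earlier item in the list."""
    return max(items, key=lambda item: score(item, visitor))


if __name__ == "__main__":
    items = [
        ContentItem("Higher-ed case study", {"Education"}, {"US"}, {"case-study"}),
        ContentItem("LMS integration guide", {"Education", "Hi-Tech/Software"}, set(), {"lms-integration"}),
    ]
    visitor = {"industry": "Education", "location": "US", "viewed_topics": ["lms-integration"]}
    print(recommend(items, visitor).title)  # LMS integration guide
```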

Increase the Value of Universal Analytics with more User Centricity

If you’re an early adopter of Universal Analytics or planning to migrate to Universal Analytics, Insightera will soon have you covered. The same method described above can be applied and firmographic data can be integrated into Custom Dimensions.
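In Universal Analytics, Custom Dimensions travel with each hit as `cd<index>` parameters. The sketch below only builds a Measurement Protocol (v1) pageview body to show where industry and organization values would go; it does not send anything. The tracking ID and client ID are placeholders, and dimension indexes 1 and 2 are assumptions that must match the dimensions defined in your property’s Admin settings.

```python
from urllib.parse import parse_qs, urlencode


def pageview_payload(tracking_id: str, client_id: str, page: str,
                     industry: str, organization: str,
                     industry_index: int = 1, org_index: int = 2) -> str:
    """Build a Universal Analytics Measurement Protocol pageview body.

    The dimension indexes are assumptions: they must match the Custom
    Dimensions you defined in the property's Admin settings.
    """
    params = {
        "v": "1",            # protocol version
        "tid": tracking_id,  # property ID, e.g. "UA-XXXXX-Y"
        "cid": client_id,    # anonymous client ID
        "t": "pageview",     # hit type
        "dp": page,          # document path
        f"cd{industry_index}": industry,
        f"cd{org_index}": organization,
    }
    return urlencode(params)


if __name__ == "__main__":
    body = pageview_payload("UA-XXXXX-Y", "555", "/pricing", "Education", "Yale University")
    # This sketch only builds the body; sending it would mean an HTTP POST
    # to https://www.google-analytics.com/collect.
    print(parse_qs(body)["cd2"])  # ['Yale University']
```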

With some additional customization, and if you are user-centric (and you should be), you can take your implementation up a notch and report on visitors, not just visits, across web, mobile and other devices. This applies where you have premium/gated content behind registration, user logins, or users who self-identify. In these cases, a user ID is associated with each authenticated visitor and stored in a Custom Dimension. You can then measure user behavior across multiple sessions and devices, and stitch together data from different sources, including Insightera as well as CRM systems (for example, by integrating GA with Salesforce).
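Once a user ID is stored with each authenticated session, stitching is essentially a group-by on that ID followed by a join with CRM records. A minimal sketch, assuming session rows exported with hypothetical `user_id`, `device` and `pageviews` fields and a CRM lookup keyed by the same user ID:

```python
from collections import defaultdict


def stitch_sessions(sessions, crm):
    """Group sessions by user ID and attach the matching CRM record.

    sessions: iterable of dicts with "user_id" (None if not authenticated),
              "device" and "pageviews" (hypothetical export format).
    crm: dict mapping user ID -> CRM record (dict).
    """
    users = defaultdict(lambda: {"sessions": 0, "pageviews": 0, "devices": set()})
    for s in sessions:
        uid = s.get("user_id")
        if uid is None:
            continue  # anonymous visits cannot be stitched to a person
        u = users[uid]
        u["sessions"] += 1
        u["pageviews"] += s["pageviews"]
        u["devices"].add(s["device"])
    return {uid: {**agg, "crm": crm.get(uid, {})} for uid, agg in users.items()}


if __name__ == "__main__":
    sessions = [
        {"user_id": "u1", "device": "desktop", "pageviews": 4},
        {"user_id": "u1", "device": "mobile", "pageviews": 2},
        {"user_id": None, "device": "desktop", "pageviews": 9},
    ]
    crm = {"u1": {"account": "Yale University", "stage": "Demo requested"}}
    print(stitch_sessions(sessions, crm))
```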

Conclusion

As advertising and remarketing efforts reach new levels of focus, site owners have the most relevant information to meet their needs thanks to account-based marketing. Combining the power of Google Analytics with the new scope of firmographic data allows a new level of Performance Analytics. This set of tools offers deeper analytic insights into who your potential customers are, what they do, where they come from and what they consume.

Posted by Feras Alhlou, Principal Consultant, E-Nor, a Google Analytics Authorized Premium Reseller