Our next generation of Flutter, built for web, mobile, and desktop

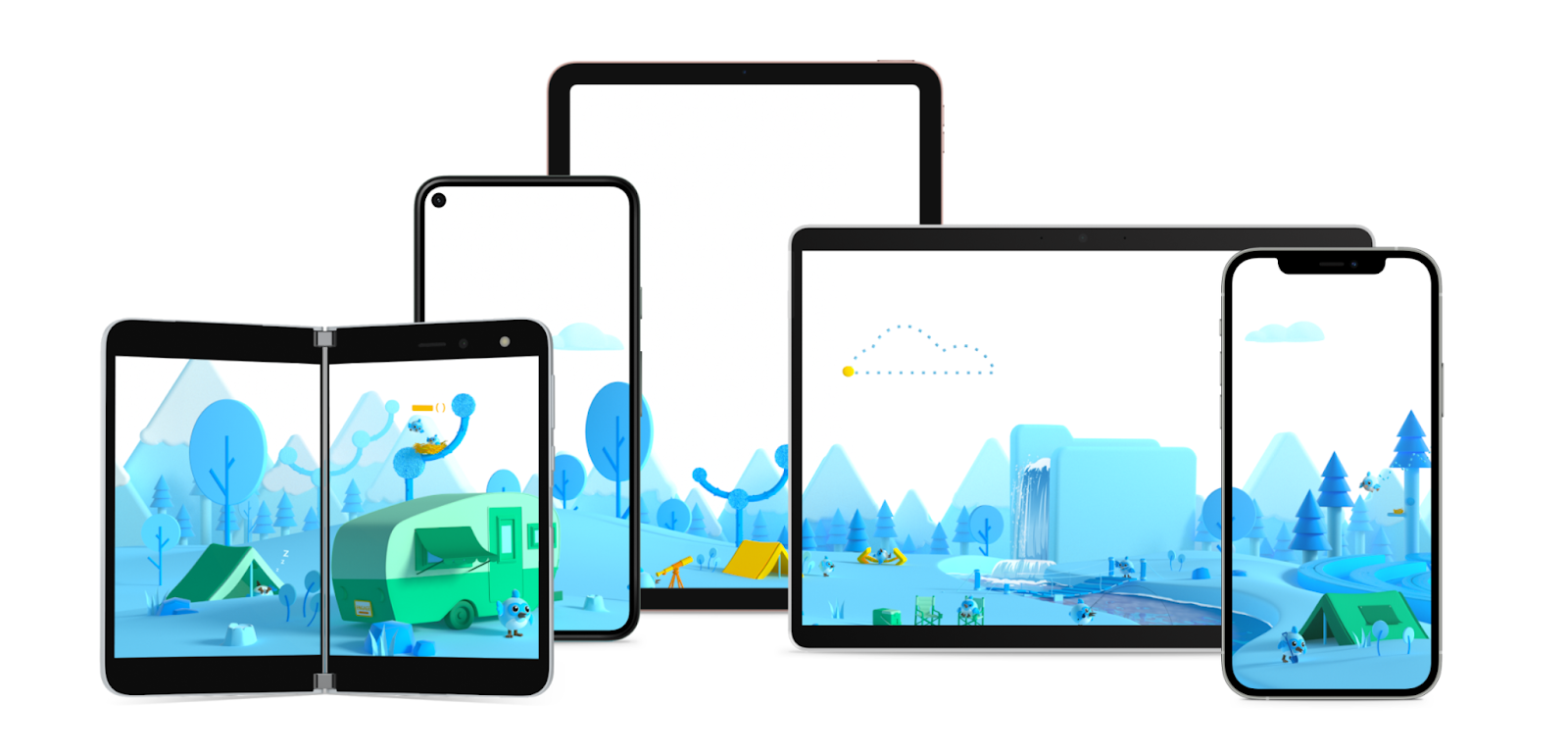

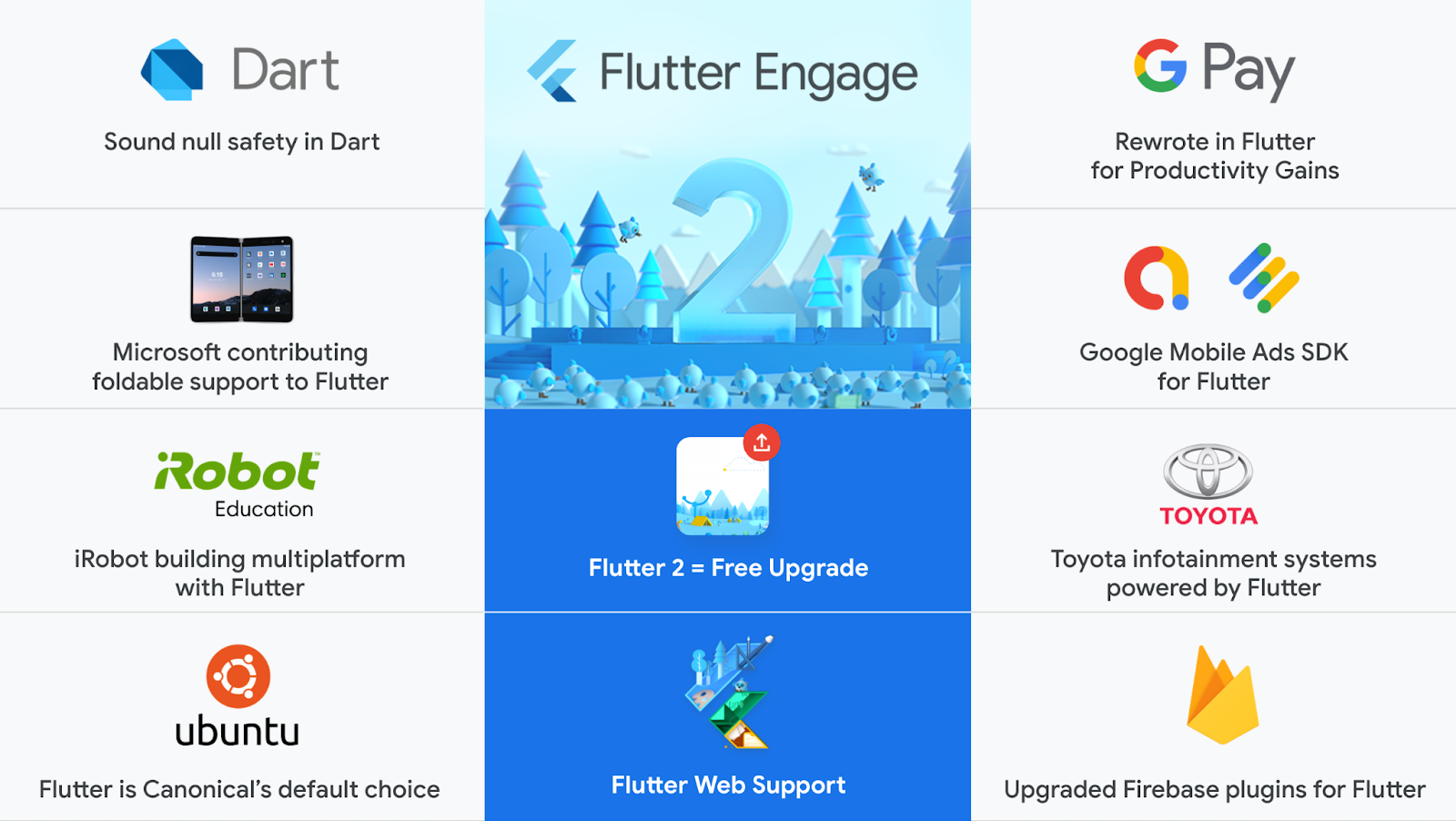

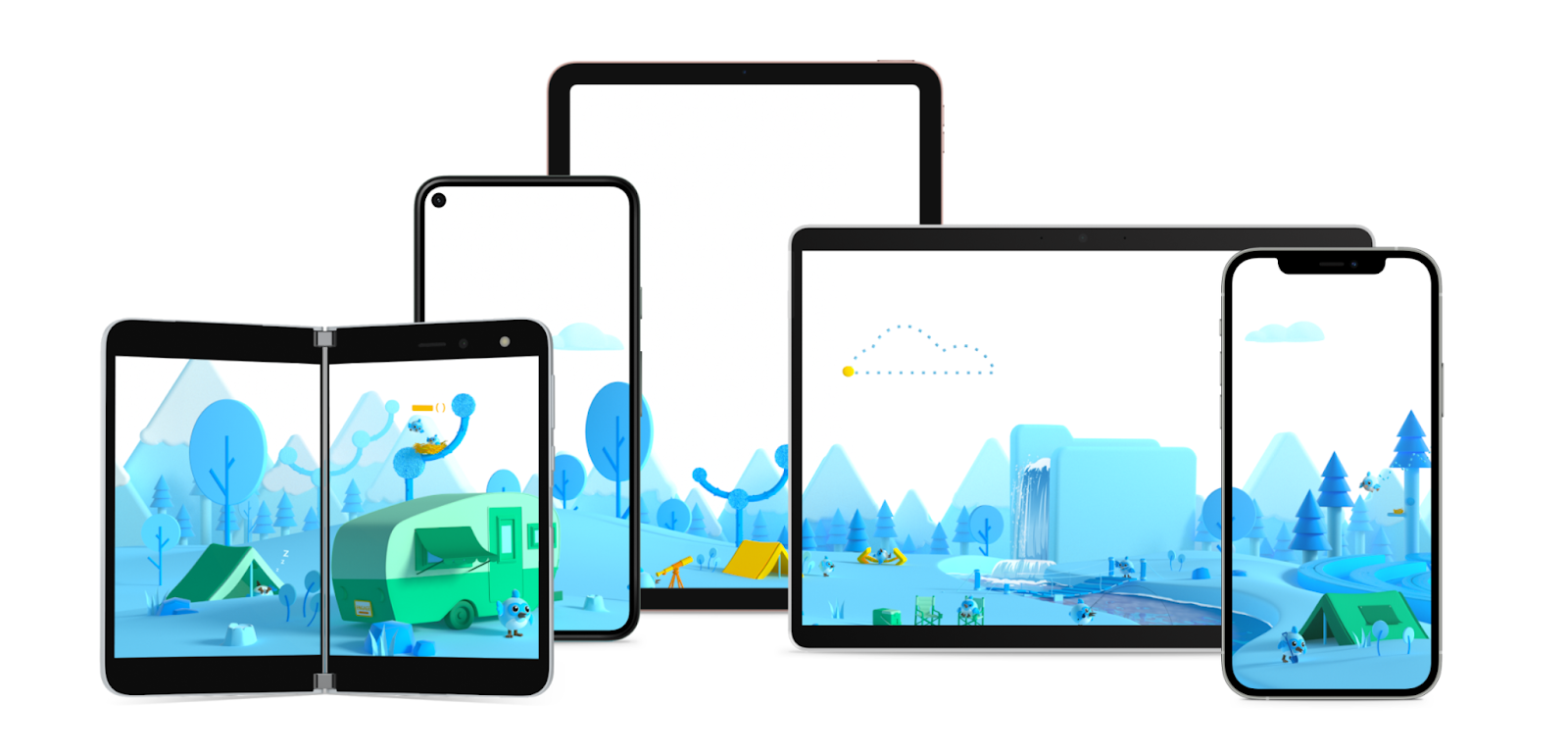

Today, we’re announcing Flutter 2: a major upgrade to Flutter that enables developers to create beautiful, fast, and portable apps for any platform. With Flutter 2, you can use the same codebase to ship native apps to five operating systems: iOS, Android, Windows, macOS, and Linux; as well as web experiences targeting browsers such as Chrome, Firefox, Safari, or Edge. Flutter can even be embedded in cars, TVs, and smart home appliances, providing the most pervasive and portable experience for an ambient computing world.

Our goal is to fundamentally shift how developers think about building apps, starting not with the platform you’re targeting but rather with the experience you want to create. Flutter allows you to handcraft beautiful experiences where your brand and design come to the forefront. Flutter is fast, compiling your source to machine code, but thanks to our support for stateful hot reload, you still get the productivity of interpreted environments, allowing you to make changes while your app is running and see the results immediately. And Flutter is open, with thousands of contributors adding to the core framework and extending it with an ecosystem of packages.

In Flutter 2, released today, we’ve broadened Flutter from a mobile framework to a portable framework, unleashing your apps to run on a wide variety of platforms with little or no change. There are already over 150,000 Flutter apps on the Play Store alone, and every one of them gets a free upgrade with Flutter 2 because it can now grow to target desktop and web without a rewrite.

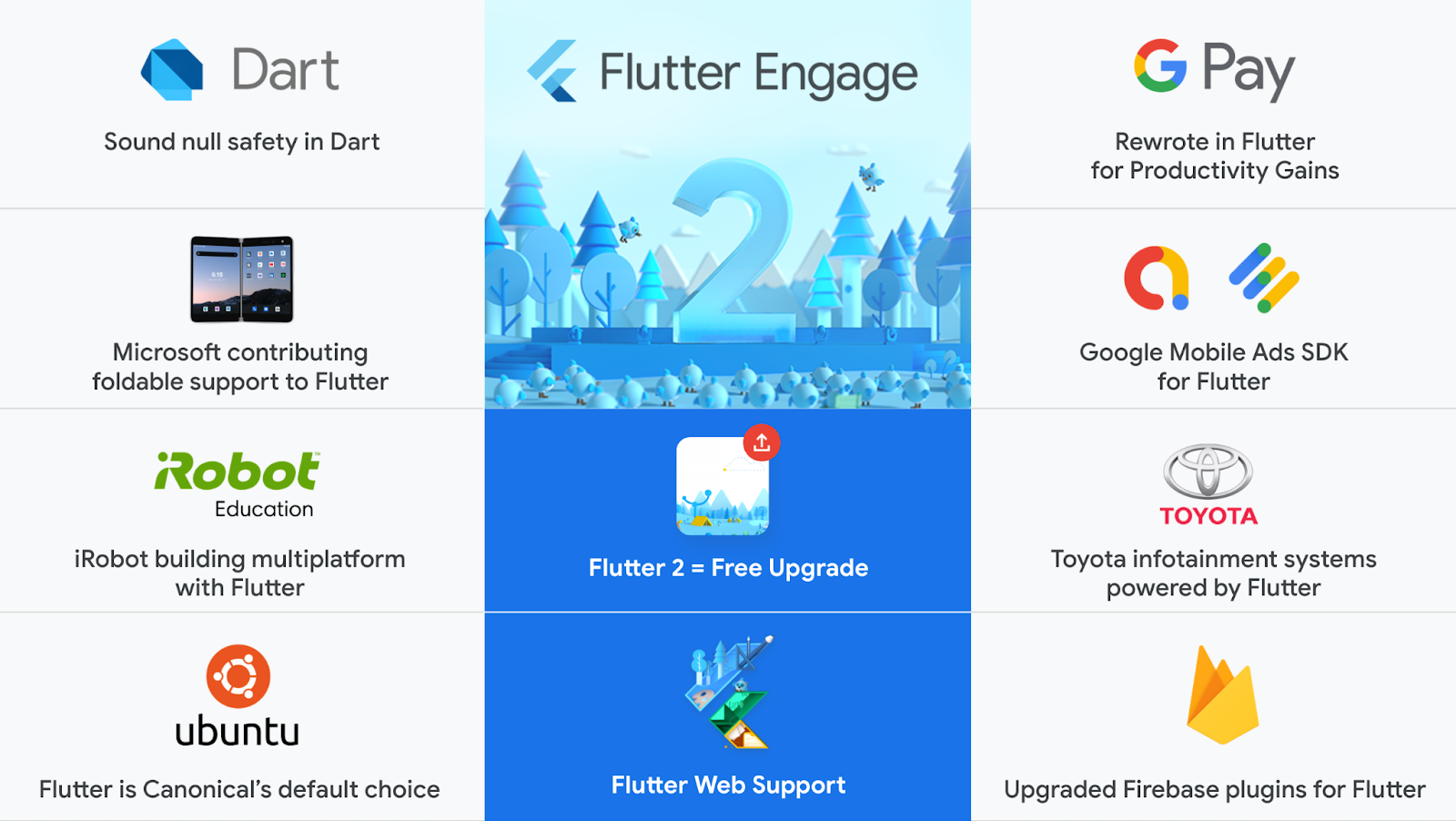

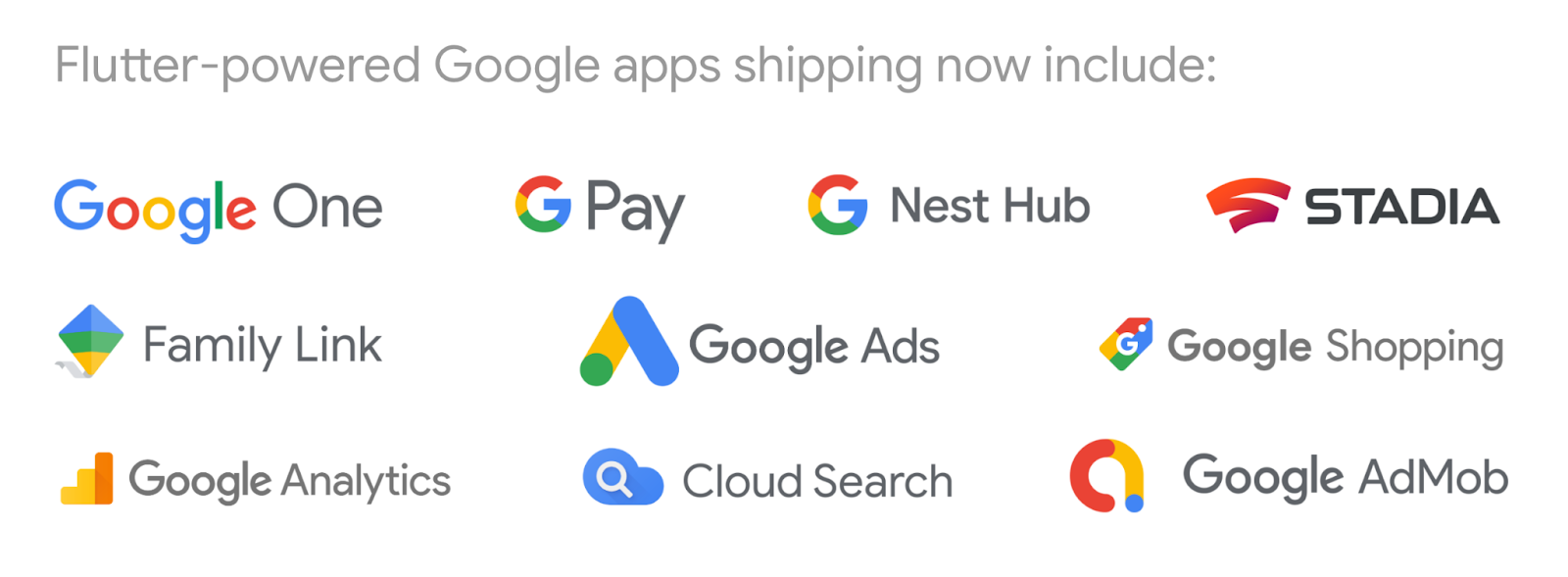

Customers from all around the world are using Flutter, including popular apps like WeChat, Grab, Yandex Go, Nubank, Sonos, Fastic, Betterment and realtor.com. Here at Google, we’re depending on Flutter, and over a thousand engineers at Google are building apps with Dart and Flutter. In fact, many of those products are already shipping, including Stadia, Google One, and the Google Nest Hub.

Google Pay switched to Flutter a few months ago for their flagship mobile app, and they have already achieved major gains in productivity and quality. By unifying the codebase, the team removed feature disparity between platforms and eliminated over half a million lines of code. Google Pay also reports that their engineers are far more efficient, with a huge reduction in technical debt and unified release processes such as security reviews and experimentation across both iOS and Android.

Flutter on the web

Perhaps the single largest announcement in Flutter 2 is production-quality support for the web.

The early foundation of the web was document-centric. But the web platform has evolved to encompass richer platform APIs that enable highly sophisticated apps with hardware-accelerated 2D and 3D graphics and flexible layout and paint APIs. Flutter’s web support builds on these innovations, offering an app-centric framework that takes full advantage of all that the modern web has to offer.

This initial release focuses on three app scenarios in particular:

- Progressive web apps (PWAs) that combine the web’s reach with the capabilities of a desktop app.

- Single page apps (SPAs) that load once and transmit data to and from internet services.

- Bringing existing Flutter mobile apps to the web, enabling shared code for both experiences.

Over the past several months, as we prepared for the stable release of web support, we made lots of progress on performance optimization, adding a new CanvasKit-powered rendering engine built with WebAssembly. Flutter Plasma, a demo built by community member Felix Blaschke, showcases the ease of building sophisticated web graphics experiences with Dart and Flutter that can also run natively on desktop or mobile devices.

We’ve been extending Flutter to offer the best of the web platform. In recent months, we added text autofill, control over address bar URLs and routing, and PWA manifests. And because desktop browsers are as important as mobile browsers, we added interactive scrollbars and keyboard shortcuts, increased the default content density in desktop modes, and added screen reader support for accessibility on Windows, macOS, and Chrome OS.

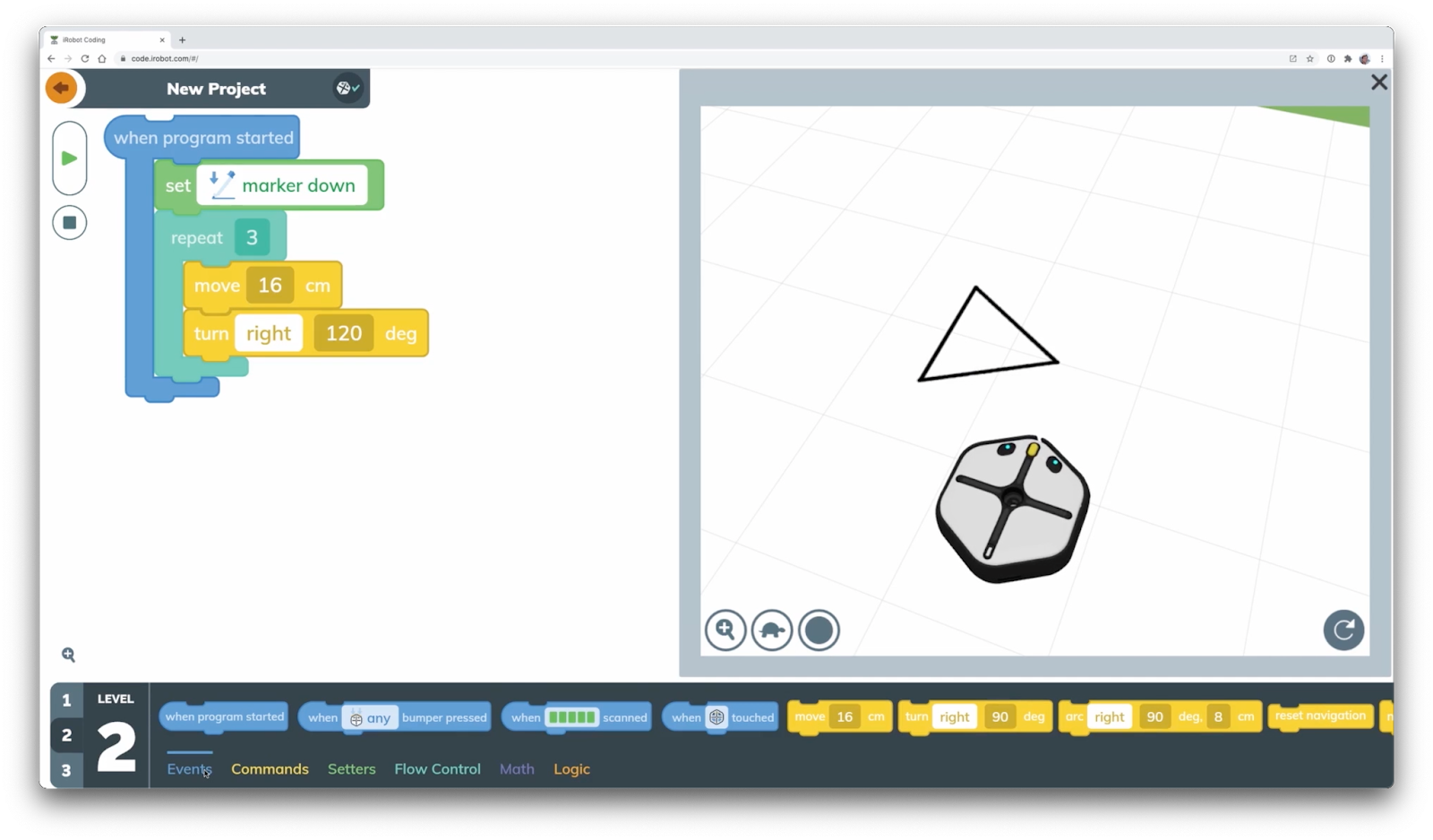

Some examples of web apps built with Flutter are already available. Among educators, iRobot is well known for their popular Root educational robots. Flutter’s production support for the web allows iRobot to take their existing educational programming environment and move it to the web, expanding its availability to Chromebooks and other devices where the browser is the best choice. iRobot’s blog post has all the details on their progress so far and why they chose Flutter.

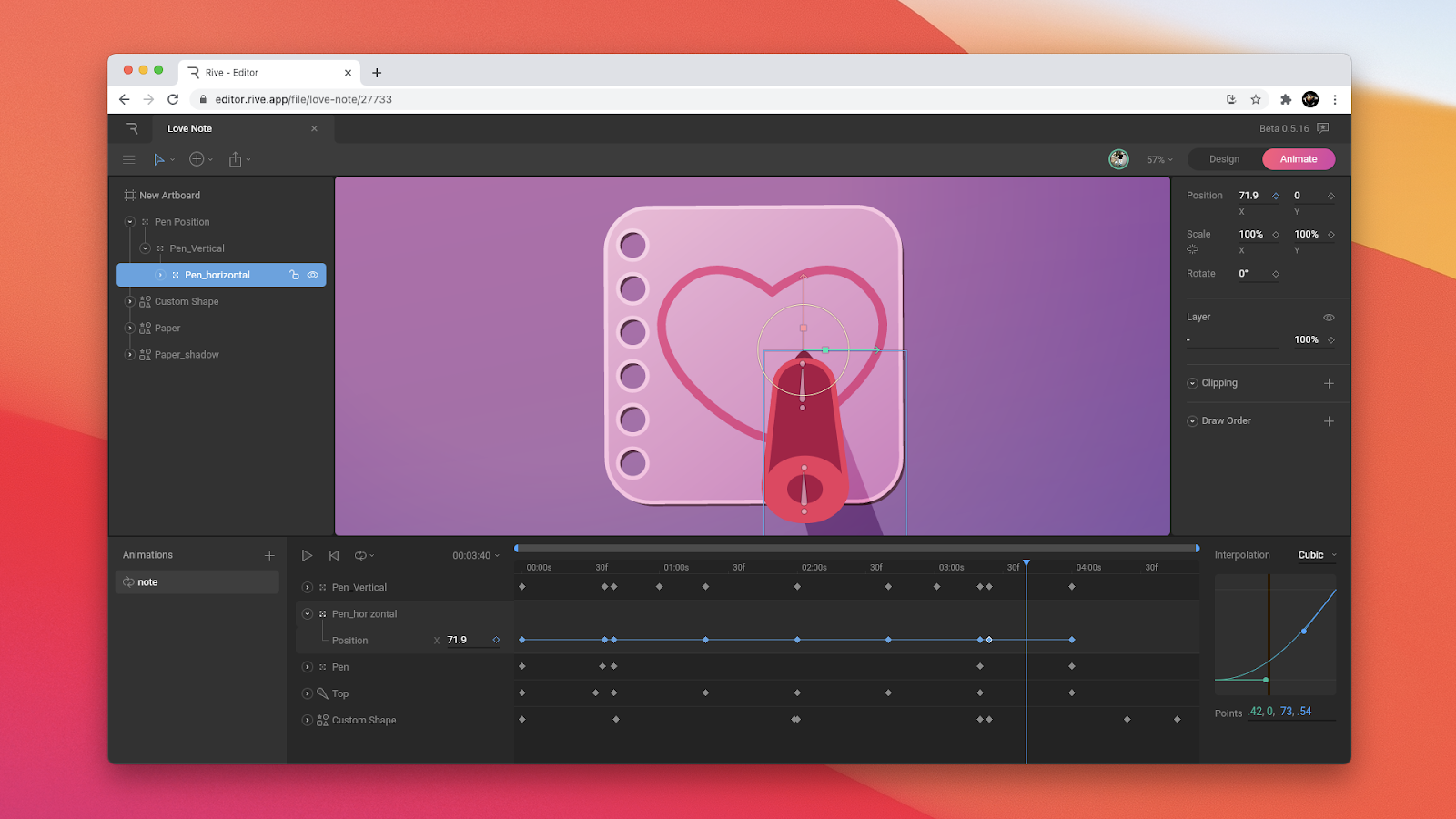

Another example is Rive, which offers designers a powerful tool for creating custom animations that can ship to any platform. Their updated web app, now available in beta, is built entirely with Flutter, and is a love letter to all that Flutter can offer in this environment.

You can find out more about Flutter on the web from our dedicated blog post over at our Medium publication.

Flutter 2 on desktops, foldables, and embedded devices

Beyond traditional mobile devices and the web, Flutter is increasingly stretching out to other device types, and we highlighted three partnerships in today’s keynote that demonstrate Flutter’s portability.

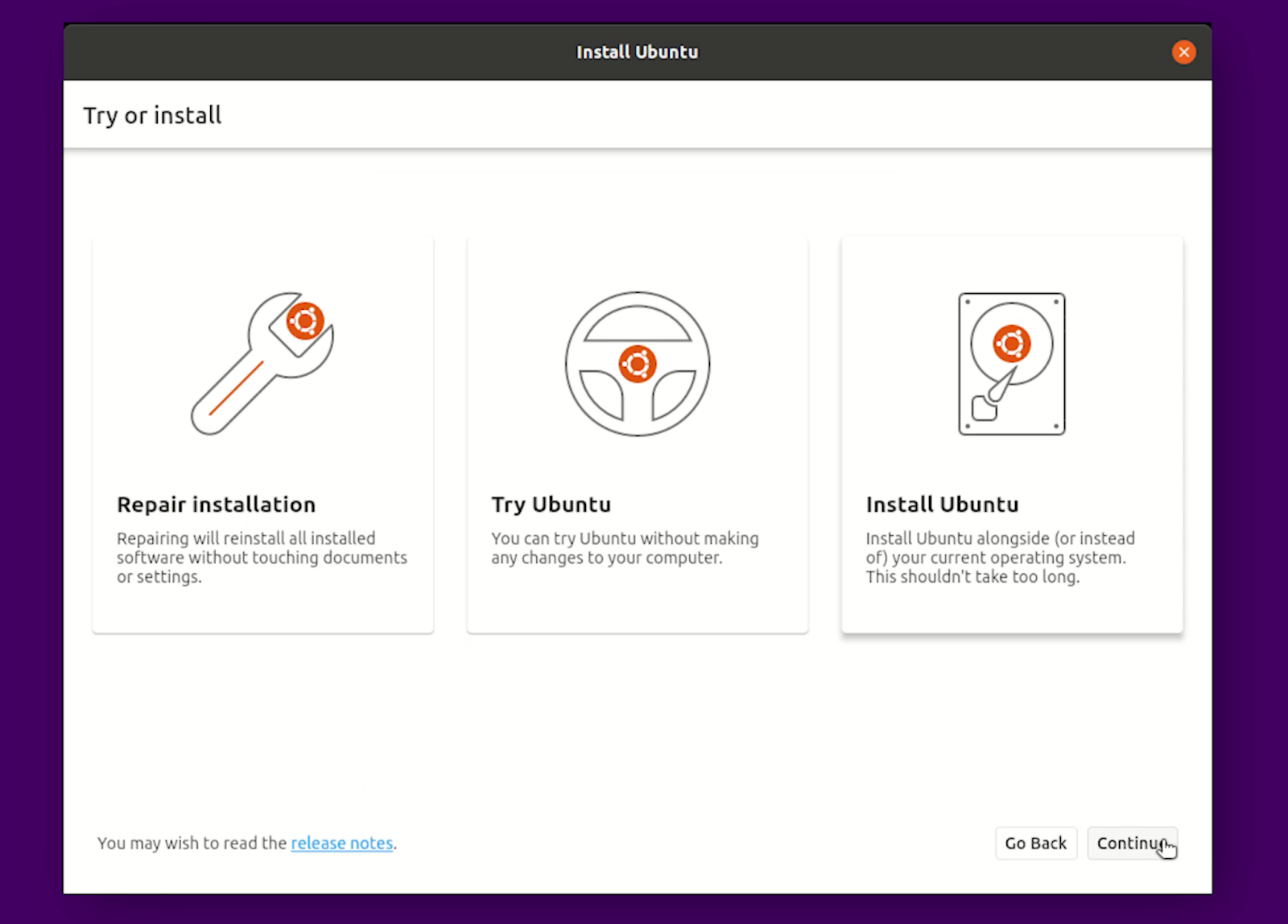

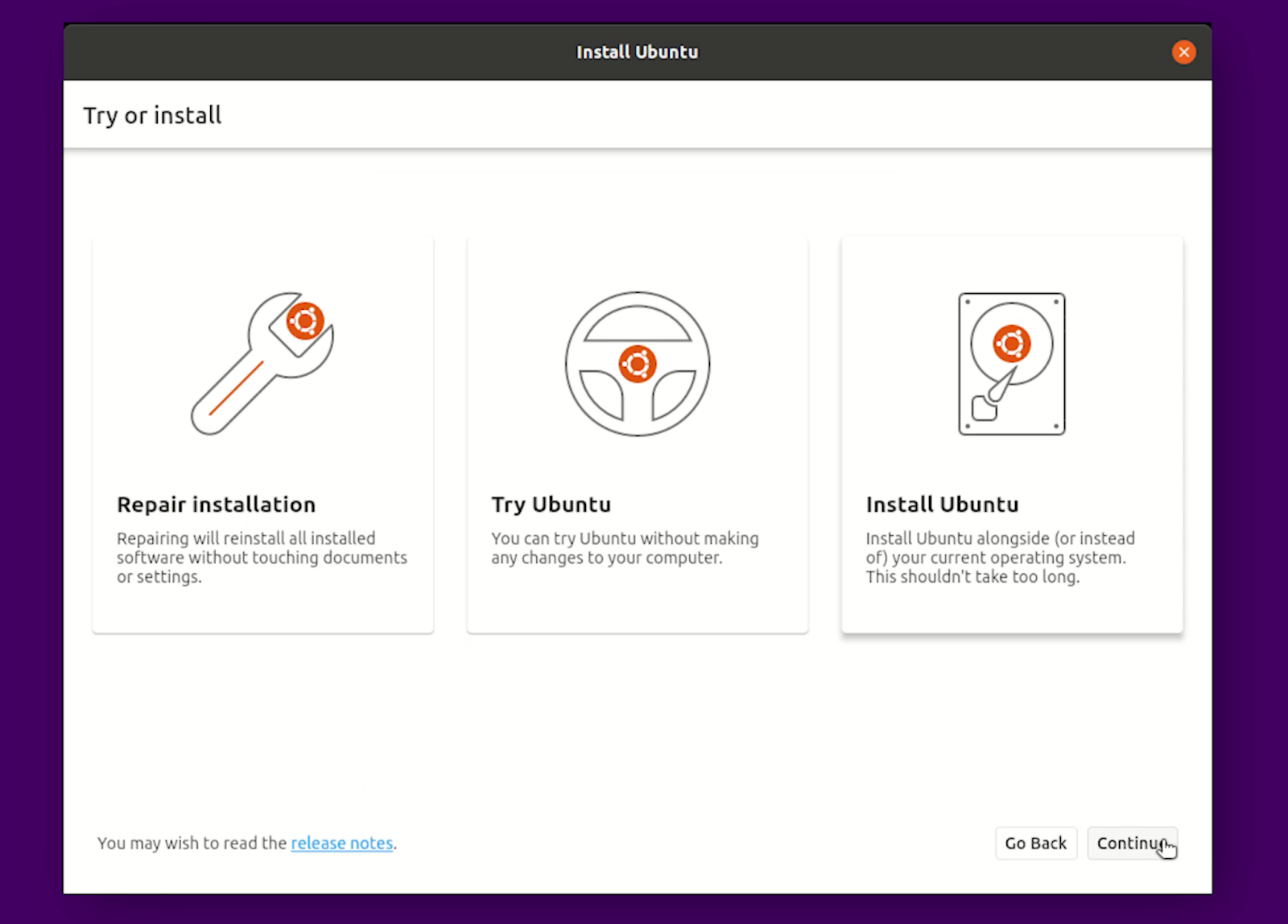

To start with, Canonical is partnering with us to bring Flutter to desktop, with engineers contributing code to support development and deployment on Linux. During today’s event, the Ubuntu team showed an early demo of their new installer app that was rewritten with Flutter. For Canonical, it is critical that they can deliver rock-solid yet beautiful experiences on a huge variety of hardware configurations. Moving forward, Flutter is the default choice for future desktop and mobile apps created by Canonical.

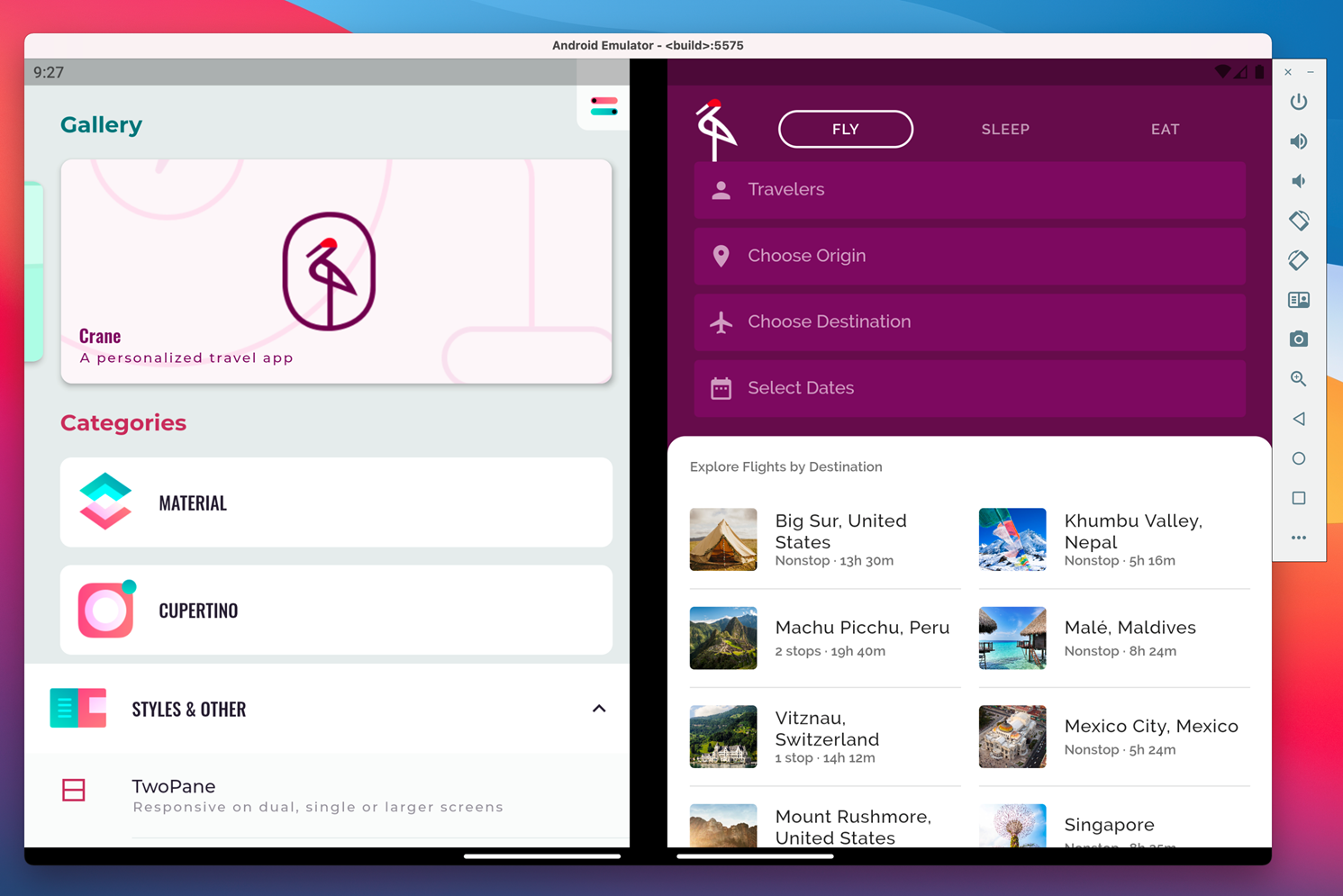

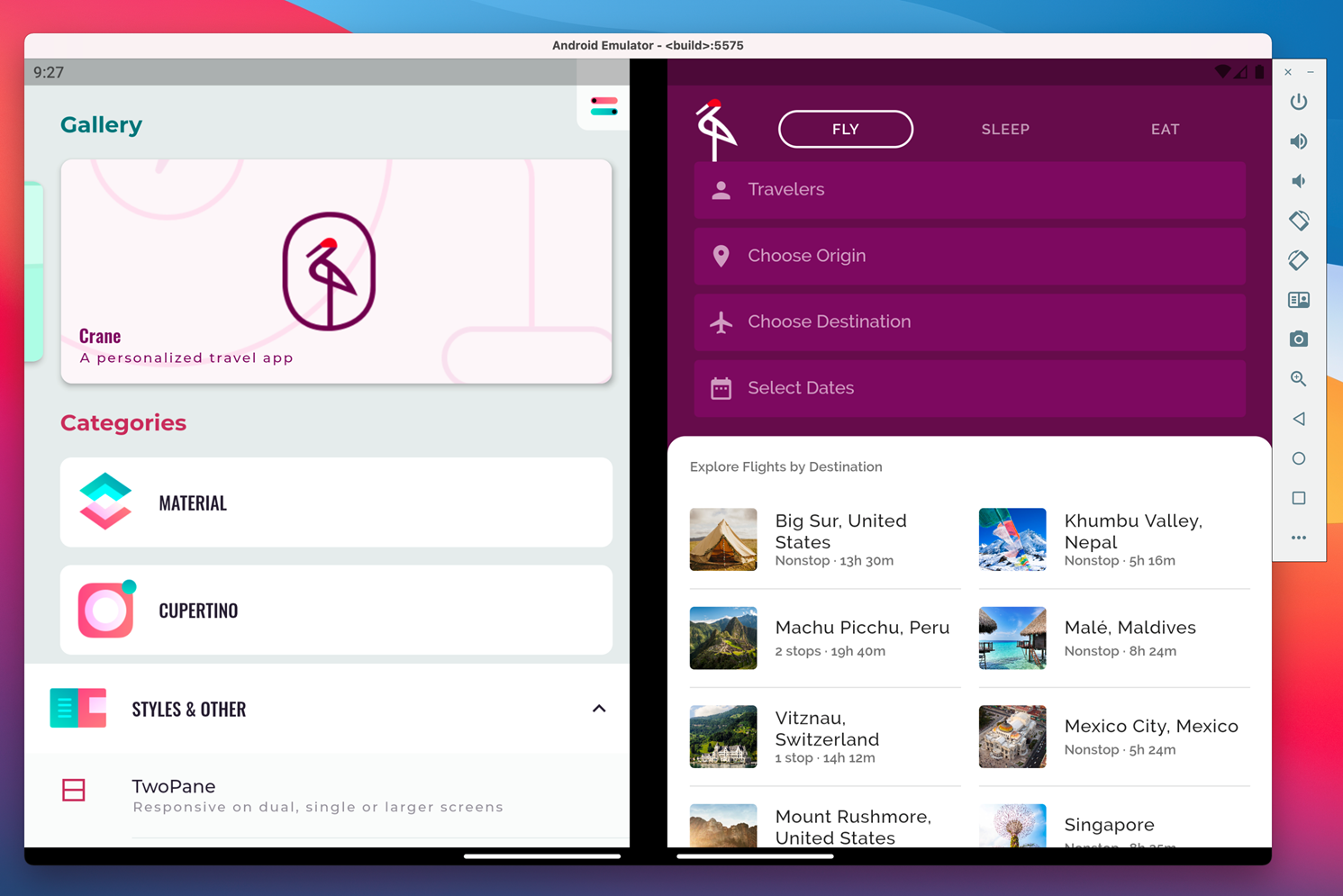

Secondly, Microsoft is continuing to expand its support for Flutter. In addition to an ongoing collaboration to offer high-quality Windows support in Flutter, today Microsoft is releasing contributions to the Flutter engine that support the emerging class of foldable Android devices. These devices introduce new design patterns, with apps that can either expand content or take advantage of the dual-screen nature to offer side-by-side experiences. In a blog post from the Surface engineering team, they demonstrate their work and invite others to join them in completing a high-quality implementation that works on Surface Duo and other devices.

Lastly, Toyota, the world’s best-selling automaker, announced its plans to bring a best-in-market digital experience to vehicles by building infotainment systems powered by Flutter. Using Flutter marks a large departure in approach from how in-vehicle software has been developed in the past. Toyota chose Flutter because of its high performance and consistency of experience, fast iteration and developer ergonomics, as well as smartphone-tier touch mechanics. By using Flutter’s embedder API, Toyota is able to tailor Flutter for the unique needs of an in-vehicle system.

We’re excited to continue our work with Toyota and others to bring Flutter to vehicles, TVs, and other embedded devices, and we hope to share further examples in the coming months.

The growing Flutter ecosystem

There are now over 15,000 packages for Flutter and Dart: from companies like Amazon, Microsoft, Adobe, Alibaba, eBay, and Square; to key packages like Lottie, Sentry and SVG, as well as Flutter Favorite packages such as sign_in_with_apple, google_fonts, geolocator, and sqflite.

Today we’re announcing the beta release of Google Mobile Ads for Flutter, a new SDK that works with AdMob and AdManager to offer a variety of ad formats, including banner, interstitial, native, and rewarded video ads. We’ve been piloting this SDK with several key customers, such as Sua Música, the largest music platform for independent artists in Latin America, and we’re now ready to open the Google Mobile Ads for Flutter SDK for broader adoption.

We’re also announcing updates to our Flutter plug-ins for several core Firebase services: Authentication, Cloud Firestore, Cloud Functions, Cloud Messaging, Cloud Storage, and Crashlytics, including support for sound null safety and an overhaul of the Cloud Messaging package.

Dart: The secret sauce behind Flutter

As we’ve noted, Flutter 2 is portable to many different platforms and form factors. The easy transition to supporting web, desktop, and embedded is thanks in large part to Dart, Google’s programming language that is optimized for multiplatform development.

Dart combines a unique set of capabilities for building apps:

- No-surprise portability, with compilers that generate high-performance Intel and ARM machine code for mobile and desktop, as well as tightly optimized JavaScript output for the web. The same Flutter framework source code compiles to all these targets.

- Iterative development with stateful hot reload on desktop and mobile, as well as language constructs designed for the asynchronous, concurrent patterns of modern UI programming.

- Google-class performance across all of these platforms, with sound null safety guaranteeing null constraints at runtime as well as during development.

There’s no other language that combines all these capabilities; perhaps this is why Dart is one of the fastest growing languages on GitHub.
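As a rough illustration of the asynchronous language constructs mentioned in the list above, here is a minimal sketch using `async` and `await`. The function name `fetchGreeting` and the simulated delay are assumptions made for this example; they stand in for real work such as a network request.

```dart
/// Hypothetical helper: simulates a slow operation (for example, a network
/// call) and completes with a greeting once it finishes.
Future<String> fetchGreeting() async {
  // Suspend this function for 100 ms without blocking the UI thread.
  await Future<void>.delayed(const Duration(milliseconds: 100));
  return 'Hello from Flutter 2';
}

Future<void> main() async {
  // `await` pauses main() until the future completes, then resumes with
  // the result, so asynchronous code reads like sequential code.
  final greeting = await fetchGreeting();
  print(greeting);
}
```

In a Flutter app, the same pattern lets a widget start a long-running operation and keep rendering frames while it waits for the result.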

Dart 2.12, available today, is our largest release since 2.0, with support for sound null safety. Sound null safety has the potential to eradicate dreaded null reference exceptions, offering guarantees at both development time and runtime that types can only contain null values if the developer expressly chooses. Best of all, this feature isn’t a breaking change: you can incrementally add it to your code at your own pace, with migration tooling available to help you when you’re ready.
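To make the idea concrete, here is a small sketch of what opting in to nullability looks like. It assumes Dart 2.12 or later with null safety enabled; the function `nameLength` and the variable names are hypothetical and exist only for this example.

```dart
/// Hypothetical helper: returns the length of [name], treating a missing
/// name as empty. The `?` in `String?` is how the developer expressly
/// allows null for this parameter.
int nameLength(String? name) {
  // Writing `name.length` directly would be rejected by the analyzer
  // because `name` may be null. The null-aware operators make the
  // fallback explicit instead.
  return name?.length ?? 0;
}

void main() {
  String title = 'Flutter 2'; // Non-nullable: can never hold null.
  String? subtitle; // Nullable by choice; starts out as null.

  print(nameLength(title)); // 9
  print(nameLength(subtitle)); // 0

  // title = null; // Compile-time error if uncommented.
}
```

The key design point is that non-nullable is the default: a plain `String` cannot hold null, so the null check moves from a runtime crash to an error reported while you edit.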

Today’s update also includes a stable implementation of FFI, allowing you to write high-performance code that interoperates with C-based APIs; new integrated developer and profiler tooling written with Flutter; and a number of performance and size improvements that further upgrade your code for no cost other than a recompile. For more information, check out the dedicated Dart 2.12 announcement blog post.
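As a hedged sketch of what calling a C API through FFI can look like, the example below looks up the standard C function `abs` using `dart:ffi`. It assumes a Linux or macOS host where the C library's symbols are visible in the running process; the wrapper name `nativeAbs` and the typedef names are made up for this illustration.

```dart
import 'dart:ffi';

// C signature: int abs(int). Assumes a platform where C `int` is 32 bits.
typedef AbsNative = Int32 Function(Int32);
// The matching Dart-side signature.
typedef AbsDart = int Function(int);

/// Hypothetical wrapper that calls the C library's `abs` through FFI.
int nativeAbs(int value) {
  // Assumption: symbols from the C library are already loaded into the
  // current process, which is typically the case on Linux and macOS.
  final DynamicLibrary process = DynamicLibrary.process();
  final AbsDart abs = process.lookupFunction<AbsNative, AbsDart>('abs');
  return abs(value);
}

void main() {
  print(nativeAbs(-42)); // 42
}
```

For a library of your own, you would typically open it with `DynamicLibrary.open` and a platform-specific file name instead of relying on symbols already present in the process.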

Flutter 2: Available now

There’s far more to say about Flutter 2 than we can include in this article. In fact, the raw list of pull requests merged is a 200-page document! Head over to the separate technical blog on Flutter 2 for more information about the many new features and performance improvements that we think will please existing Flutter developers, and download Flutter 2 today.

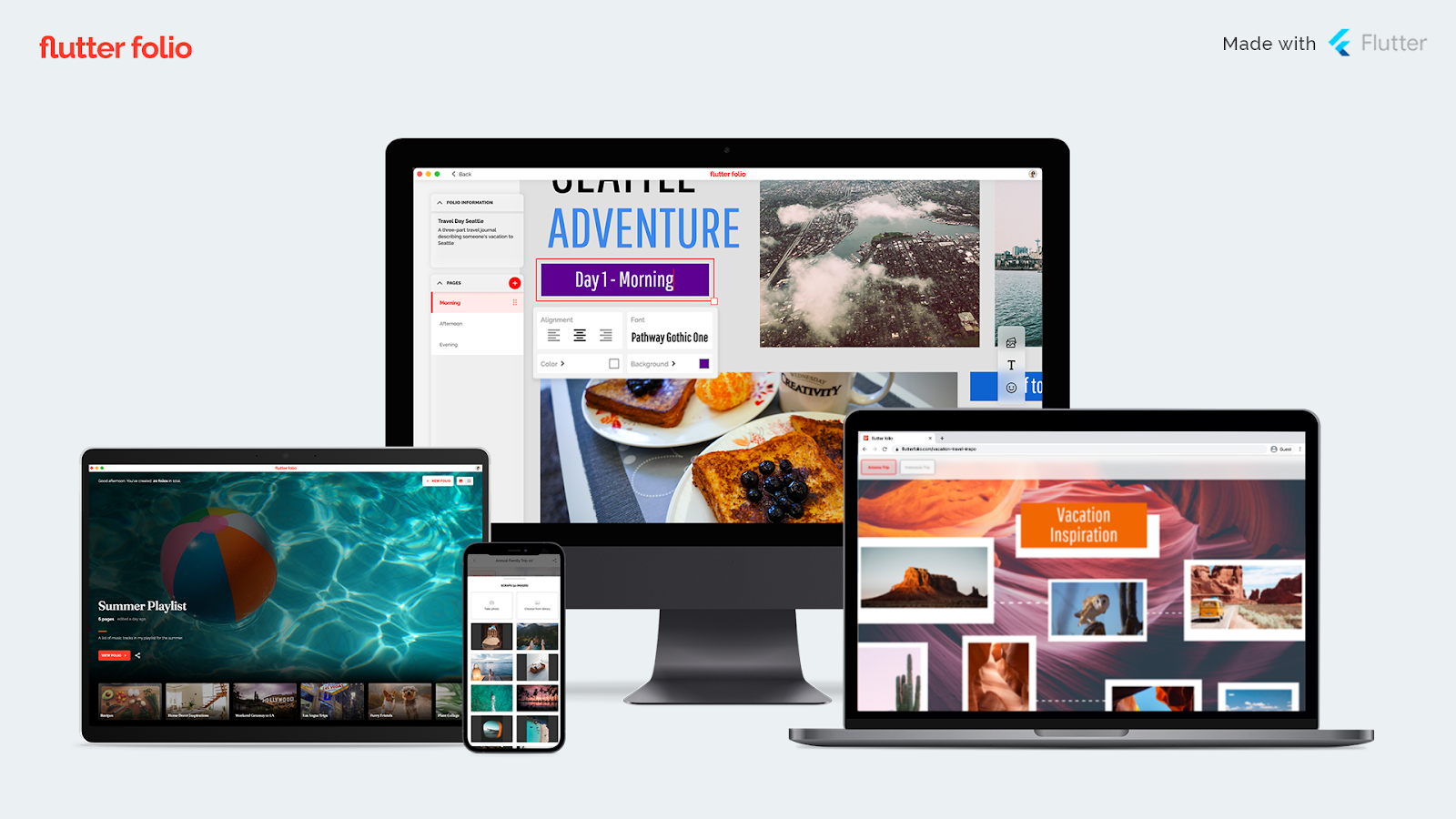

We also have a major new sample that showcases everything we just mentioned, built in collaboration with gskinner, an award-winning design team based in Edmonton, Canada. Flutter Folio is a scrapbooking app that is designed for all your devices. The small-screen experience is designed for capturing content; larger screens support editing with desktop- and tablet-specific idioms; and the web experience is tailored for sharing. All these tailored experiences share the same codebase, which is open source and available for you to peruse.

If you haven’t yet tried Flutter, we think you’ll find it to be a major upgrade for your app development experience. In Flutter, we’re offering an open source toolkit for building beautiful and fast apps that target mobile, desktop, web, and embedded devices from a single codebase, built to solve both Google’s demanding needs and those of our customers.

Flutter is free and open source. We’re excited to see what you build with Flutter 2!