To address these needs, a growing number of successful gaming companies use Google’s web-scale analytics services to create personalized experiences for their players. They use telemetry and smart instrumentation to gain insight into how players engage with the game and to answer questions like: At what game level are players stuck? What virtual goods did they buy? And what's the best way to tailor the game to appeal to both casual and hardcore players?

A new reference architecture describes how you can collect, archive and analyze vast amounts of gaming telemetry data using Google Cloud Platform’s data analytics products. The architecture demonstrates two patterns for analyzing mobile game events:

- Batch processing: This pattern helps you process game logs and other large files quickly and in parallel. For example, leading mobile gaming company DeNA moved from Hadoop to BigQuery to get faster query responses for its log file analytics pipeline. In this GDC Lightning Talk video, the team explains the speed benefits of Google’s analytics tools and how it was able to process large gaming datasets without managing any infrastructure.

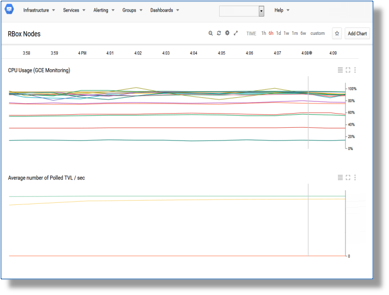

- Real-time processing: Use this pattern when you want to understand what's happening in the game right now. Cloud Pub/Sub and Cloud Dataflow provide a fully managed way to perform data-processing tasks such as data cleansing and fraud detection in real time. For example, you can flag a player whose maximum hit points fall outside the valid range. Real-time processing is also a great way to continuously update dashboards of key game metrics, like how many active users are currently logged in or which in-game items are most popular.
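
To make the hit-point check concrete, here is a minimal sketch in plain Python. It is not Cloud Dataflow or Cloud Pub/Sub code; the `PlayerEvent` type, the field names, and the 0 to 1000 valid range are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator

# Assumed valid range, for illustration only; a real game defines its own limits.
MIN_HIT_POINTS = 0
MAX_HIT_POINTS = 1000


@dataclass(frozen=True)
class PlayerEvent:
    """Hypothetical telemetry record; the field names are assumptions."""
    player_id: str
    max_hit_points: int


def is_suspicious(event: PlayerEvent) -> bool:
    """Return True when the reported maximum hit points are outside the valid range."""
    return not (MIN_HIT_POINTS <= event.max_hit_points <= MAX_HIT_POINTS)


def flag_suspicious(events: Iterable[PlayerEvent]) -> Iterator[PlayerEvent]:
    """Lazily yield only the events that fail the range check."""
    return (event for event in events if is_suspicious(event))


events = [
    PlayerEvent("player-1", 250),
    PlayerEvent("player-2", 999999),
    PlayerEvent("player-3", -5),
]
flagged = [event.player_id for event in flag_suspicious(events)]
print(flagged)  # ['player-2', 'player-3']
```

Because `flag_suspicious` consumes any iterable lazily, the same check could in principle sit behind a log file reader or a live message feed.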

But why choose between the two patterns? A key benefit of this architecture is that you can write your data-processing pipeline once and execute it in either batch or streaming mode without modifying your codebase. So if you start by processing your logs in batch mode, you can easily move to real-time processing in the future. This is an advantage of the high-level Cloud Dataflow model that was released as open source by Google.
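
The "write once, run in either mode" idea can be sketched in plain Python. This is only an analogy, not the Cloud Dataflow SDK: the real model also covers concerns such as windowing and late data that this sketch ignores. The `player_id,item` log format and the function names are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator


def parse_event(line: str) -> Dict[str, str]:
    """Parse a hypothetical 'player_id,item' log line."""
    player_id, item = line.strip().split(",")
    return {"player_id": player_id, "item": item}


def item_counts(lines: Iterable[str]) -> Iterator[Counter]:
    """Yield a snapshot of the running item-purchase counts after each event."""
    counts: Counter = Counter()
    for line in lines:
        counts[parse_event(line)["item"]] += 1
        yield counts.copy()


# Batch mode: a bounded collection, such as the lines of an archived log file.
log_file = ["p1,sword", "p2,shield", "p3,sword"]
batch_result = list(item_counts(log_file))[-1]


# Streaming mode: a generator standing in for a live feed of events.
def live_events() -> Iterator[str]:
    yield "p1,sword"
    yield "p2,shield"
    yield "p3,sword"


stream_result = None
for snapshot in item_counts(live_events()):
    stream_result = snapshot  # a dashboard could refresh on every snapshot

print(batch_result == stream_result)  # True
```

The pipeline logic in `item_counts` is identical in both cases; only the source changes, which is the property the paragraph above describes.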

Cloud Dataflow loads the processed data into one or more BigQuery tables. BigQuery is built for very large scale and lets you run aggregation queries against petabyte-scale datasets with fast response times. This is great for interactive analysis and data exploration, like the example screenshot above, where a simple BigQuery SQL query dynamically creates a Daily Active Users (DAU) graph using Google Cloud Datalab.
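
As a rough illustration of what a DAU aggregation looks like, the sketch below uses Python's built-in `sqlite3` module as a local stand-in for BigQuery. The `game_events` table, its columns, and the sample rows are hypothetical, and the query is not the one behind the screenshot.

```python
import sqlite3

# In-memory SQLite database as a local stand-in for a BigQuery table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE game_events (event_date TEXT, player_id TEXT)")
conn.executemany(
    "INSERT INTO game_events VALUES (?, ?)",
    [
        ("2016-03-01", "p1"),
        ("2016-03-01", "p2"),
        ("2016-03-01", "p1"),  # same player again; counted once for the day
        ("2016-03-02", "p1"),
    ],
)

# Daily Active Users: distinct players seen on each day.
dau_query = """
    SELECT event_date, COUNT(DISTINCT player_id) AS daily_active_users
    FROM game_events
    GROUP BY event_date
    ORDER BY event_date
"""
dau = conn.execute(dau_query).fetchall()
print(dau)  # [('2016-03-01', 2), ('2016-03-02', 1)]
```

The resulting `(date, count)` pairs are the kind of output a notebook tool would plot as a DAU graph.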

And what about player engagement and in-game dynamics? The BigQuery example above shows a bar chart of the ten toughest game bosses. It looks like boss10 killed players more than 75% of the time, much more often than the next toughest boss. Perhaps it would make sense to lower the strength of this boss? Or maybe give the player some more powerful weapons? The choice is yours, but with this reference architecture you'll see the results of your changes straight away.

Review the new reference architecture to jump-start your data-driven quest to engage your players and make your games more successful, contact us, or sign up for a free trial of Google Cloud Platform to get started.

Further Reading and Additional Resources

- Build a mobile gaming analytics platform - a reference architecture

- The world beyond batch: Streaming 101 - A high-level tour of modern data-processing concepts

- Google Dataflow: A Unified Model for Batch and Streaming Data Processing

- Processing Logs at Scale Using Cloud Dataflow

Posted by Oyvind Roti, Solutions Architect