Posted by Swathi Dharshna Subbaraj - Project Coordinator, Google Dev Library

Android offers developers a rich set of tools and SDKs/APIs for building innovative and engaging mobile apps. Developers can create applications for a large and growing user base of over 2.5 billion devices worldwide.

Google Dev Library curates open-source Android libraries created and contributed by developers from around the world. You can draw on its wide range of code samples, GitHub repos, and libraries, covering everything from Compose, networking, and data storage to user interface design and image processing, to build your own Android apps!

In this blog, we are sharing 7 popular projects by Android contributors. These are some of the most viewed projects on the platform, and we hope they give you a sneak peek into the type of interesting and innovative projects found there. Let's dive into the list:

Coil by Colin White

Image loading for Android backed by Kotlin Coroutines

Coil is designed to be lightweight, efficient, and easy to use, and it offers features such as automatic image caching and support for various image formats. It is an alternative to established image loading libraries like Glide and Picasso. If you are working on an Android app and need a reliable way to load and display images, this repository is definitely worth checking out!
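To make the idea of automatic image caching concrete, here is a minimal sketch of a least-recently-used (LRU) in-memory cache in plain Java. It is not Coil's implementation; the class name `LruImageCache` and its methods are hypothetical, and a real image cache would usually bound memory by byte size rather than entry count.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical in-memory cache that evicts the least recently used entry. Not Coil's API. */
class LruImageCache<K, V> {
    private final int maxEntries;
    private final LinkedHashMap<K, V> entries;

    LruImageCache(int maxEntries) {
        if (maxEntries <= 0) {
            throw new IllegalArgumentException("maxEntries must be positive");
        }
        this.maxEntries = maxEntries;
        // accessOrder = true orders entries from least to most recently used.
        this.entries = new LinkedHashMap<K, V>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                // Called after each insertion; drop the eldest entry once over capacity.
                return size() > LruImageCache.this.maxEntries;
            }
        };
    }

    /** Returns the cached value, or null on a cache miss. A hit marks the entry as recently used. */
    synchronized V get(K key) {
        return entries.get(key);
    }

    /** Stores a value, evicting the least recently used entry if the cache is full. */
    synchronized void put(K key, V value) {
        entries.put(key, value);
    }

    synchronized int size() {
        return entries.size();
    }
}
```

With a capacity of two, putting `a.png` and `b.png`, reading `a.png`, and then putting `c.png` evicts `b.png`, because it is the entry that was used least recently.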

LitePal by Lin Guo

An Android library that makes it extremely easy for developers to use SQLite databases

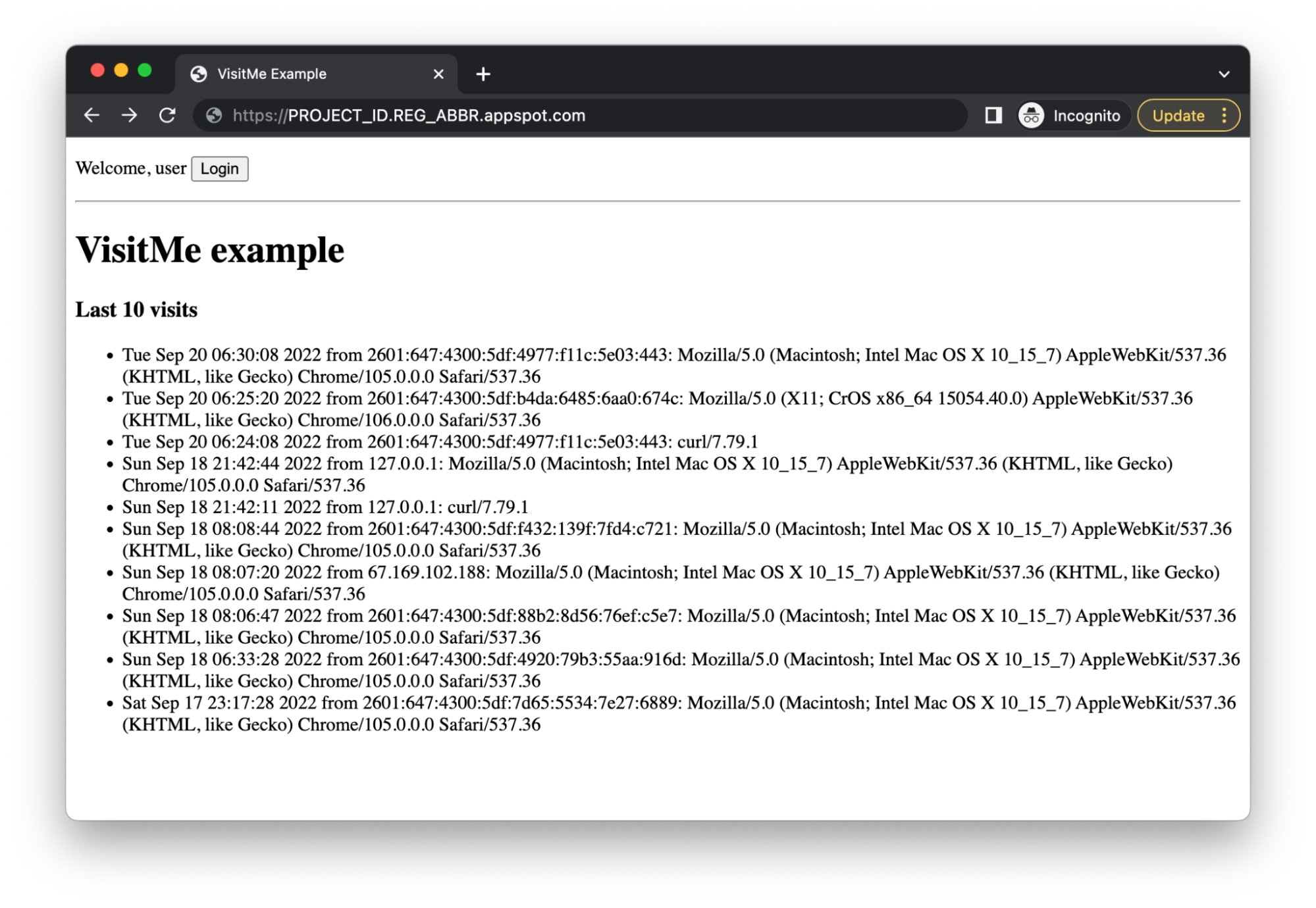

If you’re looking to streamline database management in your app, LitePal is an open-source Android library that simplifies working with SQLite.

Tivi, a TV show tracking app, showcases modern development practices, including the use of Android Jetpack and other libraries. It is a helpful project for developers who want to learn more about interesting and fun practices in Android development.

Showkase helps you organize, discover, search and visualize Jetpack Compose UI elements. With minimal configuration it generates a UI browser that helps you easily find your components, colors & typography.

Pokedex demonstrates modern Android development with Hilt, Coroutines, Flow, Jetpack (Room, ViewModel), and Material Design based on MVVM architecture. The repository includes the app's layout, features, and functionality, as well as documentation on how to implement them.

If you are looking to learn or improve your knowledge of Jetpack Compose, Learn-Jetpack-Compose-By-Example contains a collection of example code and accompanying explanations for various components and features of Jetpack Compose. This repository aims to show the Jetpack Compose way of building common Android UI that we are accustomed to building.

The author, Shreyas Patil, goes into detail about how to use the MaterialDialog library and provides code examples to demonstrate its capabilities. The library allows developers to easily create dialogs with a variety of customization options, such as adding buttons, selecting the theme, and setting the title and content. Overall, the MaterialDialog library is a useful tool for Android developers looking to implement Material Design in their apps.
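Dialog libraries commonly expose these kinds of options through a builder. The sketch below shows that general pattern in plain, framework-free Java. It is not the MaterialDialog library's API: `DialogSpec`, `Theme`, and every method name are hypothetical, chosen only to mirror the options described above (title, content, theme, buttons).

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical, framework-free description of a dialog. Not the MaterialDialog API. */
final class DialogSpec {
    enum Theme { LIGHT, DARK }

    final String title;
    final String content;
    final Theme theme;
    final List<String> buttons;

    private DialogSpec(Builder builder) {
        this.title = builder.title;
        this.content = builder.content;
        this.theme = builder.theme;
        // Copy the list so later changes to the builder cannot alter a built dialog.
        this.buttons = Collections.unmodifiableList(new ArrayList<>(builder.buttons));
    }

    /** Collects options step by step; each setter returns the builder so calls can be chained. */
    static final class Builder {
        private String title = "";
        private String content = "";
        private Theme theme = Theme.LIGHT;
        private final List<String> buttons = new ArrayList<>();

        Builder title(String title) { this.title = title; return this; }
        Builder content(String content) { this.content = content; return this; }
        Builder theme(Theme theme) { this.theme = theme; return this; }
        Builder addButton(String label) { buttons.add(label); return this; }

        DialogSpec build() { return new DialogSpec(this); }
    }
}
```

A caller would chain the options and finish with `build()`, for example `new DialogSpec.Builder().title("Delete file?").content("This cannot be undone.").addButton("Cancel").addButton("Delete").build()`. Consult the library's own documentation for its actual class and method names.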