Posted by Mindy Brooks, General Manager, Kids and Family

Apps play an increasingly important role in all of our lives and we’re proud that Google Play helps families find educational and delightful experiences for kids. Of course, this wouldn’t be possible without the continued ingenuity and commitment of our developer partners. From kid-friendly entertainment apps to educational games, you’ve helped make our platform a fantastic destination for high-quality content for families. Today, we’re sharing a few updates on how we’re building on this work to create a safe and positive experience on Play.

Expanding Play’s Teacher Approved Program

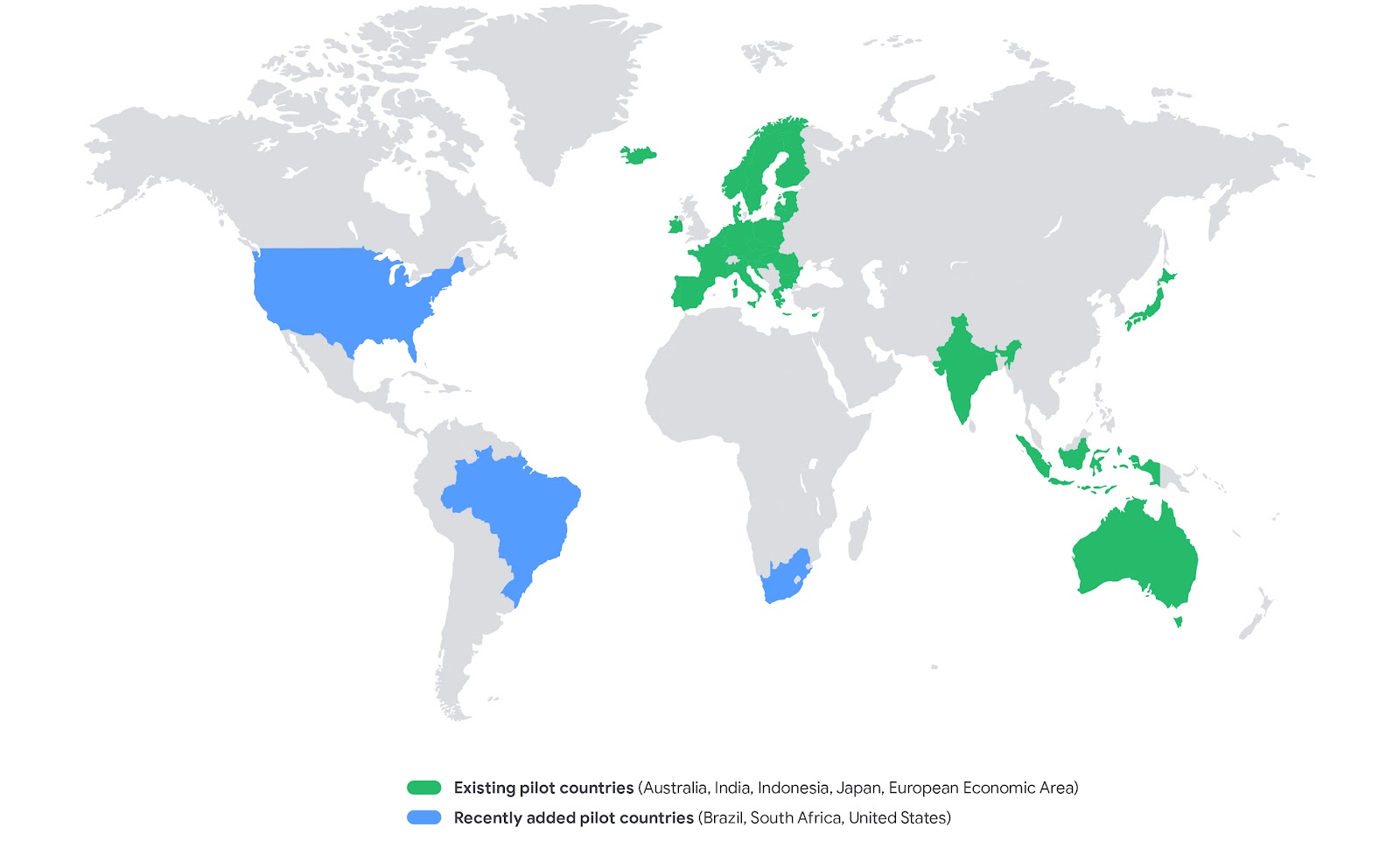

In 2020, we introduced the Teacher Approved program to highlight high-quality apps that are reviewed and rated by teachers and child development specialists. Through this program, all apps in the Play Store’s Kids tab are Teacher Approved, and families can now more easily discover quality apps and games.

As part of our continued investments in Teacher Approved, we’re excited to expand the program so that all apps that meet Play’s Families Policy will be eligible to be reviewed and shown on the Kids tab. We’re also streamlining the process for developers. Moving forward, the requirements for the Designed for Families program, which were previously a separate prerequisite for Teacher Approved eligibility, will be merged into the broader Families Policy. By combining our requirements into one policy and expanding eligibility for the Teacher Approved program, we look forward to providing families with even more Teacher Approved apps and helping you, our developer partners, reach more users.

If you’re new to the Teacher Approved program, you might wonder what we’re looking for. Beyond strict privacy and security requirements, great content for kids can take many forms, whether that’s sparking curiosity, helping kids learn, or just plain fun. Our team of teachers and experts from around the world reviews and rates apps on factors like age-appropriateness, quality of experience, enrichment, and delight. For added transparency, we include information in the app listing about why the app was rated highly, to help parents determine whether the app is right for their child. Please visit Google Play Academy for more information about how to design high-quality apps for kids.

Building on our Ads Policies to Protect Children

When you're creating a great app experience for kids and families, it’s also important that any ads served to children are appropriate and compliant with our Families Policy. This includes using Families Self-Certified Ads SDKs to serve ads to children. We recently made changes to the Families Self-Certified Ads SDK Program to help better protect users and make life easier for Families developers. SDKs that participate in the program are now required to identify which versions of their SDKs are appropriate for use in Families apps and you can view the list of self-certified versions in our Help Center.

Next year, all Families developers will be required to use only those versions of a Families Self-Certified Ads SDK that the SDK has identified as appropriate for use in Families apps. We encourage you to begin preparing now before the policy takes full effect.

Building Transparency with New Data Safety Section Options

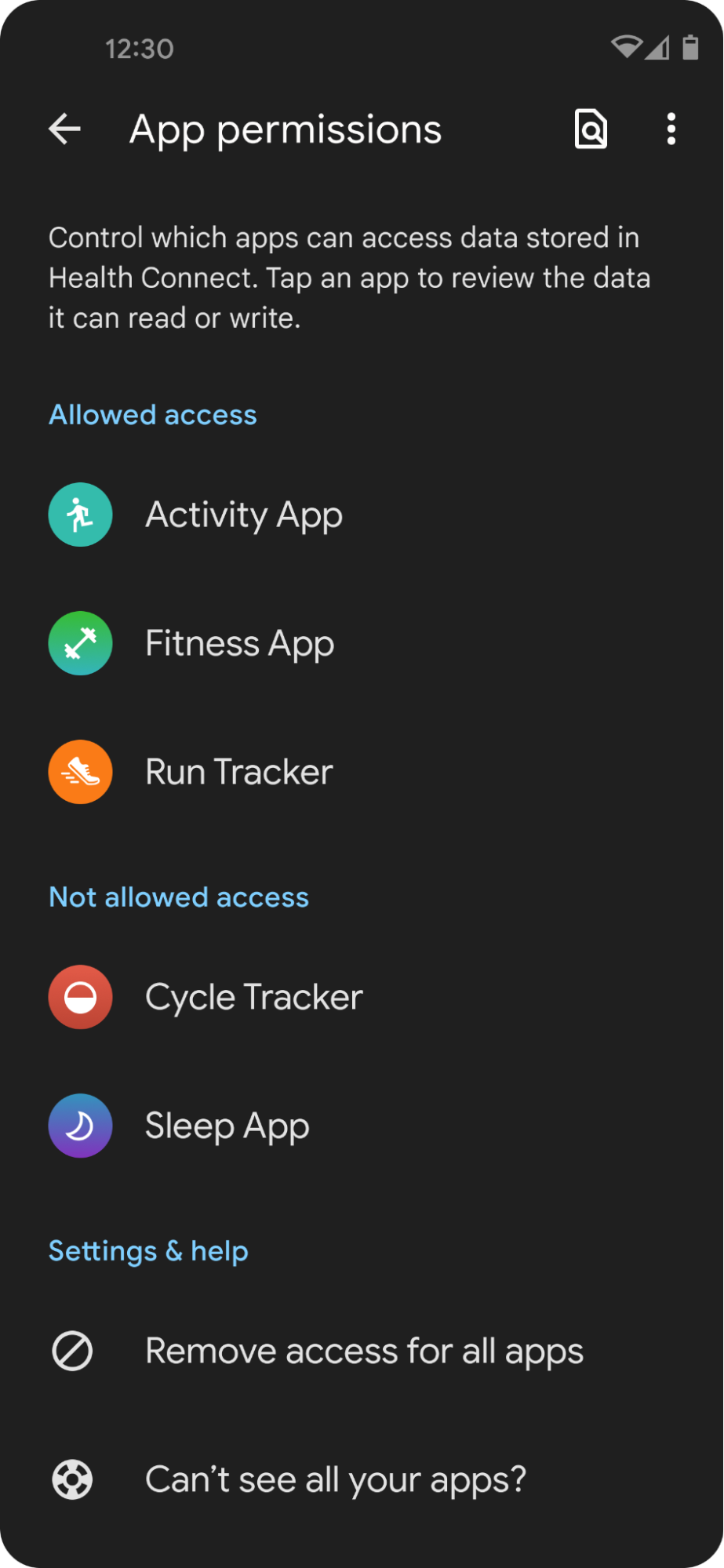

In the coming weeks, all apps that include children in their target audience will be able to showcase their compliance with Play’s Families Policy requirements with a special badge in the Data safety section. This is another way you can help families find apps that meet their needs, while supporting Play’s commitment to giving users more transparency and control over their data. To display the badge, visit the "Security practices" section of your Data safety form.


As always, we’re grateful for your partnership in helping to make Play a fantastic platform for delightful, high-quality content for kids and families. For more developer resources:

- Watch our PolicyBytes video

- Join a policy webinar, available for Global, India, Japan, Korea, South East Asia, and Indonesia

- Learn from our interactive Play Academy courses on complying with Play’s Families policies, including SDK requirements, selecting your target age and content settings, and more.
