This is Google’s fourth annual year-in-review of 0-days exploited in-the-wild [2021, 2020, 2019] and builds off of the mid-year 2022 review. The goal of this report is not to detail each individual exploit, but instead to analyze the exploits from the year as a whole, looking for trends, gaps, lessons learned, and successes.

Executive Summary

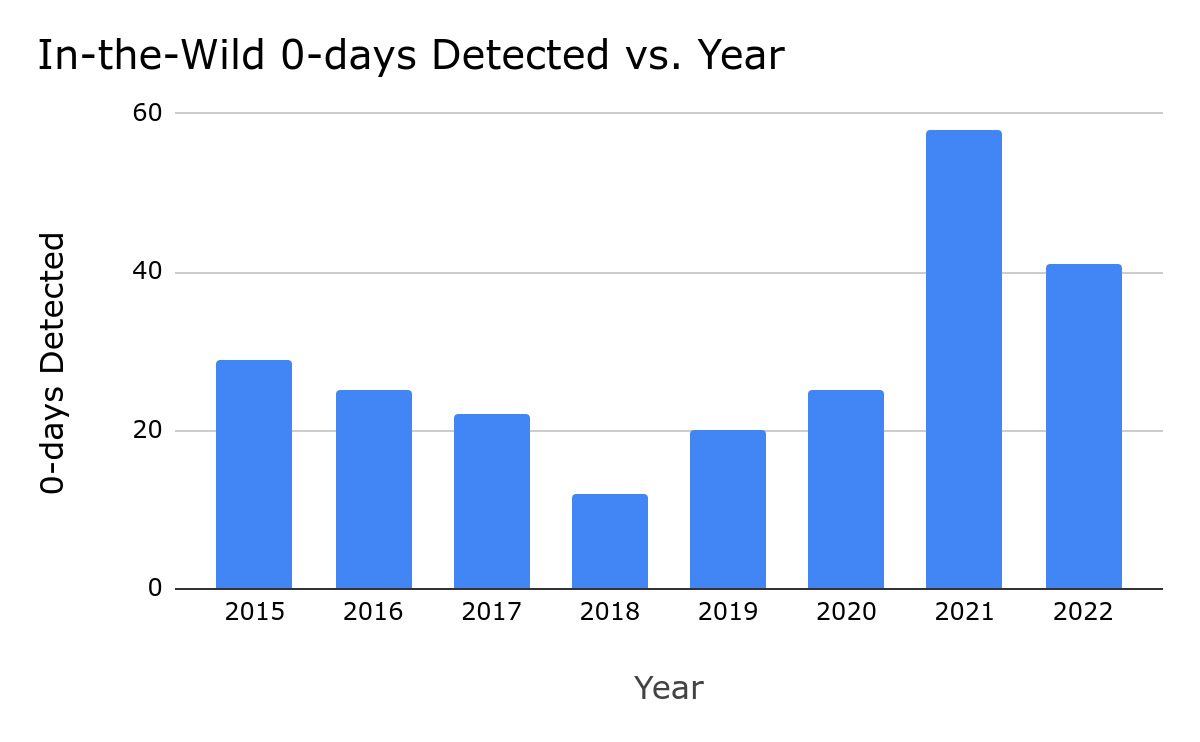

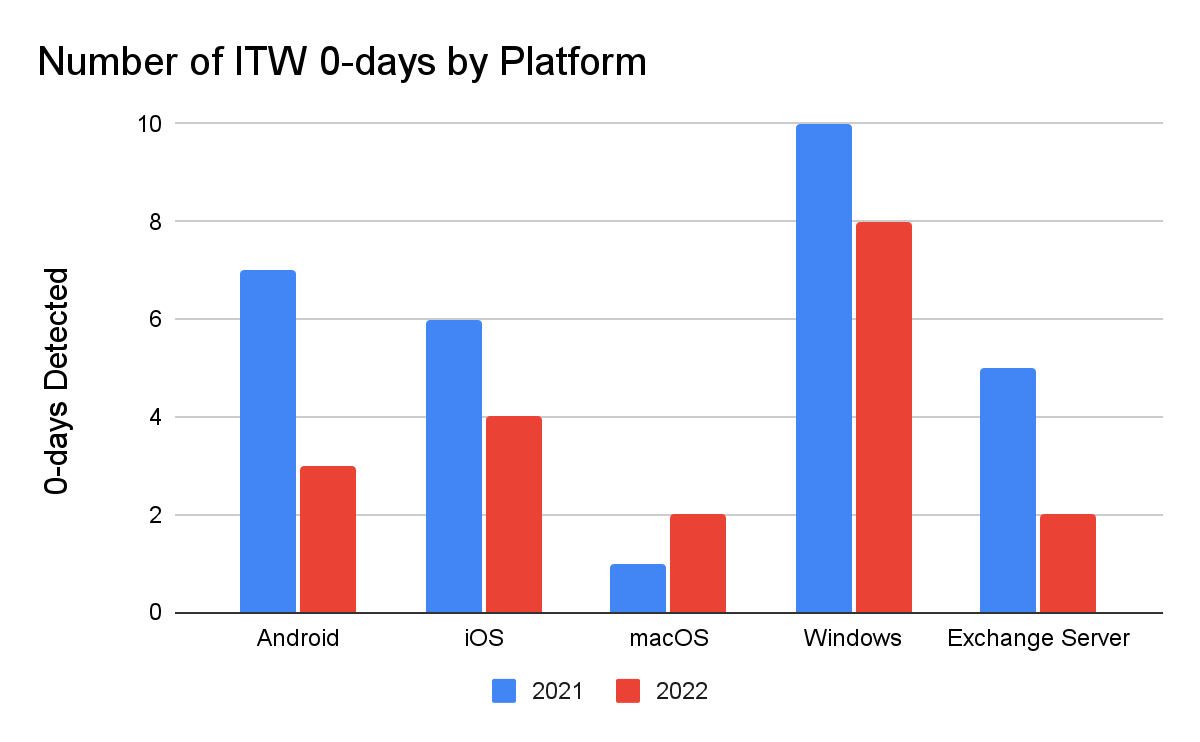

41 in-the-wild 0-days were detected and disclosed in 2022, the second-most ever recorded since we began tracking in mid-2014, but down from the 69 detected in 2021. Although a 40% drop might seem like a clear-cut win for improving security, the reality is more complicated. Some of our key takeaways from 2022 include:

N-days function like 0-days on Android due to long patching times. Across the Android ecosystem there were multiple cases where patches were not available to users for a significant time. Attackers didn’t need 0-day exploits and instead were able to use n-days that functioned as 0-days.

0-click exploits and new browser mitigations drive down browser 0-days. Many attackers have been moving towards 0-click rather than 1-click exploits. 0-clicks usually target components other than the browser. In addition, all major browsers also implemented new defenses that make exploiting a vulnerability more difficult and could have influenced attackers moving to other attack surfaces.

Over 40% of the 0-days discovered were variants of previously reported vulnerabilities. 17 out of the 41 in-the-wild 0-days from 2022 are variants of previously reported vulnerabilities. This continues the unpleasant trend that we’ve discussed previously in both the 2020 Year in Review report and the mid-way through 2022 report. More than 20% are variants of previous in-the-wild 0-days from 2021 and 2020.

Bug collisions are high. 2022 brought more frequent reports of attackers using the same vulnerabilities as each other, as well as security researchers reporting vulnerabilities that were later discovered to be used by attackers. When an in-the-wild 0-day targeting a popular consumer platform is found and fixed, it's increasingly likely to be breaking another attacker's exploit as well.

Based on our analysis of 2022 0-days we hope to see the continued focus in the following areas across the industry:

More comprehensive and timely patching to address the use of variants and n-days as 0-days.

More platforms following browsers’ lead in releasing broader mitigations to make whole classes of vulnerabilities less exploitable.

Continued growth of transparency and collaboration between vendors and security defenders to share technical details and work together to detect exploit chains that cross multiple products.

By the Numbers

For the 41 vulnerabilities detected and disclosed in 2022, no single find accounted for a large percentage of all the detected 0-days. We saw them spread relatively evenly across the year: 20 in the first half and 21 in the second half. Taken together, these two data points suggest more frequent and regular detections. We also saw the number of organizations credited with in-the-wild 0-day discoveries stay high. Across the 69 detected 0-days from 2021 there were 20 organizations credited. In 2022 across the 41 in-the-wild 0-days there were 18 organizations credited. It’s promising to see the number of organizations working on 0-day detection staying high because we need as many people working on this problem as possible.

2022 included the detection and disclosure of 41 in-the-wild 0-days, down from the 69 in 2021. While a significant drop from 2021, 2022 is still solidly in second place. All of the 0-days that we’re using for our analysis are tracked in this spreadsheet.
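The percentages quoted in this report follow directly from the raw counts. As a minimal sketch, the arithmetic can be reproduced as below; the constant and function names are illustrative choices, and only the counts themselves come from the report.

```python
# Counts quoted in this report; the names are illustrative only.
DETECTED_2021 = 69
DETECTED_2022 = 41
VARIANTS_2022 = 17        # 2022 0-days that are variants of previously reported bugs
FIRST_HALF_2022 = 20      # 0-days detected in the first half of 2022


def percent_drop(before: int, after: int) -> float:
    """Relative decrease from `before` to `after`, as a percentage."""
    return (before - after) / before * 100


def percent_share(part: int, whole: int) -> float:
    """Share of `whole` represented by `part`, as a percentage."""
    return part / whole * 100


if __name__ == "__main__":
    drop = percent_drop(DETECTED_2021, DETECTED_2022)
    variants = percent_share(VARIANTS_2022, DETECTED_2022)
    first_half = percent_share(FIRST_HALF_2022, DETECTED_2022)
    print(f"Drop from 2021 to 2022: {drop:.1f}%")           # 40.6%
    print(f"Variant share in 2022: {variants:.1f}%")        # 41.5%
    print(f"Detected in first half: {first_half:.1f}%")     # 48.8%
```

The roughly even split between the two halves of the year is what supports the observation that detections were spread out rather than driven by a single large find.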

Limits of Number of 0-days as a Security Metric

The number of 0-days detected and disclosed in-the-wild can’t tell us much about the state of security. Instead we use it as one indicator of many. For 2022, we believe that a combination of security improvements and regressions influenced the approximately 40% drop in the number of detected and disclosed 0-days from 2021 to 2022 and the continued higher than average number of 0-days that we saw in 2022.

Both positive and negative changes can influence the number of in-the-wild 0-days to both rise and fall. We therefore can’t use this number alone to signify whether or not we’re progressing in the fight to keep users safe. Instead we use the number to analyze what factors could have contributed to it and then review whether or not those factors are areas of success or places that need to be addressed.

Example factors that would cause the number of detected and disclosed in-the-wild 0-days to rise:

Security Improvements - Attackers require more 0-days to maintain the same capability

- Discovering and fixing 0-days more quickly

- More entities publicly disclosing when a 0-day is known to be in-the-wild

- Adding security boundaries to platforms

Security Regressions - 0-days are easier to find and exploit

- Variant analysis is not performed on reported vulnerabilities

- Exploit techniques are not mitigated

- More exploitable vulnerabilities are added to code than fixed

Example factors that would cause the number of detected and disclosed in-the-wild 0-days to decline:

Security Improvements - 0-days take more time, money, and expertise to develop for use

- Fewer exploitable 0-day vulnerabilities exist

- Each new 0-day requires the creation of a new exploitation technique

- New vulnerabilities require researching new attack surfaces

Security Regressions - Attackers need fewer 0-days to maintain the same capability

- Slower to detect in-the-wild 0-days so a bug has a longer lifetime

- Extended time until users are able to install a patch

- Less sophisticated attack methods: phishing, malware, n-day exploits are sufficient

Brainstorming the different factors that could lead to this number rising and declining allows us to understand what’s happening behind the numbers and draw conclusions from there. Two key factors contributed to the higher than average number of in-the-wild 0-days for 2022: vendor transparency & variants. The continued work on detection and transparency from vendors is a clear win, but the high percentage of variants that were able to be used in-the-wild as 0-days is not great. We discuss these variants in more depth in the “Déjà vu of Déjà vu-lnerability” section.

In the same vein, we assess that a few key factors likely led to the drop in the number of in-the-wild 0-days from 2021 to 2022: positives, such as fewer exploitable bugs, so that many attackers are using the same bugs as each other, and negatives, like less sophisticated attack methods working just as well as 0-day exploits and slower detection of 0-days. The number of in-the-wild 0-days alone doesn’t tell us much about the state of in-the-wild exploitation; the real lessons lie in the variety of factors that influenced this number. We dive into these in the following sections.

Are 0-days needed on Android?

In 2022, across the Android ecosystem we saw a series of cases where the upstream vendor had released a patch for the issue, but the downstream manufacturer had not taken the patch and released the fix for users to apply. Project Zero wrote about one of these cases in November 2022 in their “Mind the Gap” blog post.

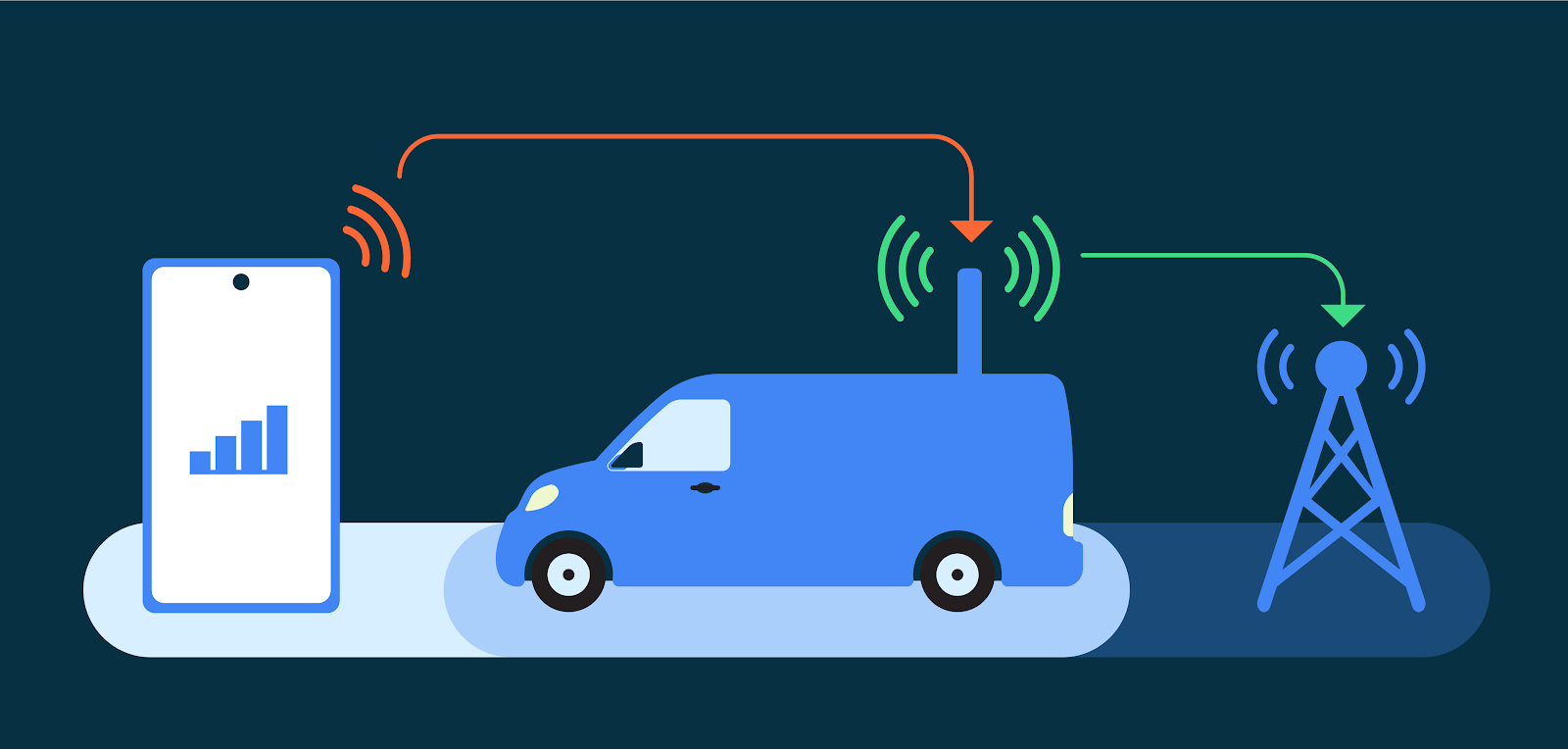

These gaps between upstream vendors and downstream manufacturers allow n-days - vulnerabilities that are publicly known - to function as 0-days because no patch is readily available to the user and their only defense is to stop using the device. While these gaps exist in most upstream/downstream relationships, they are more prevalent and longer in Android.

This is a great case for attackers. Attackers can use the known n-day bug, but have it operationally function as a 0-day since it will work on all affected devices. An example of how this happened in 2022 on Android is CVE-2022-38181, a vulnerability in the ARM Mali GPU. The bug was originally reported to the Android security team in July 2022 by security researcher Man Yue Mo of the GitHub Security Lab. The Android security team then labeled the issue a “Won’t Fix” because it was “device-specific”. However, Android Security referred the issue to ARM. In October 2022, ARM released the new driver version that fixed the vulnerability. In November 2022, TAG discovered the bug being used in-the-wild. While ARM had released the fixed driver version in October 2022, the vulnerability was not fixed by Android until April 2023, 6 months after the initial release by ARM, 9 months after the initial report by Man Yue Mo, and 5 months after it was first found being actively exploited in-the-wild.

July 2022: Reported to Android Security team

Aug 2022: Android Security labels “Won’t Fix” and sends to ARM

Oct 2022: Bug fixed by ARM

Nov 2022: In-the-wild exploit discovered

April 2023: Included in Android Security Bulletin

In December 2022, TAG discovered another exploit chain targeting the latest version of the Samsung Internet browser. At that time, the latest version of the Samsung Internet browser was running on Chromium 102, which had been released 7 months prior in May 2022. As a part of this chain, the attackers were able to use two n-day vulnerabilities which were able to function as 0-days: CVE-2022-3038 which had been patched in Chrome 105 in June 2022 and CVE-2022-22706 in the ARM Mali GPU kernel driver. ARM had released the patch for CVE-2022-22706 in January 2022 and even though it had been marked as exploited in-the-wild, attackers were still able to use it 11 months later as a 0-day. Although this vulnerability was known as exploited in the wild in January 2022, it was not included in the Android Security Bulletin until June 2023, 17 months after the patch released and it was publicly known to be actively exploited in-the-wild.
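The patch gaps described above can be computed from the month-level dates given in this section. The following is a rough sketch, not a tracking tool: the `PatchTimeline` class, its field names, and the `functions_as_0day` helper are hypothetical names introduced for illustration, and it treats a bug as "functioning as a 0-day" whenever an upstream fix exists but the downstream fix has not yet shipped.

```python
from dataclasses import dataclass
from typing import Tuple

# (year, month): the report only gives month-level precision.
YearMonth = Tuple[int, int]


def months_between(start: YearMonth, end: YearMonth) -> int:
    """Whole calendar months from `start` to `end`."""
    return (end[0] - start[0]) * 12 + (end[1] - start[1])


@dataclass(frozen=True)
class PatchTimeline:
    """Hypothetical record type; dates are taken from the text above."""
    cve: str
    upstream_fix: YearMonth      # fix released by the upstream vendor (ARM)
    observed_in_use: YearMonth   # TAG observed the bug being exploited
    downstream_fix: YearMonth    # included in the Android Security Bulletin

    def patch_gap_months(self) -> int:
        """Months between the upstream fix and the downstream fix."""
        return months_between(self.upstream_fix, self.downstream_fix)

    def functions_as_0day(self, when: YearMonth) -> bool:
        """True if fixed upstream but not yet fixed downstream at `when`."""
        return self.upstream_fix <= when < self.downstream_fix


TIMELINES = [
    PatchTimeline("CVE-2022-38181", (2022, 10), (2022, 11), (2023, 4)),
    PatchTimeline("CVE-2022-22706", (2022, 1), (2022, 12), (2023, 6)),
]

if __name__ == "__main__":
    for t in TIMELINES:
        in_gap = t.functions_as_0day(t.observed_in_use)
        print(f"{t.cve}: patch gap {t.patch_gap_months()} months, "
              f"exploited inside the gap: {in_gap}")
```

Under these assumptions the gaps come out to 6 and 17 months, matching the figures above, and in both cases the observed exploitation falls inside the window where users had no patch to install.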

These n-days that function as 0-days fall into a gray area of whether or not to track them as 0-days. In the past we have sometimes counted them as 0-days: CVE-2019-2215 and CVE-2021-1048. In the cases of these two vulnerabilities the bugs had been fixed in the upstream Linux kernel, but without assigning a CVE as is Linux’s standard. We included them because they had not been identified as security issues needing to be patched in Android prior to their in-the-wild discovery. In the case of CVE-2022-38181, by contrast, the bug was initially reported to Android, and ARM published security advisories for the issue indicating that downstream users needed to apply those patches. We will continue trying to decipher this “gray area” of bugs, but welcome input on how they ought to be tracked.

Browsers Are So 2021

Similar to the overall numbers, there was a 42% drop in the number of detected in-the-wild 0-days targeting browsers from 2021 to 2022, dropping from 26 to 15. We assess this reflects browsers’ efforts to make exploitation more difficult overall as well as a shift in attacker behavior away from browsers towards 0-click exploits that target other components on the device.

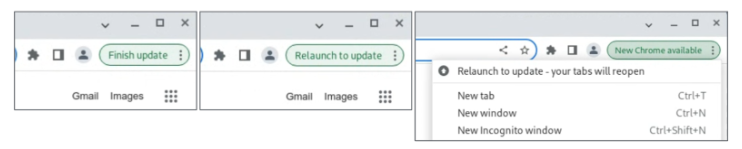

Advances in the defenses of the top browsers are likely influencing the push to other components as the initial vector in an exploit chain. Throughout 2022 we saw more browsers launching and improving additional defenses against exploitation. For Chrome that’s MiraclePtr, v8 Sandbox, and libc++ hardening. Safari launched Lockdown Mode and Firefox launched more fine-grained sandboxing. In his April 2023 keynote at Zer0Con, Ki Chan Ahn, a vulnerability researcher and exploit developer at offensive security vendor Dataflow Security, commented on how these types of mitigations are making browser exploitation more difficult and are an incentive for moving to other attack surfaces.

Browsers becoming more difficult to exploit pairs with an evolution in exploit delivery over the past few years to explain the drop in browser bugs in 2022. In 2019 and 2020, a decent percentage of the detected in-the-wild 0-days were delivered via watering hole attacks. A watering hole attack is where an attacker is targeting a group that they believe will visit a certain website. Anyone who visits that site is then exploited and delivered the final payload (usually spyware). In 2021, we generally saw a move to 1-click links as the initial attack vector. Both watering hole attacks and 1-click links use the browser as the initial vector onto the device. In 2022, more attackers began moving to using 0-click exploits instead, exploits that require no user interaction to trigger. 0-clicks tend to target device components other than browsers.

At the end of 2021, Citizen Lab captured a 0-click exploit targeting iMessage, CVE-2021-30860, used by NSO in their Pegasus spyware. Project Zero detailed the exploit in this 2-part blog post series. While no in-the-wild 0-clicks were publicly detected and disclosed in 2022, this does not signal a lack of use. We know that multiple attackers have used and are using 0-click exploit chains.

0-clicks are difficult to detect because:

They are short-lived

They often have no visible indicator of their presence

They can target many different components, and vendors don’t always realize all the components that are remotely accessible

They are delivered directly to the target rather than being broadly available, as in a watering hole attack

They are often not hosted on a navigable website or server

With 1-click exploits, there is a visible link that has to be clicked by the target to deliver the exploit. This means that the target or security tools may detect the link. The exploits are then hosted on a navigable server at that link.

0-clicks on the other hand often target the code that processes incoming calls or messages, meaning that they can often run prior to an indicator of an incoming message or call ever being shown. This also dramatically shortens their lifetime and the window in which they can be detected “live”. It’s likely that attackers will continue to move towards 0-click exploits and thus we as defenders need to be focused on how we can detect and protect users from these exploits.

Déjà vu-lnerability: Complete patching remains one of the biggest opportunities

17 out of 41 of the 0-days discovered in-the-wild in 2022 are variants of previously public vulnerabilities. We first published about this in the 2020 Year in Review report, “Deja vu-lnerability,” identifying that 25% of the in-the-wild 0-days from 2020 were variants of previously public bugs. That number has continued to rise, which could be due to:

Defenders getting better at identifying variants,

Defenders improving at detecting in-the-wild 0-days that are variants,

Attackers exploiting more variants, or

Vulnerabilities being fixed less comprehensively, leaving more variants.

The answer is likely a combination of all of the above, but we know that the number of variants that are able to be exploited against users as 0-days is not decreasing. Reducing the number of exploitable variants is one of the biggest areas of opportunity for the tech and security industries to force attackers to have to work harder to have functional 0-day exploits.

Not only were over 40% of the 2022 in-the-wild 0-days variants, but more than 20% of the bugs are variants of previous in-the-wild 0-days: 7 from 2021 and 1 from 2020. When a 0-day is caught in the wild it’s a gift. Attackers don’t want us to know what vulnerabilities they have and the exploit techniques they’re using. Defenders need to take as much advantage as we can from this gift and make it as hard as possible for attackers to come back with another 0-day exploit. This involves:

Analyzing the bug to find the true root cause, not just the way that the attackers chose to exploit it in this case

Looking for other locations that the same bug may exist

Evaluating any additional paths that could be used to exploit the bug

Comparing the patch to the true root cause and determining if there are any ways around it

We consider a patch to be complete only when it is both correct and comprehensive. A correct patch is one that fixes a bug with complete accuracy, meaning the patch no longer allows any exploitation of the vulnerability. A comprehensive patch applies that fix everywhere that it needs to be applied, covering all of the variants. When exploiting a single vulnerability or bug, there are often multiple ways to trigger the vulnerability, or multiple paths to access it. Many times we see vendors block only the path that is shown in the proof-of-concept or exploit sample, rather than fixing the vulnerability as a whole. Similarly, security researchers often report bugs without following up on how the patch works and exploring related attacks.
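To make the distinction concrete, here is a deliberately simplified toy, not modeled on any real vulnerability. Every name in it (`MEMORY`, `read_slot`, the `handle_*` functions) is hypothetical. It shows a missing bounds check in a shared helper that is reachable through two paths, a patch that blocks only the input seen in the exploit sample, and a patch that fixes the root cause.

```python
MEMORY = ["rec0", "rec1", "rec2", "SECRET"]  # slot 3 stands in for out-of-bounds data
PUBLIC_SLOTS = 3                             # only slots 0..2 may be returned


def read_slot(index: int) -> str:
    """Shared helper containing the root cause: no bounds check."""
    return MEMORY[index]


def read_slot_fixed(index: int) -> str:
    """Root-cause fix: validate inside the helper so every caller is covered."""
    if not 0 <= index < PUBLIC_SLOTS:
        raise ValueError("index out of range")
    return MEMORY[index]


# Incomplete patch: only the path and value seen in the exploit sample are blocked.
def handle_message_sample_only(index: int) -> str:
    if index >= PUBLIC_SLOTS:        # the sample used index 3
        raise ValueError("index out of range")
    return read_slot(index)          # a negative index still reaches SECRET


def handle_call_unguarded(offset: int) -> str:
    return read_slot(offset + 1)     # second path to the same bug, left open


# Complete patch: both paths go through the fixed helper.
def handle_message_complete(index: int) -> str:
    return read_slot_fixed(index)


def handle_call_complete(offset: int) -> str:
    return read_slot_fixed(offset + 1)


def leaks(handler, arg: int) -> bool:
    """True if calling `handler(arg)` returns the protected value."""
    try:
        return handler(arg) == "SECRET"
    except ValueError:
        return False


if __name__ == "__main__":
    print(leaks(handle_message_sample_only, 3))    # False: sample input is blocked
    print(leaks(handle_message_sample_only, -1))   # True: patch is not correct
    print(leaks(handle_call_unguarded, 2))         # True: patch is not comprehensive
    print(leaks(handle_message_complete, -1))      # False
    print(leaks(handle_call_complete, 2))          # False
```

In this toy, the sample-only patch fails both tests from the paragraph above: a different input on the same path still triggers the bug, so it is not correct, and a second path was never touched, so it is not comprehensive. Moving the check into the shared helper addresses both.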

While the idea that incomplete patches are making it easier for attackers to exploit 0-days may be uncomfortable, the converse of this conclusion can give us hope. We have a clear path toward making 0-days harder. If more vulnerabilities are patched correctly and comprehensively, it will be harder for attackers to exploit 0-days.

We’ve included all identified vulnerabilities that are variants in the table below. For more thorough walk-throughs of how the in-the-wild 0-day is a variant, check out the presentation from the FIRST conference [video, slides], the slides from Zer0Con, the presentation from OffensiveCon [video, slides] on CVE-2022-41073, and this blog post on CVE-2022-22620.

No Copyrights in Exploits

Unlike many commodities in the world, a 0-day itself is not finite. Just because one person has discovered the existence of a 0-day vulnerability and developed it into an exploit doesn’t prevent other people from independently finding it too and using it in their exploit. Most attackers who are doing their own vulnerability research and exploit development do not want anyone else to do the same as it lowers its value and makes it more likely to be detected and fixed quickly.

Over the last couple of years we’ve become aware of a trend of a high number of bug collisions, where more than one researcher has found the same vulnerability. This is happening amongst both attackers and security researchers who are reporting the bugs to vendors. While bug collisions have always occurred and we can’t measure the exact rate at which they’re occurring, the number of different entities independently being credited for the same vulnerability in security advisories, finding the same 0-day in two different exploits, and even conversations with researchers who work on both sides of the fence, suggest this is happening more often.

A higher number of bug collisions is a win for defense because that means attackers are overall using fewer 0-days. Limiting attack surfaces and making fewer bug classes exploitable can definitely contribute to researchers finding the same bugs, but more security researchers publishing their research also likely contributes. People read the same research and it incites an idea for their next project, but it incites similar ideas in many. Platforms and attack surfaces are also becoming increasingly complex so it takes quite a bit of investment in time to build up an expertise in a new component or target.

Security researchers and their vulnerability reports are helping to fix the same 0-days that attackers are using, even if those specific 0-days haven’t yet been detected in the wild, thus breaking the attackers’ exploits. We hope that vendors continue supporting researchers and investing in their bug bounty programs because it is helping fix the same vulnerabilities likely being used against users. It also highlights why thorough patching matters both for known in-the-wild bugs and for vulnerabilities reported by security researchers.

What now?

Looking back on 2022 our overall takeaway is that as an industry we are on the right path, but there are also plenty of areas of opportunity, the largest area being the industry’s response to reported vulnerabilities.

- We must get fixes and mitigations to users quickly so that they can protect themselves.

- We must perform detailed analyses to ensure the root cause of the vulnerability is addressed.

- We must share as many technical details as possible.

- We must capitalize on reported vulnerabilities to learn and fix as much as we can from them.

None of this is easy, nor is any of this a surprise to security teams who operate in this space. It requires investment, prioritization, and developing a patching process that balances both protecting users quickly and ensuring it is comprehensive, which can at times be in tension. Required investments depend on each unique situation, but we see some common themes around staffing/resourcing, incentive structures, process maturity, automation/testing, release cadence, and partnerships.

We’ve detailed some efforts that can help ensure bugs are correctly and comprehensively fixed in this post: including root cause, patch, variant, and exploit technique analyses. We will continue to help with these analyses, but we hope and encourage platform security teams and other independent security researchers to invest in these efforts as well.

Final Thoughts: TAG’s New Exploits Team

Looking into the second half of 2023, we’re excited for what’s to come. You may notice that our previous reports have been on the Project Zero blog. Our 0-days in-the-wild program has moved from Project Zero to TAG in order to combine the vulnerability analysis, detection, and threat actor tracking expertise all in one team, benefiting from more resources and ultimately making: TAG Exploits! More to come on that, but we’re really excited for what this means for protecting users from 0-days and making 0-day hard.

One of the intentions of our Year in Review is to make our conclusions and findings “peer-reviewable”. If we want to best protect users from the harms of 0-days and make 0-day exploitation hard, we need all the eyes and brains we can get tackling this problem. We welcome critiques, feedback, and other ideas on our work in this area. Please reach out at 0day-in-the-wild <at> google.com.

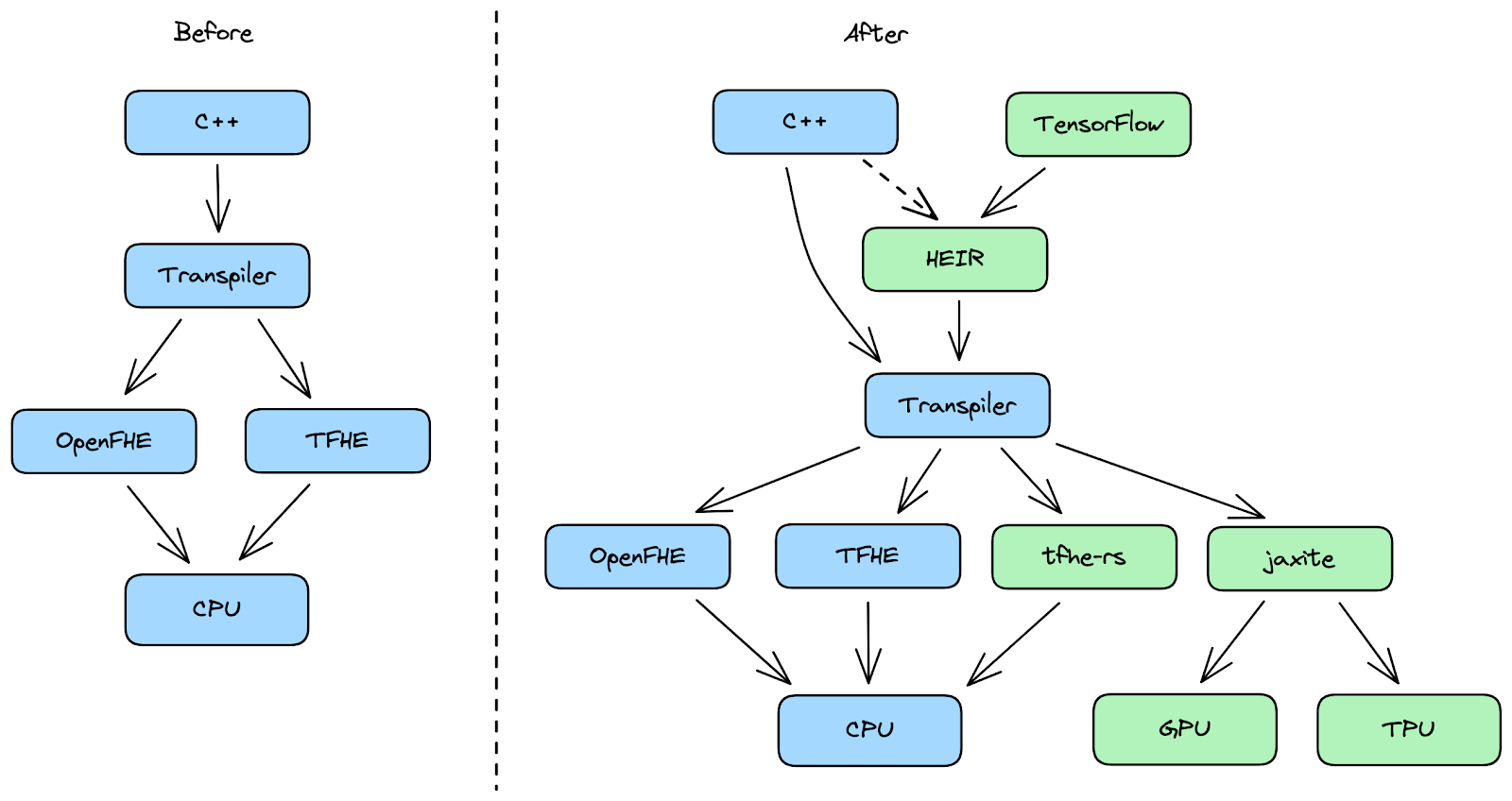
