Posted by Matthias Grundmann, Research Scientist and Jianing Wei, Software Engineer, Google Research

One of the most compelling things about smartphones today is the ability to capture a moment on the fly. With motion photos, a new camera feature available on the Pixel 2 and Pixel 2 XL phones, you no longer have to choose between a photo and a video, so every photo you take captures more of the moment. When you take a photo with motion enabled, your phone also records and trims up to 3 seconds of video. Using advanced stabilization built upon technology we pioneered in Motion Stills for Android, these pictures come to life in Google Photos. Let’s take a look at the technology that makes this possible!

Motion photos on the Pixel 2 in Google Photos. With the camera frozen in place, the focus is put directly on the subjects. For more examples, check out this Google Photos album.

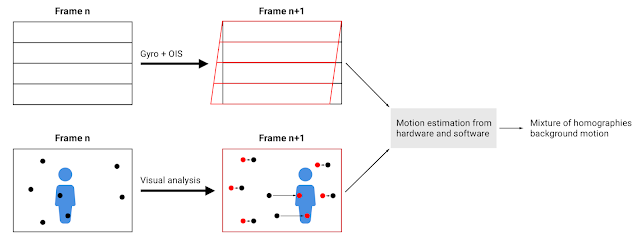

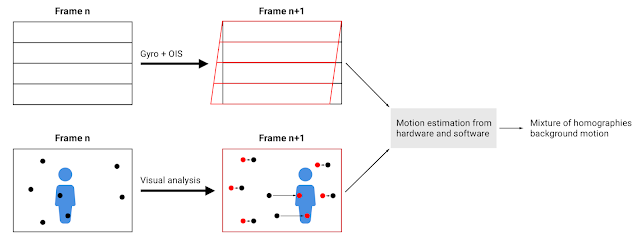

Camera Motion Estimation by Combining Hardware and Software

The image and video pair that is captured every time you hit the shutter button is a full-resolution JPEG with an embedded 3-second video clip. On the Pixel 2, the video portion also contains motion metadata that is derived from the gyroscope and optical image stabilization (OIS) sensors to aid the trimming and stabilization of the motion photo. By combining software-based visual tracking with the motion metadata from the hardware sensors, we built a new hybrid motion estimation technique for motion photos on the Pixel 2.

Our approach aligns the background more precisely than either the technique used in Motion Stills or the purely hardware-sensor-based approach. Based on Fused Video Stabilization technology, it reduces the artifacts that arise in the visual analysis when a scene is complex, with many depth layers, or when a foreground object occupies a large portion of the field of view. It also improves on the hardware-sensor-based approach by refining the motion estimation to be more accurate, especially at close distances.

Motion photo as captured (left) and after freezing the camera by combining hardware and software (right). For more comparisons, check out this Google Photos album.

The purely software-based technique we introduced in Motion Stills uses the visual data from the video frames, detecting and tracking features over consecutive frames to yield motion vectors. It then classifies the motion vectors into foreground and background using motion models such as an affine transformation or a homography. However, this classification is not perfect and can be misled, e.g. by a complex scene or a dominant foreground.

Feature classification into background (green) and foreground (orange) by using the motion metadata from the hardware sensors of the Pixel 2. Notice how the new approach not only labels the skateboarder accurately as foreground but also the half-pipe that is at roughly the same depth.

For motion photos on Pixel 2 we improved this classification by using the motion metadata derived from the gyroscope and the OIS. This metadata accurately captures the camera motion with respect to the scene at infinity, which one can think of as the background in the distance. However, for pictures taken at closer range, parallax is introduced for scene elements at different depth layers, which is not accounted for by the gyroscope and OIS. Specifically, we mark motion vectors that deviate too much from the motion metadata as foreground. This results in a significantly more accurate classification of foreground and background, which also enables us to use a more complex motion model known as mixture homographies that can account for rolling shutter and undo the distortions it causes.
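The deviation test described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the Pixel 2 implementation: it assumes the gyroscope and OIS metadata has already been converted into a single 3x3 homography per frame pair (here called `sensor_homography`), and the names `apply_homography`, `classify_features`, and the 2-pixel threshold `max_deviation_px` are all hypothetical.

```python
import math


def apply_homography(H, point):
    """Map a 2D point through a 3x3 homography given as nested lists."""
    x, y = point
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


def classify_features(tracks, sensor_homography, max_deviation_px=2.0):
    """Label each tracked feature as 'background' or 'foreground'.

    tracks: list of ((x0, y0), (x1, y1)) feature positions in two
        consecutive frames, as a visual tracker might produce them.
    sensor_homography: assumed 3x3 matrix predicting how the distant
        background moves between the two frames (from gyro/OIS data).
    max_deviation_px: illustrative threshold, not a value from the post.
    """
    labels = []
    for start, end in tracks:
        # Where the sensors say a distant background point should land.
        px, py = apply_homography(sensor_homography, start)
        # How far the visually tracked position is from that prediction.
        deviation = math.hypot(end[0] - px, end[1] - py)
        labels.append("background" if deviation <= max_deviation_px
                      else "foreground")
    return labels


# Sensors report a pure 5-pixel shift to the right between the frames.
H = [[1.0, 0.0, 5.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
tracks = [((10.0, 10.0), (15.2, 10.1)),   # follows the camera motion
          ((50.0, 40.0), (62.0, 43.0))]   # moves on its own
print(classify_features(tracks, H))  # ['background', 'foreground']
```

Only the features labeled as background would then be used to fit the more detailed motion model, so a moving subject or a nearby object with strong parallax does not pull the estimate away from the true camera motion.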

Background motion estimation in motion photos. By using the motion metadata from the gyroscope and OIS we are able to accurately classify features from the visual analysis into foreground and background.

Motion Photo Stabilization and Playback

Once we have accurately estimated the background motion for the video, we determine an optimally stable camera path to align the background using linear programming techniques outlined in our earlier posts. Further, we automatically trim the video to remove any accidental motion caused by putting the phone away. All of this processing happens on your phone and produces a small amount of metadata per frame that is used to render the stabilized video in real time using a GPU shader when you tap the Motion button in Google Photos. In addition, we start video playback at the exact timestamp of the HDR+ photo, producing a seamless transition from still image to video.
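To make the idea of per-frame stabilization metadata concrete, here is a minimal sketch of the simplest possible target path: a camera that is frozen at the first frame. It is not the linear programming formulation mentioned above, and it uses frame 0 as the reference rather than the HDR+ photo's timestamp. It assumes the background motion between consecutive frames is available as 3x3 matrices; `translation`, `matmul3`, and `frozen_camera_transforms` are hypothetical helper names.

```python
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def translation(dx, dy):
    """3x3 matrix for a pure image shift, used here as toy motion data."""
    return [[1.0, 0.0, dx], [0.0, 1.0, dy], [0.0, 0.0, 1.0]]


def matmul3(A, B):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def frozen_camera_transforms(pairwise):
    """Return one transform per frame that aligns it to frame 0.

    pairwise[t] is assumed to map background points in frame t + 1
    to their positions in frame t. Chaining these gives, for every
    frame, the warp that puts its background where it was in frame 0.
    """
    transforms = [IDENTITY]
    for frame_to_previous in pairwise:
        transforms.append(matmul3(transforms[-1], frame_to_previous))
    return transforms


# Toy example: the background drifts between three frames.
pairwise = [translation(-3.0, 1.0), translation(-2.0, -1.0)]
transforms = frozen_camera_transforms(pairwise)
print(transforms[2][0][2], transforms[2][1][2])  # -5.0 0.0
```

A renderer could apply each frame's matrix as a warp at playback time, which is one way a small per-frame record is enough to display a stabilized result without re-encoding the video.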

Motion photos stabilize even complex scenes with large foreground motions.

Motion Photo Sharing

Using Google Photos, you can share motion photos with your friends as videos and GIFs, watch them on the web, or view them on any phone. This is another example of combining hardware, software and machine learning to create new features for Pixel 2.

Acknowledgements

Motion photos is a result of a collaboration across several Google Research teams, Google Pixel and Google Photos. We especially want to acknowledge the work of Karthik Raveendran, Suril Shah, Marius Renn, Alex Hong, Radford Juang, Fares Alhassen, Emily Chang, Isaac Reynolds, and Dave Loxton.