Posted by Dan Galpin, Developer Advocate

This blog post is part of a weekly series for #11WeeksOfAndroid. For each of the #11WeeksOfAndroid, we’re diving into key areas so you don’t miss anything. This week, we spotlighted games, media, and 5G; here’s a look at what you should know.

What's the buzz in Android 11?

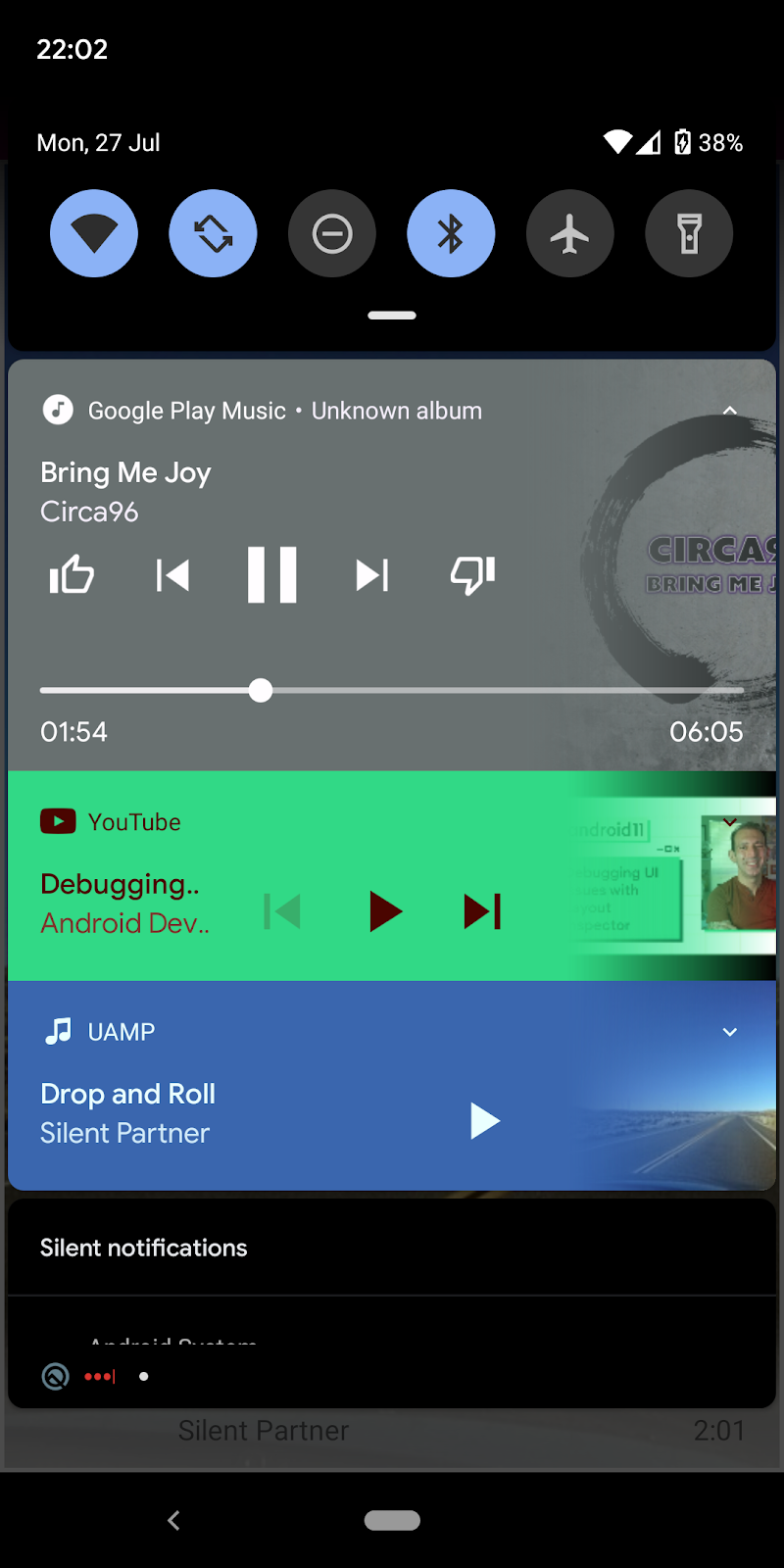

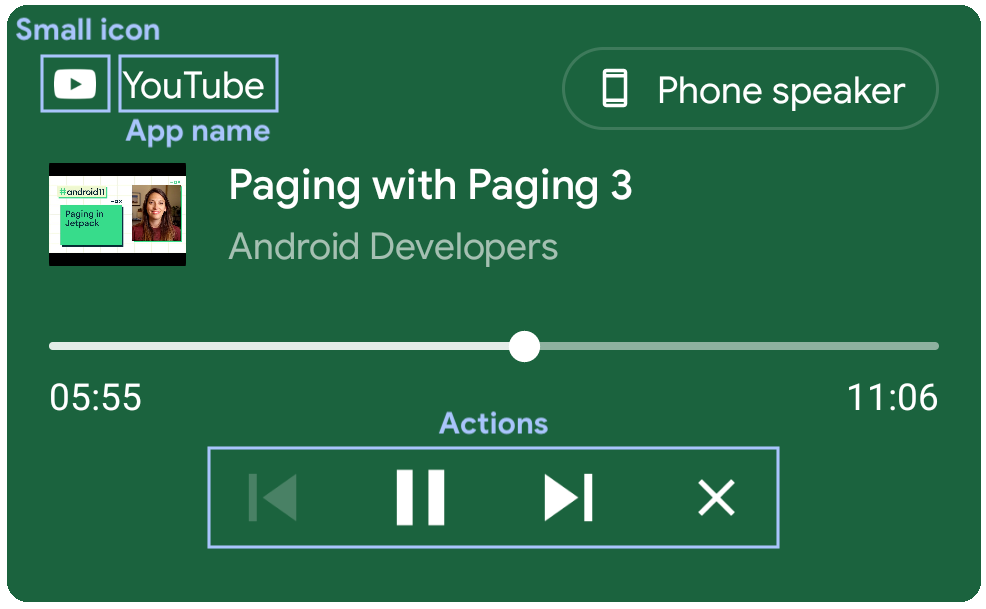

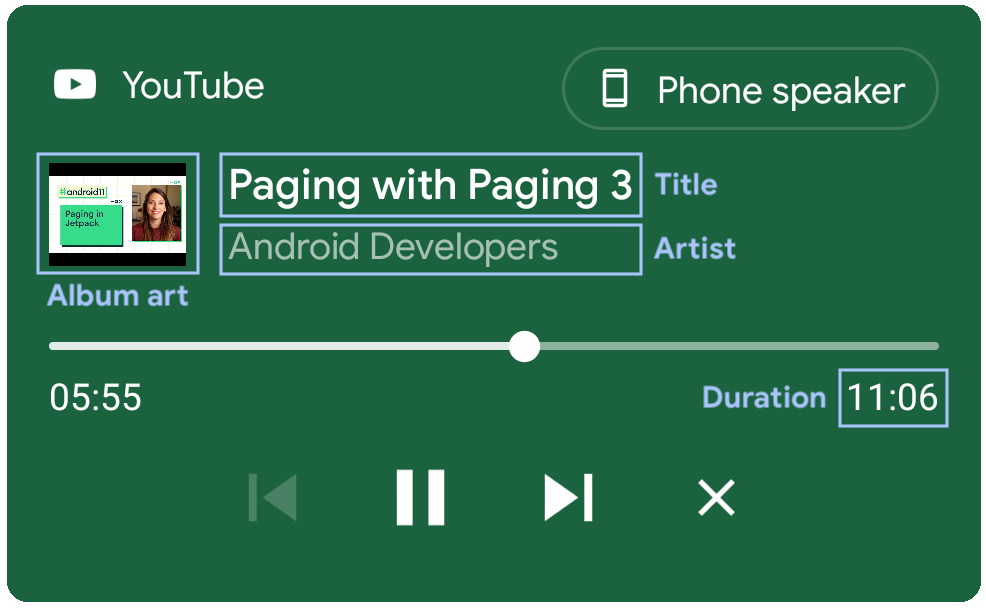

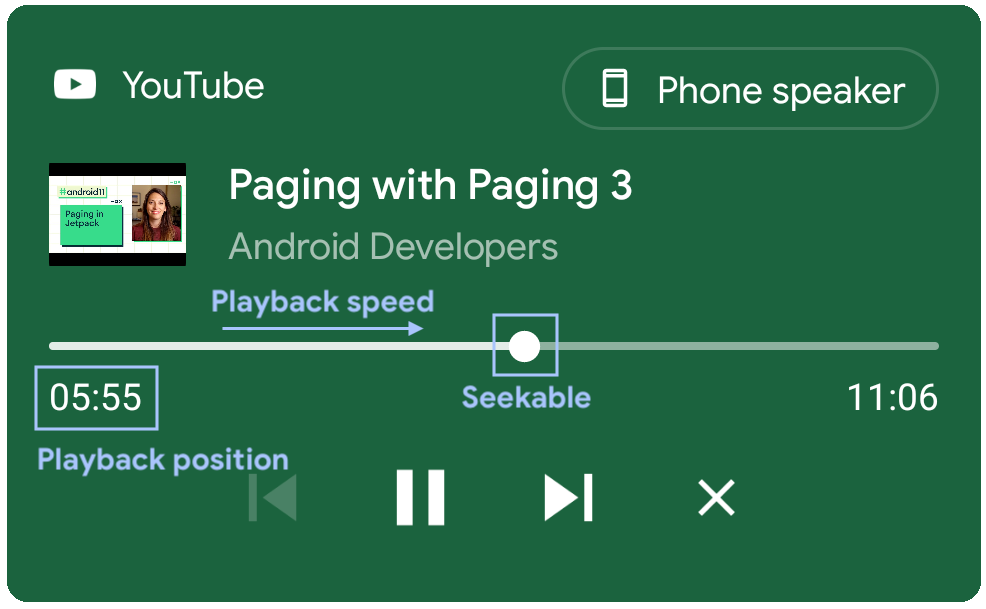

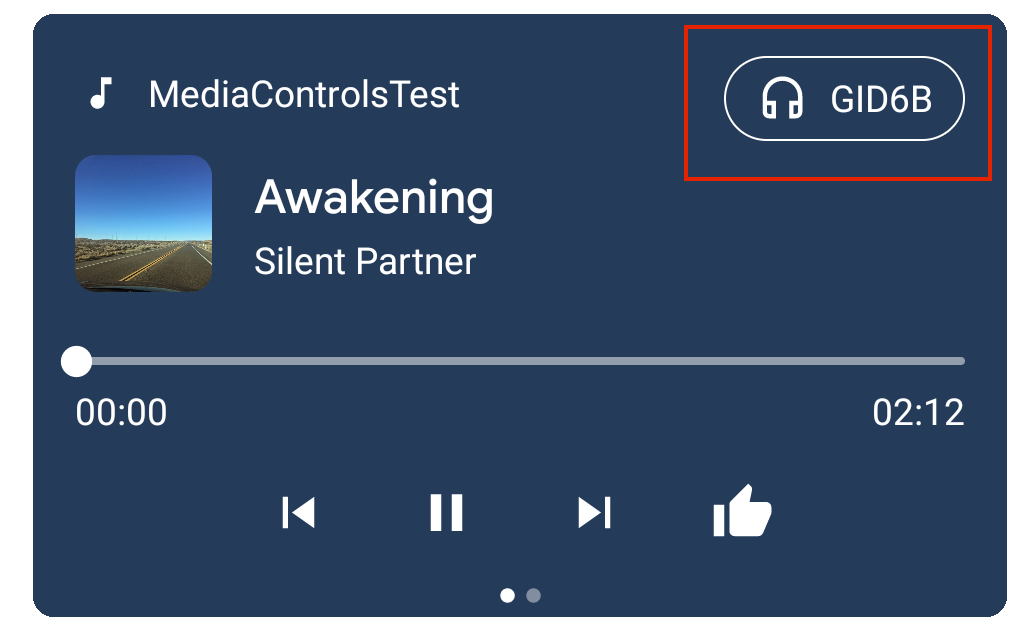

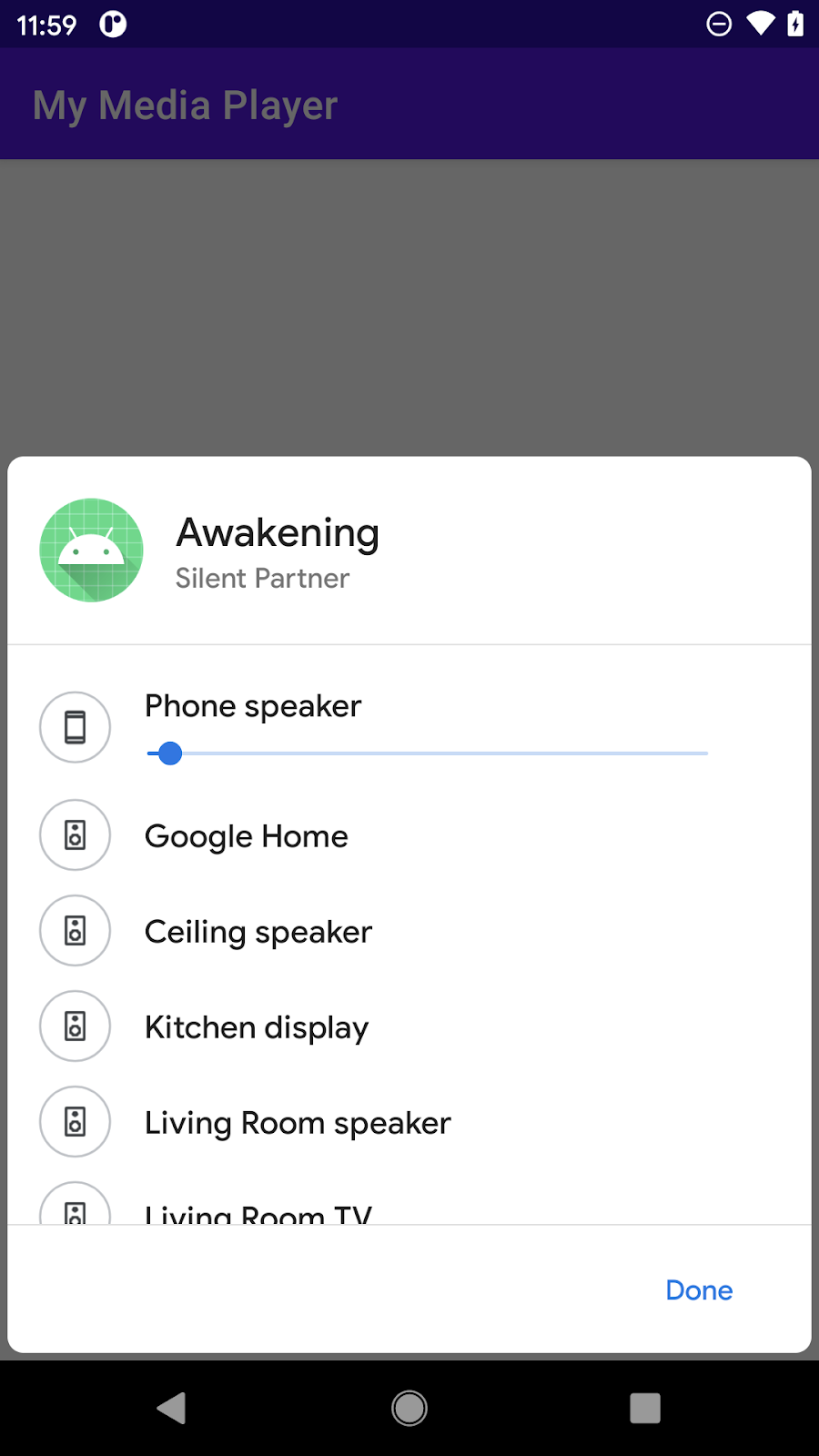

- You can now control media applications from a dedicated space within the notification area while enabling features such as playback resumption and seamless transfer.

- New and updated 5g APIs help you unlock transformative new user experiences.

- Adds new support for key game tools and technologies. On top of that foundation, we're building tools to both improve your game developer experience and help you better characterize the performance of your game, services to help you expand the reach of your game to more devices and new audiences, and new and improved features to support your games' go-to-market with Google Play.

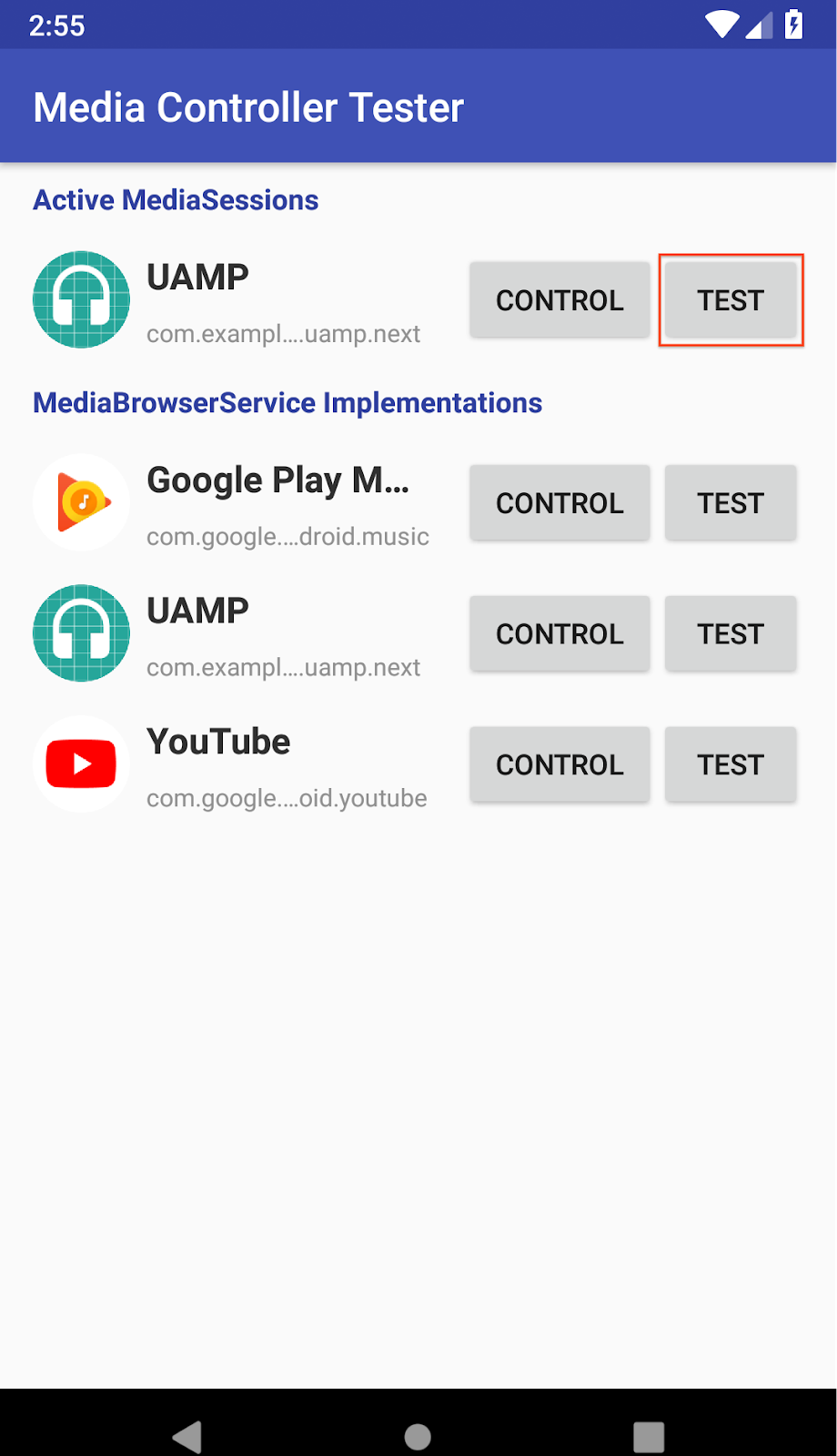

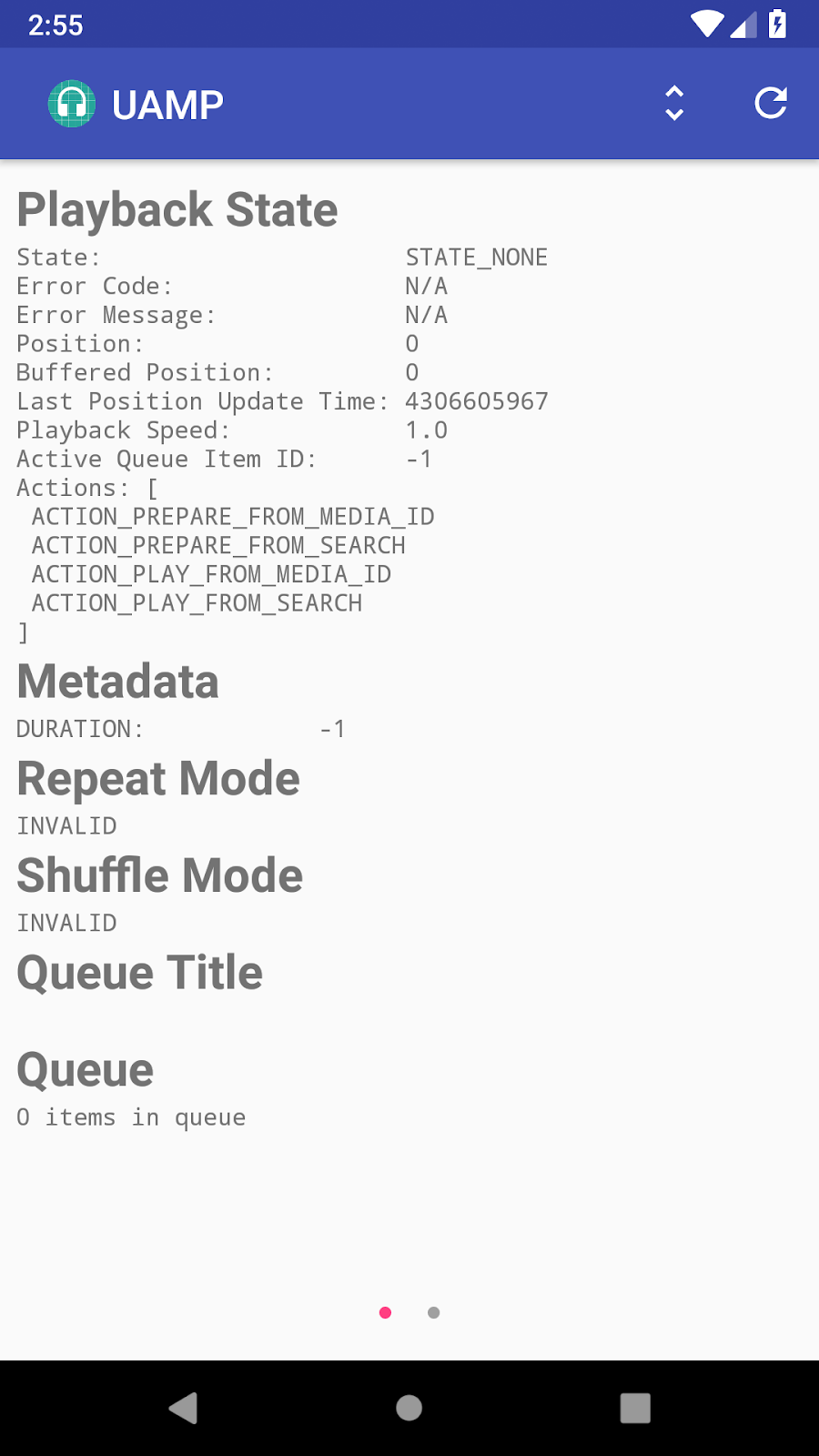

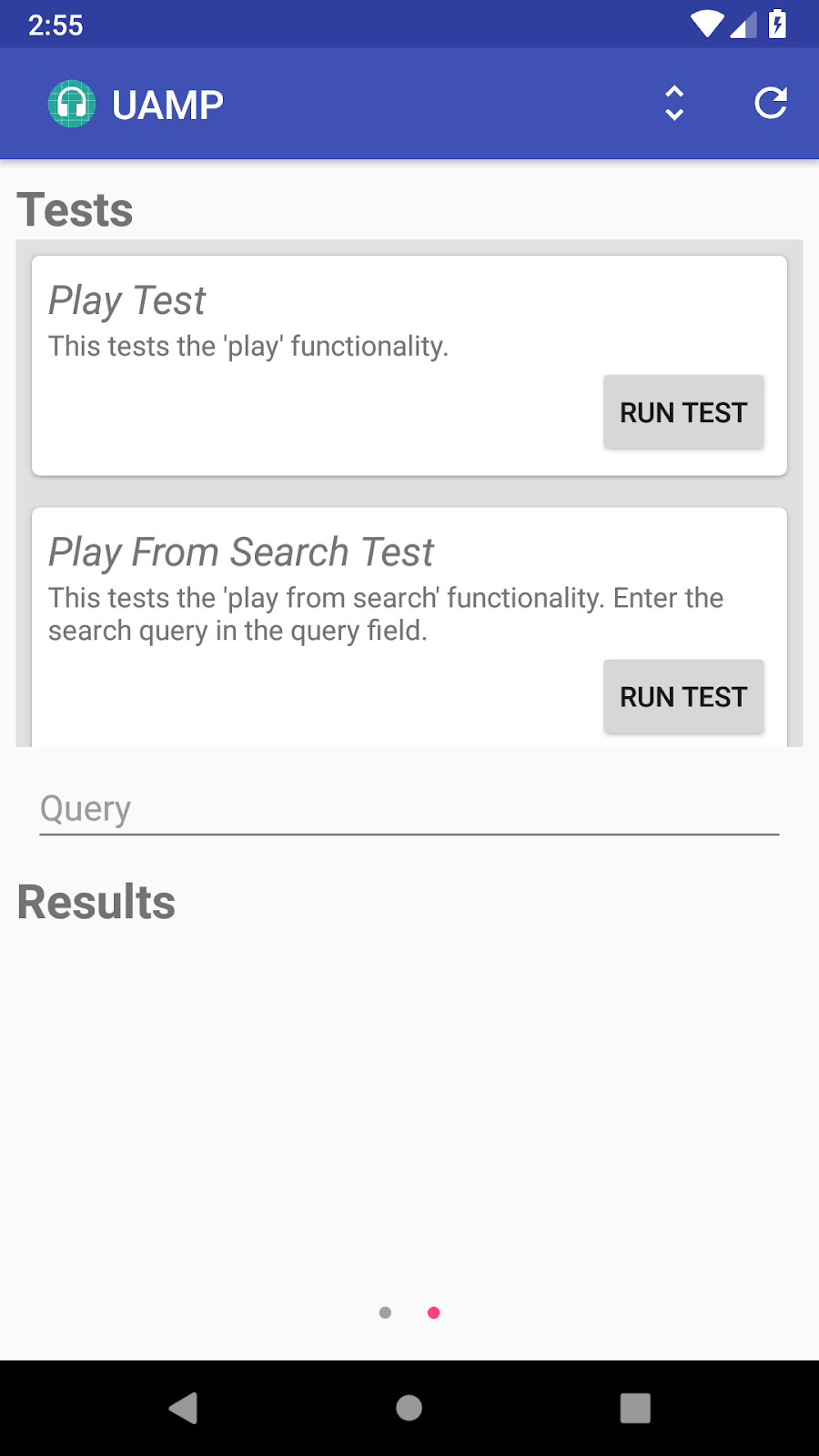

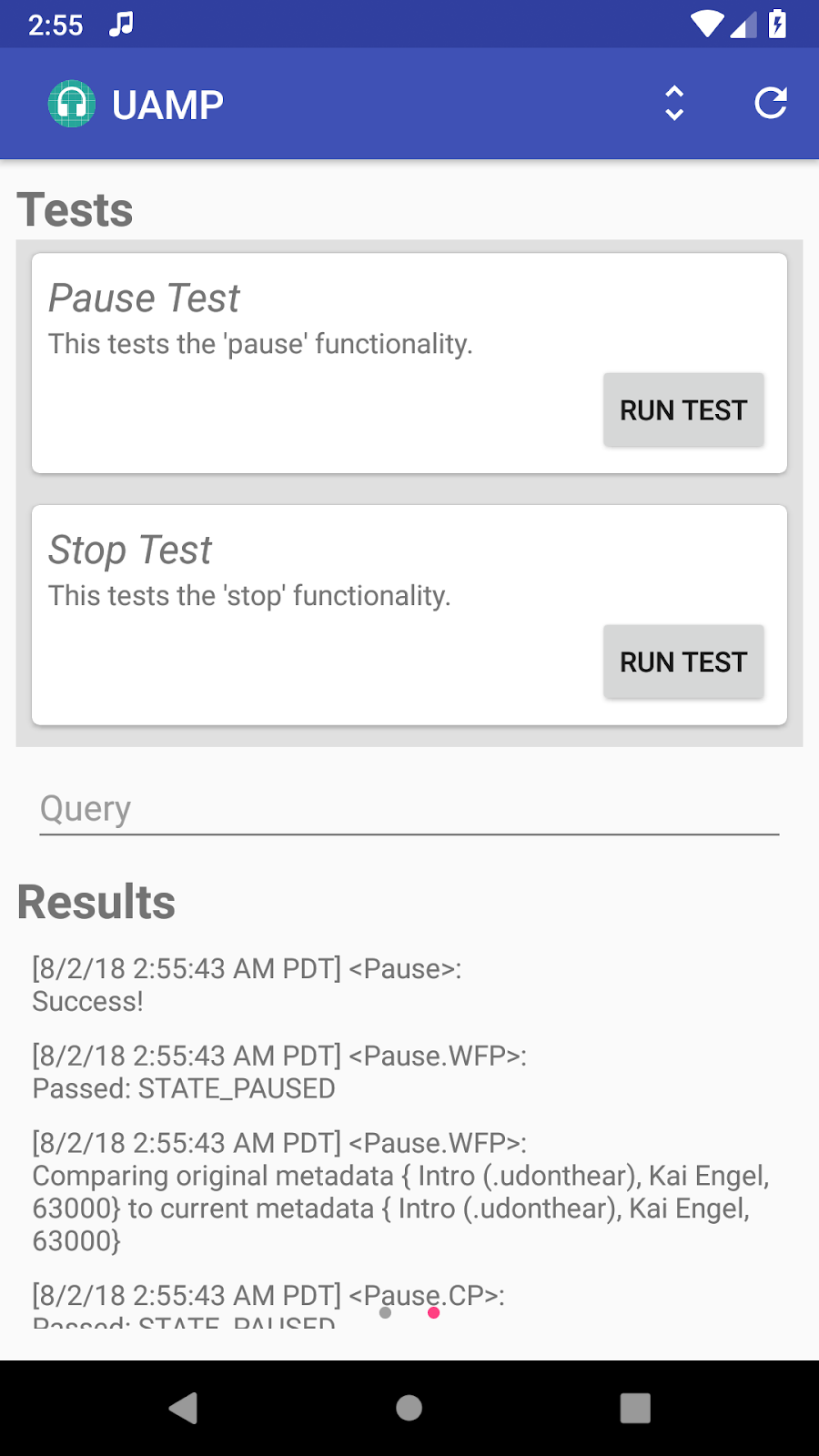

Android 11 media

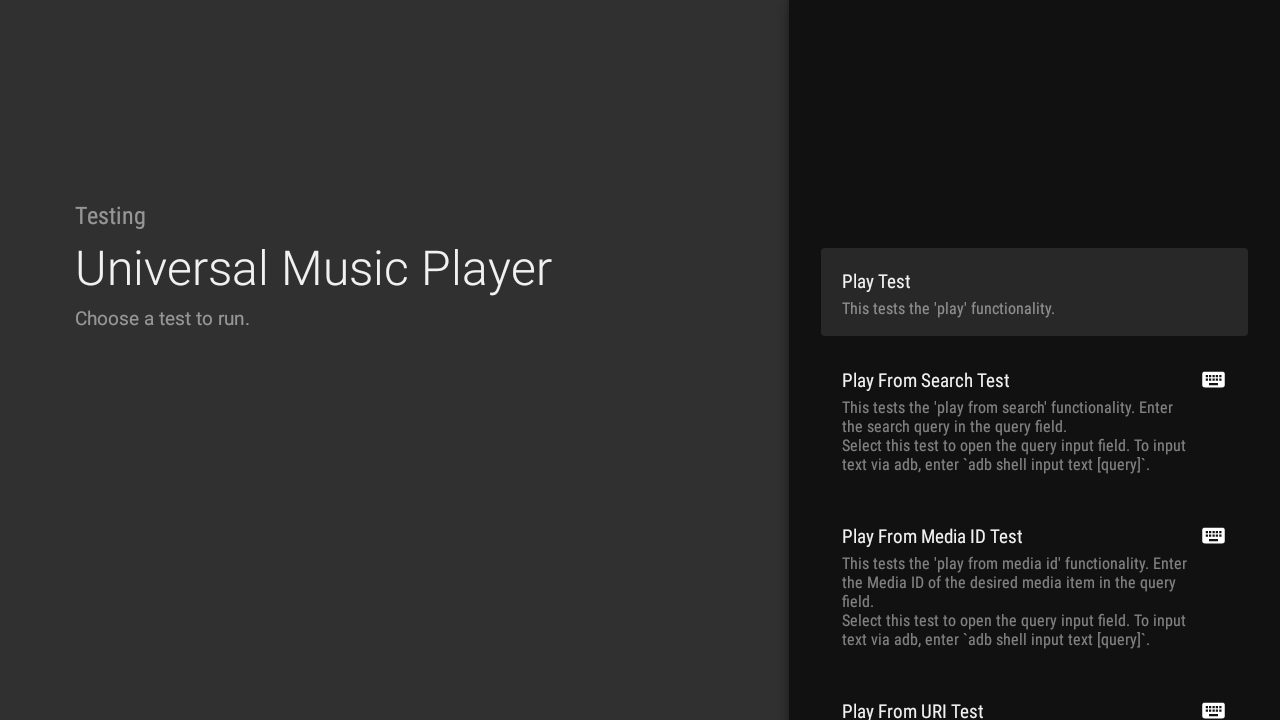

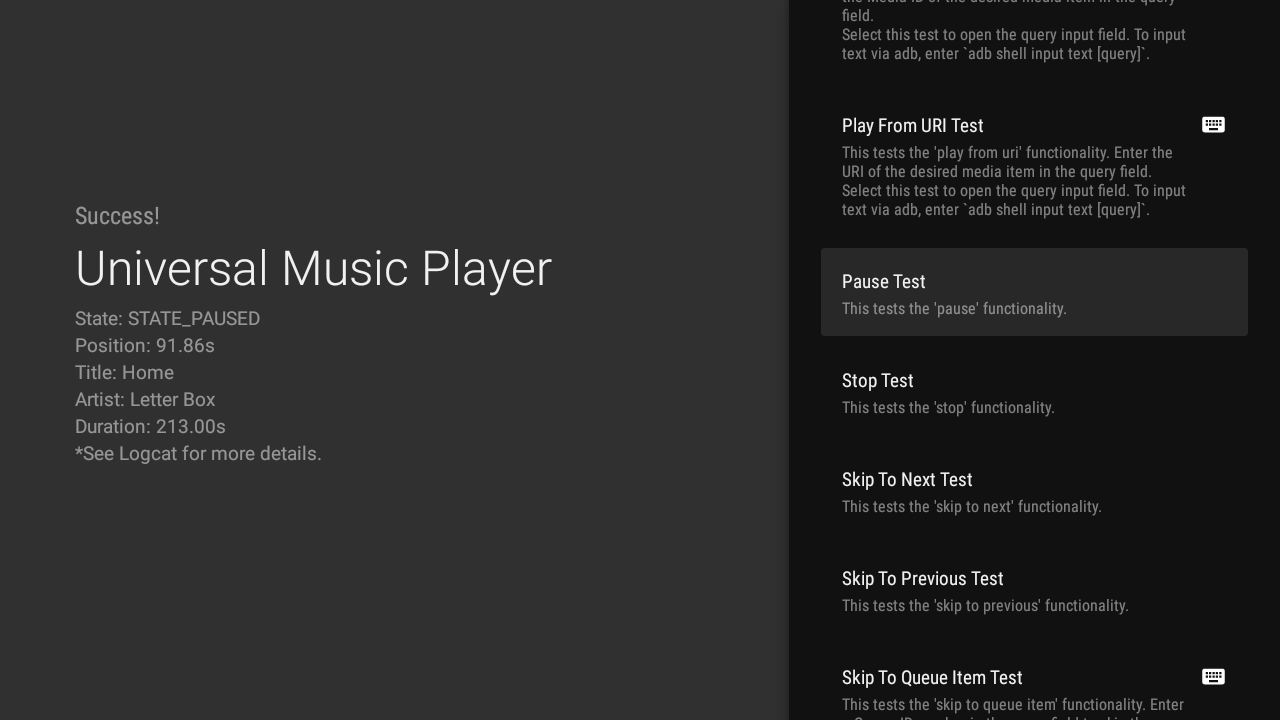

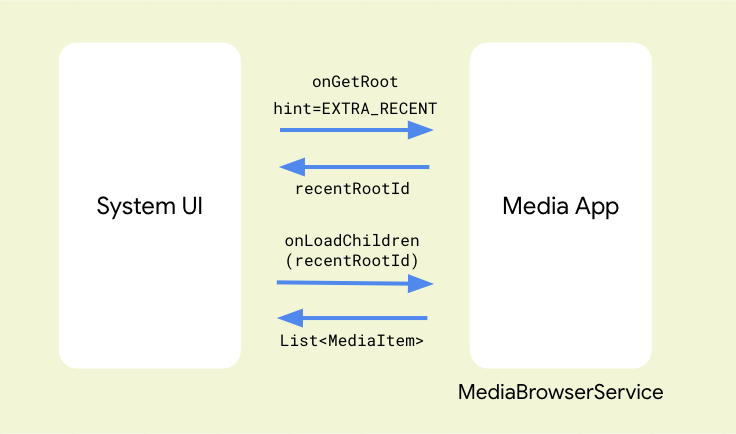

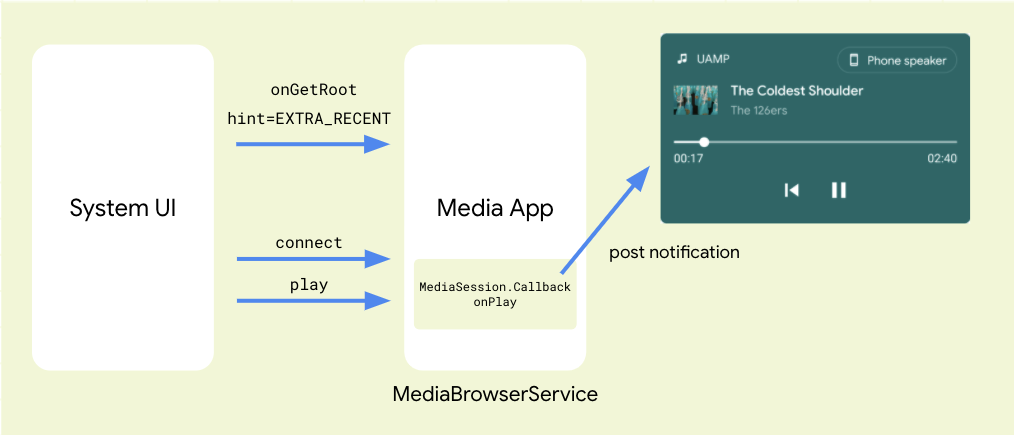

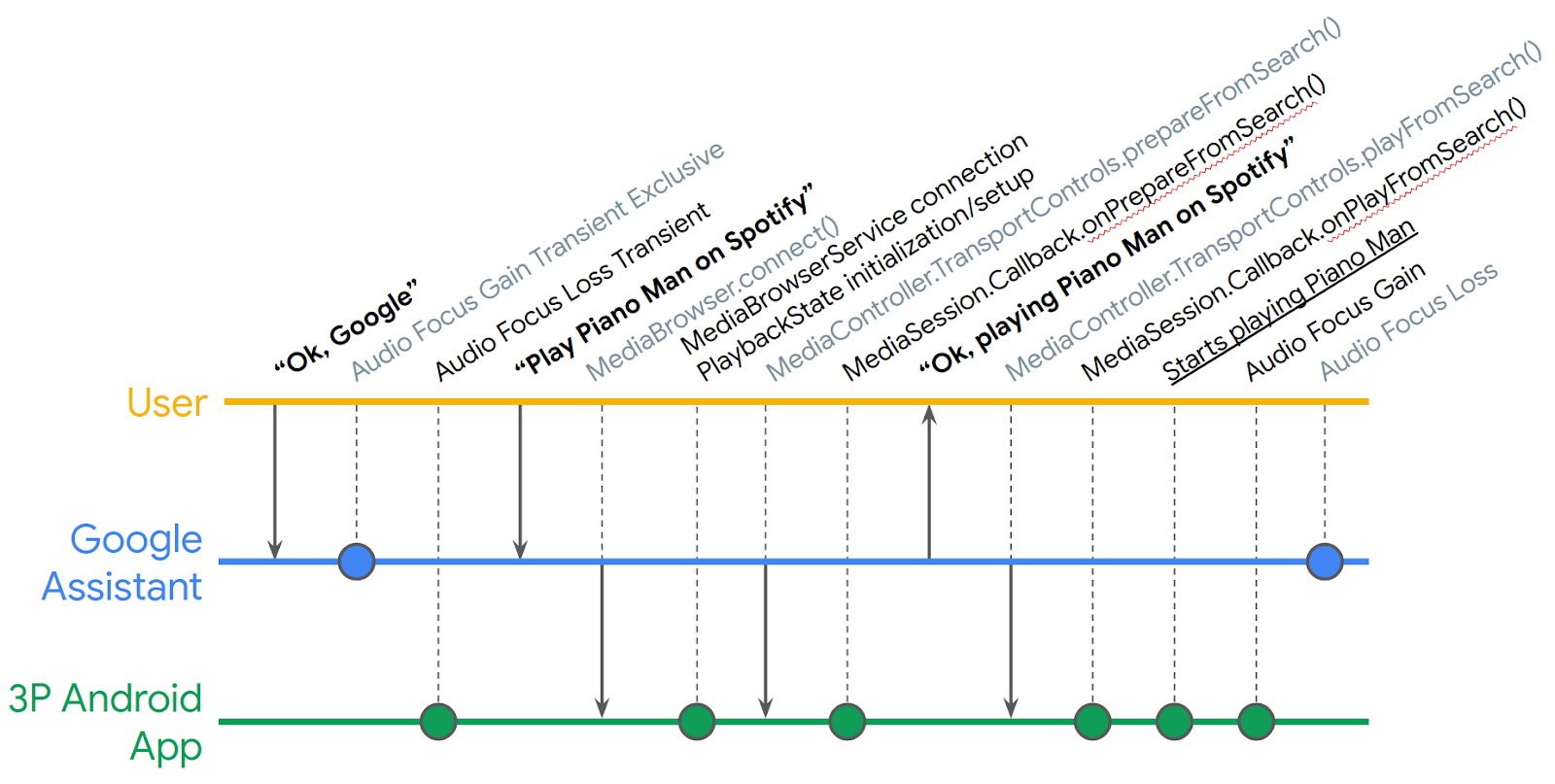

We covered how to take advantage of Android 11's new media controls by making sure your app is using MediaStyle with a valid MediaSession token. We showed how to support Media resumption by making your app discoverable with a MediaBrowserServiceCompat, using the EXTRA_RECENT hint to help with resuming content, and handling the onPlay and onGetRoot callbacks. Finally we showed you how to leverage the MediaRouter jetpack library to support seamless media transfer between devices. Check out the updated version of the UAMP sample which contains a reference implementation for media controls and playback resumption.

Android 11 and 5G

We covered some of the primary ways apps can benefit from 5g, including:

- Turning indoor use cases into outdoor use cases

- Turning photo-centric UX into video-centric or AR-centric UX

- Prefetch helpfully to make your app even more responsive

- Turn niche use cases into mainstream use cases, such as allowing streaming content everywhere

Android 11 adds new APIs and updates existing APIs to ensure you have all the tools you need to leverage the capabilities of 5G, such as an enhanced bandwidth estimation API, 5G detection capabilities, and a new meteredness flag from cellular carriers. The Android emulator now enables you to develop and test these APIs without needing a 5G device or network connection. All of this and more is available from our dedicated 5G page.

Catch up on what's happening with game development

We presented a special "11 Weeks" episode of The Android Game Developer Show providing an update on the tools, services, and technologies we're bringing to help you build, optimize, and distribute great games.

Check out d.android.com/games to learn about everything we've covered this week and more, and stay up to date by signing up for the games quarterly newsletter.

Android game development tooling

In Android Studio 4.1, we enhanced the System Trace view of the CPU Profiler and added the Native Memory Profiler, and both can now be launched standalone from Android Studio. The System Trace and Native Memory blog posts have more details on how to use them with your game or app.

You can sign up for developer previews of the Android Game Development Extension, and the Android GPU Inspector. The Android Game Development Extension helps with building multi-platform C/C++ games, while the GPU Inspector is used to profile and debug graphics. Stay tuned for the open beta of the Android GPU Inspector.

Reaching more devices and users with your game

We took a deep dive into the Android Performance Tuner, explaining annotations, quality levels, and fidelity parameters along with some best practices on how to use them. Once you've implemented that, we also covered how to use the new insights and analysis you'll get within Android Vitals.

We showed how Google Play Asset Delivery brings the benefits of app bundles to games with large asset sizes, flexible delivery modes, auto-updates, compression, and delta patching. Texture compression format targeting is coming very soon letting you tap into modern texture compression such as ASTC (now supported on over 50% of devices) allowing you to considerably cut your game size and in-memory footprint.

We published new codelabs to help you integrate Android Performance Tuner and Google Play Asset Delivery into your Unity or native C/C++ game.

We explained how we can help protect your game, players, and business by fighting monetization and distribution abuse.

Boost your games' go-to-market

We launched the open beta of Play Games Services - Friends to help you bootstrap and enhance your in-game friend networks while having your games surfaced in new clusters in the Play Games app.

We demonstrated the new release management experience in the Google Play Console beta and showed how it can help your testing and publishing workflow.

Day one auto-installs is a new Google Play feature that allows users to request the automatic installation of your game during pre-registration. Early experiments show a +20% increase in day 1 installs when using this feature. The new pre-registration menu in the beta Google Play Console makes it easier than ever to access this feature.

We showed how to optimize your store listing page to take advantage of the greatly improved games visual experience within Google Play, showcasing rich game graphics and engaging videos.

The new in-app review API lets you choose when to prompt users to write reviews from within your game, without heading back to the app details page. This API supports both public and private reviews for when your app is in beta.

Learning path

If you’re looking for an easy way to pick up the highlights of this week, check out the Games, media, and 5G pathway. A pathway is an ordered tutorial that allows users to complete a pre-defined module that culminates in a quiz. It includes videos and blog posts. A virtual badge is awarded to each user who passes the quiz. Test your knowledge of key takeaways about Android game development, media, and 5G to earn a limited edition badge.

Key takeaways

Thank you for tuning in and learning about the latest in Android game, media, and 5G development.

Seamless media transfer and media resumption

5G

5G Detection (Android Emulator)

Features found in Android Studio 4.1 (Beta Channel)

System Trace in Android Studio CPU Profiler

Android Studio Native Memory Profiler

Pre-release standalone tools

Android Game Development Extension

Features in the Android Game SDK

Android Performance Tuner (C/C++ Codelab) (Unity Codelab)

Google Play features

Play Asset Delivery (C/C++ Codelab) (Unity Codelab)

Play Games Services Friends Beta

You can find the entire playlist of #11WeeksOfAndroid video content here, and learn more about each week here. We’ll continue to spotlight new areas each week, so keep an eye out and follow us on Twitter and YouTube. Thanks so much for letting us be a part of this experience with you!

Posted by Nevin Mital, Partner Developer Relations

Posted by Nevin Mital, Partner Developer Relations