Posted by Mete Atamel, Developer Advocate

One of our goals here on the

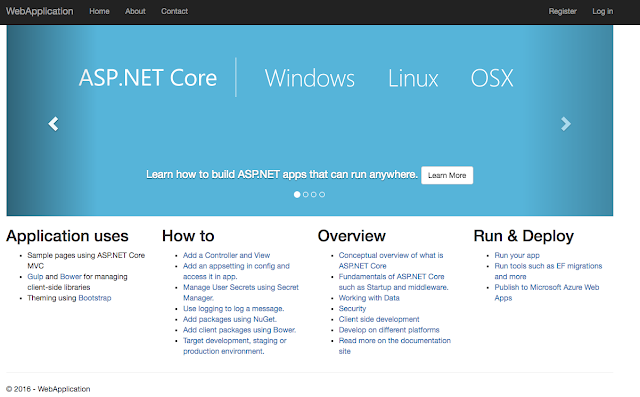

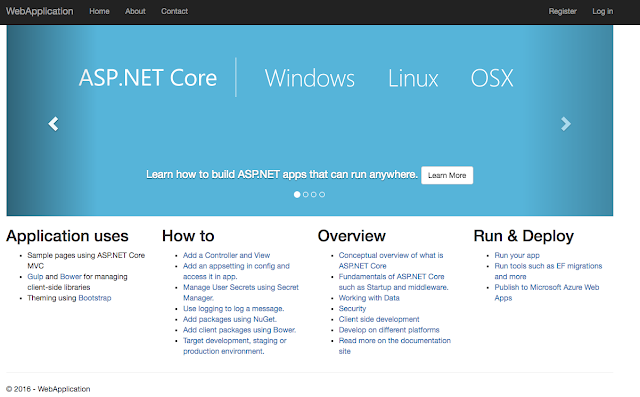

Google Cloud Platform team is to support the broadest possible array of platforms and operating systems. That’s why we’re so excited about the ASP.NET Core, the next generation of the open source ASP.NET web framework built on

.NET Core. With it, .NET developers can run their apps cross-platform on Windows, Mac and Linux.

One thing that ASP.NET Core does is allow .NET applications to run in Docker containers. All of a sudden, we’ve gone from Windows-only web apps to lean cross-platform web apps running in containers. This has been great to see!

|

| ASP.NET Core supports running apps across a variety of operating system platforms |

Containers can provide a stable runtime environment for apps, but they aren’t always easy to manage. You still need to worry about how to automate deployment of containers, how to scale up and down and how to upgrade or downgrade app versions reliably. In short, you need a container management platform that you can rely on in production.

That’s where the open-source Kubernetes platform comes in. Kubernetes provides high-level building blocks such as pods, labels, controllers and services that collectively help you maintain containerized apps.

Google Container Engine provides a hosted version of Kubernetes which can greatly simplify creating and managing Kubernetes clusters.

My colleague Ivan Naranjo recently published a blog post that shows you how to take an ASP.NET Core app, containerize it with Docker and run it on Google App Engine. In this post, we’ll take a containerized ASP.NET Core app and manage it with Kubernetes and Google Container Engine. You'll be surprised how easy it is, especially considering that running an ASP.NET app on a non-Windows platform was unthinkable until recently.

Prerequisites

I am assuming a Windows development environment, but the instructions are similar on Mac or Linux.

First, we need to install .NET Core, install Docker and install Google Cloud SDK for Windows. Then, we need to create a Google Cloud Platform project. We'll use this project later on to host our Kubernetes cluster on Container Engine.
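Before going further, it can help to confirm that the prerequisite tools are actually on the PATH. The following is a minimal sketch, assuming a POSIX-style shell (Mac, Linux or something like Git Bash on Windows, not the plain Windows command prompt); `missing_tools` is a hypothetical helper name, not part of any of the tools above.

```shell
# Print the name of every listed command that cannot be found on PATH.
# Prints nothing when all of them are available.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Example (kubectl is installed later through gcloud):
#   missing_tools dotnet docker gcloud
```

If the example call prints nothing, all three tools were found; any name it prints still needs to be installed.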

Create a HelloWorld ASP.NET Core app

.NET Core comes with .NET Core Command Line Tools, which make it easy to create apps from the command line. Let’s create a HelloWorld folder and create a web app using the dotnet command:

$ mkdir HelloWorld

$ cd HelloWorld

$ dotnet new -t web


Restore the dependencies and run the app locally:

$ dotnet restore

$ dotnet run


You can then visit http://localhost:5000 to see the default ASP.NET Core page.

Get the app ready for publishing

Next, let’s pack the application and all of its dependencies into a folder to get it ready to publish.

$ dotnet publish -c Release


Once the app is published, we can test the resulting dll using the following:

$ cd bin/Release/netcoreapp1.0/publish/

$ dotnet HelloWorld.dll


Containerize the ASP.NET Core app with Docker

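Let’s now take our HelloWorld app and containerize it with Docker. Create a Dockerfile in the publish folder we just switched to, next to HelloWorld.dll, because the image copies the current directory and runs that file: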

FROM microsoft/dotnet:1.0.1-core

COPY . /app

WORKDIR /app

EXPOSE 8080/tcp

ENV ASPNETCORE_URLS http://*:8080

ENTRYPOINT ["dotnet", "HelloWorld.dll"]


This is the recipe for the Docker image that we'll create shortly. In a nutshell, we're creating an image based on the microsoft/dotnet:1.0.1-core image, copying the current directory to the /app directory in the container, making /app the working directory, exposing port 8080, telling ASP.NET Core to listen on that port and starting the app with dotnet HelloWorld.dll.

Now we’re ready to build our Docker image and tag it with our Google Cloud project ID:

$ docker build -t gcr.io/<PROJECT_ID>/hello-dotnet:v1 .
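The same image name is typed again for docker run, the push to Container Registry and kubectl run, so a single typo (a missing slash, for example) can break a later step. One way to avoid that, sketched here under the assumption of a POSIX-style shell, is to build the reference once and reuse it. `image_ref` and the `PROJECT_ID` variable are hypothetical names introduced for illustration.

```shell
# Build a Container Registry image reference of the form
# gcr.io/<project>/<name>:<tag>. Fails if any part is empty.
image_ref() {
  if [ -z "${1:-}" ] || [ -z "${2:-}" ] || [ -z "${3:-}" ]; then
    echo "usage: image_ref PROJECT_ID NAME TAG" >&2
    return 1
  fi
  printf 'gcr.io/%s/%s:%s\n' "$1" "$2" "$3"
}

# Example, assuming PROJECT_ID holds your project ID:
#   IMAGE=$(image_ref "$PROJECT_ID" hello-dotnet v1)
#   docker build -t "$IMAGE" .
```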


To make sure that our image is good, let’s run it locally in Docker:

$ docker run -d -p 8080:8080 -t gcr.io/<PROJECT_ID>/hello-dotnet:v1


Now when you visit http://localhost:8080, you should see the same default ASP.NET Core page, this time running inside a Docker container.
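The container may need a moment before it starts answering requests, so a check made right after docker run can fail even though nothing is wrong. A small retry helper is one way to script that check. This is only a sketch assuming a POSIX-style shell; `retry` is a hypothetical helper, and the curl call in the comment assumes curl is installed.

```shell
# Run a command until it succeeds, giving up after ATTEMPTS tries.
# Usage: retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Note: the _retry_* variables are global, since POSIX sh has no "local".
retry() {
  _retry_attempts=$1
  _retry_delay=$2
  shift 2
  _retry_count=1
  while ! "$@"; do
    if [ "$_retry_count" -ge "$_retry_attempts" ]; then
      return 1
    fi
    _retry_count=$((_retry_count + 1))
    sleep "$_retry_delay"
  done
}

# Example: probe the local container up to 10 times, 2 seconds apart.
#   retry 10 2 curl -fsS -o /dev/null http://localhost:8080
```

The same helper can be pointed at the external IP address later on, once the app is running in the cluster.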

Create a Kubernetes cluster in Container Engine

We are ready to create our Kubernetes cluster, but first let’s install kubectl. In Google Cloud SDK Shell:

$ gcloud components install kubectl

Now, let’s push our image to Google Container Registry using gcloud, so we can later refer to this image when we deploy and run it on our Kubernetes cluster. In the Google Cloud SDK Shell, type:

$ gcloud docker push gcr.io/<PROJECT_ID>/hello-dotnet:v1

Create a Kubernetes cluster with two nodes in Container Engine:

$ gcloud container clusters create hello-dotnet-cluster --num-nodes 2 --machine-type n1-standard-1 --zone europe-west1-b

This will take a little while but when the cluster is ready, you should see something like this:

Creating cluster hello-dotnet-cluster...done.

Once the cluster exists, configure kubectl command line access to it with the following:

$ gcloud container clusters get-credentials hello-dotnet-cluster --zone europe-west1-b --project <PROJECT_ID>

Deploy and run the app in Container Engine

At this point, we have our image hosted on Google Container Registry and we have our Kubernetes cluster ready in Google Container Engine. There’s only one thing left to do: run our image in our Kubernetes cluster. To do that, we can use the kubectl command line tool.

Create a deployment from our image in Kubernetes:

$ kubectl run hello-dotnet --image=gcr.io/<PROJECT_ID>/hello-dotnet:v1 --port=8080

deployment "hello-dotnet" created

Make sure the deployment and pod are running:

$ kubectl get deployments

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

hello-dotnet 1 1 1 0 28s

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-dotnet-3797665162-gu99e 1/1 Running 0 1m


And expose our deployment to the outside world:

$ kubectl expose deployment hello-dotnet --type="LoadBalancer"

service "hello-dotnet" exposed


Once the service is ready, we can see the external IP address:

$ kubectl get services

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

hello-dotnet XX.X.XXX.XXX XXX.XXX.XX.XXX 8080/TCP 1m
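If you want to pick the external IP address out of that listing in a script, one option is to locate the EXTERNAL-IP column by its header rather than by position, since the column order may differ between kubectl versions. The following is a sketch assuming a POSIX-style shell with awk; `external_ip` is a hypothetical helper name.

```shell
# Read `kubectl get services` output on stdin and print the EXTERNAL-IP
# value for the named service. The column is found from the header row.
external_ip() {
  awk -v svc="$1" '
    NR == 1 {
      for (i = 1; i <= NF; i++) if ($i == "EXTERNAL-IP") col = i
      next
    }
    $1 == svc && col { print $col }
  '
}

# Example:
#   kubectl get services | external_ip hello-dotnet
```

While the load balancer is still being provisioned, the column holds a placeholder rather than an address, so the result is worth checking before use.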


Finally, if you visit the external IP address on port 8080, you should see the default ASP.NET Core app managed by Kubernetes!
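The kubectl run and kubectl expose steps above can also be captured declaratively in a manifest file, which is easier to keep in source control. The sketch below writes one from the shell. It is not what the commands in this post generate: it assumes a cluster recent enough to serve the apps/v1 Deployment API, the `app: hello-dotnet` label and the file name are my own choices, and `PROJECT_ID` is a placeholder variable you would set to your project ID.

```shell
# Hypothetical placeholder; set PROJECT_ID to your own project ID.
PROJECT_ID="${PROJECT_ID:-my-project}"

# Write a Deployment and a LoadBalancer Service for the app to a file.
cat > hello-dotnet.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-dotnet
spec:
  replicas: 1
  selector:
    matchLabels:
      app: hello-dotnet
  template:
    metadata:
      labels:
        app: hello-dotnet
    spec:
      containers:
      - name: hello-dotnet
        image: gcr.io/${PROJECT_ID}/hello-dotnet:v1
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: hello-dotnet
spec:
  type: LoadBalancer
  selector:
    app: hello-dotnet
  ports:
  - port: 8080
    targetPort: 8080
EOF

# Apply it to the cluster with:
#   kubectl apply -f hello-dotnet.yaml
```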

It’s fantastic to see the ASP.NET and Linux worlds coming together. With Kubernetes, ASP.NET Core apps can benefit from automated deployments, scaling, reliable upgrades and much more. It’s a great time to be a .NET developer, for sure!