Posted by Saba Zaidi, Senior Interaction Designer, Google Assistant

Earlier this year we announced Smart Displays, a new category of devices with the Google Assistant built in that augment voice experiences with immersive visuals. These new, highly visual devices can make it easier to convey complex information, suggest Actions, support transactions, and express your brand. Starting today, Smart Displays are available for purchase at major US retailers, both in-store and online.

Interacting through voice is fast and easy, because speaking comes naturally to people, and language doesn't constrain people to predefined paths, unlike traditional visual interfaces. However, in audio-only interfaces, it can be difficult to communicate detailed information like lists or tables, and nearly impossible to represent rich content like images, charts or a visual brand identity. Smart Displays allow you to create Actions for the Assistant that can respond to natural conversation, and also display information and represent your brand in an immersive, visual way.

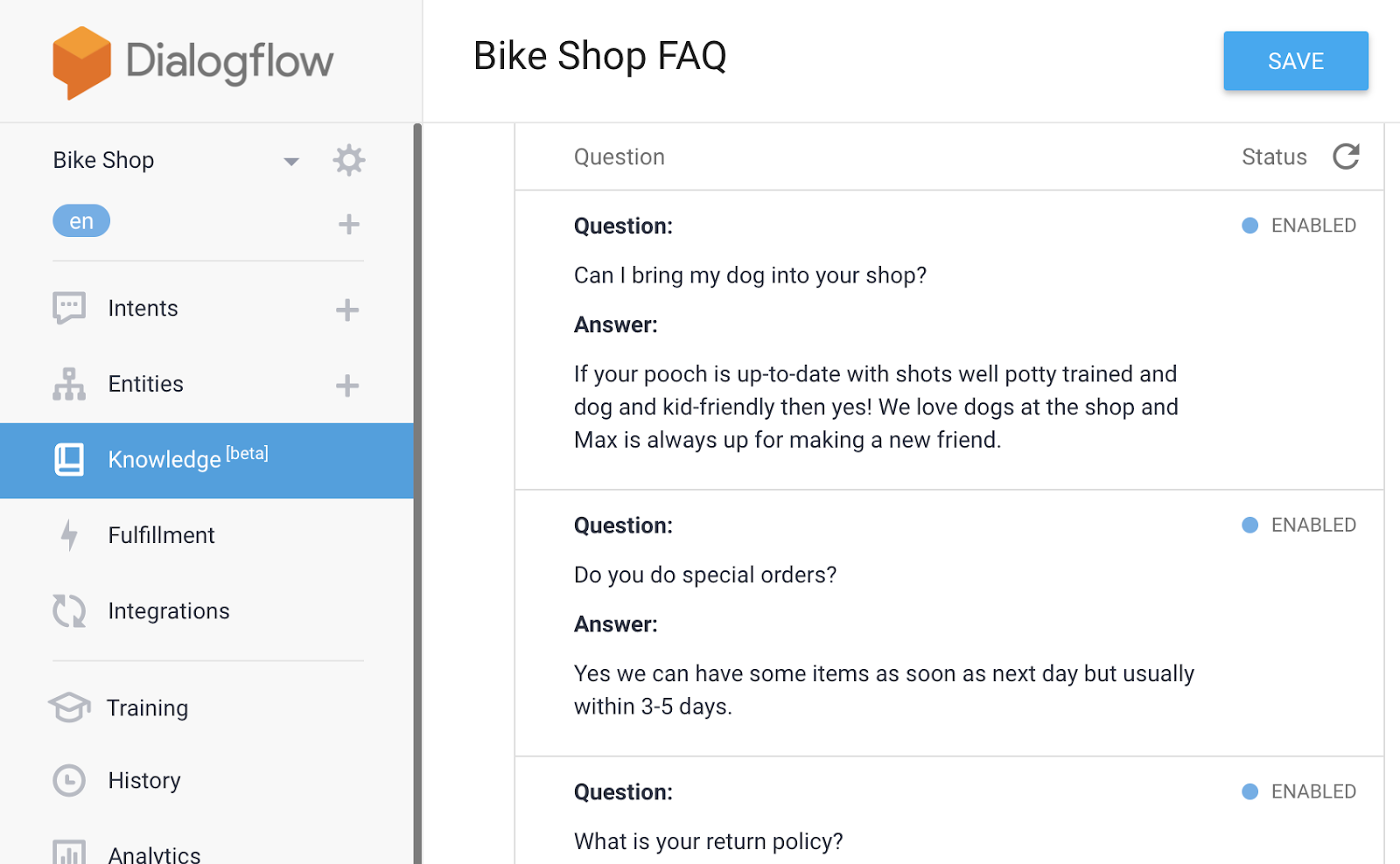

Today we're announcing consumer availability of rich responses optimized for Smart Displays. With rich responses, you can use basic cards, lists, tables, carousels and suggestion chips, which give you an array of visual interactions for your Action, with more visual components coming soon. You can also create custom themes to more deeply customize your Action's look and feel.

If you've already built a voice-centric Action for the Google Assistant, not to worry, it'll work automatically on Smart Displays. But we highly recommend adding rich responses and custom themes to make your Action even more visually engaging and useful to your users on Smart Displays. Here are a few tips to get you started:

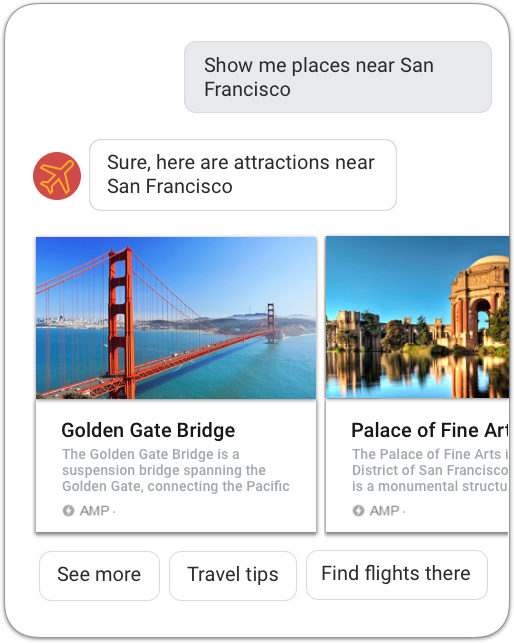

1. Consider using visual components instead of complex voice prompts

Smart Displays offer several visual formats for displaying information and facilitating user input. A carousel of images, a list or a table can help users scan information efficiently and then interact with a quick tap or swipe.

For example, consider a long, spoken prompt like: "Welcome to National Anthems! You can play the national anthems from 20 different countries, including the United States, Canada and the United Kingdom. Which would you like to hear?"

Rather than merely showing the transcript of that whole spoken prompt on the screen, you could show a carousel of country flags, which makes it easy for users to scroll to and tap the anthem they want to hear.
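To make the idea concrete, here is a minimal TypeScript sketch of pairing a short spoken prompt with a carousel. The `CarouselItem` and `VisualResponse` shapes, the `buildAnthemPrompt` helper and the `example.com` image URLs are all hypothetical and exist only for illustration; they are not the Actions on Google API, so check the rich responses documentation for the real types.

```typescript
// Hypothetical shapes for illustration only; not the real Actions on Google types.
interface CarouselItem {
  key: string;      // value handed back to the Action when the user taps the item
  title: string;    // label shown under the image
  imageUrl: string; // placeholder URL, assumed for this sketch
  imageAlt: string; // accessibility text for the image
}

interface VisualResponse {
  speech: string;           // short spoken prompt
  carousel: CarouselItem[]; // options the user can scroll through and tap
}

// Build a short spoken prompt and move the list of options into a carousel.
function buildAnthemPrompt(countries: string[]): VisualResponse {
  return {
    speech: "Welcome to National Anthems! Which would you like to hear?",
    carousel: countries.map((country) => ({
      key: country.toLowerCase().replace(/\s+/g, "_"),
      title: country,
      imageUrl: `https://example.com/flags/${encodeURIComponent(country)}.png`,
      imageAlt: `Flag of ${country}`,
    })),
  };
}

const response = buildAnthemPrompt(["United States", "Canada", "United Kingdom"]);
console.log(JSON.stringify(response, null, 2));
```

The spoken part stays short because the screen carries the list of choices, so the voice prompt no longer has to enumerate them.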

2. Use visual suggestions to streamline the conversation

Suggestion chips are a great way to surface recommendations, aid feature discovery and keep the conversation moving on Smart Displays.

In this example, suggestion chips can help users find the "surprise me" feature, find the most popular anthems, or filter anthems by region.

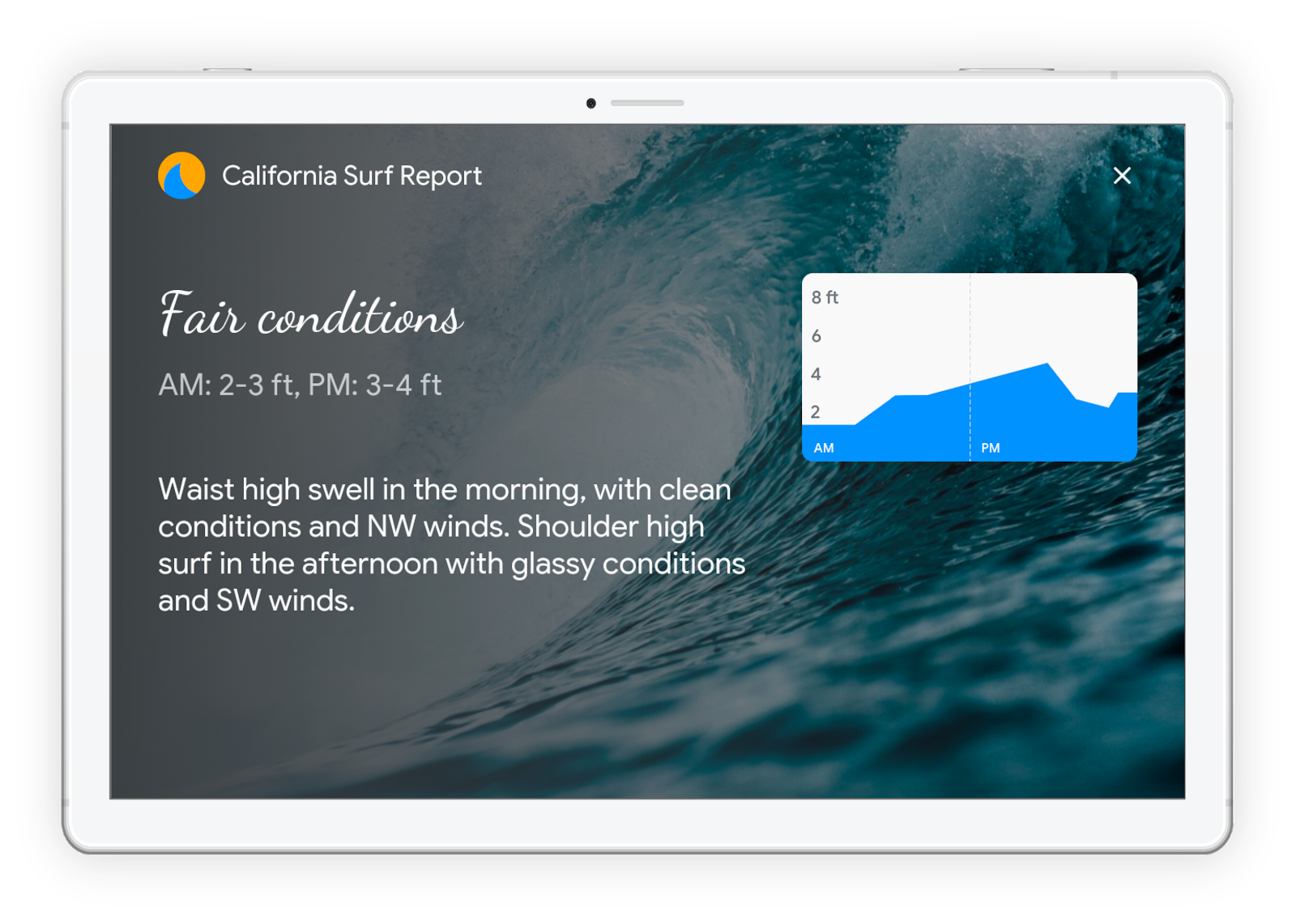
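As a rough sketch of how such chips might be prepared, the TypeScript helper below trims, de-duplicates and caps a list of chip labels. The `buildSuggestionChips` function is hypothetical, and the default limits of 8 chips and 25 characters are assumptions made for this example; confirm the actual limits in the rich responses documentation.

```typescript
// Hypothetical helper for illustration; the default limits are assumptions.
const ASSUMED_MAX_CHIPS = 8;
const ASSUMED_MAX_CHIP_LENGTH = 25;

// Keep only short, unique, non-empty labels, up to the maximum chip count.
function buildSuggestionChips(
  labels: string[],
  maxChips: number = ASSUMED_MAX_CHIPS,
  maxLength: number = ASSUMED_MAX_CHIP_LENGTH,
): string[] {
  const seen = new Set<string>();
  const chips: string[] = [];
  for (const raw of labels) {
    if (chips.length >= maxChips) break;
    const label = raw.trim();
    const id = label.toLowerCase(); // compare case-insensitively
    if (label.length === 0 || label.length > maxLength || seen.has(id)) continue;
    seen.add(id);
    chips.push(label);
  }
  return chips;
}

const chips = buildSuggestionChips([
  "Surprise me",
  "Most popular",
  "Filter by region",
  "surprise me", // duplicate, dropped
  "",            // empty, dropped
]);
console.log(chips);
```

Keeping chip labels short and distinct helps them stay scannable on screen.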

3. Express your brand with themes

You can take advantage of new custom themes to differentiate your experience and represent your brand's persona, choosing a custom voice, background image or color, font style, or the shape of your cards to match your branding.

For example, an Action like California Surf Report could be themed in a more immersive and customized way.

4. Check out our library of developer resources

We offer more tips on designing and building for Smart Displays and other visual devices in our conversation design site and in our talk from I/O about how to design Actions across devices.

Then head to our documentation to learn how to customize the visual appearance of your Actions with rich responses. You can also test and tinker with customizations for Smart Displays in the Actions Console simulator.

Don't forget that once you publish your first Action, you can join our community program* and receive your exclusive Google Assistant t-shirt and up to $200 of monthly Google Cloud credit.

We can't wait to see—quite literally—what you build next! Thanks for being a part of our community, and as always, if you have ideas or requests that you'd like to share with our team, don't hesitate to join the conversation.

*Some countries are not eligible to participate in the developer community program; please review the terms and conditions.