The JGroups messaging toolkit is a popular solution for reliably clustering Java-based application servers. This post describes how to store, host, and manage your JGroups cluster member data using Google Cloud Storage. The configuration provided here is particularly well suited to discovering Google Compute Engine nodes; however, for testing purposes, you can also use it with your current on-premises virtual machines.

Overview of JGroups clustering on Cloud Storage

JGroups versions 3.5 and later can discover clustered members, or nodes, on Google Cloud Platform (GCP) through a JGroups discovery protocol called GOOGLE_PING. GOOGLE_PING stores information about each member in flat files in a Cloud Storage bucket, and then uses these files to discover the initial members of a cluster. When a new member joins, it reads the addresses of the other cluster members from the Cloud Storage bucket, and then pings each member to announce itself.

By default, JGroups members use multicast communication over UDP to broadcast their presence to other instances on a network. Google Cloud Platform, like most cloud providers and enterprise networks, does not support multicast; however, both GCP and JGroups support unicast communication over TCP as a viable fallback. In the unicast-over-TCP model, a new instance instead announces its arrival by iterating over the list of nodes already joined to a cluster, individually notifying each node.
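The discovery flow described above can be sketched as a small in-memory simulation. This is not JGroups code: the hypothetical BucketDiscoverySim class uses a Map as a stand-in for the Cloud Storage folder, and a loop stands in for the individual TCP messages a joining member sends. It only illustrates the order of operations: read the member list, notify each existing member one at a time, then register yourself.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical simulation of bucket-based discovery with unicast announcements.
 * The map stands in for the Cloud Storage folder; it is not the JGroups API.
 */
class BucketDiscoverySim {
    // Stand-in for the bucket: cluster name -> member addresses, in join order.
    private final Map<String, List<String>> bucket = new LinkedHashMap<>();

    /**
     * A new member reads the current member list, announces itself to each
     * existing member individually (no multicast), and then adds its own
     * address to the list. Returns the members it announced itself to.
     */
    List<String> join(String cluster, String selfAddress) {
        List<String> members = bucket.computeIfAbsent(cluster, c -> new ArrayList<>());
        List<String> notified = new ArrayList<>();
        for (String peer : members) {
            // Real JGroups would send a discovery message to `peer` over TCP here.
            notified.add(peer);
        }
        members.add(selfAddress);
        return notified;
    }

    /** Snapshot of the members currently recorded for a cluster. */
    List<String> members(String cluster) {
        return List.copyOf(bucket.getOrDefault(cluster, List.of()));
    }

    public static void main(String[] args) {
        BucketDiscoverySim sim = new BucketDiscoverySim();
        System.out.println(sim.join("JGROUPS_CLUSTER", "10.240.0.2:7800")); // []
        System.out.println(sim.join("JGROUPS_CLUSTER", "10.240.0.3:7800")); // [10.240.0.2:7800]
    }
}
```

Because each join is one read of the shared list followed by one message per existing member, the cost of announcing grows linearly with cluster size; that is the trade-off for not relying on multicast.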

Configure Cloud Storage to store JGroups configuration files

To allow JGroups to use Cloud Storage for file storage, begin by creating a Cloud Storage bucket:

- In the Cloud Platform Console, go to the Cloud Storage browser.

- Click Create bucket.

- In the Create bucket dialog, specify the following:

  - A bucket name, subject to the bucket name requirements

  - The Standard storage class

  - A location where bucket data will be stored

Next, set up interoperability and create a new Cloud Storage developer key, which GOOGLE_PING needs for authentication.

GOOGLE_PING sends authenticated requests through the Cloud Storage XML API, which uses keyed-hash message authentication code (HMAC) authentication with Cloud Storage developer keys. To generate a developer key:
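For readers curious about what HMAC authentication involves, the sketch below computes an S3-style (V2) request signature with the JDK's javax.crypto API: the client joins selected request fields with newlines, signs that string with HMAC-SHA1 using the secret, and Base64-encodes the result. It assumes GOOGLE_PING signs requests the way its S3_PING parent does; the field list in stringToSign, the date, and the bucket path in main are illustrative assumptions, not JGroups source code. You don't need to write this yourself, because GOOGLE_PING signs its requests using the access key and secret you configure.

```java
import java.nio.charset.StandardCharsets;
import java.security.InvalidKeyException;
import java.security.NoSuchAlgorithmException;
import java.util.Base64;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

/** Hypothetical helper illustrating HMAC-SHA1 request signing. */
class HmacSigningSketch {
    /** Returns Base64(HMAC-SHA1(secret, stringToSign)). */
    static String sign(String secret, String stringToSign) {
        try {
            Mac mac = Mac.getInstance("HmacSHA1");
            mac.init(new SecretKeySpec(secret.getBytes(StandardCharsets.UTF_8), "HmacSHA1"));
            byte[] raw = mac.doFinal(stringToSign.getBytes(StandardCharsets.UTF_8));
            return Base64.getEncoder().encodeToString(raw);
        } catch (NoSuchAlgorithmException | InvalidKeyException e) {
            throw new IllegalStateException("HmacSHA1 is unavailable", e);
        }
    }

    /** Assumed V2 layout: verb, Content-MD5, Content-Type, Date, resource path. */
    static String stringToSign(String verb, String contentMd5, String contentType,
                               String date, String resource) {
        return verb + "\n" + contentMd5 + "\n" + contentType + "\n" + date + "\n" + resource;
    }

    public static void main(String[] args) {
        // Placeholder values only; never hard-code a real secret.
        String toSign = stringToSign("GET", "", "", "Tue, 01 Jan 2030 00:00:00 GMT",
                "/your-jgroups-bucket/JGROUPS_CLUSTER/");
        System.out.println(sign("your-secret", toSign));
    }
}
```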

- Open the Storage settings page in the Google Cloud Platform Console.

- Select the Interoperability tab.

- If you have not set up interoperability before, click Enable interoperability access. Note: Interoperability access allows Cloud Storage to interoperate with tools written for other cloud storage systems. Because GOOGLE_PING is based on the JGroups S3_PING protocol, which was written for Amazon S3, it requires interoperability access.

- Click Create a new key.

- Make note of the Access key and Secret values; you'll need them later.

Important: Keep your developer keys secret. Your developer keys are linked to your Google account, and you should treat them as you would treat any set of access credentials.

Configure your clustered application to use GOOGLE_PING

Now that you've created your Cloud Storage bucket and developer keys, update your application's JGroups configuration to use the GOOGLE_PING protocol. For most applications that use JGroups, you can do so as follows:

- Edit your JGroups XML configuration file (jgroups.xml in most cases).

- Modify the file to use TCP instead of UDP by replacing the UDP transport element with a TCP element:

      <TCP bind_port="7800"/>

- Locate the PING element and replace it with GOOGLE_PING, as shown in the following example. Replace your-jgroups-bucket with the name of your Cloud Storage bucket, and replace your-access-key and your-secret with the values of your access key and secret:

      <!-- <PING timeout="2000" num_initial_members="3"/> -->
      <GOOGLE_PING
          location="your-jgroups-bucket"
          access_key="your-access-key"
          secret_access_key="your-secret"
          timeout="2000" num_initial_members="3"/>

GOOGLE_PING now uses your Cloud Storage bucket and automatically creates a folder named after the cluster.
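Because the access key and secret are credentials, you may prefer not to hard-code them in jgroups.xml. One option, sketched below under stated assumptions, is to read them from environment variables at startup and copy them into Java system properties, which the GOOGLE_PING element can then reference through JGroups' ${...} property substitution, for example access_key="${jgroups.google.access_key}". The environment-variable and property names in the hypothetical CredentialsSketch class are placeholders; choose your own and keep them consistent between the code and the XML.

```java
import java.util.Map;

/** Hypothetical startup helper that keeps developer keys out of jgroups.xml. */
class CredentialsSketch {
    // Placeholder names: adjust to your environment and to your jgroups.xml.
    static final String ENV_ACCESS_KEY = "GOOGLE_PING_ACCESS_KEY";
    static final String ENV_SECRET = "GOOGLE_PING_SECRET";
    static final String PROP_ACCESS_KEY = "jgroups.google.access_key";
    static final String PROP_SECRET = "jgroups.google.secret_access_key";

    /** Validates both values first, then exposes them as system properties. */
    static void exportCredentials(Map<String, String> env) {
        String accessKey = require(env, ENV_ACCESS_KEY);
        String secret = require(env, ENV_SECRET);
        System.setProperty(PROP_ACCESS_KEY, accessKey);
        System.setProperty(PROP_SECRET, secret);
    }

    private static String require(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.isBlank()) {
            throw new IllegalStateException("Missing environment variable " + name);
        }
        return value;
    }

    public static void main(String[] args) {
        exportCredentials(System.getenv());
        // Then create your JGroups channel from jgroups.xml as usual; the XML would use
        // access_key="${jgroups.google.access_key}"
        // secret_access_key="${jgroups.google.secret_access_key}"
    }
}
```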

Warning: By default, your virtual machines communicate with your bucket over an unencrypted connection on port 80. To encrypt the connection between the instances and the bucket, add the following attribute to the GOOGLE_PING element:

    <GOOGLE_PING ... port="443"/>

If you use the JBoss WildFly application server, you can configure clustering by configuring the JGroups subsystem and adding the GOOGLE_PING protocol to it.

Demonstration

This section walks you through a concrete demonstration of GOOGLE_PING in action. This example sets up a cluster of Compute Engine instances that reside within the same Cloud Platform project, using their internal IP addresses as ping targets.

First, I start a sender application (using Vert.x) on a Compute Engine instance, making it the first member of my cluster:

    $ java -Djava.net.preferIPv4Stack=true \
        -Djgroups.bind_addr=10.240.0.2 \
        -jar my-sender-fatjar-3.1.0-fat.jar -cluster -cluster-host 10.240.0.2

Note: In general, you should bind to your Compute Engine instances' internal IP addresses. If you would prefer to cluster your instances by using their externally routable IP addresses, add the following parameter to your java command, replacing (external_ip) with the external IP address of the instance:

    -Djgroups.external_addr=(external_ip)

When the application begins running, it displays "No reply," as no receiver nodes have been set up yet:

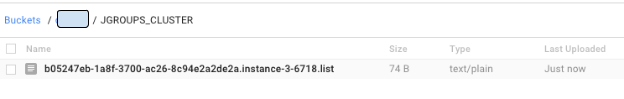

This sender node creates a folder and a .list file in my Cloud Storage bucket. My JGroups cluster is configured with the name JGROUPS_CLUSTER, so my Cloud Storage folder is also automatically named JGROUPS_CLUSTER:

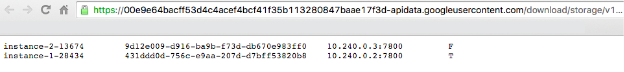

The .list file lists all of the members in the JGROUPS_CLUSTER cluster. In JGroups, the first node to start is designated as the cluster coordinator; as such, the single node I've started has been marked with a T, meaning that the node's cluster-coordinator status is true.
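If you download a .list file to inspect it, a small parser makes the coordinator flag easy to check. The sketch below assumes each non-empty line holds four whitespace-separated fields: the member's logical name, its UUID, its IP:port address, and T or F for coordinator status. That layout is an assumption; compare it with a file from your own bucket before relying on it. The ListFileSketch class, its Member record, and the sample lines in main are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;

/** Hypothetical parser for a JGroups .list file, assuming a 4-field line layout. */
class ListFileSketch {
    /** One member entry: logical name, UUID, physical address, coordinator flag. */
    record Member(String logicalName, String uuid, String physicalAddress, boolean coordinator) {}

    static List<Member> parse(String contents) {
        List<Member> members = new ArrayList<>();
        for (String line : contents.split("\\R")) {
            String trimmed = line.trim();
            if (trimmed.isEmpty()) {
                continue; // ignore blank lines
            }
            String[] f = trimmed.split("\\s+");
            if (f.length < 4) {
                throw new IllegalArgumentException("Unexpected .list line: " + line);
            }
            // "T" marks the coordinator; anything else is treated as false.
            members.add(new Member(f[0], f[1], f[2], "T".equals(f[3])));
        }
        return members;
    }

    /** The first entry flagged as coordinator, if any. */
    static Optional<Member> findCoordinator(List<Member> members) {
        return members.stream().filter(Member::coordinator).findFirst();
    }

    public static void main(String[] args) {
        String sample = String.join("\n",
                "node-a 00000000-0000-0000-0000-000000000001 10.240.0.2:7800 T",
                "node-b 00000000-0000-0000-0000-000000000002 10.240.0.3:7800 F");
        List<Member> members = parse(sample);
        System.out.println(findCoordinator(members).map(Member::physicalAddress).orElse("none"));
        // prints 10.240.0.2:7800
    }
}
```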

Next, I start a receiver application, also using Vert.x, on a second Compute Engine instance:

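    $ java -Djava.net.preferIPv4Stack=true \
        -Djgroups.bind_addr=(receiver_internal_ip) \
        -jar my-receiver-fatjar-3.1.0-fat.jar -cluster -cluster-host (receiver_internal_ip)

Replace (receiver_internal_ip) with the internal IP address of the second instance; each node must bind to an address on its own network interface.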

This action adds an entry for the new member node to the .list file.

Once the node has been added to the .list file, it begins receiving "ping!" messages from the first member node.

The second node responds to each "ping!" message with a "pong!" message. When the first node receives a "pong!" message, it prints "Received reply pong!" to the sender application's standard output.
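The post doesn't show the Vert.x sender and receiver code, so the following plain-Java sketch only mimics the behavior you can observe in the demo: the sender sends "ping!", waits a bounded time for an answer, and prints either "Received reply pong!" or "No reply". The PingPongSketch class, the RECEIVER function, and the timeout values are hypothetical; in the real demo, the messages travel between separate instances in the cluster rather than through a CompletableFuture in one JVM.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.function.UnaryOperator;

/** Hypothetical single-JVM imitation of the demo's ping/pong exchange. */
class PingPongSketch {
    // Receiver behavior: answer every "ping!" with "pong!", ignore anything else.
    static final UnaryOperator<String> RECEIVER = msg -> "ping!".equals(msg) ? "pong!" : null;

    /**
     * Sends one "ping!" and returns the line the sender would print.
     * A null receiver models a cluster in which no receiver has joined yet.
     */
    static String pingOnce(UnaryOperator<String> receiver, long timeoutMs) {
        if (receiver == null) {
            return "No reply";
        }
        CompletableFuture<String> reply =
                CompletableFuture.supplyAsync(() -> receiver.apply("ping!"));
        try {
            String answer = reply.get(timeoutMs, TimeUnit.MILLISECONDS);
            return answer == null ? "No reply" : "Received reply " + answer;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return "No reply";
        } catch (ExecutionException | TimeoutException e) {
            return "No reply";
        }
    }

    public static void main(String[] args) {
        System.out.println(pingOnce(null, 1000));     // No reply
        System.out.println(pingOnce(RECEIVER, 1000)); // Received reply pong!
    }
}
```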

Get started

You can try GOOGLE_PING on Google Cloud Platform at no cost by signing up for a free trial.

- Posted by Grace Mollison, Solutions Architect