Posted by Vishwath Mohan, Security Engineer

To keep users safe, most apps and devices have an authentication mechanism, or a way to prove that you're you. These mechanisms fall into three categories: knowledge factors, possession factors, and biometric factors. Knowledge factors ask for something you know (like a PIN or a password), possession factors ask for something you have (like a token generator or security key), and biometric factors ask for something you are (like your fingerprint, iris, or face).

Biometric authentication mechanisms are becoming increasingly popular, and it's easy to see why. They're faster than typing a password, easier than carrying around a separate security key, and they prevent one of the most common pitfalls of knowledge-factor based authentication—the risk of shoulder surfing.

As more devices incorporate biometric authentication to safeguard people's private information, we're improving biometrics-based authentication in Android P by:

- Defining a better model to measure biometric security, and using that to functionally constrain weaker authentication methods.

- Providing a common platform-provided entry point for developers to integrate biometric authentication into their apps.

A better security model for biometrics

Currently, biometric unlocks quantify their performance today with two metrics borrowed from machine learning (ML): False Accept Rate (FAR), and False Reject Rate (FRR).

In the case of biometrics, FAR measures how often a biometric model accidentally classifies an incorrect input as belonging to the target user—that is, how often another user is falsely recognized as the legitimate device owner. Similarly, FRR measures how often a biometric model accidentally classifies the user's biometric as incorrect—that is, how often a legitimate device owner has to retry their authentication. The first is a security concern, while the second is problematic for usability.

Both metrics do a great job of measuring the accuracy and precision of a given ML (or biometric) model when applied to random input samples. However, because neither metric accounts for an active attacker as part of the threat model, they do not provide very useful information about its resilience against attacks.

In Android 8.1, we introduced two new metrics that more explicitly account for an attacker in the threat model: Spoof Accept Rate (SAR) and Imposter Accept Rate (IAR). As their names suggest, these metrics measure how easily an attacker can bypass a biometric authentication scheme. Spoofing refers to the use of a known-good recording (e.g. replaying a voice recording or using a face or fingerprint picture), while impostor acceptance means a successful mimicking of another user's biometric (e.g. trying to sound or look like a target user).

Strong vs. Weak Biometrics

We use the SAR/IAR metrics to categorize biometric authentication mechanisms as either strong or weak. Biometric authentication mechanisms with an SAR/IAR of 7% or lower are strong, and anything above 7% is weak. Why 7% specifically? Most fingerprint implementations have a SAR/IAR metric of about 7%, making this an appropriate standard to start with for other modalities as well. As biometric sensors and classification methods improve, this threshold can potentially be decreased in the future.

This binary classification is a slight oversimplification of the range of security that different implementations provide. However, it gives us a scalable mechanism (via the tiered authentication model) to appropriately scope the capabilities and the constraints of different biometric implementations across the ecosystem, based on the overall risk they pose.

While both strong and weak biometrics will be allowed to unlock a device, weak biometrics:

- require the user to re-enter their primary PIN, pattern, password or a strong biometric to unlock a device after a 4-hour window of inactivity, such as when left at a desk or charger. This is in addition to the 72-hour timeout that is enforced for both strong and weak biometrics.

- are not supported by the forthcoming BiometricPrompt API, a common API for app developers to securely authenticate users on a device in a modality-agnostic way.

- can't authenticate payments or participate in other transactions that involve a KeyStore auth-bound key.

- must show users a warning that articulates the risks of using the biometric before it can be enabled.

These measures are intended to allow weaker biometrics, while reducing the risk of unauthorized access.

BiometricPrompt API

Starting in Android P, developers can use the BiometricPrompt API to integrate biometric authentication into their apps in a device and biometric agnostic way. BiometricPrompt only exposes strong modalities, so developers can be assured of a consistent level of security across all devices their application runs on. A support library is also provided for devices running Android O and earlier, allowing applications to utilize the advantages of this API across more devices .

Here's a high-level architecture of BiometricPrompt.

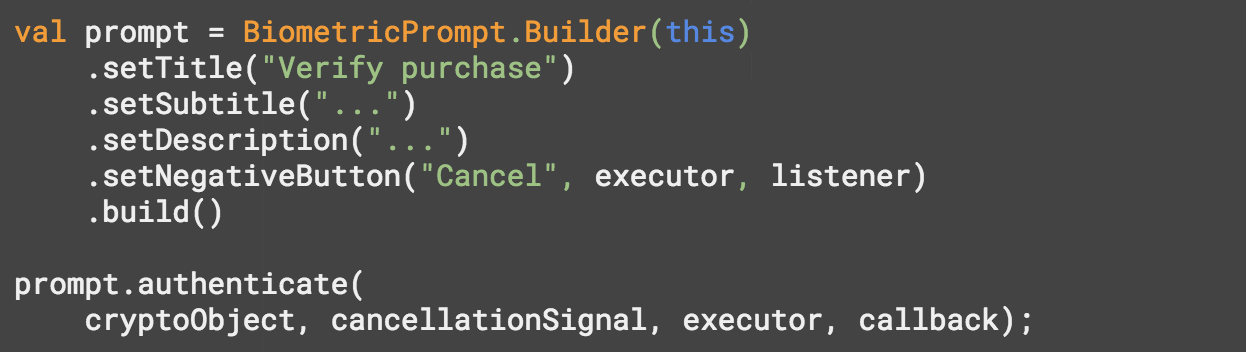

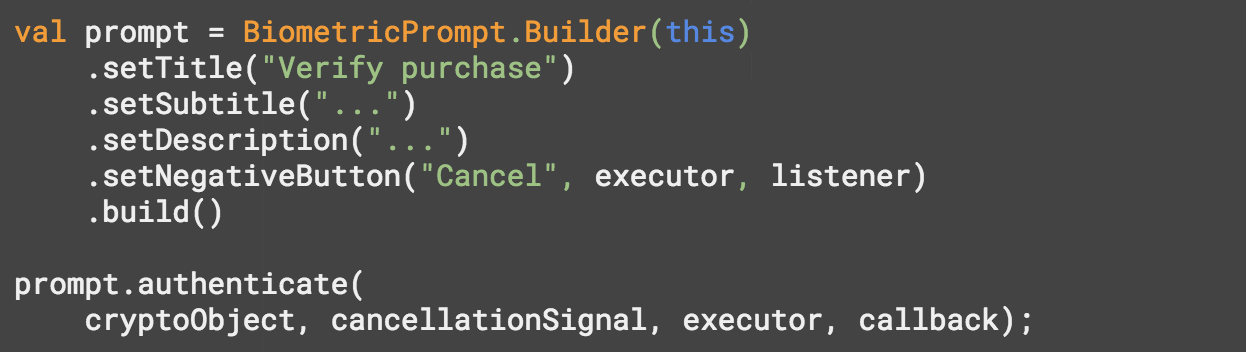

The API is intended to be easy to use, allowing the platform to select an appropriate biometric to authenticate with instead of forcing app developers to implement this logic themselves. Here's an example of how a developer might use it in their app:

Conclusion

Biometrics have the potential to both simplify and strengthen how we authenticate our digital identity, but only if they are designed securely, measured accurately, and implemented in a privacy-preserving manner.

We want Android to get it right across all three. So we're combining secure design principles, a more attacker-aware measurement methodology, and a common, easy to use biometrics API that allows developers to integrate authentication in a simple, consistent, and safe manner.

Acknowledgements: This post was developed in joint collaboration with Jim Miller

Posted by Nicholas Lativy, Software Engineer

Posted by Nicholas Lativy, Software Engineer

Posted by Ivan Lozano, Information Security Engineer

Posted by Ivan Lozano, Information Security Engineer

Posted by

Posted by

Posted by James Bender, Product Manager, Google Play

Posted by James Bender, Product Manager, Google Play