Google is proud to be a Platinum Sponsor of the International Conference on Computer Vision (ICCV 2023), a premier biennial conference, which is being held this week in Paris, France. As a leader in computer vision research, Google has a strong presence at this year’s conference with 60 accepted papers and active involvement in 27 workshops and tutorials. Google is also proud to be a Platinum Sponsor for the LatinX in CV workshop. We look forward to sharing some of our extensive computer vision research and expanding our partnership with the broader research community.

Attending ICCV 2023? We hope you’ll visit the Google booth to chat with researchers who are actively pursuing the latest innovations in computer vision, and check out some of the scheduled booth activities (e.g., demos and Q&A sessions listed below). Visit the @GoogleAI Twitter account to find out more about the Google booth activities at ICCV 2023.

Take a look below to learn more about the Google research being presented at ICCV 2023 (Google affiliations in bold).

Board and Organizing Committee

General Chair: Cordelia Schmid

Finance Chair: Ramin Zabih

Industrial Relations Chair: Rahul Sukthankar

Publicity and Social Media Co-Chair: Boqing Gong

Google Research booth activities

Title: ImagenThings: Instant Personalized Image-to-Image Generation

Presenters: Xuhui Jia, Suraj Kothawade

Wednesday, October 4th at 12:30 PM CEST

Title: Open Images V7 (paper, dataset, blog post)

Presenters: Rodrigo Benenson, Jasper Uijlings, Jordi Pont-Tuset

Wednesday, October 4th at 3:30 PM CEST

Title: AI4Design (paper)

Presenters: Andrew Marmon, Peggy Chi, C.K. Ng

Thursday, October 5th at 10:30 AM CEST

Title: Preface: A Data-driven Volumetric Prior for Few-shot Ultra High-resolution Face Synthesis

Presenters: Marcel Bühler, Kripasindhu Sarkar

Thursday, October 5th at 12:30 PM CEST

Title: WHOOPS! A Vision-and-Language Benchmark of Synthetic and Compositional Images

Presenter: Yonatan Bitton

Thursday, October 5th at 1:00 PM CEST

Title: Image Search in Fact Check Explorer (blog post)

Presenters: Yair Alon, Avneesh Sud

Thursday, October 5th at 3:30 PM CEST

Title: UnLoc: A Unified Framework for Video Localization Tasks (paper)

Presenters: Arsha Nagrani, Xuehan Xiong

Friday, October 6th at 10:30 AM CEST

Title: Prompt-Tuning Latent Diffusion Models for Inverse Problems

Presenter: Hyungjin Chung

Friday, October 6th at 12:30 PM CEST

Title: Neural Implicit Representations for Real World Applications

Presenters: Federico Tombari, Fabian Manhardt, Marie-Julie Rakotosaona

Friday, October 6th at 3:30 PM CEST

Accepted papers

Multi-Modal Neural Radiance Field for Monocular Dense SLAM with a Light-Weight ToF Sensor

Xinyang Liu, Yijin Li, Yanbin Teng, Hujun Bao, Guofeng Zhang, Yinda Zhang, Zhaopeng Cui

ITI-GEN: Inclusive Text-to-Image Generation

Cheng Zhang, Xuanbai Chen, Siqi Chai, Chen Henry Wu, Dmitry Lagun, Thabo Beeler, Fernando De la Torre

ASIC: Aligning Sparse in-the-wild Image Collections

Kamal Gupta, Varun Jampani, Carlos Esteves, Abhinav Shrivastava, Ameesh Makadia, Noah Snavely, Abhishek Kar

VQ3D: Learning a 3D-Aware Generative Model on ImageNet

Kyle Sargent, Jing Yu Koh, Han Zhang, Huiwen Chang, Charles Herrmann, Pratul Srinivasan, Jiajun Wu, Deqing Sun

Open-domain Visual Entity Recognition: Towards Recognizing Millions of Wikipedia Entities

Hexiang Hu, Yi Luan, Yang Chen*, Urvashi Khandelwal, Mandar Joshi, Kenton Lee, Kristina Toutanova, Ming-Wei Chang

Sigmoid Loss for Language Image Pre-training

Xiaohua Zhai, Basil Mustafa, Alexander Kolesnikov, Lucas Beyer

Tracking Everything Everywhere All at Once

Qianqian Wang, Yen-Yu Chang, Ruojin Cai, Zhengqi Li, Bharath Hariharan, Aleksander Holynski, Noah Snavely

Zip-NeRF: Anti-Aliased Grid-Based Neural Radiance Fields

Jonathan T. Barron, Ben Mildenhall, Dor Verbin, Pratul P. Srinivasan, Peter Hedman

Delta Denoising Score

Amir Hertz*, Kfir Aberman, Daniel Cohen-Or*

DreamBooth3D: Subject-Driven Text-to-3D Generation

Amit Raj, Srinivas Kaza, Ben Poole, Michael Niemeyer, Nataniel Ruiz, Ben Mildenhall, Shiran Zada, Kfir Aberman, Michael Rubinstein, Jonathan Barron, Yuanzhen Li, Varun Jampani

Encyclopedic VQA: Visual Questions about Detailed Properties of Fine-grained Categories

Thomas Mensink, Jasper Uijlings, Lluis Castrejon, Arushi Goel*, Felipe Cadar*, Howard Zhou, Fei Sha, André Araujo, Vittorio Ferrari

GECCO: Geometrically-Conditioned Point Diffusion Models

Michał J. Tyszkiewicz, Pascal Fua, Eduard Trulls

Learning from Semantic Alignment between Unpaired Multiviews for Egocentric Video Recognition

Qitong Wang, Long Zhao, Liangzhe Yuan, Ting Liu, Xi Peng

Neural Microfacet Fields for Inverse Rendering

Alexander Mai, Dor Verbin, Falko Kuester, Sara Fridovich-Keil

Rosetta Neurons: Mining the Common Units in a Model Zoo

Amil Dravid, Yossi Gandelsman, Alexei A. Efros, Assaf Shocher

Teaching CLIP to Count to Ten

Roni Paiss*, Ariel Ephrat, Omer Tov, Shiran Zada, Inbar Mosseri, Michal Irani, Tali Dekel

Vox-E: Text-guided Voxel Editing of 3D Objects

Etai Sella, Gal Fiebelman, Peter Hedman, Hadar Averbuch-Elor

CC3D: Layout-Conditioned Generation of Compositional 3D Scenes

Sherwin Bahmani, Jeong Joon Park, Despoina Paschalidou, Xingguang Yan, Gordon Wetzstein, Leonidas Guibas, Andrea Tagliasacchi

Delving into Motion-Aware Matching for Monocular 3D Object Tracking

Kuan-Chih Huang, Ming-Hsuan Yang, Yi-Hsuan Tsai

Generative Multiplane Neural Radiance for 3D-Aware Image Generation

Amandeep Kumar, Ankan Kumar Bhunia, Sanath Narayan, Hisham Cholakkal, Rao Muhammad Anwer, Salman Khan, Ming-Hsuan Yang, Fahad Shahbaz Khan

M2T: Masking Transformers Twice for Faster Decoding

Fabian Mentzer, Eirikur Agustsson, Michael Tschannen

MULLER: Multilayer Laplacian Resizer for Vision

Zhengzhong Tu, Peyman Milanfar, Hossein Talebi

SVDiff: Compact Parameter Space for Diffusion Fine-Tuning

Ligong Han*, Yinxiao Li, Han Zhang, Peyman Milanfar, Dimitris Metaxas, Feng Yang

Towards Authentic Face Restoration with Iterative Diffusion Models and Beyond

Yang Zhao, Tingbo Hou, Yu-Chuan Su, Xuhui Jia, Yandong Li, Matthias Grundmann

Unified Visual Relationship Detection with Vision and Language Models

Long Zhao, Liangzhe Yuan, Boqing Gong, Yin Cui, Florian Schroff, Ming-Hsuan Yang, Hartwig Adam, Ting Liu

3D Motion Magnification: Visualizing Subtle Motions from Time-Varying Radiance Fields

Brandon Y. Feng, Hadi Alzayer, Michael Rubinstein, William T. Freeman, Jia-Bin Huang

Global Features are All You Need for Image Retrieval and Reranking

Shihao Shao, Kaifeng Chen, Arjun Karpur, Qinghua Cui, André Araujo, Bingyi Cao

Introducing Language Guidance in Prompt-Based Continual Learning

Muhammad Gul Zain Ali Khan, Muhammad Ferjad Naeem, Luc Van Gool, Didier Stricker, Federico Tombari, Muhammad Zeshan Afzal

Multiscale Structure Guided Diffusion for Image Deblurring

Mengwei Ren*, Mauricio Delbracio, Hossein Talebi, Guido Gerig, Peyman Milanfar

Robust Monocular Depth Estimation under Challenging Conditions

Stefano Gasperini, Nils Morbitzer, HyunJun Jung, Nassir Navab, Federico Tombari

Score-Based Diffusion Models as Principled Priors for Inverse Imaging

Berthy T. Feng*, Jamie Smith, Michael Rubinstein, Huiwen Chang, Katherine L. Bouman, William T. Freeman

Towards Universal Image Embeddings: A Large-Scale Dataset and Challenge for Generic Image Representations

Nikolaos-Antonios Ypsilantis, Kaifeng Chen, Bingyi Cao, Mario Lipovsky, Pelin Dogan-Schonberger, Grzegorz Makosa, Boris Bluntschli, Mojtaba Seyedhosseini, Ondrej Chum, André Araujo

U-RED: Unsupervised 3D Shape Retrieval and Deformation for Partial Point Clouds

Yan Di, Chenyangguang Zhang, Ruida Zhang, Fabian Manhardt, Yongzhi Su, Jason Rambach, Didier Stricker, Xiangyang Ji, Federico Tombari

AvatarCraft: Transforming Text into Neural Human Avatars with Parameterized Shape and Pose Control

Ruixiang Jiang, Can Wang, Jingbo Zhang, Menglei Chai, Mingming He, Dongdong Chen, Jing Liao

Learning Versatile 3D Shape Generation with Improved AR Models

Simian Luo, Xuelin Qian, Yanwei Fu, Yinda Zhang, Ying Tai, Zhenyu Zhang, Chengjie Wang, Xiangyang Xue

Novel-view Synthesis and Pose Estimation for Hand-Object Interaction from Sparse Views

Wentian Qu, Zhaopeng Cui, Yinda Zhang, Chenyu Meng, Cuixia Ma, Xiaoming Deng, Hongan Wang

PreSTU: Pre-Training for Scene-Text Understanding

Jihyung Kil*, Soravit Changpinyo, Xi Chen, Hexiang Hu, Sebastian Goodman, Wei-Lun Chao, Radu Soricut

Self-supervised Learning of Implicit Shape Representation with Dense Correspondence for Deformable Objects

Baowen Zhang, Jiahe Li, Xiaoming Deng, Yinda Zhang, Cuixia Ma, Hongan Wang

Self-regulating Prompts: Foundational Model Adaptation without Forgetting

Muhammad Uzair Khattak, Syed Talal Wasim, Muzammal Naseer, Salman Khan, Ming-Hsuan Yang, Fahad Shahbaz Khan

Spectral Graphormer: Spectral Graph-Based Transformer for Egocentric Two-Hand Reconstruction using Multi-View Color Images

Tze Ho Elden Tse*, Franziska Mueller, Zhengyang Shen, Danhang Tang, Thabo Beeler, Mingsong Dou, Yinda Zhang, Sasa Petrovic, Hyung Jin Chang, Jonathan Taylor, Bardia Doosti

Synthesizing Diverse Human Motions in 3D Indoor Scenes

Kaifeng Zhao, Yan Zhang, Shaofei Wang, Thabo Beeler, Siyu Tang

Tracking by 3D Model Estimation of Unknown Objects in Videos

Denys Rozumnyi, Jiri Matas, Marc Pollefeys, Vittorio Ferrari, Martin R. Oswald

UnLoc: A Unified Framework for Video Localization Tasks

Shen Yan, Xuehan Xiong, Arsha Nagrani, Anurag Arnab, Zhonghao Wang*, Weina Ge, David Ross, Cordelia Schmid

Verbs in Action: Improving Verb Understanding in Video-language Models

Liliane Momeni, Mathilde Caron, Arsha Nagrani, Andrew Zisserman, Cordelia Schmid

VLSlice: Interactive Vision-and-Language Slice Discovery

Eric Slyman, Minsuk Kahng, Stefan Lee

Yes, we CANN: Constrained Approximate Nearest Neighbors for Local Feature-Based Visual Localization

Dror Aiger, André Araujo, Simon Lynen

Audiovisual Masked Autoencoders

Mariana-Iuliana Georgescu*, Eduardo Fonseca, Radu Tudor Ionescu, Mario Lucic, Cordelia Schmid, Anurag Arnab

CLR: Channel-wise Lightweight Reprogramming for Continual Learning

Yunhao Ge, Yuecheng Li, Shuo Ni, Jiaping Zhao, Ming-Hsuan Yang, Laurent Itti

LU-NeRF: Scene and Pose Estimation by Synchronizing Local Unposed NeRFs

Zezhou Cheng*, Carlos Esteves, Varun Jampani, Abhishek Kar, Subhransu Maji, Ameesh Makadia

Multiscale Representation for Real-Time Anti-Aliasing Neural Rendering

Dongting Hu, Zhenkai Zhang, Tingbo Hou, Tongliang Liu, Huan Fu, Mingming Gong

Nerfbusters: Removing Ghostly Artifacts from Casually Captured NeRFs

Frederik Warburg, Ethan Weber, Matthew Tancik, Aleksander Holynski, Angjoo Kanazawa

Segmenting Known Objects and Unseen Unknowns without Prior Knowledge

Stefano Gasperini, Alvaro Marcos-Ramiro, Michael Schmidt, Nassir Navab, Benjamin Busam, Federico Tombari

SparseFusion: Fusing Multi-Modal Sparse Representations for Multi-Sensor 3D Object Detection

Yichen Xie, Chenfeng Xu, Marie-Julie Rakotosaona, Patrick Rim, Federico Tombari, Kurt Keutzer, Masayoshi Tomizuka, Wei Zhan

SwiftFormer: Efficient Additive Attention for Transformer-Based Real-time Mobile Vision Applications

Abdelrahman Shaker, Muhammad Maaz, Hanoona Rasheed, Salman Khan, Ming-Hsuan Yang, Fahad Shahbaz Khan

Agile Modeling: From Concept to Classifier in Minutes

Otilia Stretcu, Edward Vendrow, Kenji Hata, Krishnamurthy Viswanathan, Vittorio Ferrari, Sasan Tavakkol, Wenlei Zhou, Aditya Avinash, Enming Luo, Neil Gordon Alldrin, MohammadHossein Bateni, Gabriel Berger, Andrew Bunner, Chun-Ta Lu, Javier A Rey, Giulia DeSalvo, Ranjay Krishna, Ariel Fuxman

CAD-Estate: Large-Scale CAD Model Annotation in RGB Videos

Kevis-Kokitsi Maninis, Stefan Popov, Matthias Niessner, Vittorio Ferrari

Counting Crowds in Bad Weather

Zhi-Kai Huang, Wei-Ting Chen, Yuan-Chun Chiang, Sy-Yen Kuo, Ming-Hsuan Yang

DreamPose: Fashion Video Synthesis with Stable Diffusion

Johanna Karras, Aleksander Holynski, Ting-Chun Wang, Ira Kemelmacher-Shlizerman

InfiniCity: Infinite-Scale City Synthesis

Chieh Hubert Lin, Hsin-Ying Lee, Willi Menapace, Menglei Chai, Aliaksandr Siarohin, Ming-Hsuan Yang, Sergey Tulyakov

SAMPLING: Scene-Adaptive Hierarchical Multiplane Images Representation for Novel View Synthesis from a Single Image

Xiaoyu Zhou, Zhiwei Lin, Xiaojun Shan, Yongtao Wang, Deqing Sun, Ming-Hsuan Yang

Tutorial

Learning with Noisy and Unlabeled Data for Large Models beyond Categorization

Sifei Liu, Hongxu Yin, Shalini De Mello, Pavlo Molchanov, Jose M. Alvarez, Jan Kautz, Xiaolong Wang, Anima Anandkumar, Ming-Hsuan Yang, Trevor Darrell

Speaker: Varun Jampani

Workshops

LatinX in AI

Platinum Sponsor

Panelists: Daniel Castro Chin, André Araujo

Invited Speaker: Irfan Essa

Volunteers: Ming-Hsuan Yang, Liangzhe Yuan, Pedro Velez, Vincent Etter

Scene Graphs and Graph Representation Learning

Organizer: Federico Tombari

International Workshop on Analysis and Modeling of Faces and Gestures

Speaker: Todd Zickler

3D Vision and Modeling Challenges in eCommerce

Speaker: Leonidas Guibas

BigMAC: Big Model Adaptation for Computer Vision

Organizer: Mathilde Caron

Adversarial Robustness In the Real World (AROW)

Organizer: Yutong Bai

GeoNet: 1st Workshop on Robust Computer Vision across Geographies

Speaker: Sara Beery

Organizer: Tarun Kalluri

Quo Vadis, Computer Vision?

Speaker: Bill Freeman

To NeRF or not to NeRF: A View Synthesis Challenge for Human Heads

Speaker: Thabo Beeler

Organizer: Stefanos Zafeiriou

New Ideas in Vision Transformers

Speaker: Cordelia Schmid

Organizer: Ming-Hsuan Yang

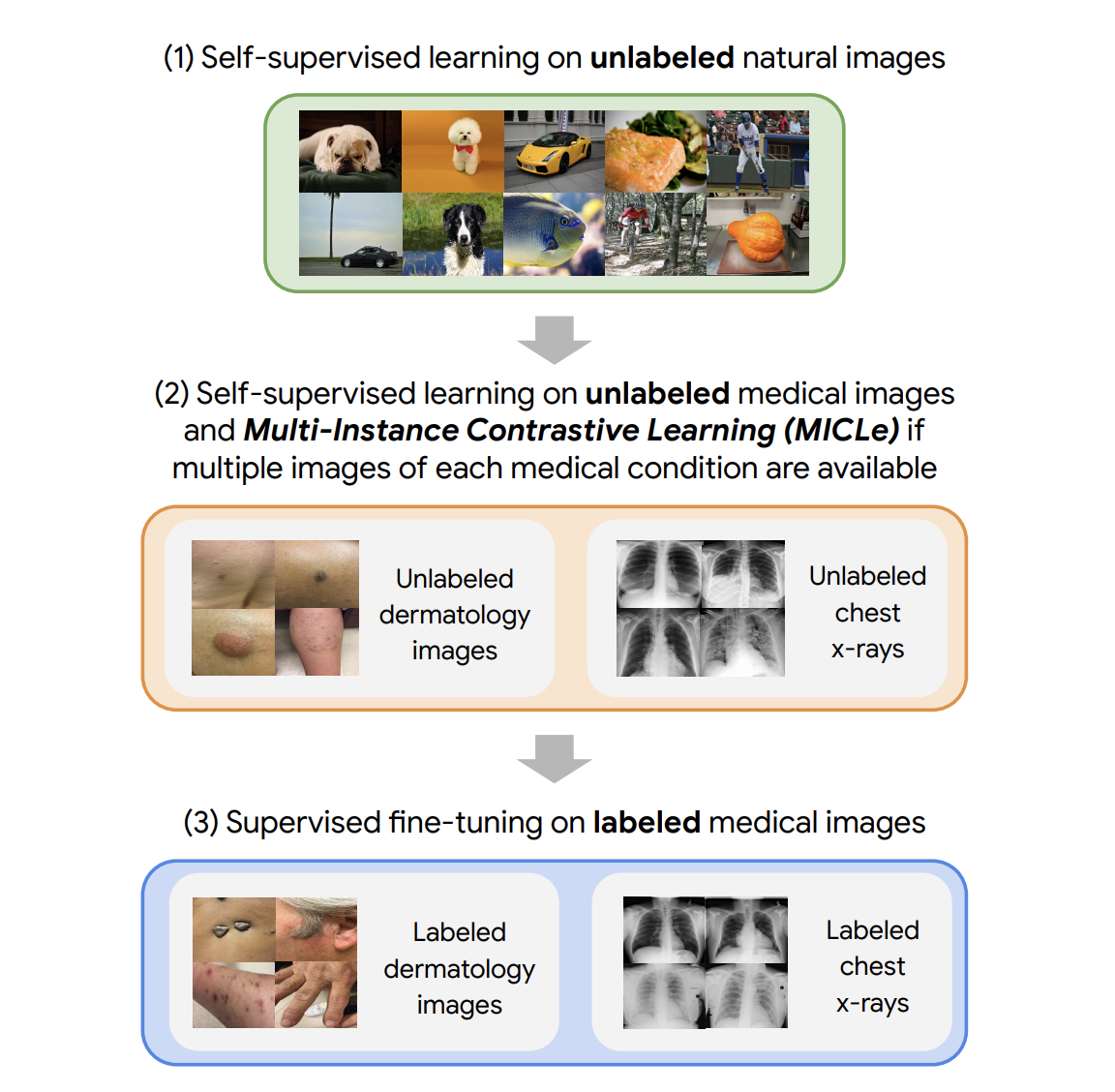

Representation Learning with Very Limited Images: The Potential of Self, Synthetic and Formula Supervision

Speaker: Manel Baradad Jurjo

Resource Efficient Deep Learning for Computer Vision

Speaker: Prateek Jain

Organizers: Jiahui Yu, Rishabh Tiwari, Jai Gupta

Computer Vision Aided Architectural Design

Speaker: Noah Snavely

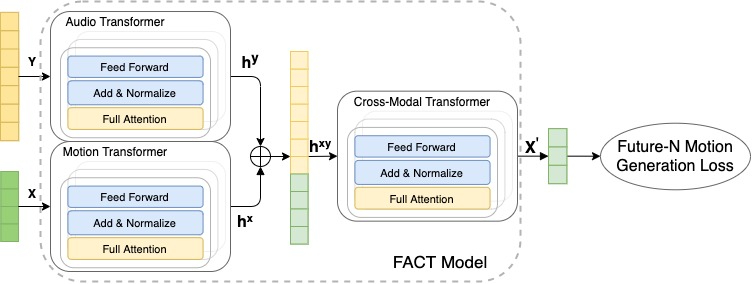

AV4D: Visual Learning of Sounds in Spaces

Organizer: David Harwath

Vision-and-Language Algorithmic Reasoning

Speaker: François Chollet

Neural Fields for Autonomous Driving and Robotics

Speaker: Jon Barron

International Challenge on Compositional and Multimodal Perception

Organizer: Ranjay Krishna

Open-Vocabulary 3D Scene Understanding (OpenSUN3D)

Speaker: Thomas Funkhouser

Organizers: Francis Engelmann, Johanna Wald, Federico Tombari, Leonidas Guibas

Frontiers of Monocular 3D Perception: Geometric Foundation Models

Speaker: Leonidas Guibas

PerDream: PERception, Decision Making and REAsoning Through Multimodal Foundational Modeling

Organizer: Daniel McDuff

Recovering 6D Object Pose

Speakers: Fabian Manhardt, Martin Sundermeyer

Organizer: Martin Sundermeyer

Women in Computer Vision (WiCV)

Panelist: Arsha Nagrani

Language for 3D Scenes

Organizer: Leonidas Guibas

AI for 3D Content Creation

Speaker: Kai-Hung Chang

Organizer: Leonidas Guibas

Computer Vision for Metaverse

Speakers: Jon Barron, Thomas Funkhouser

Towards the Next Generation of Computer Vision Datasets

Speaker: Tom Duerig

* Work done while at Google