This is the final blog post for #11WeeksOfAndroid. Thank you for joining us over the past 11 weeks as we dove into key areas of Android development. In case you missed it, here’s a recap of everything we talked about during each week:

Week 1 - People and identity

Discover how to implement the conversation shortcut and bubbles with ‘conversation notifications’. Also, learn more about conversation additions and other System UI news, and discover the people and conversations developer documentation here. Finally, you can also listen to the Android Backstage podcast where the System UI team is interviewed on people and bubbles.

To tackle user and developer complexity that makes identity a challenge for developers, we've been working on One Tap and Block Store, part of our new Google Identity Services Library.

If you’re interested in learning more about Identity, we published the video “in Identity on Android: what’s new in sign-in,” where Vishal explains the new libraries in the Google Identity System.

Two teams that worked very early with us are the Facebook Messenger team and the direct messaging team from Twitter. Read the story from Twitter here and find out how we worked with Facebook on the implementation here.

Find out more with the People and Identity learning path, playlist, and the week’s wrap-up blog post.

Week 2 - Machine learning

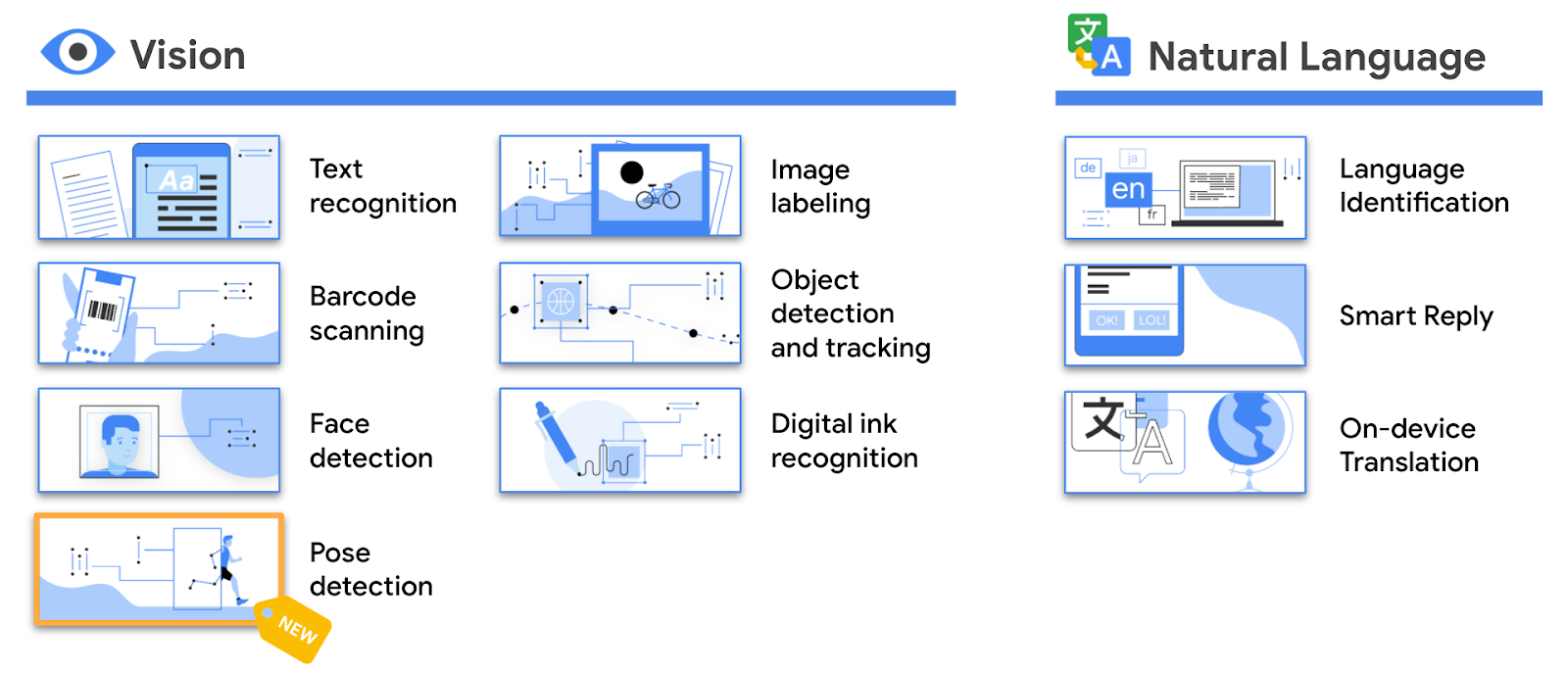

We kicked off the week by announcing the winners of the #AndroidDevChallenge! Check out all the winning apps and see how they used ML Kit and TensorFlow Lite, all focused on demonstrating how machine learning can come to life in a powerful way to help users get things done, like an app to help visually impaired navigate crowded spaces or another to help students learn sign language.

We recently made ML Kit a standalone SDK and it no longer requires a Firebase account. Just one line in your build.gradle file and you can start bringing ML functionality into your app.

Another much anticipated addition is the support for swapping Google models with your own for both Image Labeling as well as Object Detection and Tracking.

Find out about the importance of finding the unique intersection of user problems and ML strengths and how the People + AI Guidebook can help you make ML product decisions. Check out the interview with the Read Along team for more inspiration.

This week we also highlighted how adding a custom model to your Android app has never been easier.

Finally, try out our codelabs:

Find out more with the Machine Learning pathway, playlist, and the week’s wrap-up blog post.

Week 3 - Privacy and security

As shared in the “Privacy and Security” blog post, we’re giving users even more control and transparency over user data access.

In Android 11, we introduced various privacy improvements such as one time permissions that let users give an app access to the device microphone, camera, or location, just that one time. Learn more about building privacy-friendly apps with these new changes. You can also learn about various Android security updates in this video.

Other notable updates include:

- Permissions auto-reset: If users haven’t used an app that targets Android 11 for an extended period of time, the system will “auto-reset” all of the granted runtime permissions associated with the app and notify the user.

- Data access auditing APIs: In Android 11, developers will have access to new APIs that will give them more transparency into their app’s usage of private and protected data. Learn more about new tools in Android 11 to make your apps more private and stable.

- Scoped Storage: In Android 11, scoped storage will be mandatory for all apps that target API level 30. Learn more and check out the storage FAQ.

- Google Play system updates: Google Play system updates were introduced with Android 10 as part of Project Mainline, making it easier to bring core OS component updates to users.

- Jetpack Biometric library:The library has been updated to include new BiometricPrompt features in Android 11 in order to allow for backward compatibility.

Find out more with the ‘privacy, trust and security’ learning pathway, playlist, and documentation on privacy and security best practices.

Week 4 - Android 11 compatibility

We shipped the second Beta of Android 11 and added a new release milestone called Platform Stability to clearly signal to developers that all APIs and system behaviors are complete. Find out more about Beta 2 and platform stability, including what this milestone means for developers, and the Android 11 timeline. Note: since week #4, we shipped the third and final beta and are getting close to releasing Android 11 to AOSP and the ecosystem. Be sure to check that your apps are working!

To get your apps ready for Android 11, check out some of these helpful resources:

In our “Accelerating Android updates” blog post, we looked at how we’re continuing to get the latest OS to reach critical mass by expanding Android’s updatability architecture.

We also highlighted Excelliance Tech, who recently moved their LeBian SDK away from non-SDK interfaces, toward stable, official APIs so they can stay more compatible with the Android OS over time. Check out the Excelliance Tech story.

Find out more with the Android 11 Compatibility learning pathway, playlist, and the week’s wrap-up blog post.

Week 5 - Languages

With the Android 11 beta, we further improved the developer experience for Kotlin on Android by officially recommending coroutines for asynchronous work. If you’re new to coroutines, check out:

Also, check out our new Kotlin case studies page for the latest case studies and data, including the new Google Home case study, and our state of Kotlin on Android video. For beginners, we announced the launch of our new Android basics in Kotlin course.

If you’re a Java language developer, watch support for newer Java APIs on how we’ve made newer OpenJDK libraries available across versions of Android. With Android 11, we also updated the Android runtime to make app startup even faster with I/O prefetching.

Android 11 included updates across the native toolchain, including better tools for profile-guided optimization (PGO) and improvements to native dependency management in Android Studio 4.0.

Finally, we continue to focus on improvements to the D8 and R8 compilers in Android Studio with better support for Kotlin in the R8 shrinker. Learn more.

Find out more with the languages learning pathway, playlist, and the week’s wrap-up blog post.

Week 6 - Android Jetpack

Interested in what’s new in Jetpack? Check out the #Android11 Beta launch with a quick fly-by introducing many of the updates to our libraries, with tips on how to get started.

- Dive deeper into major releases like Hilt, with cheat sheets to help you get started, and learn how we migrated our own samples to use Hilt for dependency injection. Less boilerplate = more fun.

- Discover more about Paging 3.0, a complete rewrite of the library using Kotlin coroutines and adding features like improved error handling, better transformations, and much more.

- Get to know CameraX Beta, and learn how it helps developers manage edge cases across different devices and OS versions, so that you don’t have to.

This year, we've made several major improvements with the release of Navigation 2.3, which allows you to navigate between different screens of your app with ease while also allowing you to follow Android UI principles.

In Android 11, we continued our work to give users even more control over sensitive permissions. Now there are type-safe contracts for common intents and more via new ActivityResult APIs. These changes simplify how you request permissions, and we’ll continue to work on making permissions easier in the future.

Also learn about our recent releases of the AppStartup library as well as what’s new in WorkManager.

Find out more with the Jetpack learning pathway, playlist, and the week’s wrap-up blog post.

Week 7 - Android developer tools

We have brought together an overview of what is new in Android Developer tools.

Check out the latest updates in design tools, and go even deeper:

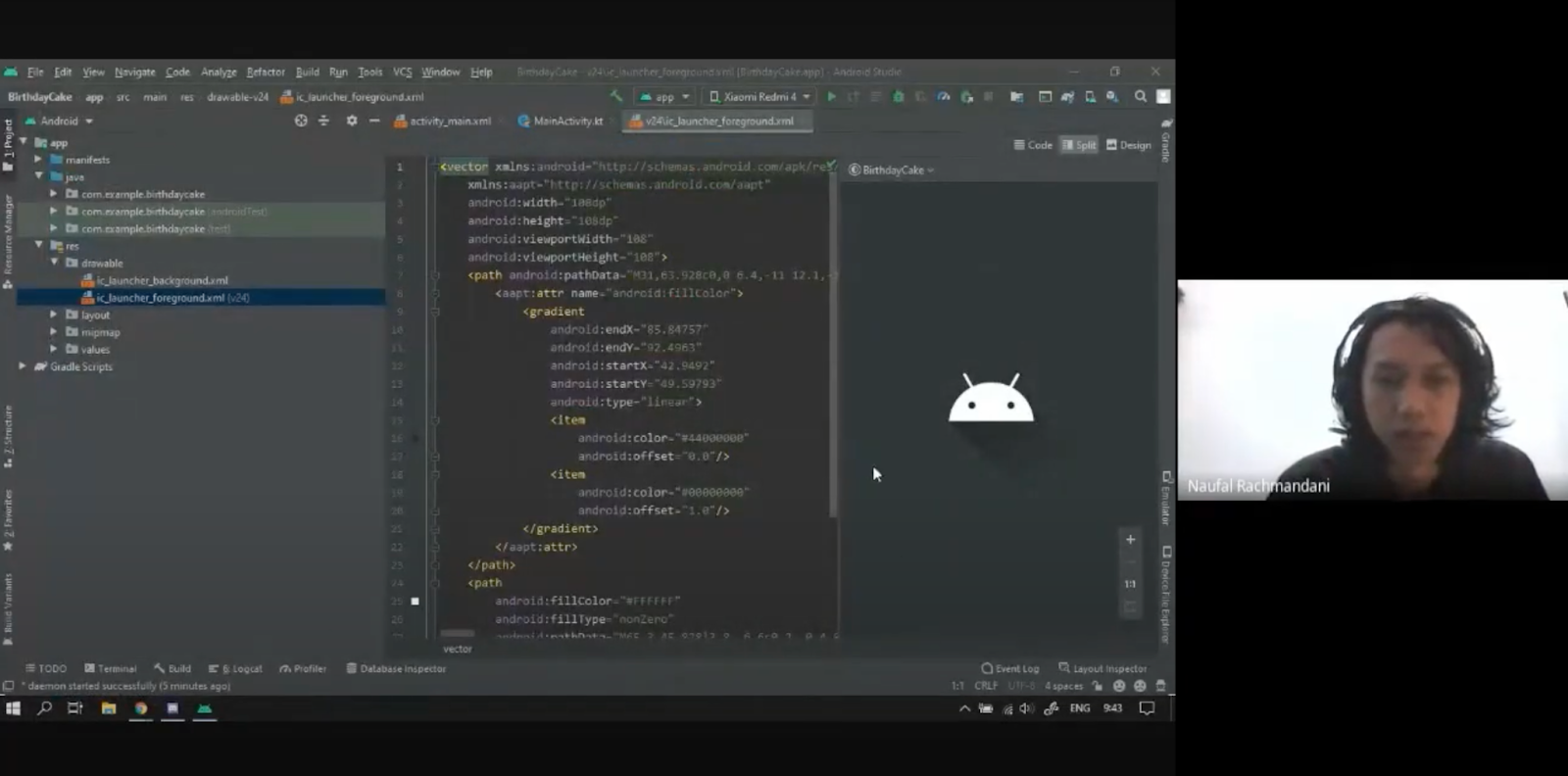

Also, find out about debugging your layouts, with updates to the layout inspector. Discover the latest developments for Jetpack Compose Design tools, and also how to use the new database inspector in Android Studio.

Discover the latest development tools we have in place for Jetpack Hilt in Android Studio.

Learn about the build system in Android developer tools:

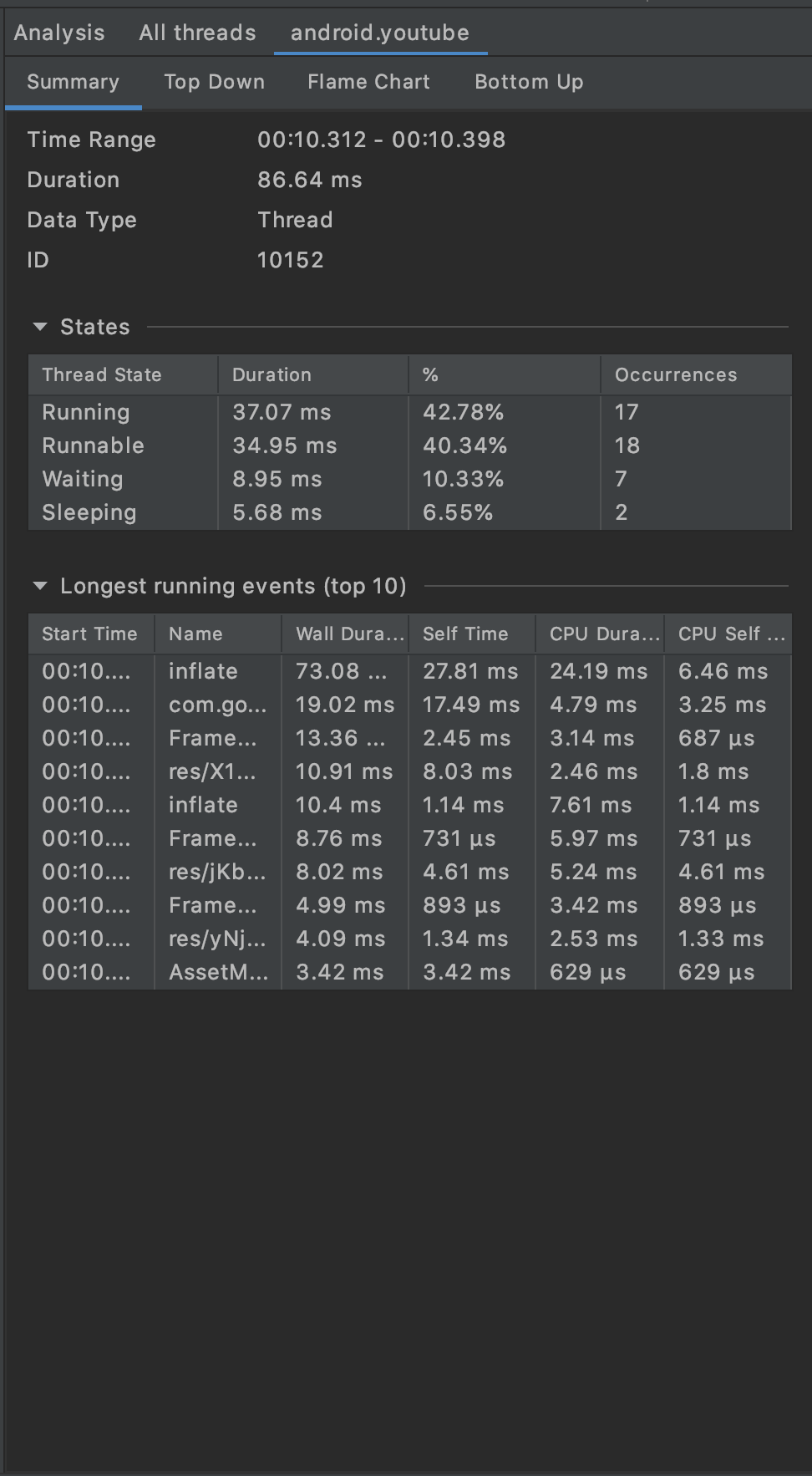

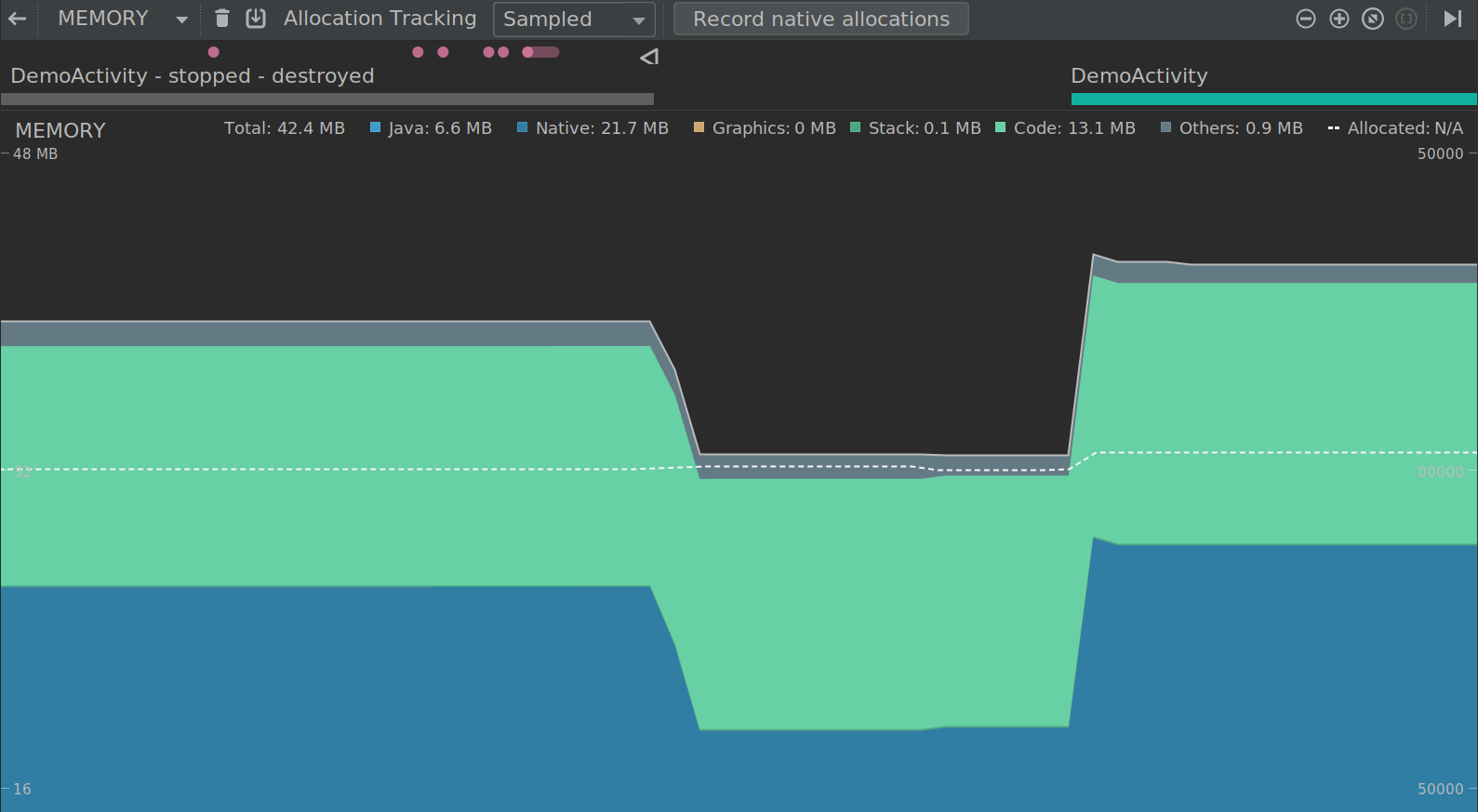

To learn about the latest updates on virtual testing, read this blog on the Android Emulator. Lastly, to see the latest changes for performance tools, watch performance profilers content about System Trace. Additionally, check out more about C++ memory profiling with Android Studio 4.1.

Find out more with the Android developer tools learning pathway, playlist, and the week’s wrap-up blog post.

Week 8 - App distribution and monetization

Check out our webinars about the new Google Play Console beta if you weren’t able to tune in live.

We shared recent improvements we’ve made to app bundles, as well as our intention to require new apps and games to publish with this format in the second half of 2021. The new in-app review API means developers can now ask for ratings and reviews from within your app!

Don’t forget about our policy around more transparent subscriptions to help increase user trust in Google Play Billing. We also expanded our feature set to help you better reach and retain buyers, and launched Play Billing Library 3, which will be required by mid-2021.

Google Play Pass launched in nine new markets last month. Developers using both Google Play Pass and direct billing on Google Play have earned an average of 2.5 times US revenue with Google Play Pass, without diminishing Google Play store earnings. Learn more and express interest in joining.

Find out more with the app distribution and monetization learning pathway, playlist, and the week’s wrap-up blog post.

Week 9 - Android beyond phones

Check out some of the highlights from this week, including;

Find out more with the learning pathways for Android TV and Large Screens, Beyond phones playlist, and the week’s wrap-up blog post.

Week 10 - Games and media

We shared several games updates and presented a special "11 Weeks" episode of The Android Game Developer Show.

You can also take advantage of Android 11's new media controls by making sure your app is using MediaStyle with a valid MediaSession token. Learn how to support media resumption by making your app discoverable with a MediaBrowserServiceCompat, using the EXTRA_RECENT hint to help with resuming content, and handling the onPlay and onGetRoot callbacks. Then check out how to leverage the MediaRouter jetpack library and check out the updated version of the UAMP sample.

Finally, we covered some of the primary ways apps can benefit from 5G. Android 11 adds new APIs and updates existing APIs to help ensure you have all the tools you need to leverage the capabilities of 5G, such as an enhanced bandwidth estimation API, 5G detection capabilities, and a new meteredness flag from cellular carriers. The Android emulator now enables you to develop and test these APIs without needing a 5G device or network connection. All of this and more is available from our dedicated 5G page.

Find out more with the ‘games and media’ learning pathway, playlist, and the wrap-up blog post, and visit d.android.com/games to stay up to date on all of our tools and resources for game developers.

Week 11 - UI

In our final week, we released 4 new codelabs, 9 new samples, new documentation and a podcast from the Compose team. If you prefer videos; we’ve got you covered:

New in Android 11 is the ability for apps to create seamless transitions between the on screen keyboard being opened and closed. To find out how to add this to your app, slide on over to the video, blog posts and sample app

We recommend following the Material Design guidelines to ensure that apps operate consistently, enabling patterns learned in one app to be used in another. Find out more about Material Theming (color, type and shape), dark theme and Material’s motion system using the Material Design Components (MDC) library. If you haven’t already migrated to MDC, then check out our migration guide.

It even becomes possible to ease your migration with libraries like the new MDC-Android Compose Theme Adapter which converts an MDC XML theme into a Compose `MaterialTheme`.

Find out more with the Compose learning pathway, the Modern UI learning pathway, playlist, and the week’s wrap-up blog post.

Resources

You can find the entire playlist of #11WeeksOfAndroid video content here. Follow us on Twitter and YouTube, and subscribe to our email list to receive all the latest news and resources. Thanks so much for letting us be a part of this experience with you!