By Brian Stevens, Vice President, Cloud Platforms

San Francisco: Today at Google Cloud Next ’17, we’re thrilled to announce new Google Cloud Platform (GCP) products, technologies and services that will help you imagine, build and run the next generation of cloud applications on our platform.

Bring your code to App Engine, we’ll handle the rest

In 2008, we launched Google App Engine, a pioneering serverless runtime environment that lets developers build web apps, APIs and mobile backends at Google-scale and speed. For nearly 10 years, some of the most innovative companies have built applications on top of App Engine that serve their users all over the world. Today, we’re excited to announce the general availability of a major expansion of App Engine centered around openness and developer choice that keeps App Engine’s original promise to developers: bring your code, we’ll handle the rest.

App Engine now supports Node.js, Ruby, Java 8, Python 2.7 or 3.5, Go 1.8, plus PHP 7.1 and .NET Core, both in beta, all backed by App Engine’s 99.95% SLA. Our managed runtimes make it easy to start with your favorite languages and use the open source libraries and packages of your choice. Need something different from what’s out of the box? Break the glass and go beyond our managed runtimes by supplying your own Docker container, which makes it simple to run any language, library or framework on App Engine.

The future of cloud is open: take your app to-go by having App Engine generate a Docker container containing your app and deploy it to any container-based environment, on or off GCP. App Engine gives developers an open platform while still providing a fully managed environment where developers focus only on code and on their users.

Cloud Functions public beta at your service

Up one level from fully managed applications, we’re launching Google Cloud Functions into public beta. Cloud Functions is a completely serverless environment to build and connect cloud services without having to manage infrastructure. It’s the smallest unit of compute offered by GCP and can spin up a single function and spin it back down instantly. Because of this, billing occurs only while the function is executing, metered to the nearest 100 milliseconds.
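To make the metering granularity concrete, here is a minimal Python sketch of how an execution time might map to billed time. The 100-millisecond increment comes from the announcement; rounding up to the next increment is an assumption, and the function name `billed_milliseconds` is hypothetical, not part of any GCP API.

```python
BILLING_INCREMENT_MS = 100  # metering granularity stated in the announcement


def billed_milliseconds(execution_ms: int) -> int:
    """Return the billed duration for one invocation.

    Assumption: time is rounded UP to the next 100 ms increment.
    The announcement only says "nearest"; check the pricing page
    for the authoritative rule.
    """
    if execution_ms < 0:
        raise ValueError("execution time cannot be negative")
    # Integer ceiling division avoids floating-point rounding surprises.
    increments = (execution_ms + BILLING_INCREMENT_MS - 1) // BILLING_INCREMENT_MS
    return increments * BILLING_INCREMENT_MS


if __name__ == "__main__":
    for ms in (42, 100, 101, 250):
        print(f"{ms} ms executed -> {billed_milliseconds(ms)} ms billed")
```

Under that assumption, a function that never runs accrues no billed time, which is the point of the "billing occurs only while the function is executing" claim.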

Cloud Functions is a great way to build lightweight backends, and to extend the functionality of existing services. For example, Cloud Functions can respond to file changes in Google Cloud Storage or incoming Google Cloud Pub/Sub messages, perform lightweight data processing/ETL jobs or provide a layer of logic to respond to webhooks emitted by any event on the internet. Developers can securely invoke Cloud Functions directly over HTTP right out of the box without the need for any add-on services.

Cloud Functions is also a great option for mobile developers using Firebase, allowing them to build backends integrated with the Firebase platform. Cloud Functions for Firebase handles events emitted from the Firebase Realtime Database, Firebase Authentication and Firebase Analytics.

Growing the Google BigQuery universe: introducing BigQuery Data Transfer Service

Since our earliest days, our customers have turned to Google to promote their advertising messages around the world, at a scale that was previously unimaginable. Today, those same customers want to use BigQuery, our powerful data analytics service, to better understand how users interact with those campaigns. To that end, we’ve developed deeper integration between the broader Google product family and GCP with the public beta of the BigQuery Data Transfer Service, which automates data movement from select Google applications directly into BigQuery. With BigQuery Data Transfer Service, marketing and business analysts can easily export data from AdWords, DoubleClick and YouTube directly into BigQuery, making it available for immediate analysis and visualization using the extensive set of tools in the BigQuery ecosystem.

Slashing data preparation time with Google Cloud Dataprep

In fact, our goal is to make it easy to import data into BigQuery, while keeping it secure.

Google Cloud Dataprep is a new serverless browser-based service that can dramatically cut the time it takes to prepare data for analysis, which represents about 80% of the work that data scientists do. It intelligently connects to your data source, identifies data types, identifies anomalies and suggests data transformations. Data scientists can then visualize their data schemas until they're happy with the proposed data transformation. Dataprep then creates a data pipeline in Google Cloud Dataflow, cleans the data and exports it to BigQuery or other destinations. In other words, you can now prepare structured and unstructured data for analysis with clicks, not code. For more information on Dataprep, apply to be part of the private beta. Also, you’ll find more news about our latest database and data and analytics capabilities here and here.

Hello, (more) world

Not only are we working hard on bringing you new products and capabilities, but we want your users to access them quickly and securely, wherever they may be. That’s why we’re announcing three new Google Cloud Platform regions: California, Montreal and the Netherlands. These will bring the total number of Google Cloud regions up from six today to more than 17 locations in the future. These new regions will deliver lower latency for customers in adjacent geographic areas, increased scalability and more disaster recovery options. Like other Google Cloud regions, the new regions will feature a minimum of three zones, benefit from Google’s global, private fibre network and offer a complement of GCP services.

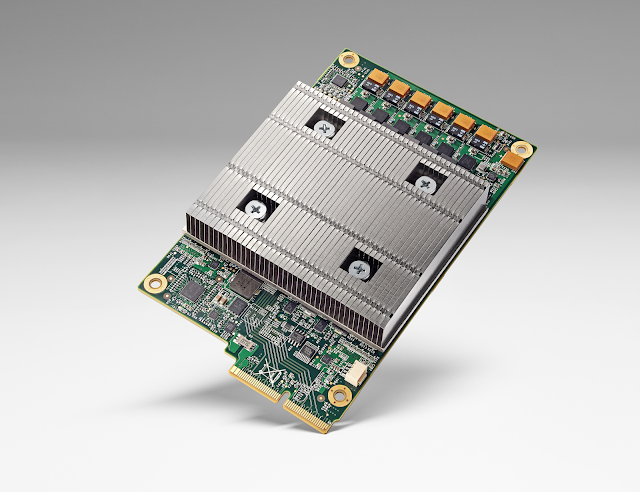

Supercharging our infrastructure . . .

Customers run demanding workloads on GCP, and we're constantly striving to improve the performance of our VMs. For instance, we were honored to be the first public cloud provider to run Intel Skylake, a custom Xeon chip that delivers significant enhancements for compute-heavy workloads and a larger range of VM memory and CPU options.

We’re also doubling the number of vCPUs you can run in an instance from 32 to 64 and now offering up to 416 GB of memory, which customers have asked us for as they move large enterprise applications to Google Cloud. Meanwhile, we recently began offering GPUs, which provide substantial performance improvements to parallel workloads like training machine learning models.

To continually unlock new energy sources, Schlumberger collects large quantities of data to build detailed subsurface earth models based on acoustic measurements, and GCP compute infrastructure has the unique characteristics that match Schlumberger's needs to turn this data into insights. High performance scientific computing is integral to its business, so GCP's flexibility is critical.

Schlumberger can mix and match GPUs and CPUs and dynamically create different shapes and types of virtual machines, choosing memory and storage options on demand.

"We are now leveraging the strengths offered by cloud computation stacks to bring our data processing to the next level." — Ashok Belani, Executive Vice President Technology, Schlumberger

. . . without supercharging our prices

We aim to keep costs low. Today we announced Committed Use Discounts that provide up to 57% off the list price on Google Compute Engine, in exchange for a one- or three-year purchase commitment. Committed Use Discounts are based on the total amount of CPU and RAM you purchase, and give you the flexibility to use different instance and machine types; they apply automatically, even if you change instance type or size. There are no upfront costs with Committed Use Discounts, and they are billed monthly. What’s more, we automatically apply Sustained Use Discounts to any additional usage above a commitment.
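As a rough illustration of the arithmetic, the Python sketch below applies a committed-use discount to a monthly list price. Only the 57% ceiling comes from the announcement; the function name `committed_monthly_cost` and the $1,000 list price are hypothetical, and the actual discount depends on the commitment term and resources purchased.

```python
from decimal import Decimal

# "Up to 57%" is the ceiling quoted in the announcement; real discounts vary.
MAX_COMMITTED_USE_DISCOUNT = Decimal("0.57")


def committed_monthly_cost(
    list_price_per_month: Decimal,
    discount: Decimal = MAX_COMMITTED_USE_DISCOUNT,
) -> Decimal:
    """Return the monthly bill after a committed-use discount.

    Hypothetical helper: the list price is an input you supply,
    not a value looked up from any GCP price list.
    """
    if not Decimal("0") <= discount <= MAX_COMMITTED_USE_DISCOUNT:
        raise ValueError("discount must be between 0 and 0.57")
    discounted = list_price_per_month * (Decimal("1") - discount)
    # Round to whole cents for display.
    return discounted.quantize(Decimal("0.01"))


if __name__ == "__main__":
    # Hypothetical $1,000/month list price at the maximum discount.
    print(committed_monthly_cost(Decimal("1000")))
```

Because there are no upfront costs and billing is monthly, the discounted figure is simply what each monthly bill would show for the committed resources; usage above the commitment is priced separately.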

We're also dropping prices for Compute Engine. The specific cuts vary by region. Customers in the United States will see a 5% price drop; customers in Europe will see a 4.9% drop and customers using our Tokyo region an 8% drop.

Then there’s our improved Free Tier. First, we’ve extended the free trial from 60 days to 12 months, allowing you to use your $300 credit across all GCP services and APIs, at your own pace and on your own schedule. Second, we’re introducing new Always Free products: non-expiring usage limits that you can use to test and develop applications at no cost. New additions include Compute Engine, Cloud Pub/Sub, Google Cloud Storage and Cloud Functions, bringing the number of Always Free products up to 15, and broadening the horizons for developers getting started on GCP. Visit the Google Cloud Platform Free Tier page today for further details, terms, eligibility and to sign up.

We'll be diving into all of these product announcements in much more detail in the coming days, so stay tuned!